Czichos H., Saito T., Smith L.E. (Eds.) Handbook of Metrology and Testing


1118 Part E Modeling and Simulation Methods

and magnetic polarization of a magnet, upon various external conditions such as temperature, pressure and magnetic field, rather than on the detailed trajectories of individual particles. Statistical mechanics is a discipline that reveals simple rules governing systems with huge numbers of components. Unfortunately, in many interesting cases statistical mechanics cannot provide a final compact expression for the desired physical quantities because of the many-body effects of the interactions among components, even though the bare interaction is two-body. Many approximate theories have been developed, which makes the field of condensed matter physics very rich. With the enormous growth of computing power, the Monte Carlo approach based on the principles of statistical mechanics has been developed in recent decades. This chapter introduces the basic notions of the Monte Carlo method [22.1], with several examples of applications.

22.1.1 Boltzmann Weight

According to the principles of statistical mechanics, the probability that a particular microscopic state appears in an equilibrium system attached to a heat reservoir at temperature T is given by the Boltzmann weight

$$p_i = \frac{e^{-\beta E_i}}{\sum_j e^{-\beta E_j}} \equiv \frac{e^{-\beta E_i}}{Z}\,, \qquad (22.1)$$

where $\beta = 1/k_{\mathrm B}T$, $E_i$ is the energy of the system in the particular state i, and Z is the partition function. The expectation value of any physical quantity, such as the magnetic polarization of a magnet, can be evaluated by

$$\langle O\rangle = \frac{1}{Z}\sum_i O_i\, e^{-\beta E_i}\,. \qquad (22.2)$$

The difficulty one meets in applications is that there are far too many possible states (a system of N Ising spins, for example, has $2^N$ of them), so that it is practically impossible to compute the partition function and the expectation values of the desired physical quantities.
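To make (22.1) and (22.2) concrete, the sketch below evaluates them by brute force for a small periodic Ising chain, $E = -J\sum_i \sigma_i\sigma_{i+1}$; the model, J = 1 and units with $k_{\mathrm B} = 1$ are assumptions chosen for illustration. The loop visits all $2^N$ states, which is exactly what becomes impossible for large N. For this toy model the result can be checked against the transfer-matrix formula $Z = (2\cosh\beta J)^N + (2\sinh\beta J)^N$.

```python
import itertools
import math

def ring_energy(spins, J=1.0):
    """Energy E = -J * sum_i s_i s_{i+1} of a periodic Ising chain."""
    n = len(spins)
    return -J * sum(spins[i] * spins[(i + 1) % n] for i in range(n))

def exact_averages(n, beta, J=1.0):
    """Return Z and <E> by enumerating all 2**n states, Eqs. (22.1)-(22.2)."""
    z = 0.0
    e_weighted = 0.0
    for spins in itertools.product((-1, 1), repeat=n):
        e = ring_energy(spins, J)
        w = math.exp(-beta * e)        # Boltzmann factor e^{-beta E_i}
        z += w
        e_weighted += e * w            # sum_i O_i e^{-beta E_i} with O = E
    return z, e_weighted / z

if __name__ == "__main__":
    z, e_mean = exact_averages(n=10, beta=0.5)
    print(f"Z = {z:.6f}, <E> = {e_mean:.6f}")
```

Doubling N squares the number of terms in the loop, which is why exact enumeration is restricted to toy sizes.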

22.1.2 Monte Carlo Technique

Monte Carlo techniques overcome this difficulty by choosing a subset of the possible states and approximating the expectation value of a physical quantity by the average over this limited subset. The way the subset is picked is up to us, and it is crucial to the accuracy of the resulting estimate. A successful Monte Carlo simulation therefore relies heavily on the sampling scheme.

Importance Sampling

One can regard the statistical expectation value as a time average over the states a system passes through in the course of a measurement. This suggests that we can take a sample of states of the system such that the probability of any particular state being chosen is proportional to its Boltzmann weight. This importance sampling method is the simplest and most widely used Monte Carlo approach. Since the Boltzmann weight is already built into the sampling process, the estimate of the desired expectation value is simply the arithmetic mean over the M sampled states,

$$\bar O_M = \frac{1}{M}\sum_{i=1}^{M} O_i\,. \qquad (22.3)$$

This estimate is much more accurate than one obtained by simple (uniform) sampling, especially at low temperatures, where the system spends a large portion of time in a few low-energy states.

The above importance sampling is implemented by a Markov process. A Markov process for Monte Carlo simulation generates a Markov chain of states: starting from state a, it generates a new state b; from b it generates a third state c, and so on. A certain number of states at the head of the chain are discarded, since they depend on the initial state a. After a sufficiently long run, however, the sampled states should obey the Boltzmann distribution. This is guaranteed by the ergodicity property and the condition of detailed balance.

Ergodicity

This property requires that any state of the system can be reached from any other state via the Markov process, provided it runs sufficiently long. The importance of this condition is clear: otherwise the missing states would have zero weight in the simulation, which is not the case in reality. Note that one is still free to set the direct transition probability from one state to many others to zero, as is usually done in many algorithms.

Detailed Balance

This condition is somewhat subtle and requires some consideration. For the desired distribution vector p, whose components are the Boltzmann weights of the states, suppose we find a Markov process, characterized by a set of transition probabilities among the possible states, such that

$$\sum_j p_i\, P(i\to j) = \sum_j p_j\, P(j\to i)\,, \qquad (22.4)$$

Part E 22.1

Monte Carlo Simulation 22.1 Fundamentals of the Monte Carlo Method 1119

where $p_i$ is the probability of state i and $P(i\to j)$ the transition probability from state i to state j. This condition is necessary since the desired distribution, namely the Boltzmann distribution, is the equilibrium one. However, a Markov process satisfying this condition does not necessarily generate the desired distribution, even when the Markov chain is run long enough. In fact, a dynamic limit cycle may be realized, in which the distribution returns to itself only after n steps,

$$\mathbf p(t+n) = \mathbf P^{\,n}\,\mathbf p(t) \qquad (22.5)$$

with n > 1, so that the desired distribution p is never reached. To avoid this situation, we impose the detailed balance condition

$$p_i\, P(i\to j) = p_j\, P(j\to i)\,. \qquad (22.6)$$

It is easy to see that this condition eliminates the possibility of limit cycles. Then, from the Perron–Frobenius theorem, it can be shown that the desired distribution p is the only one that can be generated by the transition matrix P.

In short, a Markov process satisfying the conditions (i) ergodicity, (ii) $\sum_j P(i\to j) = 1$, and (iii)

$$\frac{P(i\to j)}{P(j\to i)} = \frac{p_j}{p_i} = e^{-\beta(E_j - E_i)}\,, \qquad (22.7)$$

will generate the Boltzmann distribution at equilibrium.

Acceptance Ratio

There is still freedom in composing the transition probabilities. One can factor them into a selection probability g and an acceptance ratio A,

$$P(i\to j) = g(i\to j)\, A(i\to j)\,. \qquad (22.8)$$

For simplicity, one usually takes a constant selection probability for all candidate states in a one-step transition, so that $g(i\to j) = g(j\to i)$ and condition (22.7) constrains only the acceptance ratios. These we can take as large as possible, provided they satisfy (22.7), which makes the Markov chain move more efficiently around the sampling space. It is then easy to see that the acceptance ratio is given by

$$A(i\to j) = \begin{cases} e^{-\beta(E_j - E_i)}\,, & E_j - E_i > 0\,,\\ 1\,, & \text{otherwise}\,. \end{cases} \qquad (22.9)$$

This is the Metropolis algorithm [22.2].
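The construction (22.8)–(22.9) can be checked numerically on a toy problem. The sketch below builds the full transition matrix for a handful of states with arbitrary, hypothetical energies, using a uniform selection probability g = 1/(K − 1) over the other K − 1 states and the Metropolis acceptance ratio; rejected moves stay on the diagonal so that each row sums to one. It then lets one verify detailed balance (22.6) and that the Boltzmann vector is left unchanged by one step of the chain.

```python
import math

def metropolis_matrix(energies, beta):
    """Transition matrix P[i][j] = g(i->j) A(i->j) with uniform g, Eq. (22.8)."""
    k = len(energies)
    g = 1.0 / (k - 1)                                   # constant selection probability
    P = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(k):
            if i != j:
                dE = energies[j] - energies[i]
                A = math.exp(-beta * dE) if dE > 0 else 1.0   # Eq. (22.9)
                P[i][j] = g * A
        P[i][i] = 1.0 - sum(P[i])                       # rejected moves keep the state
    return P

def boltzmann(energies, beta):
    """Normalized Boltzmann weights, Eq. (22.1)."""
    w = [math.exp(-beta * e) for e in energies]
    z = sum(w)
    return [x / z for x in w]

energies = [0.0, 0.7, 1.3, 2.0]    # hypothetical toy energies
beta = 1.5
P = metropolis_matrix(energies, beta)
p = boltzmann(energies, beta)
```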

With the selection probability and the acceptance ratio determined, a Monte Carlo simulation proceeds as follows: from a given initial state we pick a candidate for the next state out of the possible ones according to the selection probability; then we decide whether the candidate state is accepted, with the probability given by the acceptance ratio. This routine is repeated for a sufficiently long time before we insert measurement steps, in which we calculate physical quantities for the realized state.
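As an illustration of this routine, here is a minimal single-spin-flip Metropolis sketch for the two-dimensional Ising model $E = -J\sum_{\langle i,j\rangle}\sigma_i\sigma_j$ on an L × L periodic lattice. The model, J = 1, the lattice size, the sweep counts and the function names are assumptions for illustration. A site is selected uniformly, the energy change of flipping it is computed from its four neighbors, and the flip is accepted with the probability (22.9); the first sweeps are discarded before measuring.

```python
import math
import random

def metropolis_sweep(spins, L, beta, rng):
    """One sweep = L*L attempted single-spin flips with Metropolis acceptance."""
    for _ in range(L * L):
        i, j = rng.randrange(L), rng.randrange(L)     # uniform selection probability
        s = spins[i][j]
        nn = (spins[(i + 1) % L][j] + spins[(i - 1) % L][j]
              + spins[i][(j + 1) % L] + spins[i][(j - 1) % L])
        dE = 2.0 * s * nn                             # E_new - E_old for J = 1
        if dE <= 0 or rng.random() < math.exp(-beta * dE):
            spins[i][j] = -s                          # accept the candidate state

def simulate(L=8, beta=1.0, n_therm=100, n_meas=200, seed=1):
    """Return the importance-sampling estimate (22.3) of <|m|>."""
    rng = random.Random(seed)
    spins = [[1] * L for _ in range(L)]               # start from the all-up state
    for _ in range(n_therm):                          # drop the head of the chain
        metropolis_sweep(spins, L, beta, rng)
    m_sum = 0.0
    for _ in range(n_meas):
        metropolis_sweep(spins, L, beta, rng)
        m_sum += abs(sum(map(sum, spins))) / (L * L)  # measurement step
    return m_sum / n_meas
```

For example, `simulate(L=16, beta=0.3)` samples the disordered phase, while `beta=1.0` stays deep in the ordered phase.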

It is then clear that the Markov chain of states thus generated is stochastic, which is the most important feature of Monte Carlo techniques in comparison with deterministic methods such as molecular dynamics. The rules that specify a particular simulation algorithm govern the distribution of the realized states once a large number of states have been accumulated.

22.1.3 Random Numbers

The stochastic nature of Monte Carlo simulations arises because one chooses a new state from the old one in a random fashion. This is realized with random numbers. Therefore, generating a good sequence of random numbers is crucial for accurate Monte Carlo simulations. Of course, as long as such a sequence is generated by a deterministic procedure written in computer code, it cannot be truly random. It is usually called quasi random provided it satisfies the following two conditions characteristic of true random numbers.

Condition I: The period of the quasi random numbers should be longer than the total length of the simulation.

Condition II: The quasi random numbers should be k-dimensionally equidistributed with sufficiently large k. In other words, all possible sets of k sequential numbers appear with the same frequency within a period, except the set of k zeros.

Classical Random Number Generators

The linear congruential sequence is given by

$$x_{i+1} = a\,x_i + b \mod 2^w\,, \quad i = 0, 1, \ldots\,, \qquad (22.10)$$

where w is the number of bits of the random numbers $x_i$, and a and b are suitably chosen numbers that maximize the period of the quasi random numbers to $2^w$. The merit of this sequence is its simplicity. However, it has several shortcomings. First, the period is not sufficiently long even with w = 31. Second, each random number is determined only by the previous one, and there is strong correlation among sequential numbers. Third, the multiplication is time consuming.
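A minimal sketch of (22.10) with a deliberately tiny word size, w = 8. The choices a = 5 and b = 1 are illustrative; they satisfy the standard full-period (Hull–Dobell) conditions for modulus $2^w$, namely b odd and a ≡ 1 (mod 4). The generator reaches the maximal period $2^w$, and it also exposes the correlation problem: with a and b both odd, the lowest bit simply alternates.

```python
def lcg(seed, a=5, b=1, w=8):
    """Yield x_{i+1} = (a * x_i + b) mod 2**w, Eq. (22.10)."""
    mask = (1 << w) - 1
    x = seed & mask
    while True:
        x = (a * x + b) & mask        # reduction mod 2**w via a bit mask
        yield x

def period(seed, **kw):
    """Number of steps until the first output value recurs."""
    gen = lcg(seed, **kw)
    first = next(gen)
    for n, x in enumerate(gen, start=1):
        if x == first:
            return n

print(period(0))   # 256 = 2**8
```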


These shortcomings are overcome by the generalized feedback shift register (GFSR)

$$x_{i+n} = x_{i+m} \oplus x_i\,, \quad i = 0, 1, \ldots\,, \qquad (22.11)$$

where ⊕ denotes the bitwise exclusive-or operation. The two integers n and m are chosen so that $t^n + t^m + 1$ is a primitive polynomial of degree n in t. When the initial seeds $x_0, x_1, \ldots, x_{n-1}$ are prepared appropriately, the period of this sequence is $2^n - 1$. The period is no longer than this because each bit evolves independently of the others. Since each step involves only one logical operation, this algorithm is very fast. However, the statistical properties of this family of random numbers are still not very satisfactory, as shown by large-scale Monte Carlo simulations of the two-dimensional Ising model [22.3], for which an exact solution is available.
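The recurrence (22.11) needs only a buffer of the last n words and one XOR per output. The sketch below uses the small primitive trinomial $t^7 + t + 1$ (n = 7, m = 1), far too short for real use and chosen only so that the period $2^7 - 1 = 127$ can be checked directly, with 32-bit words and arbitrary nonzero seeds; these parameters and the class name are assumptions for illustration.

```python
from collections import deque

class GFSR:
    """x_{i+n} = x_{i+m} XOR x_i on machine words, Eq. (22.11)."""

    def __init__(self, seeds, m):
        self.n = len(seeds)
        self.m = m
        self.buf = deque(seeds, maxlen=self.n)   # holds x_i, ..., x_{i+n-1}

    def next(self):
        x = self.buf[self.m] ^ self.buf[0]       # x_{i+m} XOR x_i
        self.buf.append(x)                       # maxlen drops x_i automatically
        return x

seeds = [0x12345678, 0x9ABCDEF0, 0x0F0F0F0F, 0x31415926,
         0x27182818, 0xDEADBEEF, 0x00C0FFEE]      # hypothetical seeds x_0, ..., x_6
gen = GFSR(seeds, m=1)
```

Each bit column of the buffer runs its own shift register, so no matter how wide the words are, the period stays at $2^7 - 1$; this is the limitation the twisted variants below remove.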

Advanced Generators

To increase the period of the quasi random sequence, the twisted GFSR (TGFSR) has been introduced [22.4, 5]

$$x_{i+n} = x_{i+m} \oplus x_i A\,, \quad i = 0, 1, \ldots\,, \qquad (22.12)$$

where A is a w × w sparse matrix (blank entries are zero)

$$A = \begin{pmatrix} & 1 & & & \\ & & 1 & & \\ & & & \ddots & \\ & & & & 1 \\ a_{w-1} & a_{w-2} & \cdots & a_1 & a_0 \end{pmatrix}\,. \qquad (22.13)$$

Here $a_{w-1}, \ldots, a_0$ take the values 1 or 0, chosen so that $\varphi_A(t^n + t^m)$ is primitive, where $\varphi_A(t)$ is the characteristic polynomial of A. This operation mixes the information of all bits, and the period increases up to $2^{nw} - 1$. To achieve the equidistribution property, tempering operations consisting of certain bit-shift and bit-mask logical operations are introduced at each step. Based on these two operations, the so-called TT800 algorithm with w = 32, n = 25 and m = 7 exhibits the period $2^{800} - 1$ and 25-dimensional equidistribution.

A further improved generator, known as the Mersenne Twister, has been proposed [22.6]:

$$x_{i+n} = x_{i+m} \oplus \left(x_i^{u} \,|\, x_{i+1}^{l}\right) A\,, \quad i = 0, 1, \ldots\,, \qquad (22.14)$$

where the matrix A is determined similarly to that of the TGFSR. The key point is to use an incomplete array, with $(x_i^{u} \,|\, x_{i+1}^{l})$ denoting the operation that combines the upper (w − r) bits of $x_i$ and the lower r bits of $x_{i+1}$. This operation drops the information of the lower r bits of $x_0$, and consequently the period is reduced from $2^{nw} - 1$ to $2^p - 1$, with p = nw − r. On the other hand, if p is chosen such that the period $2^p - 1$ is a prime number, there exists an algorithm that checks whether the characteristic polynomial $\varphi_A(t)$ is primitive with $O(p^2)$ computations. The period can then be extended thanks to the r degrees of freedom. Taking w = 32, n = 624, m = 397 and r = 31 gives p = 19 937, for which $2^{19\,937} - 1$ is a Mersenne prime; namely, the MT19937 algorithm has the period $2^{19\,937} - 1$ and 623-dimensional equidistribution. Owing to such an astronomical period and good statistical properties established on a rigorous mathematical basis, this algorithm is now becoming the standard quasi random number generator in both academic and commercial use [22.7].
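For concreteness, here is a compact pure-Python sketch of MT19937 written in the notation of (22.14): w = 32, n = 624, m = 397, r = 31. The last row of A (0x9908B0DF), the tempering shifts and masks, and the seeding recurrence with multiplier 1812433253 are taken from the published reference implementation (mt19937ar.c); the class and method names are illustrative. It is a teaching sketch, not an optimized generator, and note that Python's own `random` module already uses MT19937 internally, with a different seeding routine.

```python
class MT19937:
    """Mersenne Twister, Eq. (22.14) with w=32, n=624, m=397, r=31."""
    N, M = 624, 397
    MATRIX_A = 0x9908B0DF      # last row (a_{w-1}, ..., a_0) of A
    UPPER = 0x80000000         # upper w - r = 1 bit
    LOWER = 0x7FFFFFFF         # lower r = 31 bits

    def __init__(self, seed=5489):
        self.mt = [0] * self.N
        self.mt[0] = seed & 0xFFFFFFFF
        for i in range(1, self.N):               # reference init_genrand seeding
            prev = self.mt[i - 1]
            self.mt[i] = (1812433253 * (prev ^ (prev >> 30)) + i) & 0xFFFFFFFF
        self.index = self.N                      # force a twist on first use

    def _twist(self):
        mt = self.mt
        for i in range(self.N):
            # (x_i^u | x_{i+1}^l): upper bit of x_i, lower 31 bits of x_{i+1}
            y = (mt[i] & self.UPPER) | (mt[(i + 1) % self.N] & self.LOWER)
            y_a = y >> 1                         # multiplication by A: shift ...
            if y & 1:
                y_a ^= self.MATRIX_A             # ... plus the last row if bit 0 is set
            mt[i] = mt[(i + self.M) % self.N] ^ y_a   # x_{i+n} = x_{i+m} XOR (...)A
        self.index = 0

    def next_u32(self):
        if self.index >= self.N:
            self._twist()
        y = self.mt[self.index]
        self.index += 1
        # tempering: bit shifts and masks that improve equidistribution
        y ^= y >> 11
        y ^= (y << 7) & 0x9D2C5680
        y ^= (y << 15) & 0xEFC60000
        y ^= y >> 18
        return y & 0xFFFFFFFF
```

Dividing `next_u32()` by `2**32` gives a float in [0, 1) suitable for the acceptance test of (22.9).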

22.1.4 Finite-Size Effects

In the thermodynamic limit, many physical quantities diverge at a phase transition. Although all of them take finite values in a finite system, the diverging behavior can be evaluated from the scaling behavior of a series of finite-size systems.

First-Order Phase Transition

In a first-order phase transition, the divergence of physical quantities at the transition temperature is δ-function-like in the thermodynamic limit. The size dependence of the divergent quantity and of the transition temperature in finite systems should be

$$Q(L, T_c(L)) = Q_0 + C_1 L^{d}\,, \qquad (22.15)$$

$$T_c(L) = T_c(\infty) + C_1 L^{-d} + \cdots\,, \qquad (22.16)$$

Note that the constant term $Q_0$ is continuous from outside the transition regime, and is thus as important physically as the divergent term.
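Equation (22.16) suggests a simple way to extrapolate the transition temperature: fit $T_c(L)$ linearly against $L^{-d}$ and read off the intercept. The sketch below does an ordinary least-squares fit (the function name is illustrative). The input values are synthetic, generated from an assumed form purely to exercise the code; they are not simulation results.

```python
def extrapolate_tc(sizes, tc_values, d):
    """Least-squares fit T_c(L) = T_c(inf) + C_1 * L**(-d); returns (T_c(inf), C_1)."""
    xs = [L ** (-d) for L in sizes]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(tc_values) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, tc_values))
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope

# synthetic data from T_c(L) = 1.25 + 3.0 * L**-2 (d = 2), for illustration only
sizes = [8, 16, 32, 64]
tcs = [1.25 + 3.0 * L ** -2 for L in sizes]
tc_inf, c1 = extrapolate_tc(sizes, tcs, d=2)
```

With real data the higher-order terms hidden in "···" bias the fit for small L, so one would typically drop the smallest sizes and check that the intercept is stable.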

Second-Order Phase Transition

In a second-order phase transition, physical quantities

diverge in a wide range around the critical temperature

in the thermodynamic limit as

Q(T) ∼

|

T −T

c

|

−ϕ

, (22.17)

where ϕ is the critical exponent of quantity Q. A typical

example is the susceptibility of a ferromagnet. Correla-

tions between order parameters develop in the following

Part E 22.1

Monte Carlo Simulation 22.2 Improved Algorithms 1121

log m

log t

(a) (b)

(c)

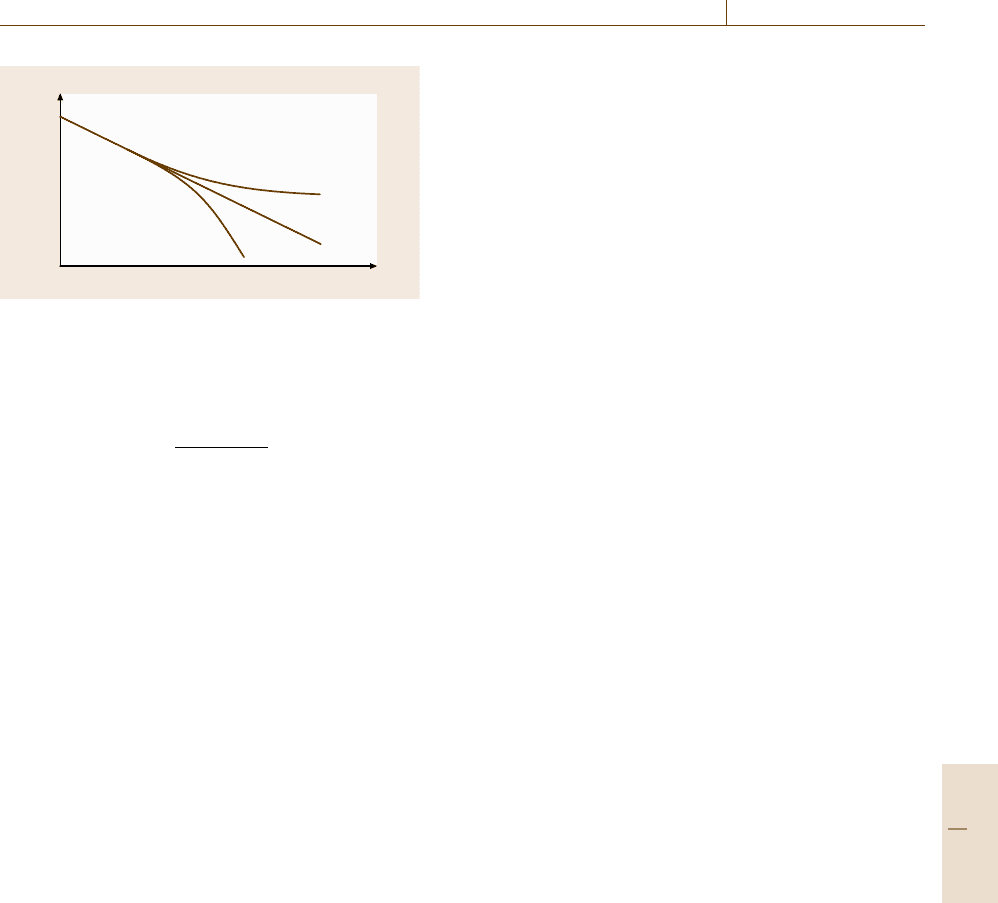

Fig. 22.1 Schematic figure of nonequilibrium relaxation of

order parameter from a fully polarized configuration for (a)

T > T

c

,(b) T = T

c

and (c) T < T

c

way

m

i

m

j

−m

i

2

∼

e

−|i−j|/ξ

|i − j|

d−2+η

, (22.18)

ξ ∼|T −T

c

|

−ν

, (22.19)

where m

i

stands for the order parameter at position i,

and ν and η are the critical exponents for the correlation

length and critical correlation function. While the criti-

cal temperature T

c

depends on details of systems such as

lattice structures, the critical exponents are not changed

by such details, a property known as the universality of

critical phenomena.

In a finite system, the phase transition is expected to take place at the temperature where a correlated cluster becomes as large as the system size, namely ξ ∼ L. Finite-size scaling theory states that physical quantities around the critical temperature can be expressed as a function of L/ξ in the following fashion

$$Q(L, T) \sim L^{\varphi/\nu}\, f_{\mathrm{sc}}\!\left(L^{1/\nu}(T - T_c)\right)\,, \qquad (22.20)$$

where $f_{\mathrm{sc}}(x)$ is a scaling function.

Several statements can be derived from the finite-size scaling form (22.20). (1) At $T = T_c$, it reduces to $Q(L, T_c) \sim L^{\varphi/\nu}$, a power-law size dependence at the critical temperature. (2) When $L^{-\varphi/\nu} Q(L, T)$ is plotted versus $L^{1/\nu}(T - T_c)$, the curves for different L should collapse onto each other in the vicinity of $T_c$, provided $T_c$, ν and φ are known. Conversely, one can use this so-called finite-size scaling plot to evaluate the critical point and the critical exponents.
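Statement (1) translates into a log–log fit: at $T = T_c$, the slope of log Q versus log L estimates φ/ν. Statement (2) is a change of variables. The sketch below implements both (function names are illustrative). For a self-check it uses synthetic data generated from an assumed Gaussian scaling function with φ/ν = 7/4, ν = 1 and $T_c = 2.269$ (values of the 2D Ising susceptibility, chosen only as an example), for which the collapse is exact by construction.

```python
import math

def exponent_ratio(sizes, q_at_tc):
    """Least-squares slope of log Q(L, T_c) versus log L, i.e. phi/nu, statement (1)."""
    xs = [math.log(L) for L in sizes]
    ys = [math.log(q) for q in q_at_tc]
    n = len(xs)
    xm, ym = sum(xs) / n, sum(ys) / n
    sxy = sum((x - xm) * (y - ym) for x, y in zip(xs, ys))
    sxx = sum((x - xm) ** 2 for x in xs)
    return sxy / sxx

def scaling_coordinates(L, temps, qs, tc, nu, phi):
    """Map (T, Q) to (L^{1/nu}(T - T_c), L^{-phi/nu} Q), statement (2)."""
    return [(L ** (1 / nu) * (t - tc), L ** (-phi / nu) * q)
            for t, q in zip(temps, qs)]

def f_sc(x):
    """Made-up scaling function used only for the synthetic check."""
    return math.exp(-x * x)

tc, nu, phi = 2.269, 1.0, 1.75
sizes = [8, 16, 32]
q_tc = [L ** (phi / nu) * f_sc(0.0) for L in sizes]
print(exponent_ratio(sizes, q_tc))   # ≈ 1.75
```

In practice $T_c$, ν and φ are unknown, and one varies them until the scaled points from different L fall on a single curve.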

22.1.5 Nonequilibrium Relaxation Method

As discussed above, measurements of physical quantities should be made after equilibration of the system. However, the relaxation process also contains valuable information on the equilibrium state, such as the transition point and critical exponents. As an example, consider the magnetic polarization of a ferromagnet, which takes a nonvanishing value only below the transition temperature, $m(T) \sim (T_c - T)^{\beta}$ (here β denotes the critical exponent of the order parameter, not the inverse temperature). If one starts from a fully polarized configuration, the order parameter m decays exponentially to zero as the MC update proceeds for $T > T_c$, decays algebraically at $T = T_c$, and converges to a finite value for $T < T_c$, as shown in Fig. 22.1. The dynamical scaling form [22.8, 9] in the thermodynamic limit is given by

$$m(T; t) \sim t^{-\beta/(z\nu)}\, F_{\mathrm{sc}}\!\left(t^{1/(z\nu)}(T - T_c)\right)\,, \qquad (22.21)$$

where z is the dynamical critical exponent. At $T = T_c$, (22.21) reduces to

$$m(T_c; t) \sim t^{-\beta/(z\nu)}\,. \qquad (22.22)$$

Therefore, by locating the temperature at which log m versus log t is a straight line, it is possible to evaluate the critical temperature $T_c$ and a combination of critical exponents β/(zν) [22.10–13]. Another combination of critical exponents, 1/(zν), is evaluated from the "finite-time" scaling of (22.21). Extensions to quantum systems [22.14–16] and to Kosterlitz–Thouless [22.17] and first-order [22.18] phase transitions are possible.
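A minimal nonequilibrium-relaxation sketch, assuming the 2D ferromagnetic Ising model with J = 1 on an L × L periodic lattice and random-site Metropolis updates (lattice size, number of sweeps, seed and function names are illustrative). It starts from the fully polarized state at the exactly known $T_c = 2/\ln(1+\sqrt 2)$ and records m(t) after each sweep; the local slopes of log m versus log t, taken between successive doublings of t, should then approach −β/(zν) according to (22.22). In a real study L must be large enough that finite-size effects do not set in within the recorded time window.

```python
import math
import random

T_C = 2.0 / math.log(1.0 + math.sqrt(2.0))     # exact 2D Ising critical temperature

def relax_magnetization(L=32, T=T_C, sweeps=50, seed=7):
    """Return m(t) for t = 0, ..., sweeps starting from the all-up configuration."""
    rng = random.Random(seed)
    beta = 1.0 / T
    spins = [[1] * L for _ in range(L)]        # fully polarized start
    total = L * L                              # current total magnetization
    m_t = [1.0]
    for _ in range(sweeps):
        for _ in range(L * L):
            i, j = rng.randrange(L), rng.randrange(L)
            s = spins[i][j]
            nn = (spins[(i + 1) % L][j] + spins[(i - 1) % L][j]
                  + spins[i][(j + 1) % L] + spins[i][(j - 1) % L])
            dE = 2.0 * s * nn
            if dE <= 0 or rng.random() < math.exp(-beta * dE):
                spins[i][j] = -s
                total -= 2 * s
        m_t.append(total / (L * L))
    return m_t

def local_slopes(m_t):
    """d log m / d log t estimated between t and 2t for t = 1, 2, 4, ..."""
    out = []
    t = 1
    while 2 * t < len(m_t):
        out.append(math.log(m_t[2 * t] / m_t[t]) / math.log(2.0))
        t *= 2
    return out
```

Repeating the run slightly above and below $T_c$ and watching the local slopes bend away from a constant is how the transition point is bracketed in this method.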

22.2 Improved Algorithms

The conventional Monte Carlo algorithm explained above suffers from slow relaxation in cases such as the nucleation process in first-order phase transitions, critical slowing down in the vicinity of second-order phase transitions, and the complex free-energy landscape of random and/or frustrated systems. Several improved algorithms have been proposed and shown to be very efficient. Readers can refer to [22.19] for a well-written review.

22.2.1 Reweighting Algorithms

Suppose the number of states between E and E + dE is given by D(E) dE, with the density of states (DOS) D(E). The probability that the system takes energy E is then $P(E; T) \propto D(E)\, e^{-\beta E}$, known as the canonical distribution. If the canonical distribution at an inverse temperature $\beta_0$ is given, that at β is obtained by the reweighting formula [22.20]

$$P(E; T) = \frac{e^{-(\beta - \beta_0)E}\, P(E; T_0)}{\int e^{-(\beta - \beta_0)\varepsilon}\, P(\varepsilon; T_0)\, d\varepsilon}\,. \qquad (22.23)$$

Since the histogram $P(E; T_0)$ has a sharp peak around the average energy of the system at $T_0$, $P(E; T)$ can be evaluated from $P(E; T_0)$ with sufficiently small statistical errors only if T is close enough to $T_0$. This limitation can be overcome by taking data at several temperatures around the target one [22.21, 22].
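In discrete form, (22.23) says: multiply a normalized energy histogram measured at $\beta_0$ by $e^{-(\beta-\beta_0)E}$ and renormalize. Subtracting the largest exponent before exponentiating avoids overflow, a standard numerical precaution rather than part of the formula. The self-check uses the exact histogram of a small periodic Ising chain (an assumption chosen so that the answer is known), for which reweighting is exact; with sampled histograms the accuracy degrades as β moves away from $\beta_0$, as explained above. Function names are illustrative.

```python
import itertools
import math
from collections import Counter

def reweight(hist, beta0, beta):
    """hist: {E: P(E; T0)} -> {E: P(E; T)} via Eq. (22.23)."""
    logs = {E: -(beta - beta0) * E + math.log(p) for E, p in hist.items() if p > 0}
    shift = max(logs.values())                  # guard against overflow
    w = {E: math.exp(v - shift) for E, v in logs.items()}
    norm = sum(w.values())                      # discrete version of the integral
    return {E: x / norm for E, x in w.items()}

def ring_dos(n):
    """Exact density of states D(E) of a periodic Ising chain with J = 1."""
    dos = Counter()
    for s in itertools.product((-1, 1), repeat=n):
        dos[-sum(s[i] * s[(i + 1) % n] for i in range(n))] += 1
    return dos

def canonical(dos, beta):
    """P(E; T) proportional to D(E) e^{-beta E}."""
    w = {E: g * math.exp(-beta * E) for E, g in dos.items()}
    z = sum(w.values())
    return {E: x / z for E, x in w.items()}

dos = ring_dos(10)
p_target = reweight(canonical(dos, 0.4), beta0=0.4, beta=0.7)
```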

The essential point of the histogram method is to notice that the probability distribution used in simulations can be different from the one used for evaluating thermal averages of physical quantities, namely the Boltzmann distribution. In the multicanonical method [22.23, 24], the simulated probability distribution is taken as $P(E; T) \approx 1/E_0(T)$, where $E_0(T)$ stands for the width of the energy range covered in simulations at temperature T. The reason the multicanonical simulation works efficiently can be seen from the example of a first-order phase transition. Since the canonical distribution has a sharp peak around the equilibrium energy, nucleation processes between two free-energy minima separated by a finite distance result in slow relaxation. This difficulty is overcome by the broad energy range covered by the multicanonical weight.

Since the DOS D(E) is not known a priori, it has to be estimated in preliminary runs, and the approximate DOS $D_0(E; T)$ is used in simulations. The probability distribution

$$P_{\mathrm{MC}}(E; T) = D_0^{-1}(E; T)\, D(E)/E_0(T) \qquad (22.24)$$

does not have to be completely flat, and the reweighting formula is given by

$$P(E; T) = \frac{e^{-\beta E}\, D_0(E; T)\, P_{\mathrm{MC}}(E; T)}{\int e^{-\beta\varepsilon}\, D_0(\varepsilon; T)\, P_{\mathrm{MC}}(\varepsilon; T)\, d\varepsilon}\,. \qquad (22.25)$$
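In discrete form, (22.25) says: take the histogram $P_{\mathrm{MC}}(E)$ accumulated with the multicanonical weight $1/D_0(E)$, multiply it by $D_0(E)\, e^{-\beta E}$ and normalize, so that a single run yields canonical distributions at any β whose relevant energies it covered. The sketch works in logarithms. Its self-check input is hypothetical: six noninteracting spins with E equal to the number of up spins, $D_0$ equal to the exact binomial DOS, and a perfectly flat histogram, so the expected canonical result is known.

```python
import math

def multicanonical_to_canonical(log_d0, p_mc, beta):
    """Eq. (22.25): P(E; T) proportional to e^{-beta E} D_0(E) P_MC(E), in log space.

    log_d0: {E: log D_0(E)}, the DOS estimate used as weight 1/D_0(E)
    p_mc:   {E: P_MC(E)}, the histogram from the multicanonical run
    """
    logs = {E: -beta * E + log_d0[E] + math.log(p)
            for E, p in p_mc.items() if p > 0}
    shift = max(logs.values())
    w = {E: math.exp(v - shift) for E, v in logs.items()}
    norm = sum(w.values())
    return {E: x / norm for E, x in w.items()}

# hypothetical input: 6 free spins, E = number of up spins, exact D_0, flat histogram
log_d0 = {E: math.log(math.comb(6, E)) for E in range(7)}
p_flat = {E: 1.0 / 7 for E in range(7)}
p_beta = multicanonical_to_canonical(log_d0, p_flat, beta=0.5)
```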

From a practical point of view, constructing the flat histogram (or, equivalently, estimating the DOS precisely) is the crucial point of the multicanonical method, and various algorithms have been proposed [22.25–30] after the original one. In all of these algorithms, the DOS is evaluated by accumulating the number of visits of a random walk in energy space. As the system size increases, the values of the DOS spread over an exponentially growing range, and estimating them within limited preliminary runs by simple accumulation becomes difficult.

To overcome this difficulty, a new algorithm [22.31, 32] was proposed based on a "biased" random walk in energy space:

0. Set i = 0, D(E) ≡ 1 and n = 1.
1. Select a site at random and measure the initial energy $E_b$.
2. Choose a Monte Carlo move to the state with energy $E_a$ with the transition probability $p(E_b \to E_a) = \min[D(E_b)/D(E_a), 1]$.
3. Update the DOS at the energy of the chosen state as $D(E) \to D(E)\, f_i$, with a modification factor $f_i > 1$.
4. If n < N, set n → n + 1 and go to step 1.
5. If n = N, set $f_i \to f_{i+1}$ ($< f_i$), i → i + 1, n = 1, rescale the DOS and go to step 1.
6. Continue this process until $f_i \to 1$.

The essential point of this algorithm is the introduction of the modification factor $f_i$. When $f_0$ and N are chosen so that $f_0^N$ is comparable to the total number of states, the large variation of the DOS is reproduced in the early stage of the iteration. Of course, $f_i > 1$ means that the detailed balance condition is not satisfied (in this sense this algorithm does not belong to the Monte Carlo family), and the DOS during the iteration deviates systematically from the equilibrium one. The true DOS is obtained at the final stage of the iteration, where $f_i = 1$ eventually holds.
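The iteration 0–6 above is widely known as the Wang–Landau algorithm. Below is a minimal sketch for a periodic Ising chain of n spins with J = 1, a toy model chosen because its DOS is known exactly: E = −n + 2k, with k an even number of domain walls and D = 2·C(n, k). It works with ln D to avoid overflow. Following the list literally, each stage runs a fixed number of steps N instead of testing histogram flatness; the schedule $f_{i+1} = \sqrt{f_i}$, the starting value $\ln f_0 = 1$, the stage count and N are assumed, illustrative choices.

```python
import math
import random

def wang_landau(n=8, stages=16, steps_per_stage=20000, seed=3):
    """Return {E: ln D(E)} relative to the ground state for a periodic Ising chain."""
    rng = random.Random(seed)
    spins = [1] * n
    energy = -n                                  # all-up ring, J = 1
    ln_d = {}                                    # missing entries mean ln D = ln 1 = 0
    ln_f = 1.0                                   # ln f_0
    for _ in range(stages):
        for _ in range(steps_per_stage):
            i = rng.randrange(n)                 # step 1: random site
            dE = 2 * spins[i] * (spins[i - 1] + spins[(i + 1) % n])
            e_new = energy + dE
            # step 2: accept with probability min[D(E_b)/D(E_a), 1]
            log_ratio = ln_d.get(energy, 0.0) - ln_d.get(e_new, 0.0)
            if log_ratio >= 0 or rng.random() < math.exp(log_ratio):
                spins[i] = -spins[i]
                energy = e_new
            ln_d[energy] = ln_d.get(energy, 0.0) + ln_f   # step 3: D(E) -> D(E) f_i
        ln_f /= 2.0                              # step 5: f_{i+1} = sqrt(f_i)
        ground = ln_d[-n]
        ln_d = {E: v - ground for E, v in ln_d.items()}    # rescale the DOS
    return ln_d
```

The returned values are only defined up to an additive constant; here they are pinned so that the ground-state entry is zero.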

The reweighting algorithms discussed above have a bonus: some physical quantities not available in the conventional Monte Carlo algorithm can be treated. For example, the energy dependence of the free-energy landscape can be evaluated [22.33, 34], which is suitable for the analysis of first-order phase transitions.

22.2.2 Cluster Algorithm and Extensions

In the vicinity of the critical temperature $T_c$ of a second-order phase transition, the correlation time τ of a physical quantity Q, defined by

$$\langle Q(t_0)\, Q(t_0 + t)\rangle \sim e^{-t/\tau}\,, \qquad (22.26)$$

diverges as $\tau \sim |T - T_c|^{-z\nu}$, where z is the dynamical critical exponent. This critical slowing down makes computer simulations around the critical point very time consuming. To overcome this difficulty, the cluster algorithm (Swendsen–Wang (SW) algorithm) was proposed [22.35].

Here we explain this algorithm using the Ising model

$$-\beta H = K \sum_{\langle i,j\rangle} \sigma_i \sigma_j\,, \quad \sigma_i = \pm 1\,. \qquad (22.27)$$

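First, we consider the Hamiltonian $H_{l,m}$ in which the interaction between sites l and m is removed. Then, we define the conditional partition functions $Z^{\mathrm P}_{l,m}$ and $Z^{\mathrm{AP}}_{l,m}$ for $\sigma_l\sigma_m = +1$ and −1 by

$$Z^{\mathrm P}_{l,m} = \mathrm{Tr}_{\sigma}\, e^{-\beta H_{l,m}}\, \delta_{\sigma_l\sigma_m,\, 1}\,, \qquad (22.28)$$

$$Z^{\mathrm{AP}}_{l,m} = \mathrm{Tr}_{\sigma}\, e^{-\beta H_{l,m}}\, \delta_{\sigma_l\sigma_m,\, -1}\,. \qquad (22.29)$$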

The partition function is expressed as

$$Z = \mathrm{Tr}_{\sigma}\, e^{-\beta H} = e^{K} Z^{\mathrm P}_{l,m} + e^{-K} Z^{\mathrm{AP}}_{l,m}\,. \qquad (22.30)$$

Using

$$Z_{l,m} = \mathrm{Tr}_{\sigma}\, e^{-\beta H_{l,m}} = Z^{\mathrm P}_{l,m} + Z^{\mathrm{AP}}_{l,m}\,, \qquad (22.31)$$

we can rewrite (22.30) as

$$Z = e^{K}\left[\left(1 - e^{-2K}\right) Z^{\mathrm P}_{l,m} + e^{-2K} Z_{l,m}\right]\,. \qquad (22.32)$$

This expression can be interpreted as follows: "If the spins at sites l and m are parallel, create a bond with probability $p = 1 - e^{-2K}$." When this procedure is repeated for all nearest-neighbor pairs of spins, each spin is assigned to one of the clusters. The system is now mapped onto a percolation problem with $N_c$ clusters [22.36, 37]

$$Z = \mathrm{Tr}\; p^{\,b}\,(1 - p)^{n}\, 2^{N_c}\,, \qquad (22.33)$$

where b is the number of bonds, n is the number of interactions that do not form bonds, and the trace is taken over all possible configurations of clusters. In each Monte Carlo step, we assign a new Ising variable to each cluster independently. This nonlocal update using percolation clusters significantly reduces the relaxation time.

It was then proposed [22.38] that one can flip, in each step, a single cluster grown from a randomly chosen site. This process improves the efficiency of the SW cluster algorithm [22.39].
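A minimal sketch of this single-cluster update for the ferromagnetic Ising model (22.27) on an L × L periodic square lattice (lattice geometry, coupling values and function name are illustrative assumptions). A cluster is grown from a random seed site by adding each nearest neighbor that is parallel to the seed's original spin with probability $p = 1 - e^{-2K}$, the bond rule derived above, and the whole cluster is flipped. Sites are flipped as soon as they join, so each bond is tested at most once and no site is added twice.

```python
import math
import random

def wolff_step(spins, L, K, rng):
    """Grow and flip one cluster; return its size. K = beta * J > 0."""
    p_add = 1.0 - math.exp(-2.0 * K)             # bond probability for parallel pairs
    i, j = rng.randrange(L), rng.randrange(L)
    s0 = spins[i][j]
    spins[i][j] = -s0                            # flip sites as they join the cluster
    stack = [(i, j)]
    size = 1
    while stack:
        x, y = stack.pop()
        for nx, ny in (((x + 1) % L, y), ((x - 1) % L, y),
                       (x, (y + 1) % L), (x, (y - 1) % L)):
            if spins[nx][ny] == s0 and rng.random() < p_add:
                spins[nx][ny] = -s0
                stack.append((nx, ny))
                size += 1
    return size
```

Close to $T_c$ the average cluster size grows with the correlation length, which is precisely what lets a single step decorrelate large regions at once.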

The algorithm discussed above can be applied to the Heisenberg model [22.38]

$$-\beta H = K \sum_{\langle i,j\rangle} \mathbf S_i \cdot \mathbf S_j\,, \quad \mathbf S_i^2 = 1\,, \qquad (22.34)$$

using an operator R(r) defined with respect to a randomly chosen unit vector r in spin space,

$$R(\mathbf r)\,\mathbf S = \mathbf S - 2(\mathbf S \cdot \mathbf r)\,\mathbf r\,, \qquad (22.35)$$

and a spin cluster is formed by the procedure: "If the spins at sites l and m satisfy $M \equiv (\mathbf S_l \cdot \mathbf r)(\mathbf S_m \cdot \mathbf r) > 0$, create a bond with probability $p = 1 - e^{-2KM}$." This rule is a natural extension of the SW algorithm for the Ising model (22.27), and r is updated in each Monte Carlo step. A similar extension has been made to the six-vertex model [22.40]. Since this model is a classical counterpart of the quantum Heisenberg model, this extension enabled the cluster-update version of quantum Monte Carlo simulations, as will be discussed later.
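The same growth procedure carries over to classical Heisenberg spins through the reflection (22.35). In the sketch below, a periodic chain of unit 3-vectors is assumed for brevity (lattice, K and function names are illustrative). A random unit vector r is drawn in each step, the bond factor $M = (\mathbf S_l\cdot\mathbf r)(\mathbf S_m\cdot\mathbf r)$ is evaluated with the values of $\mathbf S_l$ before reflection, and every site that joins the cluster is reflected. Because the reflection preserves length and is its own inverse, the spins stay on the unit sphere.

```python
import math
import random

def random_unit_vector(rng):
    """Uniform point on the unit sphere (uniform z, uniform azimuth)."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    rho = math.sqrt(1.0 - z * z)
    return (rho * math.cos(phi), rho * math.sin(phi), z)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def reflect(s, r):
    """R(r)S = S - 2 (S.r) r, Eq. (22.35)."""
    c = 2.0 * dot(s, r)
    return (s[0] - c * r[0], s[1] - c * r[1], s[2] - c * r[2])

def wolff_heisenberg_step(spins, K, rng):
    """One cluster update on a periodic chain; returns the cluster size."""
    n = len(spins)
    r = random_unit_vector(rng)
    seed = rng.randrange(n)
    proj = {seed: dot(spins[seed], r)}           # S.r before reflection
    spins[seed] = reflect(spins[seed], r)
    stack = [seed]
    while stack:
        l = stack.pop()
        for m in ((l - 1) % n, (l + 1) % n):
            if m in proj:                        # already in the cluster
                continue
            M = proj[l] * dot(spins[m], r)
            if M > 0 and rng.random() < 1.0 - math.exp(-2.0 * K * M):
                proj[m] = dot(spins[m], r)
                spins[m] = reflect(spins[m], r)
                stack.append(m)
    return len(proj)
```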

Further developments of the cluster algorithm took place in connection with percolation theory. The invaded cluster algorithm [22.41, 42], which automatically tunes physical parameters to the critical point, is a typical example. It turns out that a simpler algorithm that tunes the probability p directly until critical percolation is observed [22.43] works better.

Another important advance is the worm algorithm [22.44], which achieves dynamics as fast as cluster updates while using only local updates. Although this algorithm is based on the closed-path configurations appearing in high-temperature expansions, it shares the fundamental concept of the cluster algorithm, namely Monte Carlo simulation in an alternative representation of the original system. Since this algorithm exhibits its merits more clearly in quantum Monte Carlo simulations, it will be explained later in this chapter.

22.2.3 Hybrid Monte Carlo Method

As long as ergodicity and the detailed balance condition are satisfied, any kind of update process can be adopted in a Monte Carlo algorithm. It is then interesting to hybridize Monte Carlo and molecular dynamics (MD) simulations. Since an MD process corresponds to a forced move of particles on an equal-energy surface, it is expected to be effective in frustrated systems at low temperatures. An implementation in this direction has been formulated for the Heisenberg spin glass model [22.45, 46]

$$H = \sum_{\langle i,j\rangle} J_{ij}\, \mathbf S_i \cdot \mathbf S_j\,, \quad \mathbf S_i^2 = 1\,, \qquad (22.36)$$

with the equation of motion

$$\frac{d\mathbf S_i}{dt} = \mathbf S_i \times \mathbf H_i\,, \quad \mathbf H_i = \frac{\partial H}{\partial \mathbf S_i} = \sum_j J_{ij}\, \mathbf S_j\,. \qquad (22.37)$$

The MD evolution of all spins is inserted into each Monte Carlo step for a certain time interval.
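The deterministic part of the hybrid scheme can be sketched by integrating (22.37) directly. Since $dH/dt = \sum_i \mathbf H_i\cdot(\mathbf S_i\times\mathbf H_i) = 0$, the exact dynamics conserves both the energy and each $|\mathbf S_i|$, so an MD segment moves the system along an equal-energy surface. The sketch uses a classical fourth-order Runge–Kutta step on a small, fully connected system with random Gaussian $J_{ij}$. System size, couplings, time step, integrator and function names are all illustrative assumptions; RK4 conserves these quantities only approximately, and norm-preserving rotation schemes are a common alternative.

```python
import math
import random

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def local_fields(spins, J):
    """H_i = sum_j J_ij S_j, Eq. (22.37)."""
    n = len(spins)
    return [tuple(sum(J[i][j] * spins[j][k] for j in range(n)) for k in range(3))
            for i in range(n)]

def derivative(spins, J):
    """dS_i/dt = S_i x H_i."""
    return [cross(s, h) for s, h in zip(spins, local_fields(spins, J))]

def energy(spins, J):
    """H = sum over pairs i < j of J_ij S_i.S_j, Eq. (22.36)."""
    n = len(spins)
    return sum(J[i][j] * sum(a * b for a, b in zip(spins[i], spins[j]))
               for i in range(n) for j in range(i + 1, n))

def rk4_step(spins, J, dt):
    def shifted(state, deriv, h):
        return [tuple(s + h * d for s, d in zip(sv, dv)) for sv, dv in zip(state, deriv)]
    k1 = derivative(spins, J)
    k2 = derivative(shifted(spins, k1, dt / 2), J)
    k3 = derivative(shifted(spins, k2, dt / 2), J)
    k4 = derivative(shifted(spins, k3, dt), J)
    return [tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                  for s, a, b, c, d in zip(sv, av, bv, cv, dv))
            for sv, av, bv, cv, dv in zip(spins, k1, k2, k3, k4)]

def random_system(n, rng):
    """Symmetric Gaussian couplings (zero diagonal) and random unit spins."""
    J = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            J[i][j] = J[j][i] = rng.gauss(0.0, 1.0)
    spins = []
    for _ in range(n):
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(x * x for x in v))
        spins.append(tuple(x / norm for x in v))
    return J, spins
```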


22.2.4 Simulated Annealing and Extensions

In complex free-energy landscapes of random and/or

frustrated systems as shown in Fig. 22.2, conven-

tional Monte Carlo algorithms result in trapping to

a metastable state. Applications of the multicanoni-

cal and cluster algorithms brought some progress but

the results were not so satisfactory, since for exam-

ple frustrations suppress the formation of large clusters.

One therefore needs special treatments for this class of

problems.

F

q

Fig. 22.2 Schematic figure of a complex free-energy land-

scape

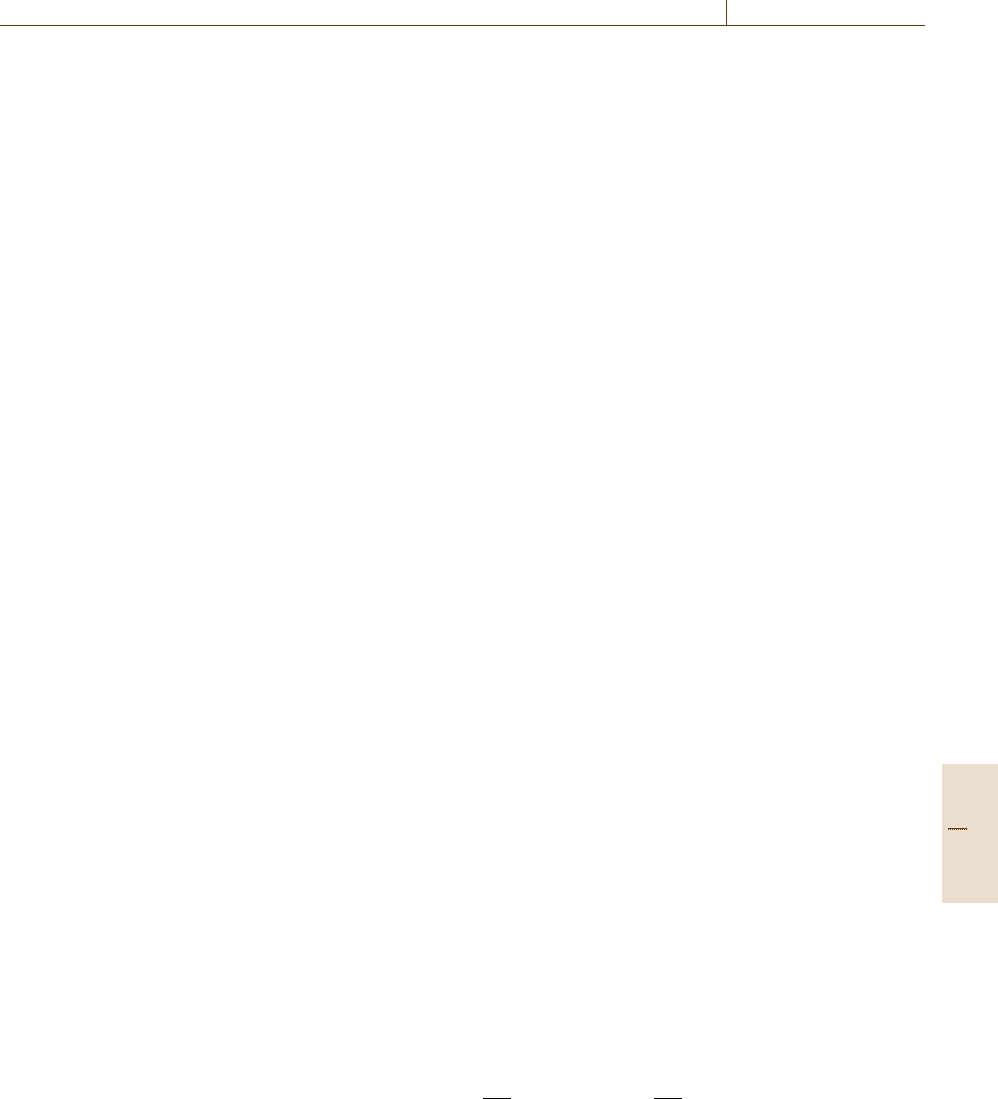

F

q

Fig. 22.3 Deep valleys of the free-energy landscape at

low temperatures (at the bottom) can be bypassed with

high-temperature configurations (upper ones correspond to

higher temperatures)

Simulated annealing [22.47] was originally proposed for optimization problems, namely searching for the state that minimizes a cost function. The cost function can be regarded as the energy of a physical system. One can then introduce an auxiliary temperature and define a free energy at finite temperatures. The benefit is that one can search for the global energy minimum by crossing barriers (passes) that are activated naturally at finite temperatures. The simulation is started at a high temperature, and the system is cooled down gradually.
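A minimal simulated-annealing sketch, assuming a small fully connected ±J Ising spin glass as the cost function (N = 8, so that the exact ground state can be found by enumeration for comparison), single-spin-flip Metropolis moves, and a geometric cooling schedule from T = 5 to T = 0.05. The couplings, schedule, sweep counts and function names are illustrative choices, not prescriptions. The routine also remembers the lowest-energy configuration visited, a common practical addition.

```python
import itertools
import math
import random

def energy(spins, J):
    """Cost function E = -sum_{i<j} J_ij s_i s_j."""
    n = len(spins)
    return -sum(J[i][j] * spins[i] * spins[j] for i in range(n) for j in range(i + 1, n))

def anneal(J, t_start=5.0, t_end=0.05, n_temps=60, sweeps_per_temp=50, seed=0):
    """Return (best energy, best configuration) found while cooling."""
    rng = random.Random(seed)
    n = len(J)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    e = energy(spins, J)
    best_e, best = e, spins[:]
    ratio = (t_end / t_start) ** (1.0 / (n_temps - 1))
    t = t_start
    for _ in range(n_temps):
        for _ in range(sweeps_per_temp * n):
            i = rng.randrange(n)
            dE = 2 * spins[i] * sum(J[i][j] * spins[j] for j in range(n) if j != i)
            if dE <= 0 or rng.random() < math.exp(-dE / t):
                spins[i] = -spins[i]
                e += dE
                if e < best_e:
                    best_e, best = e, spins[:]
        t *= ratio                               # gradual cooling
    return best_e, best
```

For realistic sizes exact enumeration is unavailable, and slower cooling is the main knob for improving the result.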

Simulated Tempering

In simulated tempering [22.48, 49], the inverse temperature β is treated as a dynamical variable, so that both cooling and heating processes are implemented in the algorithm. The reason this algorithm works well is explained in Fig. 22.3. The probability distribution of the original system

$$P(x) = \frac{e^{-\beta H(x)}}{Z(\beta)}\,, \quad Z(\beta) = \sum_x e^{-\beta H(x)}\,, \qquad (22.38)$$

is extended to a larger parameter space of x and $\{\beta_m\}$ (m = 1, 2, ..., M) as

$$P(x, \beta_m) = \frac{e^{-\beta_m H(x)}}{Z(\beta_m)}\, \tilde\pi_m\,, \qquad (22.39)$$

$$\tilde\pi_m \propto \exp\left[g_m + \log Z(\beta_m)\right]\,, \quad \sum_m \tilde\pi_m = 1\,. \qquad (22.40)$$

One choice of the function $g_m$ is $g_m = -\log Z(\beta_m)$, which results in $\tilde\pi_m = 1/M$; that is, all $\beta_m$ are visited equally.

Choosing several discrete inverse temperatures can make the simulation efficient, and the optimal number and distribution of $\{\beta_m\}$ are estimated as follows [22.49, 50]. First, the acceptance ratio of a move between neighboring temperatures is given by (in units with $k_{\mathrm B} = 1$)

$$\exp\left[-\left(\beta_m H(x_m) - \beta_{m+1} H(x_{m+1})\right)\right] \approx \exp\left[-(\Delta\beta)^2 \left|\frac{d\langle H\rangle}{d\beta}\right|\right] = \exp\left[-(\Delta\beta)^2\, \frac{N C}{\beta_m^2}\right]\,,$$

where N and C are the number of particles and the specific heat per particle, respectively. To keep the acceptance ratio independent of the system size, $\Delta\beta \sim N^{-1/2}$, or equivalently $M \sim N^{1/2}$, should be satisfied. If C diverges at the critical temperature, one should take $M \sim N^{[1+\alpha/(d\nu)]/2}$, with the critical exponent α of C and the spatial dimension d.

Next, each $\beta_m$ is determined iteratively in preliminary runs. A new set $\{\beta'_m\}$ is constructed from the old set $\{\beta_m\}$ as

$$\beta'_1 = \beta_1\,, \qquad (22.41)$$

$$\beta'_m = \beta'_{m-1} + (\beta_m - \beta_{m-1})\, \frac{p_m}{c} \quad (m = 2, \ldots, M)\,, \qquad (22.42)$$

$$c = \frac{1}{M-1} \sum_{m=2}^{M} p_m\,, \qquad (22.43)$$

with $\beta_1 < \beta_2 < \cdots < \beta_M$. The acceptance ratio $p_m$ at $\beta_m$ is also evaluated during the preliminary runs. The above procedure converges when all values of $\{p_m\}$ become equal, which is consistent with the initial choice of $g_m$.
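One iteration of (22.41)–(22.43) takes only a few lines. In the sketch below (the function name and list layout are illustrative), the measured acceptance ratios are passed as a list indexed like the temperatures, with the entry for m = 1 unused, and $\beta'_1 = \beta_1$ is kept fixed as in the text. When all $p_m$ are equal the set is returned unchanged, which is the fixed point described above. How the $p_m$ are measured in the preliminary runs is left to the simulation code.

```python
def update_betas(betas, accept):
    """New inverse temperatures from Eqs. (22.41)-(22.43).

    betas:  [beta_1, ..., beta_M] in increasing order
    accept: [p_1, ..., p_M]; p_m is the acceptance ratio measured at beta_m
            (p_1 is not used)
    """
    M = len(betas)
    c = sum(accept[1:]) / (M - 1)                         # Eq. (22.43)
    new = [betas[0]]                                      # Eq. (22.41)
    for m in range(1, M):
        gap = (betas[m] - betas[m - 1]) * accept[m] / c   # Eq. (22.42)
        new.append(new[-1] + gap)
    return new
```

Gaps with above-average acceptance are widened and gaps with below-average acceptance are narrowed, so repeated application drives the $p_m$ toward a common value.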

Monte Carlo simulations are then performed using the above sets $\{\beta_m\}$ and $\{g_m\}$. Since $g_m$ depends only on $\beta_m$, an update that does not change $\beta_m$ is the same as the standard one, and the weight for an update of $\beta_m$ is proportional to $e^{-\beta_m H + g_m}$. Measurements of physical quantities are made at each $\beta_m$ in the same way as in the conventional Monte Carlo algorithm.

Exchange Monte Carlo

Instead of treating one system as in simulated tempering, the exchange Monte Carlo method [22.50] takes M replicated systems at $\beta_m$ (m = 1, 2, ..., M) and exchanges configurations between replicas at neighboring temperatures. The optimal distribution of $\beta_m$ is evaluated in the same way as in simulated tempering, as given in (22.41)–(22.43). The update within a replica is the same as the conventional one, and the exchange of replicas at $\beta_m$ and $\beta_{m+1}$ is decided by comparing the weights $\exp[-\beta_m H(x_m) - \beta_{m+1} H(x_{m+1})]$ and $\exp[-\beta_m H(x_{m+1}) - \beta_{m+1} H(x_m)]$. This algorithm can be regarded as a parallelized version of simulated tempering, and is often called parallel tempering.
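The ratio of the two weights quoted above is $\exp[(\beta_m - \beta_{m+1})(H(x_m) - H(x_{m+1}))]$, and a swap can be accepted Metropolis-style with probability min(1, ratio). The sketch below (function names are illustrative) performs exchange attempts between all neighboring pairs; `configs[m]` and `energies[m]` belong to the replica currently at `betas[m]`. In a full code, ordinary single-replica sweeps would be interleaved with these attempts.

```python
import math
import random

def exchange_probability(beta_m, beta_n, e_m, e_n):
    """Metropolis probability for swapping configurations between beta_m and beta_n."""
    x = (beta_m - beta_n) * (e_m - e_n)
    return 1.0 if x >= 0 else math.exp(x)

def exchange_sweep(betas, configs, energies, rng):
    """Attempt swaps between neighboring temperatures; return the number accepted."""
    accepted = 0
    for m in range(len(betas) - 1):
        p = exchange_probability(betas[m], betas[m + 1], energies[m], energies[m + 1])
        if rng.random() < p:
            configs[m], configs[m + 1] = configs[m + 1], configs[m]
            energies[m], energies[m + 1] = energies[m + 1], energies[m]
            accepted += 1
    return accepted
```

A swap is always accepted when the colder replica holds the higher energy, which is how good low-energy configurations found at high temperature migrate downward.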

This algorithm has several advantages over simulated tempering. First, it is unnecessary to introduce the nontrivial function $g_m$. Since the determination of $g_m$ (or equivalently $Z(\beta_m)$) is complicated and time consuming, this property simplifies the coding and accelerates simulations. Second, the fixed set of $\beta_m$ throughout the simulation is suitable for observing the replica overlap, which is important in the study of spin glasses. Third, this algorithm is well suited to parallel coding. Most calculations can be done independently in each replica (i.e., on each CPU), and only information on exchange rates and temperatures has to be sent to other CPUs.

22.2.5 Replica Monte Carlo

The replica Monte Carlo method [22.51], implemented for spin glass models, can be regarded as a mixture of the exchange Monte Carlo and the cluster algorithm. Consider the Ising spin glass model

$$H = -\sum_{\langle i,j\rangle} \beta J_{ij}\, \sigma_i \sigma_j\,, \qquad (22.44)$$

and take M replicas. The pair Hamiltonian of two neighboring temperatures is defined as (for simplicity, m = 1)

$$H_{\mathrm{pair}}(\sigma^1, \sigma^2) = -\sum_{\langle i,j\rangle} \left(\beta_1 J_{ij}\, \sigma^1_i \sigma^1_j + \beta_2 J_{ij}\, \sigma^2_i \sigma^2_j\right)\,. \qquad (22.45)$$

Using a new Ising variable $\tau^{(m)}_i = \sigma^{(m)}_i \sigma^{(m+1)}_i$, (22.45) is written as

$$H_{\mathrm{pair}}(\sigma^1; \tau^1) = -\sum_{\langle i,j\rangle} \left(\beta_1 + \beta_2\, \tau^1_i \tau^1_j\right) J_{ij}\, \sigma^1_i \sigma^1_j\,. \qquad (22.46)$$

The pair Hamiltonian is divided into clusters within which $\tau^1_i \tau^1_j = +1$ is satisfied. When each cluster is represented by an effective Ising variable $\eta^{(m)}_a$, where a is the cluster index, the pair Hamiltonian is expressed as

$$H_{\mathrm{cl}}(\eta^1) = -\sum_{a,b} k_{a,b}\, \eta^1_a \eta^1_b\,, \qquad (22.47)$$

$$k_{a,b} = (\beta_1 - \beta_2) \sum_{i\in a,\, j\in b} J_{ij}\, \sigma^1_i \sigma^1_j\,. \qquad (22.48)$$

The cluster update is introduced as Monte Carlo flips of the variables $\eta^1_a$, starting from the configuration in which all $\eta^1_a$ are up.

After the cluster flip, the original Ising variables are changed as $\sigma^{1,2}_i \leftarrow \eta^1_a \sigma^{1,2}_i$ for $i \in a$. Conventional Monte Carlo flips are then performed in each replica to ensure ergodicity.
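The cluster construction and the couplings (22.47) and (22.48) can be sketched as below. The sketch assumes a hypothetical ±J spin glass on a periodic square lattice and labels clusters with union-find; neither choice is prescribed by the text. The check confirms that, for an arbitrary set of cluster flips $\eta^1_a$, the change of the pair Hamiltonian (22.45) equals the change of $H_{\text{cl}}$. Bonds inside a cluster contribute only a constant.

```python
import numpy as np


def square_lattice_bonds(L, rng):
    """Bonds (i, j, J_ij) of an L x L periodic lattice with random +/-1 couplings."""
    bonds = []
    for x in range(L):
        for y in range(L):
            i = x * L + y
            for j in (((x + 1) % L) * L + y, x * L + (y + 1) % L):
                bonds.append((i, j, float(rng.choice([-1.0, 1.0]))))
    return bonds


def find_clusters(n_sites, bonds, tau):
    """Label clusters: sites linked by bonds with tau_i * tau_j = +1 (union-find)."""
    parent = list(range(n_sites))

    def root(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i, j, _ in bonds:
        if tau[i] == tau[j]:
            parent[root(i)] = root(j)
    roots = [root(i) for i in range(n_sites)]
    relabel = {r: a for a, r in enumerate(dict.fromkeys(roots))}
    return np.array([relabel[r] for r in roots])


def cluster_couplings(bonds, labels, s1, beta1, beta2):
    """Eq. (22.48): k_ab = (beta1 - beta2) * sum_{i in a, j in b} J_ij s1_i s1_j."""
    k = {}
    for i, j, Jij in bonds:
        a, b = sorted((int(labels[i]), int(labels[j])))
        if a != b:  # inter-cluster bonds always have tau_i * tau_j = -1
            k[(a, b)] = k.get((a, b), 0.0) + (beta1 - beta2) * Jij * s1[i] * s1[j]
    return k


def cluster_energy(k, eta):
    """Eq. (22.47): H_cl = -sum_{a<b} k_ab eta_a eta_b."""
    return -sum(kab * eta[a] * eta[b] for (a, b), kab in k.items())


def pair_energy(bonds, s1, s2, beta1, beta2):
    """Eq. (22.45) evaluated on the lattice bonds."""
    return -sum(Jij * (beta1 * s1[i] * s1[j] + beta2 * s2[i] * s2[j]) for i, j, Jij in bonds)


rng = np.random.default_rng(3)
L, beta1, beta2 = 4, 0.8, 1.0
bonds = square_lattice_bonds(L, rng)
s1 = rng.choice([-1, 1], size=L * L)
s2 = rng.choice([-1, 1], size=L * L)
labels = find_clusters(L * L, bonds, s1 * s2)
k = cluster_couplings(bonds, labels, s1, beta1, beta2)
n_clusters = labels.max() + 1
eta = rng.choice([-1, 1], size=n_clusters)             # trial cluster flips
s1_new, s2_new = eta[labels] * s1, eta[labels] * s2   # sigma <- eta_a sigma for i in a
dH_pair = pair_energy(bonds, s1_new, s2_new, beta1, beta2) - pair_energy(bonds, s1, s2, beta1, beta2)
dH_cl = cluster_energy(k, eta) - cluster_energy(k, np.ones(n_clusters, dtype=int))
print(n_clusters, dH_pair, dH_cl)
```

In an actual replica Monte Carlo step, one would sample $\eta^1_a$ with Metropolis or heat-bath flips under $H_{\text{cl}}$ and then apply $\sigma^{1,2}_i \leftarrow \eta^1_a \sigma^{1,2}_i$. That sampling loop is not shown.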

In the representation (22.46), the exchange Monte Carlo corresponds to a simultaneous flip of all clusters with $\tau^1 = -1$. The present algorithm was proposed as early as the cluster algorithm itself, and much earlier than the exchange Monte Carlo. It has nevertheless not been widely used to date, because its coding is more complex and its applicability more limited than those of the exchange Monte Carlo. However, a recent study [22.52] showed that in two dimensions this algorithm is much faster than the exchange Monte Carlo.
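The statement that an exchange corresponds to flipping every $\tau^1 = -1$ cluster can be illustrated with a few lines of Python. This is a sketch with a hypothetical function name. Where $\tau^1_i = -1$ the two replicas differ, so flipping those sites in both replicas swaps them. Sites with $\tau^1_i = +1$ are already equal in both replicas.

```python
import numpy as np


def exchange_by_cluster_flip(s1, s2):
    """Flip, in both replicas, every cluster with tau^1 = s1 * s2 = -1."""
    tau = s1 * s2
    eta = np.where(tau == -1, -1, 1)  # eta_a = -1 on tau = -1 clusters, +1 otherwise
    return eta * s1, eta * s2


rng = np.random.default_rng(5)
s1 = rng.choice([-1, 1], size=12)
s2 = rng.choice([-1, 1], size=12)
new1, new2 = exchange_by_cluster_flip(s1, s2)
print(np.array_equal(new1, s2), np.array_equal(new2, s1))
```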


22.3 Quantum Monte Carlo Method

The quantum Monte Carlo method is a powerful tool for investigating both finite- and zero-temperature properties of quantum systems. Its results are exact within error bars, and it can be applied to large systems in any dimension. However, it runs into difficulty for systems with strong frustration or strong correlations. In such systems, almost half of the samples have negative weights, and Monte Carlo simulations do not work well. Readers should therefore take care when applying the quantum Monte Carlo method to such systems. This negative-sign problem is explained in Sect. 22.3.8.

There are several types of quantum Monte Carlo algorithms, and the most suitable one should be chosen according to the model. For spin systems without magnetic fields, the cluster algorithm is effective (Sect. 22.3.3). Under magnetic fields, the worm algorithm or the directed-loop algorithm is better (Sect. 22.3.5). The continuous-time algorithm removes the discretization of imaginary time and thereby makes simulations more efficient (Sect. 22.3.4). For electron systems, the auxiliary-field algorithm is appropriate, especially for the Hubbard model (Sect. 22.3.6). To investigate ground-state properties, projection methods are suitable (Sect. 22.3.7).

There are other Monte Carlo methods for investigating quantum systems. Here, we consider only those quantum Monte Carlo algorithms whose results converge, in principle, to the exact values. Approximate methods such as variational Monte Carlo or the fixed-node approximation are therefore beyond our scope.

22.3.1 Suzuki–Trotter Formalism

Quantum Monte Carlo simulations in d-dimensional

systems are generally performed through mapping to

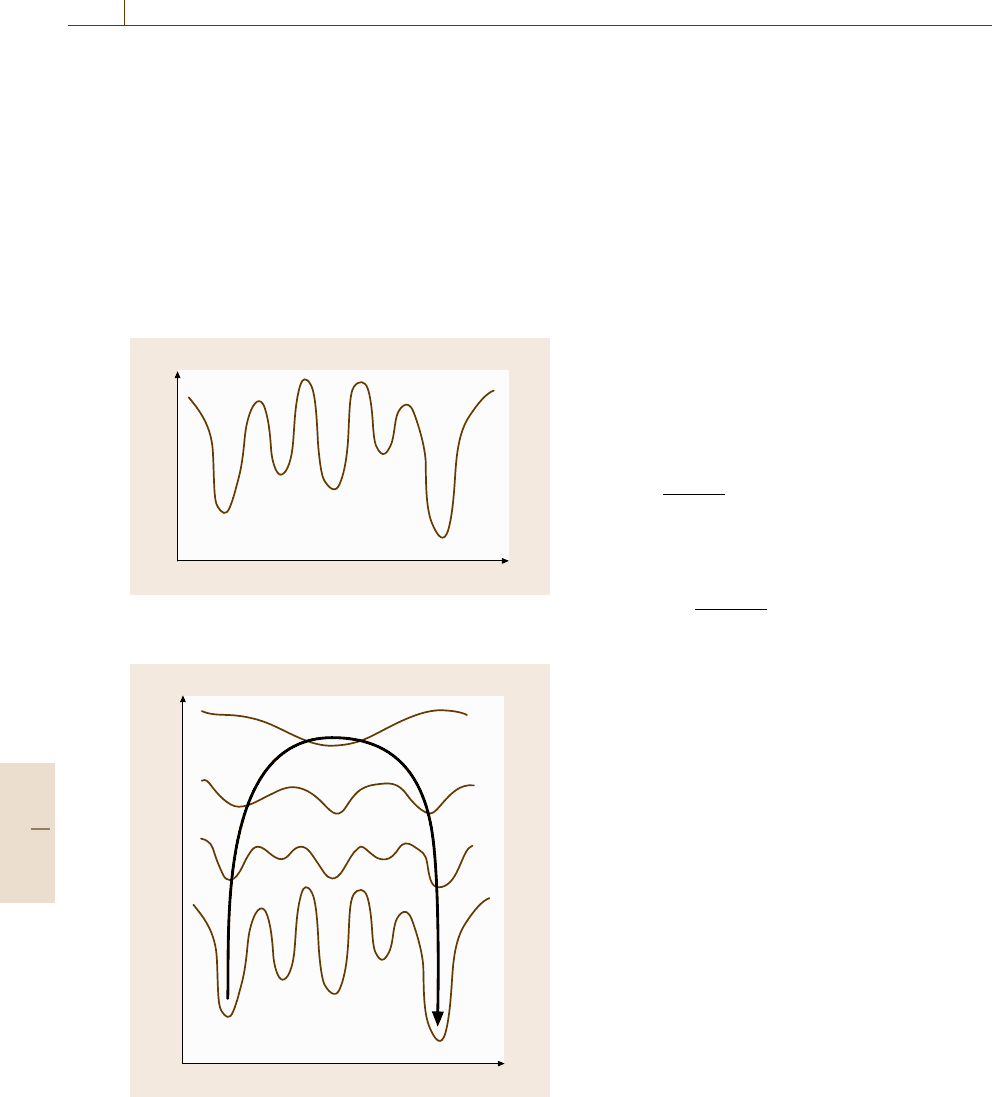

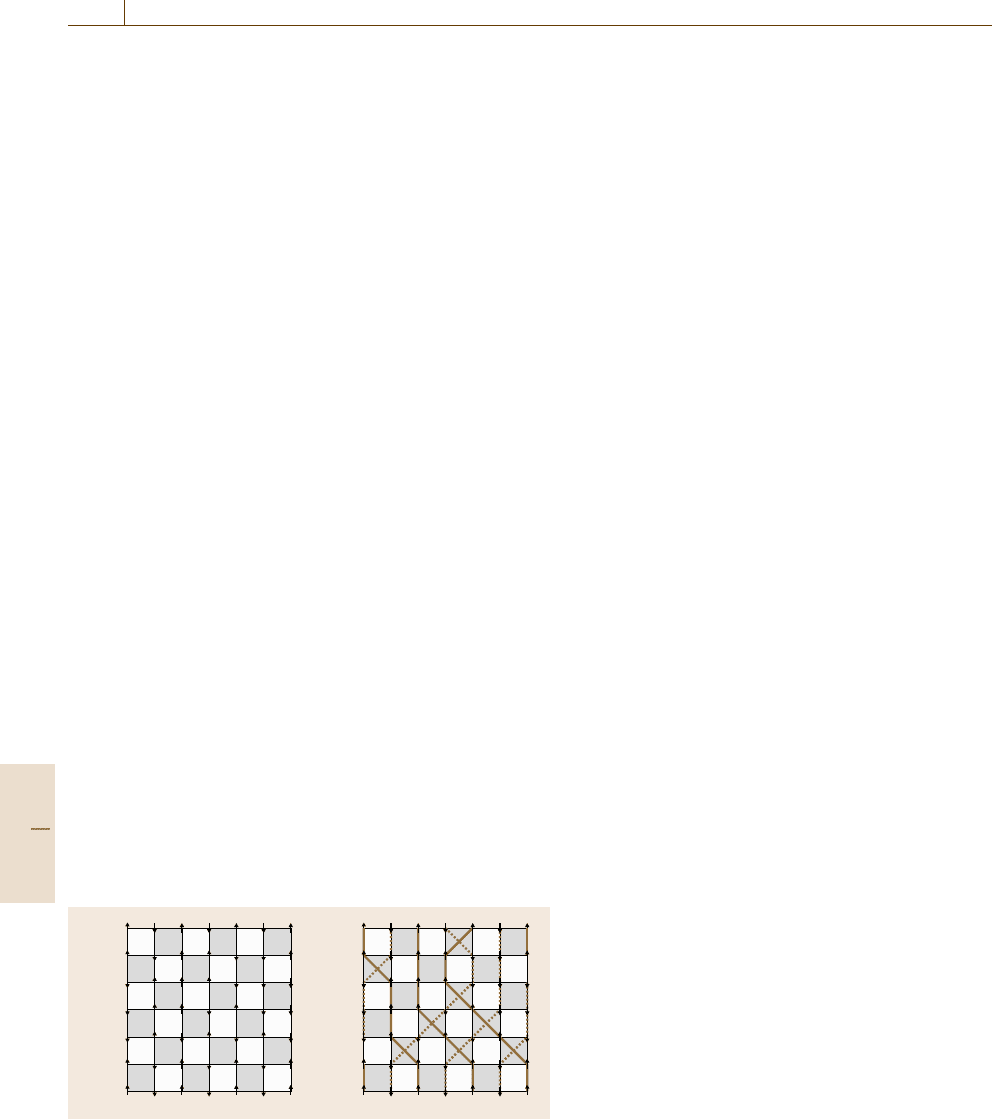

a)

(1)

2

1

.

.

.

m

b)

(1)

2

1

.

.

.

m

1 (1)2 N

s

... 1 (1)2 N

s

...

Fig. 22.4 (a) (d +1)-dimensional space-time for N

s

site quantum

system. (b) World-line configuration. The vertical axis represents

the Trotter axis, and m is the Trotter number

the corresponding (d +1)-dimensional classical sys-

tems. The Suzuki–Trotter transformation is the most

typical procedure of this mapping using the Trotter for-

mula. In order to explain the basics of quantum Monte

Carlo methods, we review first the Suzuki–Trotter for-

malism [22.53].

The partition function of a quantum system is generally defined as

$$Z = \operatorname{Tr} e^{-\beta H} , \tag{22.49}$$

where β and H are the inverse temperature and the Hamiltonian, respectively. Using the generalized Trotter formula, the exponential of a quantum Hamiltonian can be decomposed into a product of exponentials over infinitesimal time slices as

$$e^{-\beta H} = \lim_{n\to\infty} \Big( \prod_i e^{-\Delta\tau H_i} \Big)^{n} , \tag{22.50}$$

where $\Delta\tau = \beta/n$ and $H_i$ is a local Hamiltonian satisfying $H = \sum_i H_i$. Inserting complete sets of states between the time slices, the partition function can be expressed in terms of local weights as

$$Z = \sum_A W(A) , \qquad W(A) = \prod_i w(a_i) . \tag{22.51}$$

Here, A denotes a configuration such as the one shown in Fig. 22.4a, and a is a local configuration on a plaquette. Their weights are denoted by W(A) and w(a), respectively. Plaquette weights are defined on the shaded plaquettes in Fig. 22.4a.
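The convergence of the Trotter decomposition (22.50) can be seen on a small example. The sketch below makes these assumptions:

- the system is a four-site spin-1/2 Heisenberg ring with J = 1 and β = 1;
- each nearest-neighbour bond is taken as one local Hamiltonian $H_i$.

It compares $\operatorname{Tr}[(\prod_i e^{-\Delta\tau H_i})^n]$ with the exact partition function obtained by full diagonalization. The helper names are hypothetical.

```python
import numpy as np

# Spin-1/2 operators S^x, S^y, S^z.
SPIN = [np.array([[0, 1], [1, 0]]) / 2,
        np.array([[0, -1j], [1j, 0]]) / 2,
        np.array([[1, 0], [0, -1]]) / 2]


def site_op(op, i, n_sites):
    """Embed a single-site operator at site i of an n_sites-site system."""
    out = np.array([[1.0]])
    for k in range(n_sites):
        out = np.kron(out, op if k == i else np.eye(2))
    return out


def bond_term(i, j, n_sites, J=1.0):
    """Local Hamiltonian J S_i . S_j as a 2^n x 2^n matrix."""
    return J * sum(site_op(s, i, n_sites) @ site_op(s, j, n_sites) for s in SPIN)


def exp_minus(h, t):
    """exp(-t h) for a Hermitian matrix h, via its eigendecomposition."""
    w, v = np.linalg.eigh(h)
    return (v * np.exp(-t * w)) @ v.conj().T


n_sites, beta = 4, 1.0
# Ring bonds in checkerboard order: (0,1), (2,3), then (1,2), (3,0).
local_terms = [bond_term(i, j, n_sites) for i, j in [(0, 1), (2, 3), (1, 2), (3, 0)]]
Z_exact = np.trace(exp_minus(sum(local_terms), beta)).real


def trotter_Z(n):
    """Tr[(prod_i exp(-dtau H_i))^n] with dtau = beta / n, cf. Eq. (22.50)."""
    dtau = beta / n
    U = np.eye(2 ** n_sites)
    for h in local_terms:
        U = U @ exp_minus(h, dtau)
    return np.trace(np.linalg.matrix_power(U, n)).real


errors = [abs(trotter_Z(n) - Z_exact) for n in (8, 16, 32, 64)]
print(Z_exact, errors)
```

The error decreases as n grows. For this simple product, the first-order term cancels in the trace, so the error falls roughly by a factor of four each time n is doubled.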

In this way, the quantum system is mapped onto a (d+1)-dimensional classical system. This transformation is called the Suzuki–Trotter transformation [22.54, 55]. Quantum Monte Carlo simulations are then performed by updating configurations in the (d+1)-dimensional space-time. For recent progress see [22.56, 57].

22.3.2 World-Line Approach

To explain how quantum Monte Carlo simulations are performed in the (d+1)-dimensional space-time, we review here the world-line algorithm. As an example, we consider the spin-1/2 Heisenberg model, defined by the Hamiltonian

$$H = J \sum_{\langle i,j\rangle} \boldsymbol{S}_i \cdot \boldsymbol{S}_j , \tag{22.52}$$


where the summation is taken over nearest-neighbor sites and $\boldsymbol{S}_i$ denotes the spin operator at site i. All possible local configurations of a plaquette in a time slice, together with their weights, are listed in Table 22.1.

Up spins (or down spins) may be joined by lines as

illustrated in Fig. 22.4b. These lines are called world-

lines [22.58]. In the world-line algorithm, world-line

configurations are updated. One way to update them is

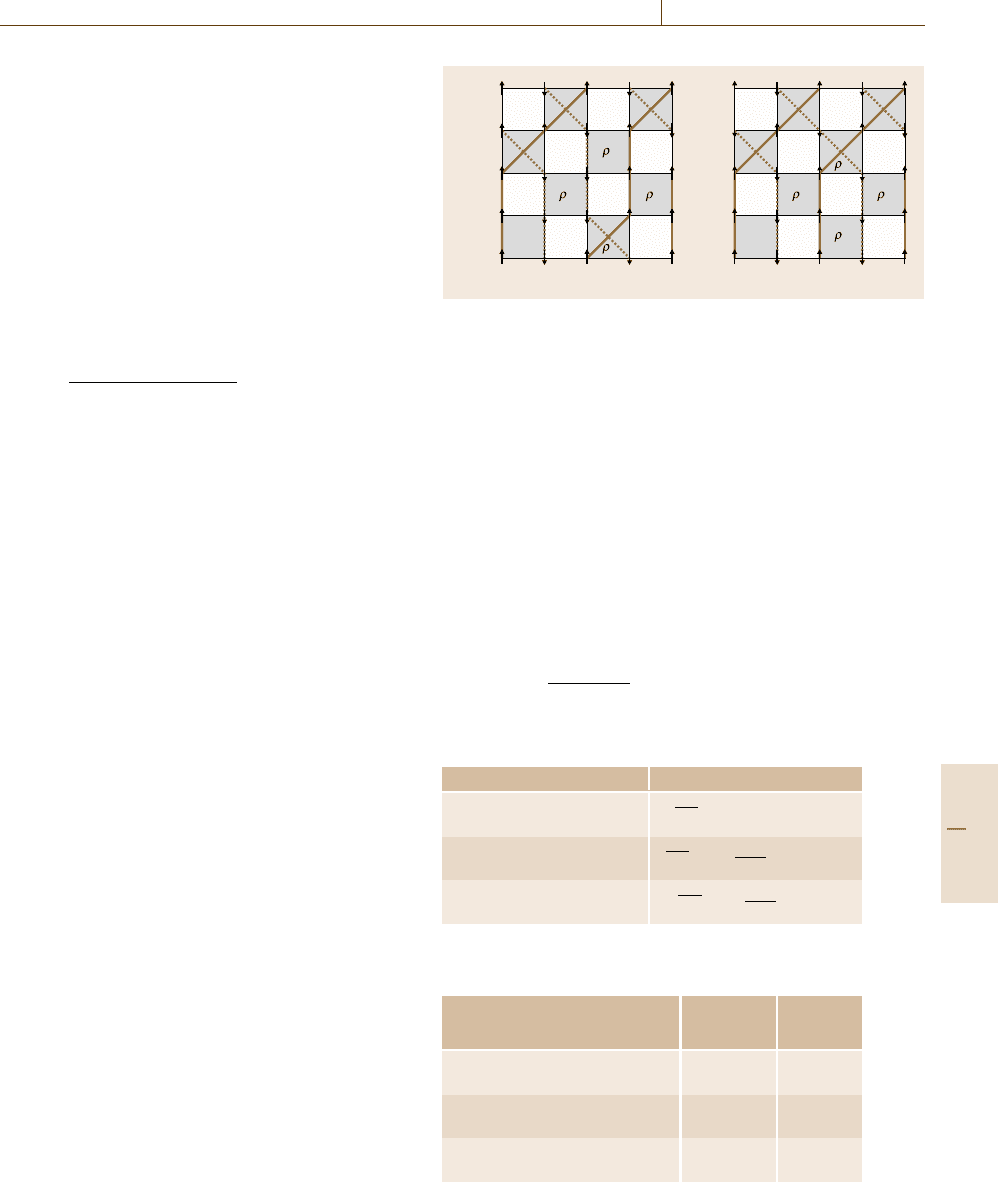

the following: As illustrated in Fig. 22.5,wetrytoflip

four spins (a, b, c, d), taking care so that the flip satisfies

the conservation law of the model. The new world-line

configuration is accepted with the probability

P =

ρ

1

ρ

2

ρ

3

ρ

4

ρ

1

ρ

2

ρ

3

ρ

4

+ρ

1

ρ

2

ρ

3

ρ

4

, (22.53)

based on the heat-bath algorithm (or the Metropolis al-

gorithm). In this way, world-lines are locally moved.

This process is called a local flip. Since the ergodicity

is not satisfied by this type of updates only [22.59], we

need other types of flips. If up spins (or down spins)

align in the imaginary time direction or in the diago-

nal directions, they can flip simultaneously. If up and

down spins align alternately in the space direction, they

can also flip simultaneously. These types of flips are

called global flips [22.60]. Combining these two types

of flips, world-line configurations are updated. Phys-

ical quantities such as magnetization, susceptibilities,

energy and specific heat can be measured using spin

configurations, local weights and their derivatives in the

(d +1)-dimensional space-time [22.61–63].
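The acceptance rule (22.53) and its Metropolis alternative can be written as small functions. This sketch assumes that the four old and four new plaquette weights have already been looked up, for example from Table 22.1. It uses their magnitudes, which amounts to assuming a model without a sign problem. The function names are hypothetical.

```python
import math
import random


def heat_bath_probability(old_weights, new_weights):
    """Eq. (22.53): prod(rho') / (prod(rho) + prod(rho')).

    old_weights: rho_1..rho_4 of the plaquettes touched by the flip, before it;
    new_weights: rho'_1..rho'_4 after it. Magnitudes are used (sign-free assumption).
    """
    w_old = math.prod(abs(r) for r in old_weights)
    w_new = math.prod(abs(r) for r in new_weights)
    return w_new / (w_old + w_new)


def metropolis_probability(old_weights, new_weights):
    """Metropolis alternative: min(1, W_new / W_old); W_old must be nonzero."""
    w_old = math.prod(abs(r) for r in old_weights)
    w_new = math.prod(abs(r) for r in new_weights)
    return min(1.0, w_new / w_old)


def try_local_flip(old_weights, new_weights, rng, rule=heat_bath_probability):
    """Return True if the proposed four-spin flip is accepted."""
    return rng.random() < rule(old_weights, new_weights)


print(try_local_flip([0.9, 1.1, 0.95, 1.0], [0.05, 0.05, 1.0, 1.0], random.Random(0)))
```

Both rules satisfy detailed balance with respect to the product of plaquette weights. They differ when the old and new weights are equal: the heat-bath form then accepts with probability 1/2, whereas the Metropolis form always accepts.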

In the world-line algorithm, the width of the time slice is finite, so an extrapolation to $\Delta\tau \to 0$ is necessary after the simulations. The error due to finite $\Delta\tau$ is systematic. It is proportional to $\Delta\tau^2$, $\Delta\tau^4$, etc., depending on how the decomposition in (22.50) is carried out [22.64, 65]. In the continuous-time algorithm, the discreteness of the Trotter slices is removed by taking $\Delta\tau \to 0$ in the definition of the local probabilities, as discussed later.
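The extrapolation $\Delta\tau \to 0$ can be sketched on the same kind of small example. The sketch makes these assumptions:

- the system is a four-site Heisenberg ring split into two sets of commuting bonds, A and B;
- the decomposition is the symmetric one, $e^{-\Delta\tau A/2}e^{-\Delta\tau B}e^{-\Delta\tau A/2}$, whose error in Z is of order $\Delta\tau^2$.

It fits $Z(\Delta\tau) = Z_0 + c\,\Delta\tau^2$ to three Trotter numbers by least squares and takes $Z_0$ as the extrapolated value. The system, parameters and helper names are illustrative assumptions.

```python
import numpy as np

SPIN = [np.array([[0, 1], [1, 0]]) / 2,
        np.array([[0, -1j], [1j, 0]]) / 2,
        np.array([[1, 0], [0, -1]]) / 2]


def site_op(op, i, n_sites):
    """Embed a single-site operator at site i of an n_sites-site system."""
    out = np.array([[1.0]])
    for k in range(n_sites):
        out = np.kron(out, op if k == i else np.eye(2))
    return out


def bond_term(i, j, n_sites, J=1.0):
    """J S_i . S_j as a 2^n x 2^n matrix."""
    return J * sum(site_op(s, i, n_sites) @ site_op(s, j, n_sites) for s in SPIN)


def exp_minus(h, t):
    """exp(-t h) for a Hermitian matrix h."""
    w, v = np.linalg.eigh(h)
    return (v * np.exp(-t * w)) @ v.conj().T


n_sites, beta = 4, 1.0
even = sum(bond_term(i, j, n_sites) for i, j in [(0, 1), (2, 3)])  # A: commuting bonds
odd = sum(bond_term(i, j, n_sites) for i, j in [(1, 2), (3, 0)])   # B: commuting bonds
Z_exact = np.trace(exp_minus(even + odd, beta)).real


def symmetric_trotter_Z(n):
    """Tr[(e^{-dtau A/2} e^{-dtau B} e^{-dtau A/2})^n] with dtau = beta / n."""
    dtau = beta / n
    half = exp_minus(even, dtau / 2)
    U = half @ exp_minus(odd, dtau) @ half
    return np.trace(np.linalg.matrix_power(U, n)).real


slices = np.array([16, 32, 64])
dtaus = beta / slices
Z_values = np.array([symmetric_trotter_Z(n) for n in slices])
# Least-squares fit Z(dtau) = Z0 + c * dtau^2; the intercept Z0 is the extrapolation.
c, Z0 = np.polyfit(dtaus ** 2, Z_values, 1)
print(Z_exact, Z_values[-1], Z0)
```

In a Monte Carlo study, the value at each $\Delta\tau$ carries a statistical error bar, so a weighted fit would normally be used. Exact traces are used here only to make the systematic part visible.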

22.3.3 Cluster Algorithm

Spin configurations in the (d+1)-dimensional space-time can be updated more efficiently by using the cluster algorithm. For the cluster algorithm in quantum systems [22.35, 40], readers may refer to the excellent overviews in [22.66, 67]. In the cluster algorithm, spin configurations such as the one in Fig. 22.4a are mapped onto graphs [22.68, 69]. The weights V(G) of the graphs G are defined so as to satisfy the relation

$$W(A) = \sum_G V(G)\, \Delta(A,G) , \tag{22.54}$$

where $\Delta(A,G)$ is one if spin configuration A is compatible with graph G, and zero otherwise. Similarly, the local version of (22.54) can be defined as

$$w(a) = \sum_g v(g)\, \delta(a,g) . \tag{22.55}$$

For the Heisenberg model defined in (22.52), $\delta(a,g)$ is chosen as shown in Table 22.2.

Fig. 22.5a,b Local flip in the world-line algorithm in a four-spin system with the Trotter number m = 4 (horizontal axis: sites 1–4; vertical axis: Trotter slices 1–4; the spins to be flipped are labeled a, b, c, d)

The probability P(g|a) of assigning graph g to configuration a is given by

$$P(g|a) = \frac{\delta(a,g)\, v(g)}{w(a)} . \tag{22.56}$$

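Table 22.1 Local configurations and their weights. Each plaquette configuration a is written as (spins on sites i, j in the upper time slice)/(spins in the lower time slice).

| a | w(a) |
|---|---|
| ↑↑/↑↑, ↓↓/↓↓ | $e^{-\Delta\tau J/4}$ |
| ↑↓/↑↓, ↓↑/↓↑ | $e^{\Delta\tau J/4}\cosh(\Delta\tau J/2)$ |
| ↑↓/↓↑, ↓↑/↑↓ | $-e^{\Delta\tau J/4}\sinh(\Delta\tau J/2)$ |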
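Table 22.1 and the representation (22.51) can both be checked for the smallest case: two sites coupled by a single bond. The sketch below uses hypothetical helper names and the basis ordering stated in the comments. It proceeds in two steps:

1. It computes the plaquette weights $\langle a_{\text{upper}}|e^{-\Delta\tau J\,\boldsymbol{S}_i\cdot\boldsymbol{S}_j}|a_{\text{lower}}\rangle$ by diagonalization and compares them with the table.
2. It sums $W(A)$ over all world-line configurations of four time slices.

For a single bond there is no Trotter error, so the sum should reproduce $Z = 3e^{-\beta J/4} + e^{3\beta J/4}$ up to rounding.

```python
import itertools
import math
import numpy as np

# Two-site basis |s_i s_j>, index 2*s_i + s_j with 0 = up, 1 = down:
# 0 = up-up, 1 = up-down, 2 = down-up, 3 = down-down.
SPIN = [np.array([[0, 1], [1, 0]]) / 2,
        np.array([[0, -1j], [1j, 0]]) / 2,
        np.array([[1, 0], [0, -1]]) / 2]


def plaquette_matrix(J, dtau):
    """<a_upper| exp(-dtau J S_i.S_j) |a_lower> for all 4 x 4 plaquette configurations."""
    h = J * sum(np.kron(s, s) for s in SPIN)
    w, v = np.linalg.eigh(h)
    return ((v * np.exp(-dtau * w)) @ v.conj().T).real


def table_22_1(J, dtau):
    """The three nonzero weights listed in Table 22.1."""
    x = dtau * J
    return (math.exp(-x / 4),                     # up-up / up-up
            math.exp(x / 4) * math.cosh(x / 2),   # up-down / up-down
            -math.exp(x / 4) * math.sinh(x / 2))  # up-down / down-up


def worldline_sum(J, beta, n_slices):
    """Z = sum_A W(A) with W(A) = product of plaquette weights, Eq. (22.51)."""
    w = plaquette_matrix(J, beta / n_slices)
    Z, smallest = 0.0, math.inf
    for path in itertools.product(range(4), repeat=n_slices):  # one state per slice
        W = 1.0
        for k in range(n_slices):  # periodic boundary in imaginary time
            W *= w[path[(k + 1) % n_slices], path[k]]
        Z += W
        smallest = min(smallest, W)
    return Z, smallest


J, beta = 1.0, 2.0
plaq = plaquette_matrix(J, 0.5)
print(plaq[0, 0], plaq[1, 1], plaq[2, 1], table_22_1(J, 0.5))
Z, smallest = worldline_sum(J, beta, 4)
Z_exact = 3 * math.exp(-beta * J / 4) + math.exp(3 * beta * J / 4)  # triplet + singlet
print(Z, Z_exact, smallest)
```

Although the exchange weight in the last row of Table 22.1 is negative, every closed two-site configuration contains an even number of exchange plaquettes, so $W(A) \ge 0$ in this example.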

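Table 22.2 Function δ(a, g) for mapping local configurations to graphs (‖: vertical graph; =: horizontal graph)

| a | g = ‖ (vertical) | g = = (horizontal) |
|---|---|---|
| ↑↑/↑↑, ↓↓/↓↓ | 1 | 0 |
| ↑↓/↑↓, ↓↑/↓↑ | 1 | 1 |
| ↑↓/↓↑, ↓↑/↑↓ | 0 | 1 |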
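The graph weights and assignment probabilities of (22.55) and (22.56) follow directly from Tables 22.1 and 22.2. This sketch is an illustration that assumes J > 0 and uses the magnitude of the negative exchange weight. Using the magnitude is justified when the overall sign of W(A) is positive, e.g., for the antiferromagnet on a bipartite lattice. The graph weights are then fixed as follows:

- the parallel configuration fixes $v(\|)$;
- the exchange configuration fixes $v(=)$;
- the remaining configuration then satisfies (22.55) automatically, because $e^{-x/4} + e^{x/4}\sinh(x/2) = e^{x/4}\cosh(x/2)$ with $x = \Delta\tau J$.

The labels `PARALLEL`, `STRAIGHT`, `EXCHANGE`, `VERTICAL` and `HORIZONTAL` are hypothetical names.

```python
import math

# Local configuration types of Table 22.1 (hypothetical labels):
# PARALLEL = up-up/up-up, STRAIGHT = up-down/up-down, EXCHANGE = up-down/down-up.
PARALLEL, STRAIGHT, EXCHANGE = "parallel", "straight", "exchange"
VERTICAL, HORIZONTAL = "vertical", "horizontal"

# delta(a, g) from Table 22.2.
DELTA = {
    PARALLEL: {VERTICAL: 1, HORIZONTAL: 0},
    STRAIGHT: {VERTICAL: 1, HORIZONTAL: 1},
    EXCHANGE: {VERTICAL: 0, HORIZONTAL: 1},
}


def plaquette_weight(a, dtau, J):
    """|w(a)| from Table 22.1; J > 0 is assumed, and the exchange sign is dropped."""
    x = dtau * J
    if a == PARALLEL:
        return math.exp(-x / 4)
    if a == STRAIGHT:
        return math.exp(x / 4) * math.cosh(x / 2)
    return math.exp(x / 4) * math.sinh(x / 2)


def graph_weights(dtau, J):
    """v(g) solving Eq. (22.55) with Table 22.2."""
    # A parallel plaquette admits only the vertical graph, an exchange one only the horizontal.
    return {VERTICAL: plaquette_weight(PARALLEL, dtau, J),
            HORIZONTAL: plaquette_weight(EXCHANGE, dtau, J)}


def graph_probabilities(a, dtau, J):
    """Eq. (22.56): P(g|a) = delta(a, g) v(g) / w(a)."""
    v = graph_weights(dtau, J)
    w = plaquette_weight(a, dtau, J)
    return {g: DELTA[a][g] * v[g] / w for g in v}


for config in (PARALLEL, STRAIGHT, EXCHANGE):
    print(config, graph_probabilities(config, 0.2, 1.0))
```

For the configuration ↑↓/↑↓, the horizontal graph is chosen with probability $\tanh(\Delta\tau J/2)$, which vanishes as $\Delta\tau \to 0$.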
