Russ J.C. Image Analysis of Food Microstructure


Fourier transforms will be encountered several times in subsequent chapters on enhancement and measurement. The basis for all of these procedures is Fourier’s theorem, which states that any signal (such as brightness as a function of position) can be constructed by adding together a series of sinusoids with different frequencies, each with its own amplitude and phase. Calculating those amplitudes and phases with a Fast Fourier Transform (FFT) produces the data for a display, the power spectrum, that shows the amplitude of each sinusoid as a function of frequency and orientation. Most image processing texts (see J. C. Russ, The Image Processing Handbook, 4th edition, CRC Press, Boca Raton, FL) include extensive details about the mathematics and programming procedures for this calculation. For our purposes here, the math is less important than observing and becoming familiar with the results.
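To make the idea of spikes in the power spectrum concrete, here is a minimal sketch in Python with NumPy (an assumption; the text does not describe any particular software). It builds a test image from two sinusoids and lists where the spikes fall in the FFT amplitude spectrum. The helper names `amplitude_spectrum` and `spectral_peaks` and the 10% detection threshold are hypothetical choices. Programs that display a "power spectrum" often show the squared or log-scaled amplitude instead, but the spike positions are the same.

```python
import numpy as np

def amplitude_spectrum(image):
    """FFT magnitude, shifted so that zero frequency sits at the center of the array."""
    return np.abs(np.fft.fftshift(np.fft.fft2(image)))

def spectral_peaks(image, rel_threshold=0.1):
    """(fy, fx) offsets from the center of every point above rel_threshold * maximum."""
    amp = amplitude_spectrum(image)
    cy, cx = amp.shape[0] // 2, amp.shape[1] // 2
    rows, cols = np.nonzero(amp > rel_threshold * amp.max())
    return [(int(r - cy), int(c - cx)) for r, c in zip(rows, cols)]

# Test image: vertical lines (4 cycles across the width) plus weaker
# diagonal lines (6 cycles along both x and y).
n = 64
y, x = np.mgrid[0:n, 0:n]
image = np.sin(2 * np.pi * 4 * x / n) + 0.5 * np.sin(2 * np.pi * (6 * x + 6 * y) / n)

# Each sinusoid appears as a symmetric pair of points about the center.
for fy, fx in spectral_peaks(image):
    radius = np.hypot(fx, fy)               # distance from center = frequency (cycles per image)
    angle = np.degrees(np.arctan2(fy, fx))  # direction from center = orientation
    print(f"offset ({fy:+d}, {fx:+d})  radius {radius:.2f}  angle {angle:.0f} deg")
```

The vertical lines give two points at radius 4 on the horizontal axis, and the diagonal lines give two points at a radius of about 8.5 along the diagonal, each with half the amplitude of the first pair.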

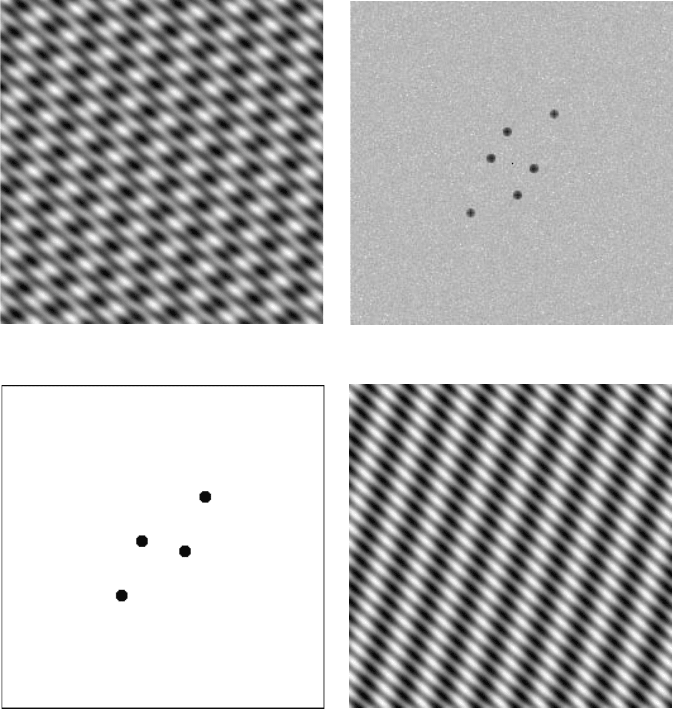

For a simple image consisting of just a few obvious sinusoids, the power spectrum consists of just the corresponding number of points (each spike is shown twice because the plot has 180° rotational symmetry). For each one, the distance from the center is proportional to frequency and the direction from the center identifies the orientation. In many cases it is easier to measure the spacings and orientations of structures from the FFT power spectrum than from the original image, and it is also easier to remove one or another component of an image by filtering or masking the FFT. In the example shown in Figure 2.29, using a mask or filter to set the amplitude to zero for one of the frequencies and then performing an inverse FFT removes the corresponding set of lines without affecting anything else.
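The masking step of Figure 2.29 can be sketched the same way (again Python with NumPy; the helper `remove_frequency` and the chosen frequencies are hypothetical). Because the image is real-valued, each sinusoid occupies a symmetric pair of FFT coefficients, so both members of the pair are set to zero before the inverse transform.

```python
import numpy as np

def remove_frequency(image, fy, fx):
    """Zero the FFT coefficient at frequency (fy, fx) and its symmetric partner, then invert."""
    spectrum = np.fft.fft2(image)
    n_rows, n_cols = image.shape
    for ky, kx in ((fy, fx), (-fy, -fx)):
        spectrum[ky % n_rows, kx % n_cols] = 0.0   # negative frequencies wrap to the far end
    return np.real(np.fft.ifft2(spectrum))

# Three superimposed sets of lines, as in Figure 2.29a (frequencies chosen arbitrarily).
n = 64
y, x = np.mgrid[0:n, 0:n]
vertical = np.sin(2 * np.pi * 4 * x / n)
horizontal = np.sin(2 * np.pi * 5 * y / n)
diagonal = np.sin(2 * np.pi * (3 * x + 3 * y) / n)
image = vertical + horizontal + diagonal

# Mask out the horizontal lines (5 cycles along y, none along x).
result = remove_frequency(image, 5, 0)
print(np.abs(result - (vertical + diagonal)).max())   # rounding error only
```

Here the mask is a single pair of coefficients. A practical mask usually zeroes a small disk around each spike, because real patterns rarely fit an exact whole number of cycles across the image and their spikes spread over neighboring frequencies.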

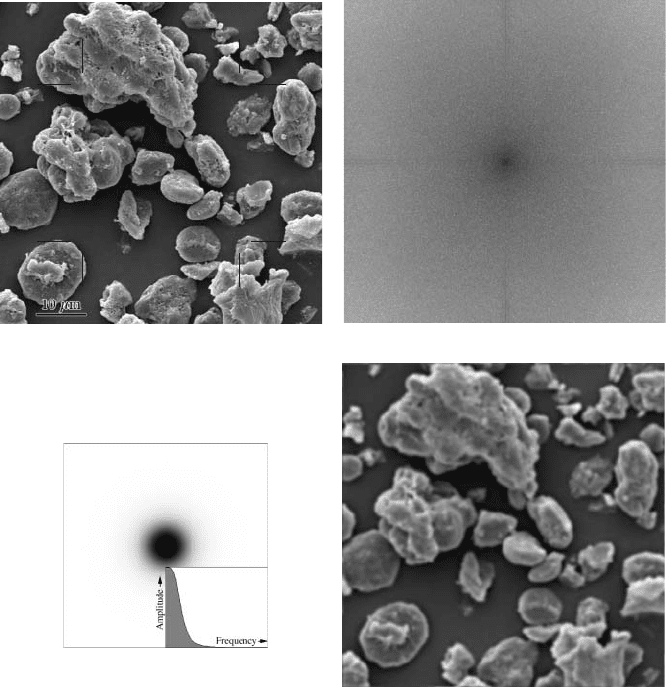

A low pass filter such as the Gaussian keeps the amplitude of low frequency sinusoids nearly unchanged but progressively reduces, and finally erases, the amplitude of high frequencies (large radii in the power spectrum). For Gaussian filters with a large standard deviation, it is more efficient to execute the operation by performing the FFT, filtering the data there, and performing an inverse FFT, as shown in Figure 2.30, even though those unfamiliar with this technique may find the operation easier to understand in terms of a kernel of neighborhood weights. Mathematically, it can be shown (by the convolution theorem) that these two ways of carrying out the procedure are identical: convolving the image with a kernel is equivalent to multiplying its Fourier transform by the transform of that kernel.
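The equivalence can be checked numerically. The sketch below (Python with NumPy; the function names are hypothetical) smooths a random image once with a kernel of Gaussian weights applied neighborhood by neighborhood, and once by multiplying Fourier transforms. With wrap-around (periodic) edges, which is what the FFT implies, the two results agree to rounding error. With other edge handling they would differ only near the borders.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Square kernel of Gaussian weights, truncated at 3 sigma and normalized to sum 1."""
    radius = int(np.ceil(3 * sigma))
    offsets = np.arange(-radius, radius + 1)
    g = np.exp(-offsets**2 / (2.0 * sigma**2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()

def smooth_spatial(image, kernel):
    """Weighted neighborhood average; edges wrap around (periodic boundary)."""
    radius = kernel.shape[0] // 2
    out = np.zeros(image.shape)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            out += kernel[dy + radius, dx + radius] * np.roll(image, (dy, dx), axis=(0, 1))
    return out

def smooth_fft(image, kernel):
    """Same filter applied as a multiplication in frequency space (convolution theorem)."""
    radius = kernel.shape[0] // 2
    padded = np.zeros(image.shape)
    padded[:kernel.shape[0], :kernel.shape[1]] = kernel
    padded = np.roll(padded, (-radius, -radius), axis=(0, 1))   # kernel center -> pixel (0, 0)
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))

rng = np.random.default_rng(0)
image = rng.random((64, 64))
kernel = gaussian_kernel(sigma=2.0)
difference = np.abs(smooth_spatial(image, kernel) - smooth_fft(image, kernel)).max()
print(difference)   # rounding error only
```

The neighborhood loop performs one multiply per kernel weight per pixel, so its cost grows with the square of the kernel radius, while the FFT route costs the same whatever the standard deviation. That is why the FFT becomes the more efficient choice for large Gaussians.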

There is another class of neighborhood filters that have no equivalent in frequency space, because they are not linear operations. These are ranking filters, which operate by listing the values of the pixels in the neighborhood in order of brightness. From this list it is possible to replace the central pixel with the darkest, lightest or median value, for example. The median filter, which uses the value from the middle of the list, is also an effective noise reducer. Unlike the averaging or Gaussian smoothing filters, the median filter does not blur or shift edges, and is thus generally preferred for reducing noise. Figures 2.26d and 2.27d include a comparison of median filtering with neighborhood smoothing.
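A ranking filter can be sketched by stacking shifted copies of the image, one per neighbor, and taking a statistic across the stack (Python with NumPy; `rank_filter` and its replicated-border handling are assumptions, not the book's implementation). Passing `np.median`, `np.min` or `np.max` gives the median, darkest or lightest value. `np.mean` is used below only to show, for comparison, how an ordinary average blurs the same edge.

```python
import numpy as np

def circular_offsets(radius):
    """(dy, dx) offsets of all pixels within `radius` of the center."""
    r = int(radius)
    return [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
            if dy * dy + dx * dx <= radius * radius]

def rank_filter(image, radius, statistic=np.median):
    """Replace each pixel by a rank statistic (np.median, np.min, np.max) of its neighborhood."""
    r = int(radius)
    padded = np.pad(image.astype(float), r, mode="edge")   # replicate border pixels
    h, w = image.shape
    stack = np.stack([padded[r + dy:r + dy + h, r + dx:r + dx + w]
                      for dy, dx in circular_offsets(radius)])
    return statistic(stack, axis=0)

# A vertical step edge between brightness 0 and 100.
step = np.zeros((9, 9))
step[:, 5:] = 100.0

median = rank_filter(step, 1.5, np.median)   # the edge stays exactly where it was
mean = rank_filter(step, 1.5, np.mean)       # averaging for comparison: the edge is blurred
print(median[4])
print(mean[4].round(1))
```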

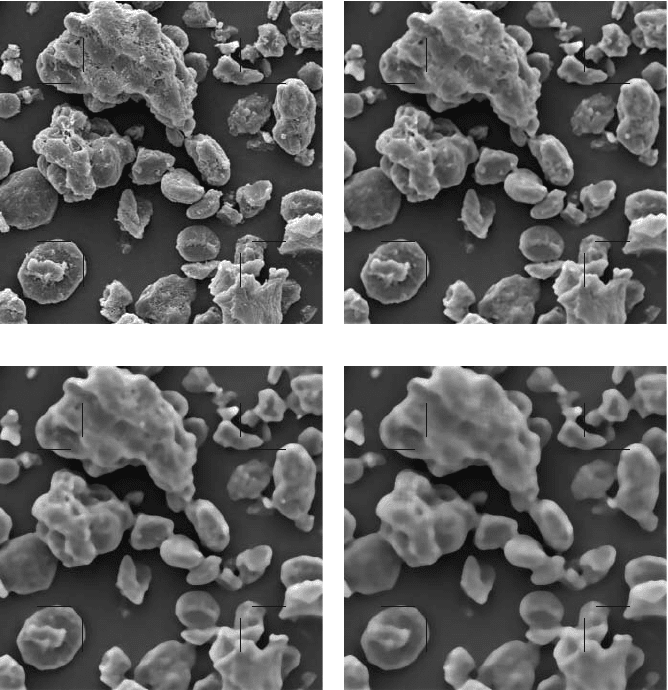

Increasing the size of the neighborhood used in the median is used to define the

size of details — including spaces between features — that are defined as noise,

because anything smaller than the radius of the neighborhood cannot contribute the

median value and is eliminated. Figure 2.31 illustrates the effect of neighborhood

size on the median filter. Since many cameras provide images with more pixels than

the actual resolution of the device provides, it is often important to eliminate noise

2241_C02.fm Page 104 Thursday, April 28, 2005 10:23 AM

Copyright © 2005 CRC Press LLC

that covers several pixels. Retention of fine detail requires a small neighborhood

size, but because it does not shift edges, a small median can be repeated several

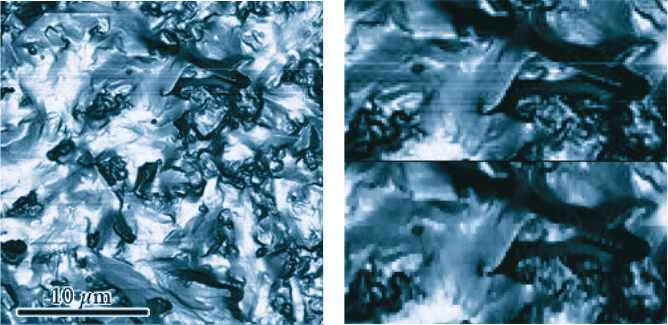

times to reduce noise. If the noise is not isotropic, such as scan line noise from

video cameras or AFMs, or scratches on film, the use of a neighborhood that is not

circular but instead is shaped to operate along a line perpendicular to the noise, the

median can also be an effective noise removal tool (see Figure 2.32).
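For anisotropic noise such as horizontal scan lines, the neighborhood can be a one-pixel-wide vertical line, as in Figure 2.32. The following is a sketch in Python with NumPy; the helper `line_median` and the synthetic test image are hypothetical.

```python
import numpy as np

def line_median(image, length=5, axis=0):
    """Median over a one-pixel-wide line of `length` pixels along `axis` (0 = vertical)."""
    half = length // 2
    pad = [(0, 0), (0, 0)]
    pad[axis] = (half, half)                      # pad only along the line direction
    padded = np.pad(image.astype(float), pad, mode="edge")
    n = image.shape[axis]
    stack = np.stack([np.take(padded, np.arange(k, k + n), axis=axis) for k in range(length)])
    return np.median(stack, axis=0)

# Synthetic image: brightness varies across x but not down each column.
_, x = np.mgrid[0:32, 0:32]
clean = 10.0 + 2.0 * x
noisy = clean.copy()
noisy[12, :] += 40.0    # one bright scan line
noisy[20, :] -= 25.0    # and one dark scan line

restored = line_median(noisy, length=5, axis=0)
```

Because each five-pixel window here contains at most one bad line, the median restores every column exactly. A horizontal feature less than half the line length thick would be removed along with the noise, so the line should be kept as short as the noise permits.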

One problem with the median filter is that while it does not shift or blur edges,

it does tend to round corners and to erase fine lines (which, if they are narrower

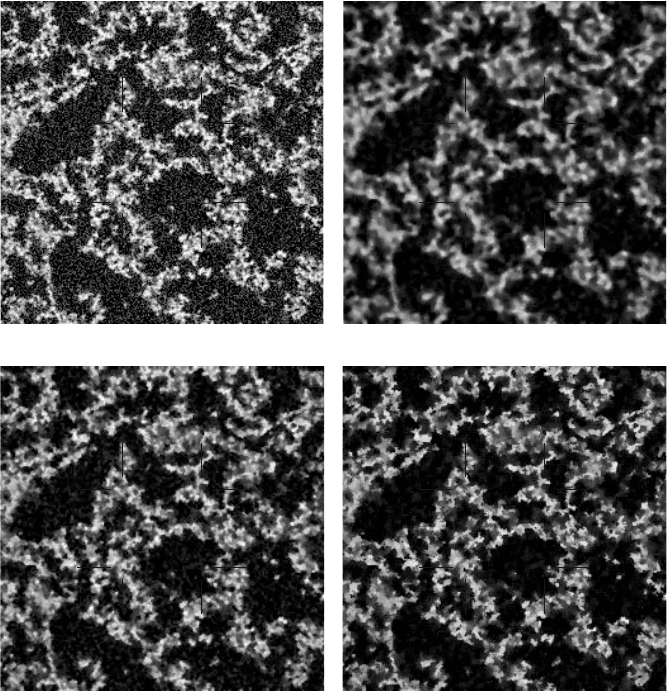

(a) (b)

(c) (c)

FIGURE 2.29 Illustration of Fourier transform, and the relationship between frequency pat-

terns in the pixel domain and spikes in the power spectrum: (a) image produced by superpo-

sition of three sets of lines; (b) FFT power spectrum of the image in (a), showing three spikes

(each one plotted twice with rotational symmetry); (c) mask used to select just two of the

frequencies; (d) inverse FFT using the mask in (c), showing just two sets of lines.

2241_C02.fm Page 105 Thursday, April 28, 2005 10:23 AM

Copyright © 2005 CRC Press LLC

than the radius of the neighborhood, are considered to be noise). This can be

corrected by using the hybrid median. Instead of a single ranking operation on all

of the pixels in the neighborhood, the hybrid median performs multiple rankings on

subsets of the neighborhood. For the case of the 3 × 3 neighborhood, the ranking

is performed first on the 5 pixels that form a + pattern, then the five that form an x,

and finally on the original central pixel and the median results from the first two

rankings. The final result is then saved as the new pixel value. This method can be

extended to larger neighborhoods and more subsets in additional orientations. As

shown in the example in Figure 2.33c, fine lines and sharp corners are preserved.
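The 3 × 3 hybrid median described above can be sketched as three ranking steps (Python with NumPy; the helper names are hypothetical and replicated borders are an assumption). The example compares it with a conventional 3 × 3 median on a one-pixel-wide line and on a sharp corner.

```python
import numpy as np

PLUS = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]      # the five pixels of the "+"
CROSS = [(0, 0), (-1, -1), (-1, 1), (1, -1), (1, 1)]   # the five pixels of the "x"
SQUARE = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]

def neighbors(image, offsets):
    """Stack of shifted copies of the image, one per (dy, dx) offset; borders replicated."""
    padded = np.pad(image.astype(float), 1, mode="edge")
    h, w = image.shape
    return np.stack([padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w] for dy, dx in offsets])

def median_3x3(image):
    """Conventional median: a single ranking of all nine pixels."""
    return np.median(neighbors(image, SQUARE), axis=0)

def hybrid_median_3x3(image):
    """Rank the '+' subset, rank the 'x' subset, then rank the center with those two medians."""
    plus_median = np.median(neighbors(image, PLUS), axis=0)
    cross_median = np.median(neighbors(image, CROSS), axis=0)
    center = image.astype(float)
    return np.median(np.stack([center, plus_median, cross_median]), axis=0)

line = np.zeros((7, 7))
line[3, :] = 100.0          # bright line one pixel wide
corner = np.zeros((9, 9))
corner[3:, 3:] = 100.0      # bright region with a sharp corner at (3, 3)

print(median_3x3(line)[3])          # line erased
print(hybrid_median_3x3(line)[3])   # line kept
print(median_3x3(corner)[3, 3], hybrid_median_3x3(corner)[3, 3])
```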

FIGURE 2.30 Low pass (smoothing) filter in frequency space: (a) original (SEM image of spray dried soy protein isolate particles); (b) FFT power spectrum; (c) filter that keeps low frequencies and attenuates high frequencies; (d) inverse FFT produces smoothed result.


Another approach to modifying the neighborhood is the conditional median (Figure 2.33d). In addition to the radius of a circular neighborhood, the user specifies a threshold value. Pixels whose difference from the central pixel exceeds the threshold are not included in the ranking operation. This technique also preserves fine lines and irregular shapes.
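Here is a sketch of the conditional median in Python with NumPy (`conditional_median` is a hypothetical name). Neighbors that differ from the central pixel by more than the threshold are marked as missing (NaN) and ignored by `np.nanmedian`. The central pixel always qualifies, so no neighborhood is ever empty.

```python
import numpy as np

def conditional_median(image, radius, threshold):
    """Median over a circular neighborhood, ignoring pixels that differ from the center by > threshold."""
    r = int(radius)
    img = image.astype(float)
    padded = np.pad(img, r, mode="edge")
    h, w = img.shape
    offsets = [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
               if dy * dy + dx * dx <= radius * radius]
    stack = np.stack([padded[r + dy:r + dy + h, r + dx:r + dx + w] for dy, dx in offsets])
    # Excluded neighbors become NaN so that nanmedian skips them.
    kept = np.where(np.abs(stack - img) <= threshold, stack, np.nan)
    return np.nanmedian(kept, axis=0)

image = np.zeros((9, 9))
image[4, :] = 100.0      # fine bright line, far above the threshold
image[1, 1] = 6.0        # small noise, within the threshold of its neighbors
result = conditional_median(image, radius=1.5, threshold=20.0)
```

The line survives because its dark neighbors are excluded from its ranking, while the small bump is ranked together with its similar neighbors and disappears.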

All of these descriptions of a median filter depend on being able to rank the pixels in the neighborhood in order of brightness. Their application to a grey scale image is straightforward, but what about color images? In many cases with digital cameras, the noise content of each channel is different. The blue channel in particular generally has a higher noise level than the others, because silicon detectors are relatively insensitive to short wavelengths and more amplification is required. The blue channel also typically has less resolution than the green channel (the Bayer pattern has half as many blue filters as green ones). In these cases, the RGB channels may be filtered separately, using different amounts of noise reduction (e.g., different neighborhood sizes).

FIGURE 2.31 Effect of the neighborhood size used for the median filter: (a) original image; (b) 5 pixel diameter; (c) 9 pixel diameter; (d) 13 pixel diameter.
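Independent filtering of the RGB channels with different neighborhood sizes might look like the following sketch (Python with NumPy). The sizes 3, 3 and 5 for red, green and blue are hypothetical choices reflecting the noisier blue channel, not values from the text.

```python
import numpy as np

def median_square(channel, size):
    """Median over a size x size square neighborhood (size odd); borders replicated."""
    half = size // 2
    padded = np.pad(channel.astype(float), half, mode="edge")
    h, w = channel.shape
    stack = np.stack([padded[dy:dy + h, dx:dx + w]
                      for dy in range(size) for dx in range(size)])
    return np.median(stack, axis=0)

def median_per_channel(rgb, sizes=(3, 3, 5)):
    """Filter R, G and B independently, each with its own neighborhood size."""
    return np.stack([median_square(rgb[..., c], s) for c, s in enumerate(sizes)], axis=-1)

# A 3 x 3 blotch of noise that appears only in the blue channel.
rgb = np.zeros((12, 12, 3))
rgb[5:8, 5:8, 2] = 200.0

print(median_square(rgb[..., 2], 3).max())    # a 3 x 3 median leaves the blotch
print(median_per_channel(rgb)[..., 2].max())  # the 5 x 5 median used for blue removes it
```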

The problem with independent channel filtering is that it can alter the proportions of the different color signals, introducing new colors that are visually distracting. It is usually better to filter only the intensity channel, leaving the color information unchanged. Another approach uses the full color information in a median filter. The simplest version uses a brightness value for each pixel, usually just the sum of the red, green and blue values, to select the neighbor whose color values replace those of the central pixel. This is computationally simpler but does not work as well as a true color median.
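The brightness-ranked shortcut can be sketched as follows (Python with NumPy; the name `brightness_median_rgb` and the 3 × 3 square neighborhood are assumptions). Brightness is taken as R + G + B, as the text suggests, and the complete color of the neighbor with the median brightness is copied, so no new colors can be created.

```python
import numpy as np

def brightness_median_rgb(rgb, size=3):
    """Copy the full color of the neighbor whose R+G+B brightness is the median."""
    half = size // 2
    img = rgb.astype(float)
    h, w, _ = img.shape
    padded = np.pad(img, ((half, half), (half, half), (0, 0)), mode="edge")
    # stack has shape (size*size, h, w, 3): one shifted color image per neighbor
    stack = np.stack([padded[dy:dy + h, dx:dx + w]
                      for dy in range(size) for dx in range(size)])
    brightness = stack.sum(axis=-1)                  # (n, h, w)
    order = np.argsort(brightness, axis=0)           # neighbors ranked by brightness
    median_index = order[stack.shape[0] // 2]        # (h, w)
    rows, cols = np.indices((h, w))
    return stack[median_index, rows, cols]           # (h, w, 3)

# Grey field with one red pixel of lower total brightness.
rgb = np.full((7, 7, 3), 100.0)
rgb[3, 3] = (255.0, 0.0, 0.0)
result = brightness_median_rgb(rgb)
```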

Finding the median pixel using the color values requires plotting each pixel in the neighborhood as a point in color space, using one of the previously described systems of coordinates. It is then possible to calculate the sum of distances from each point to all of the others. The median pixel is the one whose point has the smallest sum of distances; in other words, it is the point closest to all of the other points. It is also possible, and equivalent, to define this point in terms of the angles of vectors to the other points. In either case, the color values from the median point are then assigned to the central pixel of the neighborhood. The color median can be used in conjunction with any of the neighborhood modification schemes (hybrid median, conditional median, etc.).
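Here is a sketch of the true color (vector) median, assuming RGB coordinates and Euclidean distance (another of the color spaces discussed earlier could be substituted; the function name is hypothetical). For each pixel it sums the distances from every neighbor's color to all the others and copies the color with the smallest sum. The example patch shows why this matters: a channel-by-channel median of the same nine colors produces magenta, which is not present anywhere in the patch.

```python
import numpy as np

def vector_median_rgb(rgb, size=3):
    """Replace each pixel by the neighbor color with the smallest summed distance to the others."""
    half = size // 2
    img = rgb.astype(float)
    h, w, _ = img.shape
    padded = np.pad(img, ((half, half), (half, half), (0, 0)), mode="edge")
    stack = np.stack([padded[dy:dy + h, dx:dx + w]
                      for dy in range(size) for dx in range(size)])   # (n, h, w, 3)
    # Euclidean distance in RGB space between every pair of neighbors: (n, n, h, w).
    # Memory grows as n**2, which is acceptable for small neighborhoods.
    diff = stack[:, None] - stack[None, :]
    distance = np.sqrt((diff ** 2).sum(axis=-1))
    total = distance.sum(axis=1)           # summed distance from each neighbor to all others
    best = np.argmin(total, axis=0)        # (h, w): index of the color median
    rows, cols = np.indices((h, w))
    return stack[best, rows, cols]

red, blue, white = (255.0, 0.0, 0.0), (0.0, 0.0, 255.0), (255.0, 255.0, 255.0)
patch = np.array([red, red, red, red, blue, blue, blue, white, white]).reshape(3, 3, 3)
print(np.median(patch.reshape(-1, 3), axis=0))   # per-channel median: magenta, a new color
print(vector_median_rgb(patch)[1, 1])            # color median: red, present in the patch
```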

Random speckle noise is not the only type of noise defect present in images.

One other, scratches, has already been mentioned. Most affordable digital cameras

suffer from a defect in which a few detectors are inactive (dead) or their output is

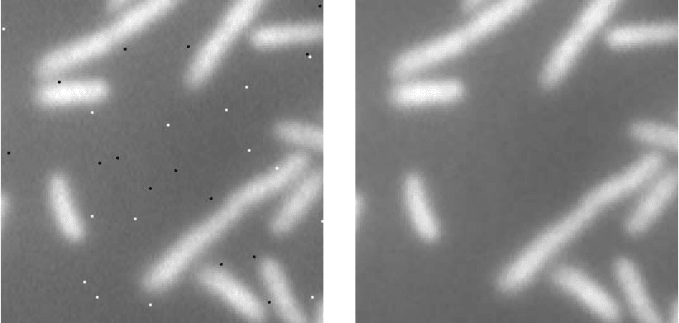

(a) (b)

FIGURE 2.32 Removal of scan line noise in AFM images: (a) original image (surface of

chocolate); (b) application of a median filter using a 5 pixel vertical neighborhood to remove

the scan line noise (top) leaving other details intact (bottom).

2241_C02.fm Page 108 Thursday, April 28, 2005 10:23 AM

Copyright © 2005 CRC Press LLC

always maximum (locked). This produces white or black values for those pixels, or

at least minimum or maximum values in one color channel. A similar problem can

arise in some types of microscopy such as AFM or interference microscopes, where

no signal is obtained at some points and the pixels are set to black. Dust on film

can produce a similar result. Generally, this type of defect is shot noise.

A smoothing filter based on the average or Gaussian is very ineffective against shot noise, because the errant extreme value is simply spread out into the surrounding pixels. Median filters, on the other hand, eliminate it easily and completely, replacing the defective pixel with the most plausible value taken from the surrounding neighborhood, as shown in Figure 2.34.
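The contrast between averaging and ranking on shot noise shows up clearly on a synthetic example (Python with NumPy; the image and the defect positions are invented for illustration). A 3 × 3 mean spreads each defect over nine pixels, while a 3 × 3 median restores the original values.

```python
import numpy as np

def neighborhood_stack(image, size=3):
    """One shifted copy of the image per pixel of a size x size neighborhood; borders replicated."""
    half = size // 2
    padded = np.pad(image.astype(float), half, mode="edge")
    h, w = image.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(size) for dx in range(size)])

clean = np.full((32, 32), 120.0)
noisy = clean.copy()
noisy[5, 5] = 255.0     # locked detector
noisy[10, 20] = 0.0     # dead detector
noisy[25, 8] = 255.0    # locked detector

mean_result = neighborhood_stack(noisy).mean(axis=0)
median_result = np.median(neighborhood_stack(noisy), axis=0)
print(np.count_nonzero(mean_result != clean))    # pixels still wrong after averaging
print(np.count_nonzero(median_result != clean))  # pixels still wrong after the median
```

Running it reports 27 wrong pixels after averaging (each of the three defects smeared over its 3 × 3 neighborhood) and none after the median.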

FIGURE 2.33 Comparison of standard and hybrid median: (a) original (noisy CSLM image of acid casein gel, courtesy of M. Faergemand, Department of Dairy and Food Science, Royal Veterinary and Agricultural University, Denmark); (b) conventional median; (c) hybrid median; (d) conditional median.


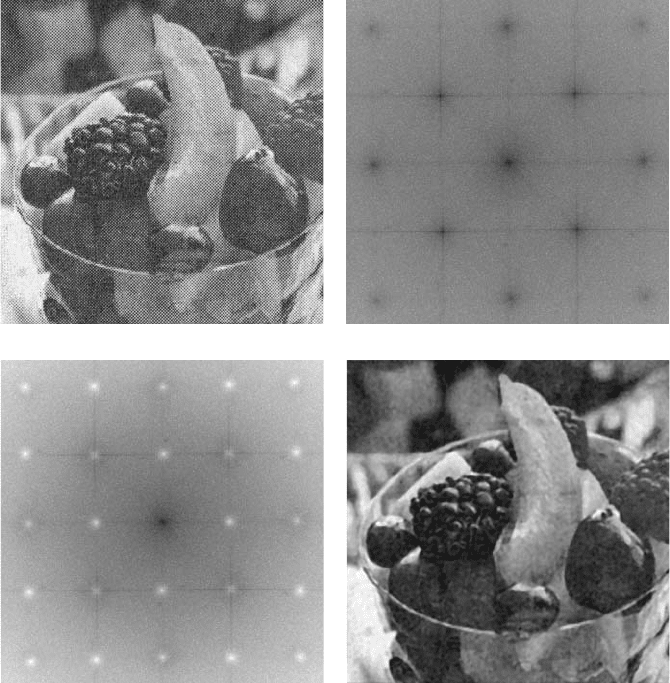

Periodic noise in images shows up as a superimposed pattern, usually of lines. It can be produced by electronic interference or vibration, and is also present in printed images such as the ones in this book, because they are printed with a halftone pattern that reproduces the image as a set of regularly spaced dots of varying size. Television viewers will recognize the moiré patterns that arise when someone wears clothing whose pattern is similar in size to the spacing of the video scan lines; this type of periodic noise introduces strange and shifting colors into the scene, and the same phenomenon can occur with digital cameras and scanners.

Periodic noise is practically always removed in frequency space. Since the noise consists of just a few, usually relatively high, frequencies, the FFT represents the noise pattern as just a few points with large amplitudes in the power spectrum. These spikes can be found either manually or automatically, using some of the techniques described in the next chapter. However they are located, reducing the amplitude of those frequencies to zero eliminates the pattern without removing any other information from the image, as shown in Figure 2.35.
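Automatic spike removal can be sketched as a threshold on the amplitude spectrum outside a protected low-frequency disk (Python with NumPy). This simple thresholding rule and its parameters `min_radius` and `rel_threshold` are assumptions standing in for the more careful spike-finding techniques of the next chapter.

```python
import numpy as np

def remove_periodic_noise(image, min_radius=8, rel_threshold=0.5):
    """Zero strong spikes outside a protected low-frequency disk, then invert the FFT."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    amplitude = np.abs(spectrum)
    h, w = image.shape
    yy, xx = np.mgrid[0:h, 0:w]
    radius = np.hypot(yy - h // 2, xx - w // 2)      # distance from the zero-frequency point
    candidates = np.where(radius >= min_radius, amplitude, 0.0)
    spikes = candidates > rel_threshold * candidates.max()
    spectrum[spikes] = 0.0
    cleaned = np.real(np.fft.ifft2(np.fft.ifftshift(spectrum)))
    return cleaned, spikes

n = 64
y, x = np.mgrid[0:n, 0:n]
scene = np.exp(-((x - 32) ** 2 + (y - 32) ** 2) / (2 * 8.0 ** 2))   # smooth "real" content
pattern = 0.3 * np.sin(2 * np.pi * 20 * x / n)                       # fine periodic noise
cleaned, spikes = remove_periodic_noise(scene + pattern)
print(spikes.sum(), np.abs(cleaned - scene).max())
```

Protecting the low frequencies matters because the real image content, including its large zero-frequency term, lives there and would otherwise be the first thing a global threshold picks up.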

Because of the way that single chip color cameras use filters to sample the colors in the image, and the way that offset printing uses different halftone grids set at different angles to reproduce color images, the periodic noise in color images is typically very different in each color channel. This requires processing each channel separately to remove the noise, and then recombining the results. It is important to select the right color channels for this purpose. For instance, RGB channels correspond to how most digital cameras record color, while CMYK channels correspond to how color images are printed.

FIGURE 2.34 Removal of shot noise: (a) original image of bacteria corrupted with random black and white pixels; (b) application of a hybrid median filter.


NONUNIFORM ILLUMINATION

One assumption that underlies nearly all steps in image processing and measurement, as well as strategies for sampling material, is that the same feature will have the same appearance wherever it happens to be positioned in an image. Nonuniform illumination violates this assumption, and may arise from a number of different causes. Some of them can be corrected in hardware if they are detected before the images are acquired, but some cannot, and we are often faced with the need to deal with previously recorded images that have existing problems.

FIGURE 2.35 Removal of periodic noise: (a) image from a newspaper showing halftone printing pattern; (b) Fourier transform power spectrum with spikes corresponding to the high frequency pattern; (c) filtering of the Fourier transform to remove the spikes; (d) result of applying an inverse Fourier transform.


Variations that are not visually apparent (because the human eye compensates automatically for gradual changes in brightness) may be detected only when the image is captured in the computer. Balancing the lighting across the entire recorded scene is difficult. Careful positioning of lights on a copy stand, use of ring lighting for macro photography, and adjustment of the condenser lens in a microscope are procedures that help to achieve uniform lighting of the sample. Capturing an image of a uniform grey card or blank slide and measuring the brightness variation is an important tool for such adjustments.

Some other problems are not normally correctable. Optics can cause vignetting (darkening of the periphery of the image) because of light absorption in the glass. Cameras may have fixed pattern noise that causes local brightness variations. Correcting variations in the brightness of illumination may leave variations in the angle or color of the illumination. And, of course, the sample itself may have local variations in density, thickness, surface flatness, and so forth, which can cause changes in brightness.

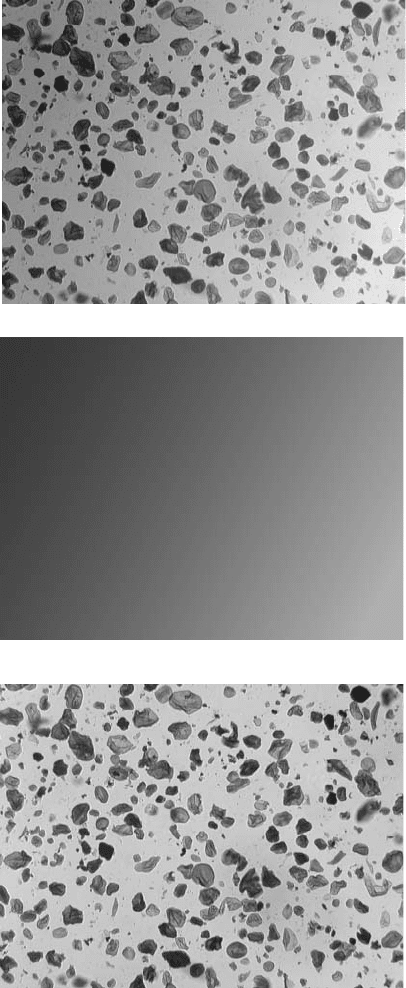

Many of the variations, other than those that are a function of the sample itself, can be corrected by capturing an image that shows just the variation. Removing the sample and recording an image of just the background, a grey card, or a blank slide or specimen stub under the same illumination provides a measure of the variation. This background image can then be subtracted from, or divided into, the image of the sample to level the brightness. The example in Figure 2.36 shows particles of cornstarch imaged in the light microscope with imperfect centering of the light source. Measuring the particle size distribution depends upon leveling the contrast so that particles can be thresholded everywhere in the image. Capturing a background image under the same illumination conditions and subtracting it from the original makes this possible.
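Leveling with a recorded background, as in Figure 2.36, can be sketched on synthetic data (Python with NumPy; the illumination gradient, particle positions and threshold are invented). Adding back the mean of the background is an assumption that simply keeps the result in a displayable range.

```python
import numpy as np

h, w = 64, 64
_, x = np.mgrid[0:h, 0:w]
background = 60.0 + 1.5 * x               # illumination brightening from left to right
particles = np.zeros((h, w), dtype=bool)
particles[10:20, 5:15] = True             # particle in the dim region
particles[40:50, 45:55] = True            # particle in the bright region
image = background + np.where(particles, 40.0, 0.0)

def level_by_subtraction(image, background):
    """Remove the recorded background; adding its mean keeps values in a usable range."""
    return image - background + background.mean()

leveled = level_by_subtraction(image, background)
threshold = background.mean() + 20.0
print(image[~particles].max() > image[particles].min())   # True: no single threshold works
print(np.array_equal(leveled > threshold, particles))      # True after leveling
```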

The choice of subtraction or division for the background depends on whether the imaging device is linear or logarithmic. Scanners are inherently linear, so that the measured pixel value is directly proportional to the light intensity. The output from most scanning microscopes is also linear, unless nonlinear gamma adjustments are made to the amplified signal. The detectors used in digital cameras are linear, but in many cases the output is converted to logarithmic to mimic the behavior of film. Photographic film responds logarithmically to light intensity, with equal increments of density corresponding to equal ratios of brightness. For linear recordings, the background is divided into the image, while for logarithmic images it is subtracted. The reason is that a linear recording is the product of the local illumination and the transmission or reflectance of the sample, so dividing cancels the illumination, and division of numbers corresponds to subtraction of their logarithms. In practice, when the response of the detector is unknown, the best advice is to try both methods and use the one that produces the best result. In the examples that follow, some backgrounds are subtracted and some are divided to produce a level result.
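The reasoning behind subtraction versus division can be checked on a simulated image (Python with NumPy; the gain, illumination profile and reflectances are hypothetical). For a linear recording the pixel value is gain × illumination × reflectance, so dividing by a blank-field image cancels the illumination. After taking logarithms the product becomes a sum, so subtraction does the same job.

```python
import numpy as np

def level_linear(image, background):
    """Linear response: divide by the background, then rescale by its mean."""
    return image / background * background.mean()

def level_log(image, background):
    """Logarithmic response: subtract the background, then add back its mean."""
    return image - background + background.mean()

# Simulated linear recording: value = gain x illumination x reflectance.
illumination = np.tile(np.linspace(0.5, 1.0, 64), (64, 1))    # falls off to the left
reflectance = np.full((64, 64), 0.4)
reflectance[20:40, 20:40] = 0.8                                 # one brighter feature
image = 200.0 * illumination * reflectance
blank = 200.0 * illumination * 1.0                              # blank slide, same lighting

divided = level_linear(image, blank)                            # right choice for linear data
subtracted = level_log(image, blank)                            # wrong choice for linear data
log_leveled = level_log(np.log(image), np.log(blank))           # same data after a log encoding

plain = reflectance == 0.4
for name, result in [("divided", divided), ("subtracted", subtracted), ("log", log_leveled)]:
    values = result[plain]
    print(name, "spread over the plain background:", values.max() - values.min())
```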

In situations where a satisfactory background image cannot be (or was not) stored along with the image of the specimen, there are several ways to construct one. In some cases, one color channel may contain little detail but still serve as a measure of the variation in illumination. Another technique that is sometimes used is to apply an extreme low pass filter (e.g., a Gaussian smooth with a large standard deviation) to the image to remove the features, leaving just the background variation.
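The heavy-smoothing approach can be sketched as below (Python with NumPy). The Gaussian is applied in frequency space with the transfer function exp(-2π²σ²f²). The reflective padding, σ = 10 pixels and the threshold of 30 are assumptions chosen for this synthetic image, in which the features are much smaller than σ.

```python
import numpy as np

def gaussian_lowpass(image, sigma):
    """Gaussian smoothing applied in frequency space, with reflective padding at the edges."""
    pad = int(3 * sigma)
    padded = np.pad(image.astype(float), pad, mode="reflect")
    fy = np.fft.fftfreq(padded.shape[0])[:, None]      # cycles per pixel
    fx = np.fft.fftfreq(padded.shape[1])[None, :]
    transfer = np.exp(-2.0 * np.pi ** 2 * sigma ** 2 * (fx ** 2 + fy ** 2))
    smoothed = np.real(np.fft.ifft2(np.fft.fft2(padded) * transfer))
    return smoothed[pad:-pad, pad:-pad]

# Small bright dots on a strongly graded background (all values invented).
n = 128
_, x = np.mgrid[0:n, 0:n]
dots = np.zeros((n, n), dtype=bool)
for row, col in [(20, 10), (64, 64), (100, 110), (30, 90)]:
    dots[row - 1:row + 2, col - 1:col + 2] = True
image = 50.0 + 1.0 * x + np.where(dots, 60.0, 0.0)

estimate = gaussian_lowpass(image, sigma=10.0)   # features are far smaller than sigma
leveled = image - estimate
print(image[~dots].max() > image[dots].min())    # True: a global threshold fails
print(np.array_equal(leveled > 30.0, dots))      # True once the estimate is removed
```

Near the image borders the smoothed estimate is less accurate (here it is off by several grey levels at the left and right edges), which is one reason a recorded background is preferable when it can be obtained.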

This method is based on the assumptions that the features are small compared to


FIGURE 2.36 Leveling contrast with a recorded background image: (a) original image of cornstarch particles with nonuniform illumination; (b) image of blank slide captured with same illumination; (c) subtraction of background from original.
