Klir G.J. Uncertainty and Information. Foundations of Generalized Information Theory


$$B\big(q(x) \mid x \in [a, b]\big) = -\int_a^b q(x)\log_2 q(x)\,dx, \tag{3.49}$$

where q denotes a probability density function on the interval [a, b] of real numbers, could be viewed as the counterpart of the Shannon entropy in the domain of real numbers. Indeed, the form of this functional, usually referred to as a Boltzmann entropy or a differential entropy, is analogous to the form of the Shannon entropy. The former is obtained from the latter by replacing summation with integration and a probability distribution function with a probability density function. Notwithstanding this analogy, the following question cannot be avoided: Is the Boltzmann entropy a genuine counterpart of the Shannon entropy? To answer this nontrivial question, we must establish a connection between the two functionals.

Let q be a probability density function on the interval [a, b] of real numbers. That is, q(x) ≥ 0 for all x ∈ [a, b] and

$$\int_a^b q(x)\,dx = 1. \tag{3.50}$$

Consider a sequence of probability distributions ${}^n p = \langle {}^n p_1, {}^n p_2, \ldots, {}^n p_n \rangle$ such that

$${}^n p_i = \int_{x_{i-1}}^{x_i} q(x)\,dx \tag{3.51}$$

for every $n \in \mathbb{N}$, where

$$x_i = a + i\,\frac{b-a}{n}$$

for each $i \in \mathbb{N}_n$, and $x_0 = a$ by convention. For convenience, let

$$\Delta_n = \frac{b-a}{n},$$

so that

$$x_i = a + i\,\Delta_n.$$

For each probability distribution ${}^n p = \langle {}^n p_1, {}^n p_2, \ldots, {}^n p_n \rangle$, let ${}^n d(x)$ denote a probability density function on [a, b] such that

$${}^n d(x) = {}^n d_i(x), \quad i \in \mathbb{N}_n,$$

where

$${}^n d_i(x) = \frac{{}^n p_i}{\Delta_n} \quad \text{for } x \in [x_{i-1}, x_i) \tag{3.52}$$

for all $i \in \mathbb{N}_n$. Then, due to the continuity of q(x), the sequence $\langle {}^n d(x) \mid n \in \mathbb{N} \rangle$ converges to q(x) uniformly on [a, b].

Given the probability distribution ${}^n p$ for some $n \in \mathbb{N}$, its Shannon entropy is

$$S({}^n p) = -\sum_{i=1}^{n} {}^n p_i \log_2 {}^n p_i$$

or, using the introduced probability density function ${}^n d$,

$$S({}^n p) = -\sum_{i=1}^{n} \big[{}^n d_i(x)\,\Delta_n\big] \log_2 \big[{}^n d_i(x)\,\Delta_n\big].$$

This equation can be modified as follows:

$$\begin{aligned}
S({}^n p) &= -\sum_{i=1}^{n} \big[{}^n d_i(x) \log_2 {}^n d_i(x)\big]\Delta_n - \sum_{i=1}^{n} {}^n d_i(x)\,\Delta_n \log_2 \Delta_n \\
&= -\sum_{i=1}^{n} \big[{}^n d_i(x) \log_2 {}^n d_i(x)\big]\Delta_n - \log_2 \Delta_n \sum_{i=1}^{n} {}^n p_i .
\end{aligned}$$

Since the probabilities ${}^n p_i$ of the distribution ${}^n p$ must add to one, and by the definition of $\Delta_n$, we obtain

$$S({}^n p) = -\sum_{i=1}^{n} \big[{}^n d_i(x) \log_2 {}^n d_i(x)\big]\Delta_n + \log_2 \frac{n}{b-a}. \tag{3.53}$$

When $n \to \infty$ (or $\Delta_n \to 0$), we have

$$\lim_{n \to \infty} \left( -\sum_{i=1}^{n} \big[{}^n d_i(x) \log_2 {}^n d_i(x)\big]\Delta_n \right) = -\int_a^b q(x) \log_2 q(x)\,dx$$

according to the introduced relation among ${}^n p$, q(x), and ${}^n d_i(x)$, in particular Eqs. (3.51) and (3.52). Equation (3.53) can thus be written for $n \to \infty$ as

$$\lim_{n \to \infty} S({}^n p) = B\big(q(x) \mid x \in [a, b]\big) + \lim_{n \to \infty} \log_2 \frac{n}{b-a}. \tag{3.54}$$

The last term in this equation clearly diverges to +∞. This means that the Boltzmann entropy is not a limit of the Shannon entropy for $n \to \infty$ and, consequently, it is not a measure of uncertainty and information.
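The construction in Eqs. (3.51) through (3.54) can be checked numerically. The sketch below is a minimal illustration under stated assumptions: the density q(x) = 2x on [0, 1] (CDF x², Boltzmann entropy −1 + 1/(2 ln 2), about −0.279 bits) is a hypothetical example, and the helper names `cell_probs`, `step_density`, and `shannon` are introduced only here. It shows that S(ⁿp) itself grows without bound, while S(ⁿp) − log₂(n/(b − a)) settles toward B(q).

```python
import math
from typing import Callable, List


def cell_probs(cdf: Callable[[float], float], a: float, b: float, n: int) -> List[float]:
    """Eq. (3.51): probability of each of the n equal cells of [a, b], via the CDF of q."""
    delta = (b - a) / n
    return [cdf(a + (i + 1) * delta) - cdf(a + i * delta) for i in range(n)]


def step_density(cdf: Callable[[float], float], a: float, b: float, n: int) -> List[float]:
    """Eq. (3.52): constant value of the step density n_d on each cell."""
    delta = (b - a) / n
    return [p_i / delta for p_i in cell_probs(cdf, a, b, n)]


def shannon(p: List[float]) -> float:
    """Shannon entropy in bits; zero probabilities contribute nothing."""
    return -sum(v * math.log2(v) for v in p if v > 0)


# Hypothetical example density q(x) = 2x on [a, b] = [0, 1]; its CDF is x**2.
A, B_END = 0.0, 1.0
CDF = lambda x: x * x
# Closed form: -∫_0^1 2x log2(2x) dx = -1 + 1/(2 ln 2), a negative number.
BOLTZMANN = -1.0 + 1.0 / (2.0 * math.log(2.0))

for n in (10, 100, 1000, 10000):
    s = shannon(cell_probs(CDF, A, B_END, n))
    correction = math.log2(n / (B_END - A))  # last term of Eq. (3.53)
    print(f"n={n:>5}  S={s:7.4f}  S - log2(n/(b-a))={s - correction:7.4f}")
print(f"B(q) = {BOLTZMANN:.4f}")
```

For this linear q, each step value ⁿdᵢ equals q at the midpoint of its cell, so the bracketed sum in Eq. (3.53) is a midpoint Riemann sum; that is why the corrected values approach B(q) quickly.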


The discrepancy between the Shannon and Boltzmann entropies can be reconciled in a modified form of the Boltzmann entropy,

$$\hat{B}\big(q(x), r(x) \mid x \in [a, b]\big) = \int_a^b q(x) \log_2 \frac{q(x)}{r(x)}\,dx, \tag{3.55}$$

which involves two probability density functions, q(x) and r(x), defined on [a, b]. If only q is given, it is convenient to use

$$r(x) = \frac{1}{b-a},$$

which is the probability density function corresponding to the uniform probability distribution on [a, b]. The finite counterpart of $\hat{B}$ is the functional

$$\hat{S}\big(p(x), p'(x) \mid x \in X\big) = \sum_{x \in X} p(x) \log_2 \frac{p(x)}{p'(x)}. \tag{3.56}$$

This functional, which is known in classical information theory as a cross-entropy or a directed divergence, measures uncertainty in relative rather than absolute terms. When p′ is the uniform probability distribution function (p′(x) = 1/|X| for all x ∈ X), then

$$\hat{S}\big(p(x), p'(x) \mid x \in X\big) = \log_2 |X| - S\big(p(x) \mid x \in X\big).$$

In this special form, $\hat{S}$ clearly measures the amount of information carried by function p with respect to total ignorance (expressed in probabilistic terms).

When q(x) in Eq. (3.55) is replaced with a density function, q(x, y), of a joint probability distribution on X × Y, and r(x) is replaced with the product of density functions of marginal distributions on X and Y, q_X(x)·q_Y(y), $\hat{B}$ becomes the continuous counterpart of the information transmission given by Eq. (3.34). This means that the continuous counterpart, T_B, of the information transmission can be expressed as

$$T_B\big(q(x, y), q_X(x) \cdot q_Y(y) \mid x \in [a, b], y \in [c, d]\big) = \int_a^b \int_c^d q(x, y) \log_2 \frac{q(x, y)}{q_X(x) \cdot q_Y(y)}\,dx\,dy. \tag{3.57}$$

It is well established that this functional is finite when the functions q, q_X, and q_Y are continuous; it is always nonnegative, and it is invariant under (nonsingular) linear transformations of the variables.
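The finite functional of Eq. (3.56), its special form for a uniform p′, and the discrete analogue of Eq. (3.57) can be sketched in a few lines. This is a minimal illustration, assuming distributions are given as Python dictionaries; the helper names `directed_divergence` and `transmission` and the example distributions are hypothetical. The transmission is computed exactly as the text describes it: the divergence of the joint distribution from the product of its marginals.

```python
import math
from typing import Dict, Hashable, Mapping


def shannon(p: Mapping[Hashable, float]) -> float:
    """Shannon entropy in bits of a distribution given as {outcome: probability}."""
    return -sum(v * math.log2(v) for v in p.values() if v > 0)


def directed_divergence(p: Mapping[Hashable, float], p_prime: Mapping[Hashable, float]) -> float:
    """Eq. (3.56): sum of p(x) log2(p(x) / p'(x)) over x with p(x) > 0.

    Returns infinity if p puts mass on an outcome where p' has none.
    """
    total = 0.0
    for x, px in p.items():
        if px > 0:
            qx = p_prime.get(x, 0.0)
            if qx == 0.0:
                return math.inf
            total += px * math.log2(px / qx)
    return total


def transmission(joint: Mapping[tuple, float]) -> float:
    """Discrete analogue of Eq. (3.57): divergence of p(x, y) from p_X(x) * p_Y(y)."""
    px: Dict[Hashable, float] = {}
    py: Dict[Hashable, float] = {}
    for (x, y), v in joint.items():
        px[x] = px.get(x, 0.0) + v
        py[y] = py.get(y, 0.0) + v
    product = {(x, y): px[x] * py[y] for x in px for y in py}
    return directed_divergence(joint, product)


# Usage with hypothetical distributions.
p = {"a": 0.5, "b": 0.25, "c": 0.25}
uniform = {x: 1 / len(p) for x in p}
print(directed_divergence(p, uniform), math.log2(len(p)) - shannon(p))  # equal up to rounding
print(transmission({(0, 0): 0.5, (1, 1): 0.5}))  # two perfectly dependent binary variables
```

The same function also satisfies the standard identity T = S(X) + S(Y) − S(X × Y), which gives a convenient cross-check.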


NOTES

3.1. As is well described by Hacking [1975], the concept of numerical probability emerged in the mid-17th century. However, its adequate formalization was achieved only in the 20th century by Kolmogorov [1950]. This formalization is based on the classical measure theory [Halmos, 1950]. The literature dealing with probability theory and its applications is copious. Perhaps the most comprehensive study of foundations of probability was made by Fine [1973]. Among the enormous number of other books published on the subject, it makes sense to mention just a few that seem to be significant in various respects: Billingsley [1986], De Finetti [1974, 1975], Feller [1950, 1966], Gnedenko [1962], Jaynes [2003], Jeffreys [1939], Reichenbach [1949], Rényi [1970a, b], Savage [1972].

3.2. A justified way of measuring uncertainty and uncertainty-based information in probability theory was established in a series of papers by Shannon [1948]. These papers, which are also reprinted in the small book by Shannon and Weaver [1949], opened a way for developing the classical probability-based information theory. Among the many books providing general coverage of the theory, particularly notable are classical books by Ash [1965], Billingsley [1965], Csiszár and Körner [1981], Feinstein [1958], Goldman [1953], Guiasu [1977], Jelinek [1968], Jones [1979], Khinchin [1957], Kullback [1959], Martin and England [1981], Reza [1961], and Yaglom and Yaglom [1983], as well as more recent books by Blahut [1987], Cover and Thomas [1991], Gray [1990], Ihara [1993], Kåhre [2002], Mansuripur [1987], and Yeung [2002]. The role of information theory in science is well described in books by Brillouin [1956, 1964] and Watanabe [1969]. Other books focus on more specific areas, such as economics [Batten, 1983; Georgescu-Roegen, 1971; Theil, 1967], engineering [Bell, 1953; Reza, 1961], chemistry [Eckschlager, 1979], biology [Gatlin, 1972], psychology [Attneave, 1959; Garner, 1962; Quastler, 1955; Weltner, 1973], geography [Webber, 1979], and other areas [Hyvärinen, 1968; Kogan, 1988; Moles, 1966; Yu, 1976]. Useful resources for major papers on classical information theory that were published in the 20th century are the books edited by Slepian [1974] and Verdú and McLaughlin [2000]. Claude Shannon's contributions to classical information theory are well documented in [Sloane and Wyner, 1993]. Most current contributions to classical information theory are published in the IEEE Transactions on Information Theory. Some additional books on classical information theory, not listed here, are included in the Bibliography.

3.3. Various subsets of the axioms for a probabilistic measure of uncertainty that are presented in Section 3.2.2 were shown to be sufficient for providing the uniqueness of Shannon entropy by Feinstein [1958], Forte [1975], Khinchin [1957], Rényi [1970b], and others. The uniqueness proof presented as Theorem 3.1 is adopted from a book by Ash [1965]. Excellent overviews of the various axiomatic treatments of Shannon entropy can be found in books by Aczél and Daróczy [1975], Ebanks et al. [1997], and Mathai and Rathie [1975]. All these books are based heavily on the use of functional equations. An excellent and comprehensive monograph on functional equations was prepared by Aczél [1966].

3.4. Several classes of functionals that subsume the Shannon entropy as a special case have been proposed and studied. They include:


1. Rényi entropies (also called entropies of degree α), which are defined for all real numbers α ≠ 1 by the formula

$$H_\alpha(p_1, p_2, \ldots, p_n) = \frac{1}{1-\alpha} \log_2 \sum_{i=1}^{n} p_i^{\alpha}. \tag{3.58}$$

It is well known that the limit of H_α for α → 1 is the Shannon entropy. For α = 0, we obtain

$$H_0(p_1, p_2, \ldots, p_n) = \log_2 \sum_{i=1}^{n} p_i^{0}.$$

This functional represents one of the probabilistic interpretations of Hartley information as a measure that is insensitive to actual values of the given probabilities and distinguishes only between zero and nonzero probabilities (with the convention 0⁰ = 0, the sum counts the nonzero probabilities). As the name suggests, Rényi entropies were proposed and investigated by Rényi [1970b].

2. Entropies of order β, introduced by Daróczy [1970], which have the form

$$H_\beta(p_1, p_2, \ldots, p_n) = \frac{1}{2^{1-\beta} - 1} \left( \sum_{i=1}^{n} p_i^{\beta} - 1 \right) \tag{3.59}$$

for all β ≠ 1. As in the case of Rényi entropies, the limit of H_β for β → 1 results in the Shannon entropy.

3. R-norm entropies, which are defined for all R ≠ 1 by the functional

$$H_R(p_1, p_2, \ldots, p_n) = \frac{R}{R-1} \left[ 1 - \left( \sum_{i=1}^{n} p_i^{R} \right)^{1/R} \right]. \tag{3.60}$$

As in the other two classes of functionals, the limit of H_R for R → 1 is the Shannon entropy. This class of functionals was proposed by Boekee and Van der Lubbe [1980] and was further investigated by Van der Lubbe [1984].

Formulas converting entropies from one class to other classes are well known. Conversion formulas between H_α and H_β were derived by Aczél and Daróczy [1975, p. 185]; formulas for converting H_α and H_β into H_R were derived by Boekee and Van der Lubbe [1980, p. 144].

Except for the Shannon entropy, these classes of functionals are not adequate measures of uncertainty, since each of them violates some essential requirement for such a measure. For example, when α, β, R > 0, Rényi entropies violate subadditivity, entropies of order β violate additivity, and R-norm entropies violate both subadditivity and additivity. The significance of these functionals in the context of information theory is thus primarily theoretical, as they help us better understand the Shannon entropy as a limiting case in these classes of functionals. Strong arguments supporting this claim can be found in papers by Aczél, Forte, and Ng [1974] and Forte [1975].
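The three families of Eqs. (3.58) through (3.60) and their limiting behavior can be compared numerically. The sketch below is a minimal illustration, assuming a hypothetical distribution (0.5, 0.25, 0.25, 0) and approximating each limit by evaluating at a parameter of 1 + 10⁻⁶; the function names are hypothetical. One detail the sketch makes explicit: because Eq. (3.60) contains no base-2 logarithm, the R → 1 limit of the R-norm entropy is the Shannon entropy measured with the natural logarithm (in nats), so it is compared against that value.

```python
import math
from typing import Sequence


def shannon(p: Sequence[float]) -> float:
    """Shannon entropy in bits."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def renyi(p: Sequence[float], alpha: float) -> float:
    """Eq. (3.58), alpha != 1. Zero probabilities are skipped (0**0 taken as 0)."""
    return math.log2(sum(x ** alpha for x in p if x > 0)) / (1 - alpha)


def daroczy(p: Sequence[float], beta: float) -> float:
    """Eq. (3.59), entropy of order beta, beta != 1."""
    return (sum(x ** beta for x in p if x > 0) - 1) / (2 ** (1 - beta) - 1)


def r_norm(p: Sequence[float], r: float) -> float:
    """Eq. (3.60), R-norm entropy, R != 1; its R -> 1 limit is the Shannon entropy in nats."""
    return r / (r - 1) * (1 - sum(x ** r for x in p if x > 0) ** (1 / r))


p = [0.5, 0.25, 0.25, 0.0]  # hypothetical distribution
eps = 1e-6
print(shannon(p), renyi(p, 1 + eps), daroczy(p, 1 + eps))  # all close to 1.5 bits
print(shannon(p) * math.log(2), r_norm(p, 1 + eps))        # close to each other, in nats
print(renyi(p, 0.0), math.log2(3))                          # H_0 counts the nonzero p_i
```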


3.5. Using Theorems 3.3 through 3.5 as a base, numerous theorems regarding the relationship among the information transmission and basic, conditional, and joint Shannon entropies can be derived by simple algebraic manipulations and by mathematical induction to obtain generalizations. Conant [1981] offers some useful ideas in this regard. A good summary of practical theorems for Shannon entropy was prepared by Ashby [1969]. Ashby [1965, 1970, 1972] and Conant [1976, 1988] also demonstrated the utility of these theorems for analyzing complex systems.

3.6. An excellent examination of the difference between Shannon and Boltzmann entropy is made by Reza [1961]. This issue is also discussed by Ash [1965], Guiasu [1977], Jones [1979], and Ihara [1993]. The origin of the concept of entropy in physics is discussed in detail by Fast [1962]; see also an important early paper by Elsasser [1937].

3.7. Guiasu [1977, Chapter 4] introduced and studied a generalization of the Shannon entropy to the weighted Shannon entropy,

$$WS\big(p(x), w(x) \mid x \in X\big) = -\sum_{x \in X} w(x)\,p(x) \log_2 p(x), \tag{3.61}$$

where w(x) are nonnegative numbers that are called weights. For each alternative x ∈ X, the weight w(x) characterizes the importance (or utility) of the alternative in a given application context. Guiasu showed that the functional WS possesses the following properties:

• WS(p(x), w(x) | x ∈ X) ≥ 0, where the equality is obtained iff p(x) = 1 for one particular x ∈ X.
• WS is subadditive and additive.
• The maximum of WS,

$$WS_{\max} = \max_{p}\, WS\big(p(x), w(x) \mid x \in X\big), \tag{3.62}$$

is reached when $p(x) = e^{(1-\lambda)/w(x)}$ for all x ∈ X, where λ is determined by the equation

$$\sum_{x \in X} e^{(1-\lambda)/w(x)} = 1. \tag{3.63}$$

EXERCISES

3.1. For each of the probability distributions in Table 3.3, calculate the following:

(a) All conditional uncertainties;

(b) Information transmissions for all partitions of the set of variables;

(c) The reduction of uncertainty (information) with respect to the uniform probability distribution;

(d) Normalized counterparts of results obtained in parts (a), (b), (c).


3.2. Repeat Example 3.5 using the following assumptions:

(a) The state at time t is $x_2$;

(b) The state at time t is $x_3$;

(c) The probability distribution at time t is ${}^t p(x_1) = 0$, ${}^t p(x_2) = 0.6$, ${}^t p(x_3) = 0.4$.

3.3. Consider a system with state set $X = \{x_1, x_2, x_3, x_4\}$ whose transitions from present states to next states are characterized by the state-transition matrix of conditional probabilities specified in Table 3.4. Assuming that the initial probability distribution on the states (at time t) is ${}^t p(x_1) = 0.5$, ${}^t p(x_2) = 0.3$, ${}^t p(x_3) = 0.2$, ${}^t p(x_4) = 0$, determine the following:

(a) Uncertainties in predicting states at time t = 2, 3, 4;

(b) Uncertainty in predicting sequences of states of lengths 2, 3, 4;

(c) Associated normalized uncertainties for parts (a) and (b);

(d) Associated amounts of information contained in the system with respect to questions regarding predictions in parts (a) and (b).

3.4. Repeat Exercise 3.3 for some other probability distributions on the states at time t.

3.5. Use some additional branching in Example 3.6 to calculate the value of the Shannon entropy for the given probability distributions, for example:

(a) $p_A = p_1 + p_4$, $p_B = p_2 + p_3$;

(b) $p_A = p_1 + p_2 + p_4$, $p_B = p_3$;

(c) Scheme IV in which $p_1$ is exchanged with $p_4$ and $p_2$ is exchanged with $p_3$.
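The branching (grouping) property that Exercise 3.5 relies on can be checked numerically: the entropy of choosing a group first and then an outcome within the chosen group equals the entropy of the original distribution. The sketch below is a minimal illustration; the distribution (0.1, 0.2, 0.3, 0.4) and the helper name `branched_entropy` are hypothetical, and Python's 0-based indices 0 to 3 stand for p₁ to p₄.

```python
import math
from typing import List, Sequence


def shannon(p: Sequence[float]) -> float:
    """Shannon entropy in bits."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def branched_entropy(p: Sequence[float], groups: List[List[int]]) -> float:
    """Two-stage entropy: choose a group first, then an outcome within the chosen group.

    `groups` must partition the indices of p (0-based, so index 0 stands for p_1).
    """
    totals = [sum(p[i] for i in g) for g in groups]
    first_stage = shannon(totals)
    second_stage = sum(
        t * shannon([p[i] / t for i in g]) for t, g in zip(totals, groups) if t > 0
    )
    return first_stage + second_stage


p = [0.1, 0.2, 0.3, 0.4]  # hypothetical p_1..p_4
print(shannon(p))
print(branched_entropy(p, [[0, 3], [1, 2]]))  # (a) p_A = p_1 + p_4, p_B = p_2 + p_3
print(branched_entropy(p, [[0, 1, 3], [2]]))  # (b) p_A = p_1 + p_2 + p_4, p_B = p_3
```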


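Table 3.3. Probability Distributions in Exercise 3.1

| x | y | z | $p_1(x, y, z)$ | $p_2(x, y, z)$ | $p_3(x, y, z)$ | $p_4(x, y, z)$ |
|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0.30 | 0.00 | 0.10 | 0.10 |
| 0 | 0 | 1 | 0.00 | 0.00 | 0.00 | 0.00 |
| 0 | 1 | 0 | 0.00 | 0.25 | 0.00 | 0.20 |
| 0 | 1 | 1 | 0.20 | 0.25 | 0.30 | 0.00 |
| 1 | 0 | 0 | 0.00 | 0.25 | 0.40 | 0.30 |
| 1 | 0 | 1 | 0.10 | 0.25 | 0.00 | 0.00 |
| 1 | 1 | 0 | 0.30 | 0.00 | 0.00 | 0.40 |
| 1 | 1 | 1 | 0.10 | 0.00 | 0.20 | 0.00 |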
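Several of the quantities asked for in Exercise 3.1 follow from marginal entropies of Table 3.3 via the standard identities S(A | B) = S(A × B) − S(B) and T(A, B) = S(A) + S(B) − S(A × B). The sketch below is a minimal illustration of that bookkeeping, not a worked solution; the names `STATES`, `TABLE`, `marginal`, `S`, `conditional`, and `transmission` are hypothetical, and variables are addressed by position (0 = x, 1 = y, 2 = z).

```python
import math
from itertools import product
from typing import Dict, List, Sequence, Tuple

# States (x, y, z) in the row order of Table 3.3: 000, 001, 010, ..., 111.
STATES: List[Tuple[int, ...]] = list(product((0, 1), repeat=3))

# Columns p1..p4 of Table 3.3, listed in the same row order.
TABLE: Dict[str, List[float]] = {
    "p1": [0.30, 0.00, 0.00, 0.20, 0.00, 0.10, 0.30, 0.10],
    "p2": [0.00, 0.00, 0.25, 0.25, 0.25, 0.25, 0.00, 0.00],
    "p3": [0.10, 0.00, 0.00, 0.30, 0.40, 0.00, 0.00, 0.20],
    "p4": [0.10, 0.00, 0.20, 0.00, 0.30, 0.00, 0.40, 0.00],
}


def entropy(dist: Dict[tuple, float]) -> float:
    """Shannon entropy in bits."""
    return -sum(v * math.log2(v) for v in dist.values() if v > 0)


def marginal(column: Sequence[float], axes: Tuple[int, ...]) -> Dict[tuple, float]:
    """Marginal distribution on the variables at positions `axes`."""
    out: Dict[tuple, float] = {}
    for state, v in zip(STATES, column):
        key = tuple(state[k] for k in axes)
        out[key] = out.get(key, 0.0) + v
    return out


def S(column: Sequence[float], axes: Tuple[int, ...]) -> float:
    """Shannon entropy of the marginal on `axes`."""
    return entropy(marginal(column, axes))


def conditional(column, target: Tuple[int, ...], given: Tuple[int, ...]) -> float:
    """S(target | given) = S(target x given) - S(given)."""
    both = tuple(sorted(set(target) | set(given)))
    return S(column, both) - S(column, given)


def transmission(column, left: Tuple[int, ...], right: Tuple[int, ...]) -> float:
    """T(left, right) = S(left) + S(right) - S(left x right), for disjoint groups."""
    return S(column, left) + S(column, right) - S(column, tuple(sorted(left + right)))


for name, column in TABLE.items():
    print(name,
          round(conditional(column, (2,), (0, 1)), 4),   # S(Z | X x Y)
          round(transmission(column, (0,), (1, 2)), 4),  # T(X, Y x Z)
          round(math.log2(8) - S(column, (0, 1, 2)), 4))  # information w.r.t. the uniform distribution
```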

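Table 3.4. State-Transition Matrix in Exercise 3.3

| | ${}^{t+1}x_1$ | ${}^{t+1}x_2$ | ${}^{t+1}x_3$ | ${}^{t+1}x_4$ |
|---|---|---|---|---|
| ${}^{t}x_1$ | 0.2 | 0.0 | 0.8 | 0.0 |
| ${}^{t}x_2$ | 0.0 | 0.0 | 0.0 | 1.0 |
| ${}^{t}x_3$ | 0.0 | 0.9 | 0.0 | 0.1 |
| ${}^{t}x_4$ | 0.5 | 0.3 | 0.2 | 0.0 |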
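The mechanics behind Exercise 3.3 can be sketched as follows: the state distribution is propagated through the matrix of Table 3.4 one step at a time, and the uncertainty of a sequence of states is the Shannon entropy of the joint distribution over all sequences. This is a minimal sketch under an assumption: it reads "sequences of length k" as k consecutive states, the first distributed according to the initial distribution at time t. The function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Table 3.4: row i is state x_(i+1) at time t, column j is state x_(j+1) at time t + 1.
M: List[List[float]] = [
    [0.2, 0.0, 0.8, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.9, 0.0, 0.1],
    [0.5, 0.3, 0.2, 0.0],
]


def shannon(p: Sequence[float]) -> float:
    """Shannon entropy in bits."""
    return -sum(v * math.log2(v) for v in p if v > 0)


def step(p: Sequence[float], m: Sequence[Sequence[float]]) -> List[float]:
    """One transition: p'(x_j) = sum over i of p(x_i) * m[i][j]."""
    return [sum(p[i] * m[i][j] for i in range(len(p))) for j in range(len(m[0]))]


def state_uncertainties(p0: Sequence[float], m, steps: int) -> List[float]:
    """Entropies of the state distributions 1, 2, ..., `steps` transitions after p0."""
    out, p = [], list(p0)
    for _ in range(steps):
        p = step(p, m)
        out.append(shannon(p))
    return out


def sequence_uncertainty(p0: Sequence[float], m, length: int) -> float:
    """Entropy of the joint distribution of `length` consecutive states, the first drawn from p0."""
    seqs: Dict[Tuple[int, ...], float] = {(i,): pi for i, pi in enumerate(p0) if pi > 0}
    for _ in range(length - 1):
        extended: Dict[Tuple[int, ...], float] = {}
        for seq, prob in seqs.items():
            for j, t in enumerate(m[seq[-1]]):
                if t > 0:
                    extended[seq + (j,)] = prob * t
        seqs = extended
    return shannon(list(seqs.values()))


p0 = [0.5, 0.3, 0.2, 0.0]  # initial distribution given in Exercise 3.3
print(state_uncertainties(p0, M, 3))
print([sequence_uncertainty(p0, M, k) for k in (2, 3, 4)])
```

Enumerating sequences explicitly keeps the sketch transparent; for long sequences, the chain rule (entropy of the first state plus the expected row entropies at each later step) gives the same value without enumeration.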

3.6. Derive the generalized form Eq. (3.37) of Eq. (3.35) in Theorem 3.3.

3.7. Derive the generalized form Eq. (3.39) of Eq. (3.38) in Theorem 3.4.

3.8. The so-called Q-factor, which is defined by the equation

is often used in classical information theory. Express Q(X ¥ Y ¥ Z) solely

in terms of the various information transmissions.

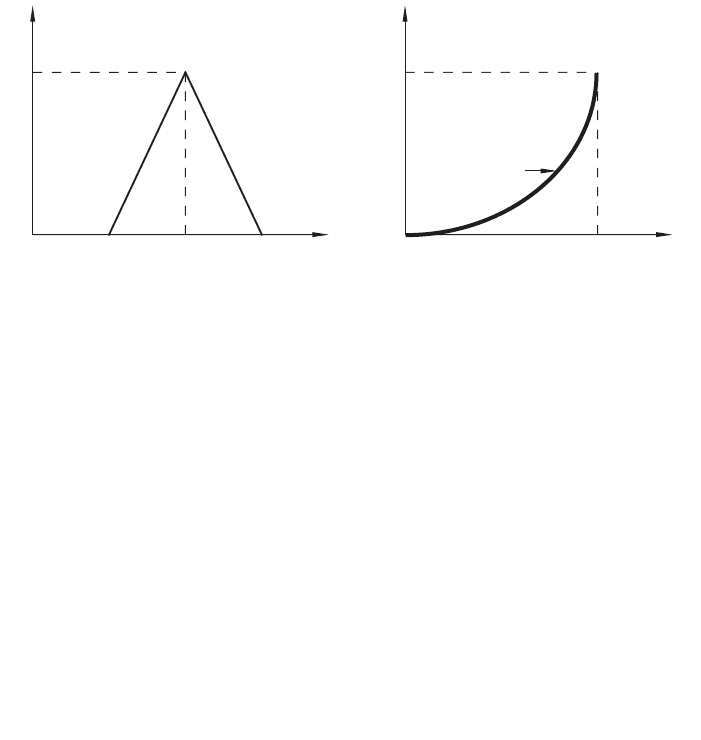

3.9. For each of the probability density functions q(x) shown in Figure 3.7,

calculate the Boltzmann entropy and demonstrate that it is negative,

zero, or positive, depending on the values of a, b, c.Note: Remember

that the condition

must be satisfied.

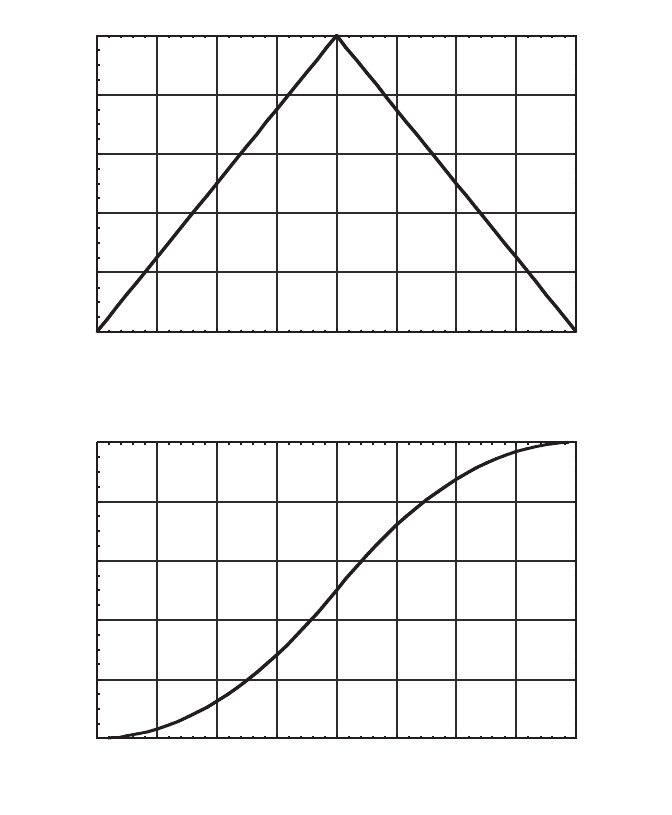

3.10. Let the graphs in Figure 3.8a and 3.8b represent, respectively, a proba-

bility density function q and the associated probability distribution func-

tion p. Determine mathematical definitions of both these functions.

3.11. Consider variables X, Y whose states are in sets X, Y, respectively, and

that are characterized by joint probabilities for all ·x, yÒŒX ¥ Y. Show

that:

(a) X is independent of Y iff Y is independent of X;

(b) X and Y are noninteractive iff X and Y are independent of one

another.

3.12. Calculate the posterior probability Pro(A | B) in Example 3.1 under the

following assumptions:

qx dx

a

b

()

=

Ú

1

QX Y Z SX SY SZ SX Y

SX Z SY Z SX Y Z

¥¥

()

=

()

+

()

+

()

-¥

()

-¥

()

-¥

()

+¥¥

()

,

EXERCISES 99

a

b

c

x

0

q(x)

q(x)

q(b)

x

0 (= a)

b

cx

2

Figure 3.7. Probability density functions in Exercise 3.9.

(a) The outcome of the TST test is negative;

(b) The reliability of the TST test is higher; Pro(B |A) = 0.999 and

Pro(B|A

¯

) = 0.02;

(c) the reliability of the TST test is lower: Pro(B |A) = 0.8 and

Pro(B|A

¯

) = 0.15.
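Exercise 3.9 turns on the fact that the Boltzmann entropy of Eq. (3.49), unlike the Shannon entropy, can be negative. A minimal sketch approximates the integral with the midpoint rule and applies it to a hypothetical uniform density q(x) = 1/c on [0, c]. This density is not one of those in Figure 3.7; it is chosen only because it satisfies the normalization condition and has the closed form B = log₂ c, which is negative for c < 1, zero for c = 1, and positive for c > 1. The function name `boltzmann` is hypothetical.

```python
import math
from typing import Callable


def boltzmann(q: Callable[[float], float], a: float, b: float, n: int = 10_000) -> float:
    """Midpoint-rule approximation of Eq. (3.49): B = -∫_a^b q(x) log2 q(x) dx.

    Points where q(x) = 0 contribute nothing, matching the convention 0 log 0 = 0.
    """
    h = (b - a) / n
    total = 0.0
    for i in range(n):
        v = q(a + (i + 0.5) * h)
        if v > 0:
            total -= v * math.log2(v) * h
    return total


for c in (0.5, 1.0, 2.0):
    # Hypothetical uniform density on [0, c]; it integrates to 1 as required.
    b_value = boltzmann(lambda x, c=c: 1.0 / c, 0.0, c)
    print(f"c={c}: B={b_value:+.6f}  (log2 c = {math.log2(c):+.6f})")
```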

Figure 3.8. Illustration to Exercise 3.10: (a) q(x) plotted against x; (b) p(x) plotted against x (horizontal axes from 0 to 2, vertical axes from 0 to 1).

4 GENERALIZED MEASURES AND IMPRECISE PROBABILITIES

An educated mind is satisfied with the degree of precision that the nature of the subject admits and does not seek exactness where only approximation is possible.

—Aristotle

4.1. MONOTONE MEASURE

The term “classical information theory” is used in the literature, by and large, to refer to the theory based on the notion of probability (Chapter 3). Uncertainty functions in this theory are expressed in terms of classical measure theory, which, in turn, is formalized in terms of classical set theory. Generalizing the concept of a classical measure is thus one way of enlarging the framework for a broader treatment of the concept of uncertainty and the associated concept of uncertainty-based information. The purpose of this chapter is to discuss this generalization. Further enlargement of the framework, which is discussed in Chapter 8, is obtained by fuzzifications of classical as well as generalized measures. Basic characteristics of both these generalizations are depicted in Figure 4.1.

Given a universal set X and a nonempty family C of subsets of X with an appropriate algebraic structure (e.g., a σ-algebra), a classical measure, μ, is a set function of the form

Uncertainty and Information: Foundations of Generalized Information Theory, by George J. Klir

© 2006 by John Wiley & Sons, Inc.