Klir G.J. Uncertainty and Information. Foundations of Generalized Information Theory


3.1.1. Functions on Finite Sets

As in the case of possibilistic uncertainty, consider first a finite set X of mutually exclusive alternatives (predictions, diagnoses, etc.) that are of concern to us in a given application context. In general, alternatives in set X may be viewed as states of a variable X. Only one of the alternatives is true, but we are not certain which one it is. In probability theory, this uncertainty about the true alternative is expressed by a function p: X → [0, 1] that satisfies Eq. (3.1). This function is called a probability distribution function, and the associated tuple of values p(x) for all x ∈ X, p = ⟨p(x) | x ∈ X⟩, is called a probability distribution. For each x ∈ X, the value p(x) expresses the degree of evidential support that x is the true alternative. A variable X whose states x ∈ X are associated with probabilities p(x) is usually called a random variable.

Given a probability distribution function p, the associated probability measure, Pro, is obtained for all A ∈ P(X) via Eq. (3.2). However, it is often not necessary to consider all sets in P(X). Any family of subsets of X, C ⊆ P(X), is acceptable provided that it contains X and is closed under complementation and finite unions. Members of C are called events. Any pair of disjoint events A and B satisfies Eq. (3.3). This basic property of probability measures is referred to as additivity.

Given a probability distribution function p on X and any real-valued function f on X, the functional a(f, p) defined by Eq. (3.4) is called an expected value of f. Clearly, a(f, p) is a weighted average of values f(x), in which the weights are probabilities p(x).

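$$p : X \to [0, 1]$$

$$\sum_{x \in X} p(x) = 1 \tag{3.1}$$

$$\mathbf{p} = \langle p(x) \mid x \in X \rangle$$

$$\mathrm{Pro}(A) = \sum_{x \in A} p(x) \tag{3.2}$$

$$\mathrm{Pro}(A \cup B) = \mathrm{Pro}(A) + \mathrm{Pro}(B) \tag{3.3}$$

$$a(f, p) = \sum_{x \in X} f(x)\, p(x) \tag{3.4}$$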
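The definitions in Eqs. (3.1)–(3.4) can be illustrated with a minimal Python sketch. The three alternatives, their probabilities, and the function `payoff` are hypothetical values chosen only for illustration; the helper names `is_distribution`, `pro`, and `expected_value` are likewise not from the text.

```python
# Hypothetical probability distribution function p on X = {"a", "b", "c"}.
p = {"a": 0.5, "b": 0.3, "c": 0.2}

def is_distribution(p, tol=1e-12):
    """Check that p maps into [0, 1] and satisfies the normalization of Eq. (3.1)."""
    in_range = all(0.0 <= v <= 1.0 for v in p.values())
    return in_range and abs(sum(p.values()) - 1.0) <= tol

def pro(p, event):
    """Probability measure of Eq. (3.2): sum of p(x) over all x in the event."""
    return sum(p[x] for x in event)

def expected_value(f, p):
    """Expected value a(f, p) of Eq. (3.4): average of f(x) weighted by p(x)."""
    return sum(f(x) * p[x] for x in p)

A, B = {"a"}, {"b", "c"}                       # two disjoint events
payoff = {"a": 10.0, "b": 0.0, "c": -5.0}      # hypothetical real-valued function f

print(is_distribution(p))
print(pro(p, A | B), pro(p, A) + pro(p, B))    # additivity, Eq. (3.3)
print(expected_value(payoff.get, p))
```

Representing events as Python sets keeps the additivity check close to the notation: `A | B` is the union of the two events, and the two printed values agree up to floating-point rounding because A and B are disjoint.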

62 3. CLASSICAL PROBABILITY-BASED UNCERTAINTY THEORY

Now, consider two sets of alternatives, X and Y, which may be viewed, in general, as sets of states of variables X and Y, respectively. A probability function defined on X × Y is called a joint probability distribution function. The associated marginal probability distribution functions, p_X and p_Y, on X and Y, respectively, are determined by Eq. (3.5) for each x ∈ X and by Eq. (3.6) for each y ∈ Y. Variables X, Y with marginal probability distribution functions p_X, p_Y, respectively, are called noninteractive iff Eq. (3.7) holds for all x ∈ X and y ∈ Y. Conditional probability distribution functions, p_{X|Y}(x|y) and p_{Y|X}(y|x), are defined for all x ∈ X and y ∈ Y such that p_X(x) ≠ 0 and p_Y(y) ≠ 0 by Eqs. (3.8) and (3.9).

When p_{X|Y}(x|y) = p_X(x) for all x ∈ X, variable X is said to be independent of variable Y. Similarly, when p_{Y|X}(y|x) = p_Y(y) for all y ∈ Y, variable Y is said to be independent of variable X.

It is easy to show that the concepts of probabilistic noninteraction and probabilistic independence are equivalent. Given two variables, X and Y, with probability distributions, p_X and p_Y, defined on their state sets, X and Y, assume that they are noninteractive. This means that their joint probability distribution satisfies Eq. (3.7) for all x ∈ X and y ∈ Y. Then, Eq. (3.8) becomes p_{X|Y}(x|y) = p_X(x)·p_Y(y)/p_Y(y) = p_X(x) and, similarly, Eq. (3.9) becomes p_{Y|X}(y|x) = p_X(x)·p_Y(y)/p_X(x) = p_Y(y).

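$$p_X(x) = \sum_{y \in Y} p(x, y) \tag{3.5}$$

$$p_Y(y) = \sum_{x \in X} p(x, y) \tag{3.6}$$

$$p(x, y) = p_X(x) \cdot p_Y(y) \tag{3.7}$$

$$p_{X|Y}(x \mid y) = \frac{p(x, y)}{p_Y(y)} \tag{3.8}$$

$$p_{Y|X}(y \mid x) = \frac{p(x, y)}{p_X(x)} \tag{3.9}$$

$$p_{X|Y}(x \mid y) = \frac{p_X(x) \cdot p_Y(y)}{p_Y(y)} = p_X(x)$$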

3.1. PROBABILITY FUNCTIONS 63

Hence, noninteraction implies independence.

Assume now that the variables are independent. This means that p_{X|Y}(x|y) = p(x, y)/p_Y(y) = p_X(x) and, similarly, p_{Y|X}(y|x) = p(x, y)/p_X(x) = p_Y(y). In both cases, clearly, we obtain Eq. (3.7), which means that independence implies noninteraction. Hence, the two concepts, noninteraction and independence, are equivalent in probability theory. This equivalence does not hold in other theories of uncertainty.
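The equivalence of noninteraction and independence can be checked numerically on small examples. The sketch below is only an illustration: the two joint distributions `product` and `coupled` are hypothetical, and the function names are not from the text. It computes the marginals of Eqs. (3.5) and (3.6), tests Eq. (3.7), and separately tests the independence condition via Eq. (3.8).

```python
def marginals(joint):
    """Marginal distributions p_X and p_Y of Eqs. (3.5) and (3.6)."""
    p_x, p_y = {}, {}
    for (x, y), v in joint.items():
        p_x[x] = p_x.get(x, 0.0) + v
        p_y[y] = p_y.get(y, 0.0) + v
    return p_x, p_y

def is_noninteractive(joint, tol=1e-12):
    """Test Eq. (3.7): p(x, y) = p_X(x) * p_Y(y) for all x and y."""
    p_x, p_y = marginals(joint)
    return all(abs(joint.get((x, y), 0.0) - p_x[x] * p_y[y]) <= tol
               for x in p_x for y in p_y)

def is_independent(joint, tol=1e-12):
    """Test p_X|Y(x|y) = p_X(x) wherever p_Y(y) != 0, using Eq. (3.8)."""
    p_x, p_y = marginals(joint)
    return all(abs(joint.get((x, y), 0.0) / p_y[y] - p_x[x]) <= tol
               for x in p_x for y in p_y if p_y[y] != 0)

# Hypothetical joint distributions on X x Y = {x1, x2} x {y1, y2}.
product = {("x1", "y1"): 0.125, ("x1", "y2"): 0.125,
           ("x2", "y1"): 0.375, ("x2", "y2"): 0.375}
coupled = {("x1", "y1"): 0.5, ("x1", "y2"): 0.0,
           ("x2", "y1"): 0.0, ("x2", "y2"): 0.5}

for name, joint in (("product", product), ("coupled", coupled)):
    print(name, is_noninteractive(joint), is_independent(joint))
```

For both examples the two tests return the same answer, as the equivalence argument predicts: `product` passes both, while `coupled` fails both.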

3.1.2. Functions on Infinite Sets

When X is the set of real numbers, ℝ, or a bounded interval of real numbers, [x̲, x̄], the set of alternatives is infinite, and the way in which probability measures are defined for finite X is not applicable. It is no longer meaningful to define probability measures on the full power set P(X). In each particular application, a relevant family of subsets of X (events), C, must be chosen, which is required to contain X and be closed under complements and countable unions (these requirements imply that C is also closed under countable intersections). Any such family together with the operations of set union, intersection, and complement is usually called a σ-algebra. In many applications, family C consists of all bounded, right-open subintervals of X.

Probability distribution function p cannot be defined for infinite sets in the same way in which it is defined for finite sets. For X = ℝ or X = [x̲, x̄], function p is defined for all x ∈ X by Eq. (3.10), where Pro denotes, as before, a probability measure. This definition utilizes the ordering of real numbers. Function p is clearly nondecreasing, and it is usually expected to be continuous at each x ∈ X and differentiable everywhere except at a countable number of points.

$$p_{Y|X}(y \mid x) = \frac{p_X(x) \cdot p_Y(y)}{p_X(x)} = p_Y(y)$$

$$p_{X|Y}(x \mid y) = \frac{p(x, y)}{p_Y(y)} = p_X(x)$$

$$p_{Y|X}(y \mid x) = \frac{p(x, y)}{p_X(x)} = p_Y(y)$$

$$p(x) = \mathrm{Pro}(\{a \in X \mid a < x\}) \tag{3.10}$$


Connected with the probability distribution function p is another function, q, defined for all x ∈ X as the derivative of p. This function is called a probability density function. Since p is a nondecreasing function, q(x) ≥ 0 for all x ∈ X.

Given a σ-algebra defined on a family C, and a probability density function q on X, the probability of any set A ∈ C, Pro(A), can be calculated via the integral in Eq. (3.11). Since it is required that Pro(X) = 1 (the true alternative must be in X), the probability density function is constrained by Eq. (3.12), which is the counterpart of Eq. (3.1) for the infinite case.

Given a probability distribution function p on X and another real-valued function f on X, the functional a(f, p) defined by Eq. (3.13) is called an expected value of f. Clearly, Eq. (3.13) is a counterpart of Eq. (3.4) for the infinite case. Observe that a(f, p) can also be expressed in terms of the probability density function q associated with p, as in Eq. (3.14).

When function q is defined on a Cartesian product X × Y = [x̲, x̄] × [y̲, ȳ], it is called a joint probability density function. The associated marginal probability density functions, q_X and q_Y, on X and Y, respectively, are defined for each x ∈ X and each y ∈ Y by Eqs. (3.15) and (3.16). Marginal probability density functions are called noninteractive iff Eq. (3.17) holds for all x ∈ X and all y ∈ Y. Conditional probability density functions, q_{X|Y}(x|y) and q_{Y|X}(y|x), are defined for all x ∈ X and all y ∈ Y such that q_X(x) ≠ 0 and q_Y(y) ≠ 0 by the formulas

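$$\mathrm{Pro}(A) = \int_A q(x)\,dx \tag{3.11}$$

$$\int_X q(x)\,dx = 1 \tag{3.12}$$

$$a(f, p) = \int_X f(x)\,dp \tag{3.13}$$

$$a(f, p) = \int_X f(x)\, q(x)\,dx \tag{3.14}$$

$$q_X(x) = \int_Y q(x, y)\,dy \tag{3.15}$$

$$q_Y(y) = \int_X q(x, y)\,dx \tag{3.16}$$

$$q(x, y) = q_X(x) \cdot q_Y(y) \tag{3.17}$$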


(3.18)

(3.19)

Clearly, Eqs. (3.15)–(3.19) are counterparts of Eqs. (3.5)–(3.9) for the infinite

case. Again, the concepts of probabilistic noninteraction and probabilistic

independence are equivalent in this case.
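For a concrete feel of Eqs. (3.11), (3.12), and (3.14), the integrals can be approximated numerically. The sketch below assumes a hypothetical density q(x) = 2x on X = [0, 1] (whose distribution function is p(x) = x²) and uses a simple midpoint rule; neither the density nor the helper `integrate` comes from the text.

```python
def integrate(g, lo, hi, n=10_000):
    """Midpoint-rule approximation of the integral of g over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(g(lo + (i + 0.5) * h) for i in range(n))

def q(x):
    """Hypothetical probability density function on X = [0, 1]."""
    return 2.0 * x

total = integrate(q, 0.0, 1.0)                    # Eq. (3.12): should be close to 1
pro_a = integrate(q, 0.5, 1.0)                    # Eq. (3.11) with A = [0.5, 1)
mean = integrate(lambda x: x * q(x), 0.0, 1.0)    # Eq. (3.14) with f(x) = x

print(total, pro_a, mean)
```

The exact values for this density are 1, 0.75, and 2/3, so the printed numbers give a quick check on the approximation.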

3.1.3. Bayes’ Theorem

Consider a σ-algebra with events in a family C ⊆ P(X) and a probability measure Pro on C. For each pair of sets A, B ∈ C such that Pro(B) ≠ 0, the conditional probability of A given B, Pro(A|B), is defined by Eq. (3.20). Similarly, the conditional probability of B given A, Pro(B|A), is defined by Eq. (3.21), provided that Pro(A) ≠ 0. Expressing Pro(A ∩ B) from Eqs. (3.20) and (3.21) results in the equation Pro(A|B)·Pro(B) = Pro(B|A)·Pro(A), which establishes a relationship between the two conditional probabilities. One of the conditional probabilities is then expressed in terms of the other one by Eq. (3.22), which is usually referred to as Bayes' theorem. Since Pro(B) can be expressed in terms of given elementary, mutually exclusive events A_i (i ∈ ℕ_m) of the σ-algebra as Pro(B) = Σ_{i ∈ ℕ_m} Pro(A_i ∩ B) = Σ_{i ∈ ℕ_m} Pro(B|A_i)·Pro(A_i)

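$$q_{X|Y}(x \mid y) = \frac{q(x, y)}{q_Y(y)} \tag{3.18}$$

$$q_{Y|X}(y \mid x) = \frac{q(x, y)}{q_X(x)} \tag{3.19}$$

$$\mathrm{Pro}(A \mid B) = \frac{\mathrm{Pro}(A \cap B)}{\mathrm{Pro}(B)} \tag{3.20}$$

$$\mathrm{Pro}(B \mid A) = \frac{\mathrm{Pro}(A \cap B)}{\mathrm{Pro}(A)} \tag{3.21}$$

$$\mathrm{Pro}(A \mid B) \cdot \mathrm{Pro}(B) = \mathrm{Pro}(B \mid A) \cdot \mathrm{Pro}(A)$$

$$\mathrm{Pro}(A \mid B) = \frac{\mathrm{Pro}(B \mid A) \cdot \mathrm{Pro}(A)}{\mathrm{Pro}(B)} \tag{3.22}$$

$$\mathrm{Pro}(B) = \sum_{i \in \mathbb{N}_m} \mathrm{Pro}(A_i \cap B) = \sum_{i \in \mathbb{N}_m} \mathrm{Pro}(B \mid A_i) \cdot \mathrm{Pro}(A_i)$$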


when C is finite, Bayes' theorem may also be written in the form of Eq. (3.23). For infinite sets, Bayes' theorem must be properly reformulated in terms of probability density functions and the summation in Eq. (3.23) must be replaced with integration.

Bayes' theorem is a simple procedure for updating given probabilities on the basis of new evidence. From prior probabilities Pro(A) and new evidence expressed in terms of conditional probabilities Pro(B|A), we calculate posterior probabilities Pro(A|B). When further evidence becomes available, the posterior probabilities are employed as prior probabilities and the procedure of probability updating is repeated.
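The updating procedure can be sketched as a small function that applies Eq. (3.23) to every event A_i at once and is then called repeatedly. The three events, their prior probabilities, and the two sets of conditional probabilities are hypothetical numbers; the sketch also assumes that each piece of evidence is described by its own conditional probabilities Pro(B|A_i), which is an assumption of this illustration rather than a statement from the text.

```python
def bayes_update(prior, likelihood):
    """One application of Eq. (3.23) to all events A_i.

    prior[a]      -- Pro(A_i) for mutually exclusive, exhaustive events A_i
    likelihood[a] -- Pro(B | A_i) for the observed evidence B
    Returns the posterior probabilities Pro(A_i | B).
    """
    pro_b = sum(likelihood[a] * prior[a] for a in prior)   # denominator: Pro(B)
    if pro_b == 0:
        raise ValueError("evidence has zero probability under the prior")
    return {a: likelihood[a] * prior[a] / pro_b for a in prior}

# Hypothetical prior and two successive pieces of evidence.
prior = {"A1": 0.5, "A2": 0.25, "A3": 0.25}
evidence = [
    {"A1": 0.2, "A2": 0.4, "A3": 0.8},   # Pro(B1 | A_i)
    {"A1": 0.5, "A2": 0.5, "A3": 1.0},   # Pro(B2 | A_i)
]

belief = prior
for likelihood in evidence:
    belief = bayes_update(belief, likelihood)   # posterior becomes the next prior
    print(belief)
```

Each pass through the loop reuses the previous posterior as the new prior, which is exactly the repetition described in the paragraph above.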

EXAMPLE 3.1. Let X denote the population of a given town community. It is known from statistical data that 1% of the town residents have tuberculosis. Using this information, the probability, Pro(A), that a randomly chosen member of the community has tuberculosis (event A) is 0.01. Suppose that this member takes a tuberculosis skin test (TST) and the outcome is positive. On the basis of this information, the prior probability changes to a posterior probability Pro(A|B), where B denotes the event "positive outcome of the TST." Clearly, the posterior probability depends on the reliability of the TST. Assume that the following is known about the test: (1) the probability of a positive outcome for a person with tuberculosis, Pro(B|A), is 0.99; and (2) the probability of a positive outcome for a person with no tuberculosis, Pro(B|Ā), is 0.04. Using this information regarding the reliability of the TST, the posterior probability Pro(A|B) that the person has tuberculosis is calculated from the prior probability Pro(A) via Eq. (3.23) as follows: Pro(A|B) = (0.99 · 0.01)/(0.99 · 0.01 + 0.04 · 0.99) = 0.2.

The probability that the person has tuberculosis is thus 0.2. Observe that if the test were fully reliable (Pro(B|A) = 1 and Pro(B|Ā) = 0), we would conclude (as expected) that the person has tuberculosis with probability 1.
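The arithmetic of Example 3.1 can be reproduced in a few lines. This is a minimal sketch using the numbers given in the example; the function name `posterior` and its parameter names are introduced here for illustration only.

```python
def posterior(prior, p_pos_given_a, p_pos_given_not_a):
    """Pro(A | B) from Eq. (3.23) for the two events A and its complement."""
    numerator = p_pos_given_a * prior
    return numerator / (numerator + p_pos_given_not_a * (1.0 - prior))

print(posterior(0.01, 0.99, 0.04))   # Example 3.1: about 0.2
print(posterior(0.01, 1.0, 0.0))     # fully reliable test
```

Even with a test that detects 99% of cases, the low prior of 0.01 keeps the posterior at about 0.2, because false positives among the 99% of healthy residents outnumber true positives four to one.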

$$\mathrm{Pro}(A \mid B) = \frac{\mathrm{Pro}(B \mid A) \cdot \mathrm{Pro}(A)}{\sum_{i \in \mathbb{N}_m} \mathrm{Pro}(B \mid A_i) \cdot \mathrm{Pro}(A_i)} \tag{3.23}$$

$$\mathrm{Pro}(A \mid B) = \frac{\mathrm{Pro}(B \mid A)\,\mathrm{Pro}(A)}{\mathrm{Pro}(B \mid A)\,\mathrm{Pro}(A) + \mathrm{Pro}(B \mid \bar{A})\,\mathrm{Pro}(\bar{A})} = \frac{0.99 \cdot 0.01}{0.99 \cdot 0.01 + 0.04 \cdot 0.99} = 0.2$$

3.2. SHANNON MEASURE OF UNCERTAINTY FOR FINITE SETS

The question of how to measure the amount of uncertainty (and the associated information) in classical probability theory was first addressed by Shannon [1948]. He established that the only meaningful way to measure the amount of uncertainty in evidence expressed by a probability distribution function p on a finite set is to use a functional of the form -c Σ_{x ∈ X} p(x) log_b p(x),

where b and c are positive constants, and b ≠ 1. Each choice of values b and c determines the unit in which the uncertainty is measured. The most common choice is to define the measurement unit by the requirement that the amount of uncertainty be 1 when X = {x_1, x_2} and p(x_1) = p(x_2) = 1/2. This requirement, which is usually referred to as a normalization requirement, is formally expressed by the equation -c log_b (1/2) = 1. It can be conveniently satisfied by choosing b = 2 and c = 1. The resulting measurement unit is called a bit. That is, 1 bit is the amount of uncertainty removed (or information gained) upon learning the answer to a question whose two possible answers were equally likely. The resulting functional, given by Eq. (3.24), is called a Shannon measure of uncertainty or, more frequently, a Shannon entropy.
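The role of the constants b and c can be seen by coding the general functional directly and then fixing b = 2 and c = 1. This is a sketch under one stated assumption: terms with p(x) = 0 are skipped, which corresponds to treating 0 · log 0 as 0. The function names are hypothetical.

```python
import math

def uncertainty(p, b=2.0, c=1.0):
    """General functional -c * sum of p(x) * log_b p(x); zero terms are skipped."""
    return -c * sum(v * math.log(v, b) for v in p if v > 0)

def shannon_entropy(p):
    """Shannon entropy of Eq. (3.24), measured in bits (b = 2, c = 1)."""
    return uncertainty(p, b=2.0, c=1.0)

fair = [0.5, 0.5]
print(shannon_entropy(fair))          # normalization requirement: 1 bit
print(uncertainty(fair, b=math.e))    # the same uncertainty in natural-log units
print(shannon_entropy([0.25] * 4))    # four equally likely alternatives
```

Changing b or c rescales every value by the same factor, so the choice affects only the unit and not the ordering of distributions by uncertainty.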

One way of getting insight into the type of uncertainty measured by the Shannon entropy is to rewrite Eq. (3.24) in the form of Eq. (3.25). The term Con(x) = Σ_{y ≠ x} p(y) in Eq. (3.25) represents the total evidential claim pertaining to alternatives that are different from x. That is, Con(x) expresses the sum of all evidential claims that fully conflict with the one focusing on x. Clearly, Con(x) ∈ [0, 1] for each x ∈ X. The function -log_2[1 - Con(x)], which is employed in Eq. (3.25), is monotonic increasing with Con(x) and extends its range from [0, 1] to [0, ∞). The choice of the logarithmic function is a result of axiomatic requirements for S, which are discussed later in this chapter. It follows from these facts and from the form of Eq. (3.25) that the Shannon entropy is the mean (expected) value of the conflict among evidential claims expressed by each given probability distribution function p.

$$-c \sum_{x \in X} p(x) \log_b p(x)$$

$$-c \log_b \frac{1}{2} = 1$$

$$S(p) = -\sum_{x \in X} p(x) \log_2 p(x) \tag{3.24}$$

$$S(p) = -\sum_{x \in X} p(x) \log_2 \Bigl[ 1 - \sum_{y \neq x} p(y) \Bigr] \tag{3.25}$$

$$\mathrm{Con}(x) = \sum_{y \neq x} p(y)$$
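The reading of the Shannon entropy as a mean conflict can be verified numerically by evaluating Eqs. (3.24) and (3.25) side by side. The distribution below is hypothetical, and the function names are introduced only for this sketch.

```python
import math

def conflict(p, x):
    """Con(x): total probability of the alternatives other than x."""
    return sum(v for y, v in p.items() if y != x)

def entropy_direct(p):
    """Shannon entropy in the form of Eq. (3.24)."""
    return -sum(v * math.log2(v) for v in p.values() if v > 0)

def entropy_as_mean_conflict(p):
    """Shannon entropy in the form of Eq. (3.25): mean of -log2[1 - Con(x)]."""
    return -sum(v * math.log2(1.0 - conflict(p, x)) for x, v in p.items() if v > 0)

p = {"a": 0.5, "b": 0.25, "c": 0.25}   # hypothetical distribution
for x in p:
    print(x, conflict(p, x), -math.log2(1.0 - conflict(p, x)))
print(entropy_direct(p), entropy_as_mean_conflict(p))
```

The two forms agree because 1 - Con(x) = p(x) for every x; the per-alternative lines show how a larger conflict translates into a larger logarithmic term.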

3.2.1. Simple Derivation of the Shannon Entropy

Suppose that a particular alternative x in a finite set X of considered alternatives occurs with the probability p(x). When this probability is very high, say p(x) = 0.999, then the occurrence of x is taken almost for granted and, consequently, we are not much surprised when it actually occurs. That is, our uncertainty in anticipating x is quite small and, therefore, our observation that x has actually occurred contains very little information. On the other hand, when the probability is very small, say p(x) = 0.001, then we are greatly surprised when x actually occurs. This means, in turn, that we are highly uncertain in our anticipation of x and, hence, the actual observation of x has very large information content. We can conclude from these considerations that the anticipatory uncertainty of x prior to the observation (and the information content of observing x) should be expressed by a decreasing function of the probability p(x): the more likely the occurrence of x, the less information its actual observation contains.

Consider a random experiment with n considered outcomes, i = 1, 2, ..., n, whose probabilities are p_1, p_2, ..., p_n, respectively. Assume that p_i > 0 for all i ∈ ℕ_n, which means that no outcomes with zero probabilities are considered. The uncertainty in anticipating a particular outcome i (and the information obtained by actually observing this outcome) should clearly be a function of p_i. Let s: (0, 1] → [0, ∞) denote this function. To measure in a meaningful way the anticipatory uncertainty, function s should satisfy the following properties:

(s1) s(p_i) should decrease with increasing p_i.

(s2) s(1) = 0.

(s3) s should behave properly when applied to joint outcomes of independent experiments.

To elaborate on property (s3), let r_ij denote the joint probabilities of outcomes of two independent experiments. Assume that one of the experiments has n outcomes with probabilities p_i (i ∈ ℕ_n) and the other one has m outcomes with probabilities q_j (j ∈ ℕ_m). Then, according to the calculus of probability theory,

$$r_{ij} = p_i \cdot q_j \tag{3.26}$$

for all i ∈ ℕ_n and all j ∈ ℕ_m. Since the experiments are independent, the anticipatory uncertainty of a particular joint outcome ⟨i, j⟩ should be equal to the sum of anticipatory uncertainties of the individual outcomes i and j. That is, the equation s(r_ij) = s(p_i) + s(q_j) should hold when Eq. (3.26) holds. This leads to the functional equation s(p_i · q_j) = s(p_i) + s(q_j) for the function s: (0, 1] → [0, ∞), where p_i, q_j ∈ (0, 1]. This is known as one form of the Cauchy equation, whose solution is the class of functions defined for each a ∈ (0, 1] by the equation s(a) = c log_b a, where c is an arbitrary constant and b is a positive constant distinct from 1. Since s is required by (s1) to be a decreasing function on (0, 1] and the logarithmic function is increasing, c must be negative. Furthermore, defining the measurement unit by the requirement that s(1/2) = 1 and conveniently choosing b = 2 and c = -1, we obtain a unique function s, defined for each a ∈ (0, 1] by Eq. (3.27).

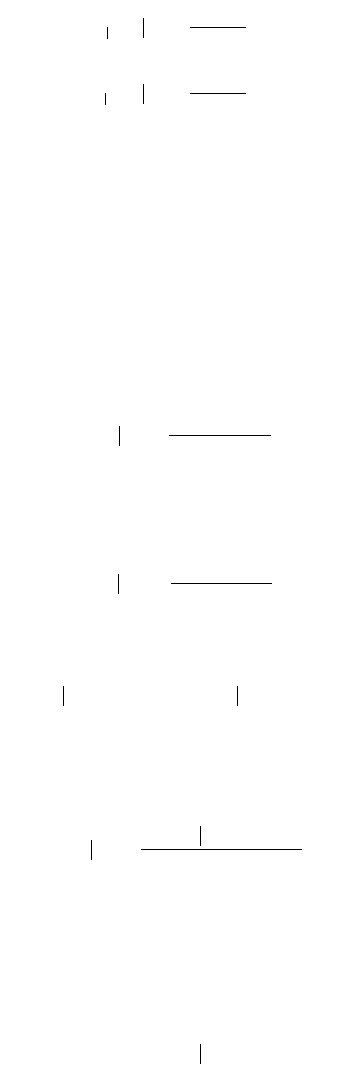

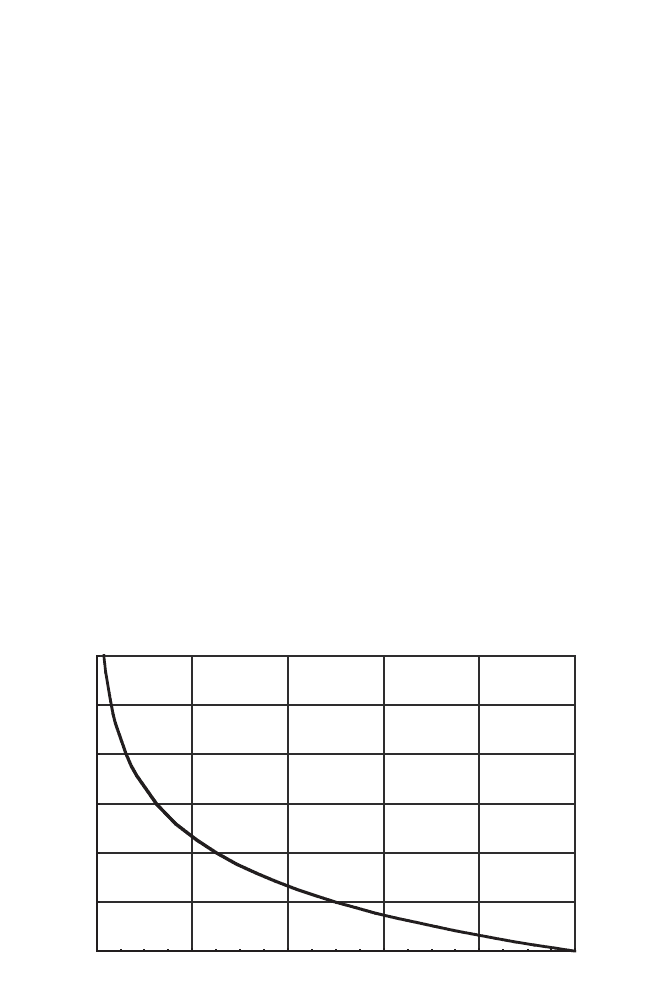

A graph of this function is shown in Figure 3.1.
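Properties (s1)–(s3) can be spot-checked for the function of Eq. (3.27). The sketch below uses hypothetical probabilities `p_i` and `q_j`, and it computes -log_2 a in the equivalent form log_2(1/a).

```python
import math

def s(a):
    """Anticipatory uncertainty of Eq. (3.27), defined for a in (0, 1]."""
    if not 0.0 < a <= 1.0:
        raise ValueError("s is defined only on (0, 1]")
    return math.log2(1.0 / a)   # equal to -log2(a)

p_i, q_j = 0.5, 0.125   # hypothetical outcome probabilities of two experiments
r_ij = p_i * q_j        # joint probability under independence, Eq. (3.26)

print(s(0.999), s(0.001))           # (s1): likely outcome vs. unlikely outcome
print(s(1.0))                       # (s2): a certain outcome carries no uncertainty
print(s(r_ij), s(p_i) + s(q_j))     # (s3): uncertainties of independent outcomes add
```

The values 0.999 and 0.001 are the ones used in the informal argument at the start of this section; the corresponding uncertainties differ by several orders of magnitude.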

Now consider a finite set X of considered alternatives with probabilities p(x) for all x ∈ X. Let S(p) denote the expected value of s[p(x)] for all x ∈ X. Then, S(p) = Σ_{x ∈ X} p(x)·s[p(x)].

$$s(r_{ij}) = s(p_i) + s(q_j)$$

$$s(p_i \cdot q_j) = s(p_i) + s(q_j)$$

$$s(a) = c \log_b a$$

$$s(a) = -\log_2 a \tag{3.27}$$


Figure 3.1. Graph of function s(a) = -log_2 a (horizontal axis: a; vertical axis: s(a)).

Substituting for s from Eq. (3.27), we obtain the functional S(p) = -Σ_{x ∈ X} p(x)·log_2 p(x), which is the Shannon entropy (compare with Eq. (3.24)).

Observe that the term -p(x)·log_2 p(x) in the formula for S(p) is not defined when p(x) = 0. However, employing l'Hospital's rule for indeterminate forms, we find that its limit for p(x) → 0 is 0.

When only two alternatives are considered, x_1 and x_2, whose probabilities are p(x_1) = a and p(x_2) = 1 - a, the Shannon entropy, S(p), depends only on a in the way illustrated in Figure 3.2a; graphs of the two components of S(p), s_1(a) = -a log_2 a and s_2(a) = -(1 - a) log_2(1 - a), are shown in Figure 3.2b.
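The two-alternative case and the limit at zero probability can be tabulated with a short sketch. It assumes the convention that the term -a log_2 a is assigned its limit value 0 at a = 0; the function names `xlog2x` and `binary_entropy` are hypothetical.

```python
import math

def xlog2x(a):
    """The component -a * log2(a), extended by its limit value 0 at a = 0."""
    return 0.0 if a == 0 else -a * math.log2(a)

def binary_entropy(a):
    """S(p) for p = (a, 1 - a): the sum of the components s_1(a) and s_2(a)."""
    return xlog2x(a) + xlog2x(1.0 - a)

for a in (0.0, 0.1, 0.5, 0.9, 1.0):
    print(a, xlog2x(a), xlog2x(1.0 - a), binary_entropy(a))

# The component shrinks toward 0 as a approaches 0.
print([xlog2x(10.0 ** -k) for k in (2, 4, 8)])
```

The table reflects the shape described for the two-alternative case: the entropy vanishes at a = 0 and a = 1, is symmetric about a = 0.5, and reaches 1 bit there.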

3.2.2. Uniqueness of the Shannon Entropy

The issue of measuring uncertainty and information in probability theory has also been treated axiomatically in various ways. It has been proved in numerous ways, from several well-justified axiomatic characterizations, that the Shannon entropy is the only meaningful functional for measuring uncertainty and information in probability theory. To survey this more rigorous treatment, assume that X = {x_1, x_2, ..., x_n}, and let p_i = p(x_i) for all i ∈ ℕ_n. In addition, let P_n denote the set of all probability distributions with n components. That is, P_n = {⟨p_1, p_2, ..., p_n⟩ | p_i ∈ [0, 1] for all i ∈ ℕ_n and Σ_{i=1}^{n} p_i = 1}. Then, for each integer n, a measure of probabilistic uncertainty is a functional, S_n, of the form S_n: P_n → [0, ∞), which satisfies appropriate requirements. For the sake of simplicity, let S_n(p_1, p_2, ..., p_n) be written as S(p_1, p_2, ..., p_n).

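$$S(p) = \sum_{x \in X} p(x) \cdot s[p(x)]$$

$$S(p) = -\sum_{x \in X} p(x) \cdot \log_2 p(x)$$

$$\lim_{p(x) \to 0} \bigl( -p(x) \log_2 p(x) \bigr) = \lim_{p(x) \to 0} \frac{-\log_2 p(x)}{1 / p(x)} = \lim_{p(x) \to 0} \frac{-1 / (p(x) \ln 2)}{-1 / p(x)^2} = \lim_{p(x) \to 0} \frac{p(x)}{\ln 2} = 0$$

$$\mathcal{P}_n = \Bigl\{ \langle p_1, p_2, \ldots, p_n \rangle \Bigm| p_i \in [0, 1] \text{ for all } i \in \mathbb{N}_n \text{ and } \sum_{i=1}^{n} p_i = 1 \Bigr\}$$

$$S_n : \mathcal{P}_n \to [0, \infty)$$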
