Klir G.J. Uncertainty and Information. Foundations of Generalized Information Theory


Different subsets of the following requirements, which are universally considered essential for a probabilistic measure of uncertainty and information, are usually taken as axioms for characterizing the measure.

Axiom (S1) Expansibility. When a component with zero probability is added to the probability distribution, the uncertainty should not change. Formally,

72 3. CLASSICAL PROBABILITY-BASED UNCERTAINTY THEORY

0.2 0.4 0.6 0.8 1

a

0.2

0.4

0.6

0.8

1

S(a,1-a)

(a)

0.2 0.4 0.6 0.8 1

a

0.2

0.4

0.6

0.8

1

(s1,s2)

(b)

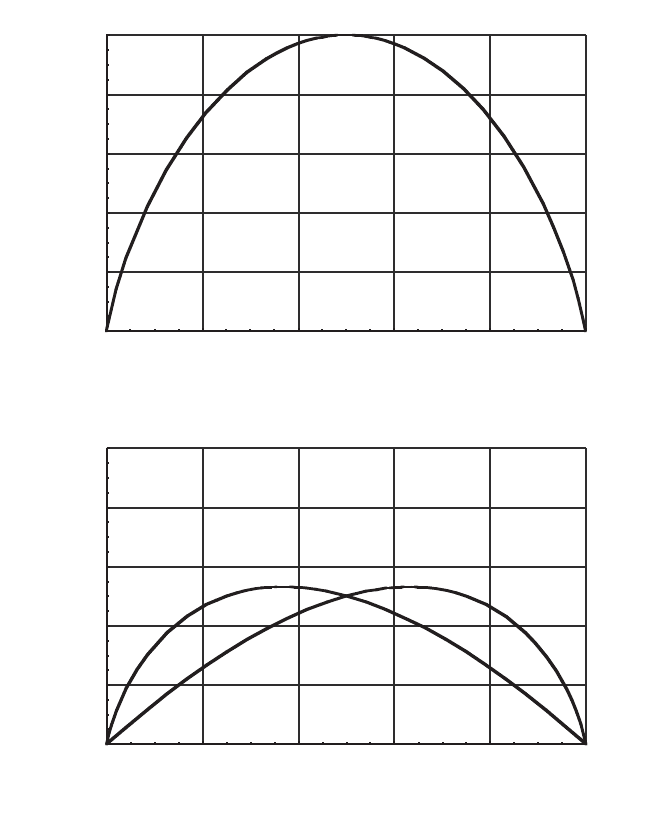

Figure 3.2. Graphs of: (a) S(a, 1 - a); (b) components s

1

(a) =-a log

2

a and s

2

(1 - a) =

-(1 - a)log

2

(1 - a) of S(a, 1 - a).

for all · p

1

, p

2

,...,p

n

ÒŒP

n

.

Axiom (S2) Symmetry. The uncertainty should be invariant with respect to

permutations of probabilities of a given probability distribution. Formally,

for all · p

1

, p

2

,...,p

n

ÒŒP

n

and for all permutations p (p

1

, p

2

,...,p

n

).

Axiom (S3) Continuity. Functional S should be continuous in all its

arguments p

1

, p

2

,..., p

n

. This requirement is often replaced with a

weaker requirement: S(p,1 - p) is a continuous functional of p in the

interval [0, 1].

Axiom (S4) Maximum. For each positive integer n, the maximum uncertainty

should be obtained when all the probabilities are equal to 1/n. Formally,

Axiom (S5) Subadditivity. The uncertainty of any joint probability distribu-

tion should not be greater than the sum of the uncertainties of the corre-

sponding marginal distributions. Formally,

for any given joint probability distribution ·p

ij

|i Œ ⺞

n

, j Œ⺞

m

Ò.

Axiom (S6) Additivity. The uncertainty of any joint probability distribution

that is noninteractive should be equal to the sum of the uncertainties of the

corresponding marginal distributions. Formally,

for any given marginal probability distributions ·p

1

, p

2

,...,p

n

Ò and ·q

1

, q

2

,...,

q

m

Ò. This requirement is sometimes replaced with a restricted requirement of

weak additivity which applies only to uniform marginal probability distributions

with p

i

= 1/n and q

j

= 1/m. Formally,

Spqpq pq pqpq pq pqpq pq

Sp p p Sq q q

mmnnnm

nm

11 1 2 1 21 2 2 2 1 2

12 12

, ,..., , , ,..., ,..., , ,...,

, ,..., , ,...,

()

=

()

+

()

Sp p p p p p p p p

Sp p pSp p p

mmnnnm

j

j

m

jnj

j

m

j

m

ii

i

n

i

n

im

i

n

11 12 1 21 22 2 1 2

1

1

2

11

12

111

, ,..., , , ,..., ,..., , ,...,

, ,..., , ,...,

()

£

Ê

Ë

Á

ˆ

¯

˜

+

======

ÂÂÂÂÂÂ

ÊÊ

Ë

Á

ˆ

¯

˜

Sp p p S

nn n

n12

11 1

, ,..., , ,..., .

()

£

Ê

Ë

ˆ

¯

Sp p p S p p p

nn12 12

, ,..., , ,...,

()

=

()()

p

Sp p p Sp p p

nn12 12

0, ,..., , ,..., ,

()

=

()

3.2. SHANNON MEASURE OF UNCERTAINTY FOR FINITE SETS 73

$$S\left(\frac{1}{nm}, \frac{1}{nm}, \ldots, \frac{1}{nm}\right) = S\left(\frac{1}{n}, \frac{1}{n}, \ldots, \frac{1}{n}\right) + S\left(\frac{1}{m}, \frac{1}{m}, \ldots, \frac{1}{m}\right).$$

Introducing a convenient function $f$ such that $f(n) = S(1/n, 1/n, \ldots, 1/n)$, the weak additivity can be expressed by the equation

$$f(nm) = f(n) + f(m)$$

for positive integers $n$ and $m$.

Axiom (S7) Monotonicity. For probability distributions with equal probabilities $1/n$, the uncertainty should increase with increasing $n$. Formally, for any positive integers $m$ and $n$, when $m < n$, then $f(m) < f(n)$, where $f$ denotes the function introduced in (S6).

Axiom (S8) Branching. Given a probability distribution $p = \langle p_i \mid i \in \mathbb{N}_n \rangle$ on set $X = \{x_i \mid i \in \mathbb{N}_n\}$ for some integer $n \ge 3$, let $X$ be partitioned into two blocks, $A = \{x_1, x_2, \ldots, x_s\}$ and $B = \{x_{s+1}, x_{s+2}, \ldots, x_n\}$ for some integer $s$. Then, the equation

$$S(p_1, p_2, \ldots, p_n) = S(p_A, p_B) + p_A S\left(\frac{p_1}{p_A}, \frac{p_2}{p_A}, \ldots, \frac{p_s}{p_A}\right) + p_B S\left(\frac{p_{s+1}}{p_B}, \frac{p_{s+2}}{p_B}, \ldots, \frac{p_n}{p_B}\right)$$

should hold, where $p_A = \sum_{i=1}^{s} p_i$ and $p_B = \sum_{i=s+1}^{n} p_i$. On the left-hand side of the equation, the uncertainty is calculated directly; on the right-hand side, it is calculated in two steps, following the calculus of probability theory. In the first step (expressed by the first term), the uncertainty associated with the probability distribution $\langle p_A, p_B \rangle$ on the partition is calculated. In the second step (expressed by the second and third terms), the expected value of uncertainty associated with the conditional probability distributions within the blocks $A$ and $B$ of the partition is calculated. This requirement, which is also called a grouping requirement or a consistency requirement, is sometimes presented in various other forms. For example, one of its weaker forms is given by the formula

$$S(p_1, p_2, p_3) = S(p_1 + p_2, p_3) + (p_1 + p_2)\, S\left(\frac{p_1}{p_1 + p_2}, \frac{p_2}{p_1 + p_2}\right).$$


It matters little which of these forms is adopted since they can be derived

from one another. (The branching axiom is illustrated later in this chapter by

Example 3.6 and Figure 3.6.)
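Several of the requirements above can be checked numerically for the Shannon entropy itself. The following is a minimal sketch, not part of the original text: the helper name `shannon_entropy` and the example distributions `p` and `q` are assumptions chosen only for illustration, and a numerical check on one example is of course not a proof.

```python
import math
from itertools import permutations


def shannon_entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


S = shannon_entropy
p = [0.5, 0.3, 0.2]   # illustrative distribution (assumption)
q = [0.6, 0.4]        # illustrative distribution (assumption)

# (S1) Expansibility: appending a zero-probability component changes nothing.
expansible = math.isclose(S(p), S(p + [0.0]))

# (S2) Symmetry: every reordering of p gives the same value.
symmetric = all(math.isclose(S(p), S(list(perm))) for perm in permutations(p))

# (S4) Maximum: p does not exceed the uniform distribution on n elements.
maximal = S(p) <= S([1 / len(p)] * len(p))

# (S6) Additivity for the noninteractive joint distribution p_i * q_j.
joint = [pi * qj for pi in p for qj in q]
additive = math.isclose(S(joint), S(p) + S(q))

# (S8) Branching with blocks A = {x1, x2} and B = {x3}, that is, s = 2.
s = 2
p_a, p_b = sum(p[:s]), sum(p[s:])
branched = (S([p_a, p_b])
            + p_a * S([x / p_a for x in p[:s]])
            + p_b * S([x / p_b for x in p[s:]]))
branching = math.isclose(S(p), branched)

print(expansible, symmetric, maximal, additive, branching)
```

Because block B holds a single element here, its conditional distribution is the one-point distribution and its term vanishes, so this instance coincides with the weaker three-argument form of the branching requirement.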

Axiom (S9) Normalization. To ensure (if desirable) that the measurement units of $S$ are bits, it is essential that

$$S\left(\frac{1}{2}, \frac{1}{2}\right) = 1.$$

This axiom must be appropriately modified when other measurement units are preferred.

The listed axioms for a probabilistic measure of uncertainty and information are extensively discussed in the abundant literature on classical information theory. The following subsets of these axioms are the best known examples of axiomatic characterization of the probabilistic measure of uncertainty:

1. Continuity, weak additivity, monotonicity, branching, and normalization.

2. Expansibility, continuity, maximum, branching, and normalization.

3. Symmetry, continuity, branching, and normalization.

4. Expansibility, symmetry, continuity, subadditivity, additivity, and normalization.

Any of these collections of axioms (as well as some additional collections) is sufficient to characterize the Shannon entropy uniquely. That is, it has been proven that the Shannon entropy is the only functional that satisfies any of these sets of axioms. To illustrate in detail this important issue of uniqueness, which gives the Shannon entropy its great significance, the uniqueness proof is presented here for the first of the listed sets of axioms.

Theorem 3.1. The only functional that satisfies the axioms of continuity, weak additivity, monotonicity, branching, and normalization is the Shannon entropy.

Proof. (i) First, we prove the proposition $f(n^k) = k f(n)$ for all positive integers $n$ and $k$ by induction on $k$, where

$$f(n) = S\left(\frac{1}{n}, \frac{1}{n}, \ldots, \frac{1}{n}\right)$$

is the function that is used in the definition of weak additivity. For $k = 1$, the proposition is trivially true. By the axiom of weak additivity, we have

$$f(n^{k+1}) = f(n^k \cdot n) = f(n^k) + f(n).$$

Assume the proposition is true for some $k \in \mathbb{N}$. Then,

$$f(n^{k+1}) = f(n^k) + f(n) = k f(n) + f(n) = (k + 1) f(n),$$

which demonstrates that the proposition is true for all $k \in \mathbb{N}$.

(ii) Next, we demonstrate that $f(n) = \log_2 n$. This proof is identical to that of Theorem 2.1, provided that we replace the Hartley measure $H$ with $f$. Therefore, we do not repeat the derivation here. Observe that the proof requires weak additivity, monotonicity, and normalization.

(iii) We prove now that $S(p, 1 - p) = -p \log_2 p - (1 - p)\log_2 (1 - p)$ for rational $p$. Let $p = r/s$ where $r, s \in \mathbb{N}$. Then

$$f(s) = S\Big(\underbrace{\tfrac{1}{s}, \tfrac{1}{s}, \ldots, \tfrac{1}{s}}_{r}, \underbrace{\tfrac{1}{s}, \tfrac{1}{s}, \ldots, \tfrac{1}{s}}_{s - r}\Big) = S\left(\frac{r}{s}, \frac{s - r}{s}\right) + \frac{r}{s} f(r) + \frac{s - r}{s} f(s - r)$$

by the branching axiom. By (ii) and the definition of $p$ we obtain

$$\log_2 s = S(p, 1 - p) + p \log_2 r + (1 - p)\log_2 (s - r).$$

Solving this equation for $S(p, 1 - p)$ results in

$$\begin{aligned}
S(p, 1 - p) &= \log_2 s - p \log_2 r - (1 - p)\log_2 (s - r) \\
&= p \log_2 s - p \log_2 s + \log_2 s - p \log_2 r - (1 - p)\log_2 (s - r) \\
&= p \log_2 s - p \log_2 r + (1 - p)\log_2 s - (1 - p)\log_2 (s - r) \\
&= -p \log_2 \frac{r}{s} - (1 - p)\log_2 \frac{s - r}{s} \\
&= -p \log_2 p - (1 - p)\log_2 (1 - p).
\end{aligned}$$

(iv) We now extend (iii) to all real numbers $p \in [0, 1]$ with the help of the continuity axiom. Let $p$ be any number in the unit interval and let $p'$ run through a sequence of rational numbers that approach $p$ as a limit. Then,

$$S(p, 1 - p) = \lim_{p' \to p} S(p', 1 - p')$$

by the continuity axiom. Moreover,

$$\lim_{p' \to p} S(p', 1 - p') = \lim_{p' \to p} \left[-p' \log_2 p' - (1 - p')\log_2 (1 - p')\right] = -p \log_2 p - (1 - p)\log_2 (1 - p),$$

since all the functions involved are continuous.

(v) We now conclude the proof by showing that

$$S(p_1, p_2, \ldots, p_n) = -\sum_{i=1}^{n} p_i \log_2 p_i.$$

This is accomplished by induction on $n$. The result is proved in (ii) and (iv) for $n = 1, 2$, respectively. For $n \ge 3$, we may use the branching axiom to obtain

$$S(p_1, p_2, \ldots, p_n) = S(p_A, p_n) + p_A S\left(\frac{p_1}{p_A}, \frac{p_2}{p_A}, \ldots, \frac{p_{n-1}}{p_A}\right) + p_n S\left(\frac{p_n}{p_n}\right),$$

where $p_A = \sum_{i=1}^{n-1} p_i$. Since $S(p_n/p_n) = S(1) = 0$ by (ii), we obtain

$$S(p_1, p_2, \ldots, p_n) = S(p_A, p_n) + p_A S\left(\frac{p_1}{p_A}, \frac{p_2}{p_A}, \ldots, \frac{p_{n-1}}{p_A}\right).$$

By (iv) and assuming the proposition we want to prove to be true for $n - 1$, we may rewrite this equation as

$$\begin{aligned}
S(p_1, p_2, \ldots, p_n) &= -p_A \log_2 p_A - p_n \log_2 p_n - p_A \sum_{i=1}^{n-1} \frac{p_i}{p_A} \log_2 \frac{p_i}{p_A} \\
&= -p_A \log_2 p_A - p_n \log_2 p_n - \sum_{i=1}^{n-1} p_i \log_2 \frac{p_i}{p_A} \\
&= -p_A \log_2 p_A - p_n \log_2 p_n - \sum_{i=1}^{n-1} p_i \log_2 p_i + \sum_{i=1}^{n-1} p_i \log_2 p_A \\
&= -p_A \log_2 p_A - p_n \log_2 p_n - \sum_{i=1}^{n-1} p_i \log_2 p_i + p_A \log_2 p_A \\
&= -\sum_{i=1}^{n} p_i \log_2 p_i. \qquad \blacksquare
\end{aligned}$$

3.2.3. Basic Properties of the Shannon Entropy

The literature dealing with information theory based on the Shannon entropy is extensive. No attempt is made to give a comprehensive coverage of the theory in this book. However, the most fundamental properties of the Shannon entropy are surveyed.

First, a theorem is presented that plays an important role in classical information theory. This theorem is essential for proving some basic properties of Shannon entropy, as well as introducing some additional important concepts of classical information theory.

Theorem 3.2. The inequality

$$-\sum_{i=1}^{n} p_i \log_2 p_i \le -\sum_{i=1}^{n} p_i \log_2 q_i \tag{3.28}$$

is satisfied for all probability distributions $\langle p_i \mid i \in \mathbb{N}_n \rangle$ and $\langle q_i \mid i \in \mathbb{N}_n \rangle$ and for all $n \in \mathbb{N}$; the equality in (3.28) holds if and only if $p_i = q_i$ for all $i \in \mathbb{N}_n$.

Proof. Consider the function

$$s(p_i, q_i) = p_i (\ln p_i - \ln q_i) - p_i + q_i$$

for $p_i, q_i \in [0, 1]$. This function is finite and differentiable for all values of $p_i$ and $q_i$ except the pair $p_i = 0$ and $q_i \ne 0$. For each fixed $q_i \ne 0$, the partial derivative of $s$ with respect to $p_i$ is

$$\frac{\partial s(p_i, q_i)}{\partial p_i} = \ln p_i - \ln q_i.$$

That is,

$$\frac{\partial s(p_i, q_i)}{\partial p_i} \begin{cases} < 0 & \text{for } p_i < q_i \\ = 0 & \text{for } p_i = q_i \\ > 0 & \text{for } p_i > q_i \end{cases}$$

and, consequently, $s$ is a convex function of $p_i$, with its minimum at $p_i = q_i$. Hence, for any given $i$, we have

$$p_i (\ln p_i - \ln q_i) - p_i + q_i \ge 0,$$

where the equality holds if and only if $p_i = q_i$. This inequality is also satisfied for $q_i = 0$, since the expression on its left-hand side is $+\infty$ if $p_i \ne 0$ and $q_i = 0$, and it is zero if $p_i = 0$ and $q_i = 0$. Taking the sum of this inequality for all $i \in \mathbb{N}_n$, we obtain

$$\sum_{i=1}^{n} \left[p_i \ln p_i - p_i \ln q_i - p_i + q_i\right] \ge 0,$$

which can be rewritten as

$$\sum_{i=1}^{n} p_i \ln p_i - \sum_{i=1}^{n} p_i \ln q_i - \sum_{i=1}^{n} p_i + \sum_{i=1}^{n} q_i \ge 0.$$

The last two terms on the left-hand side of this inequality cancel each other out, as they both sum up to one. Hence,

$$\sum_{i=1}^{n} p_i \ln p_i - \sum_{i=1}^{n} p_i \ln q_i \ge 0,$$

which is equivalent to Eq. (3.28) when multiplied through by $1/\ln 2$. $\blacksquare$

This theorem, sometimes referred to as Gibbs' theorem, is quite useful in studying properties of the Shannon entropy. For example, the theorem can be used as follows for proving that the maximum of the Shannon entropy for probability distributions with $n$ elements is $\log_2 n$.

Let $q_i = 1/n$ for all $i \in \mathbb{N}_n$. Then, Eq. (3.28) yields

$$S(p_i \mid i \in \mathbb{N}_n) = -\sum_{i=1}^{n} p_i \log_2 p_i \le -\sum_{i=1}^{n} p_i \log_2 \frac{1}{n} = -\log_2 \frac{1}{n} \sum_{i=1}^{n} p_i = \log_2 n.$$

Thus, $S(p_i \mid i \in \mathbb{N}_n) \le \log_2 n$. The upper bound is obtained for $p_i = 1/n$ for all $i \in \mathbb{N}_n$.

Let us now examine Shannon entropies of joint, marginal, and conditional probability distributions defined on sets $X$ and $Y$. In agreement with a common practice in the literature dealing with the Shannon entropy, we simplify the notation in the rest of this section by using $S(X)$ instead of $S(p_X(x) \mid x \in X)$ or $S(p_1, p_2, \ldots, p_n)$. Furthermore, assuming $x \in X$ and $y \in Y$, we use symbols $p_X(x)$ and $p_Y(y)$ to denote marginal probabilities on sets $X$ and $Y$, respectively, the symbol $p(x, y)$ for joint probabilities on $X \times Y$, and the symbols $p(x \mid y)$ and $p(y \mid x)$ for the corresponding conditional probabilities. In this simplified notation for conditional probabilities, the meaning of each symbol is uniquely determined by the arguments shown in the parentheses.

Given two sets $X$ and $Y$, which may be viewed, in general, as state sets of random variables $X$ and $Y$, respectively, we can recognize the following three types of Shannon entropies:

1. A joint entropy defined in terms of the joint probability distribution on $X \times Y$,

$$S(X \times Y) = -\sum_{\langle x, y \rangle \in X \times Y} p(x, y) \log_2 p(x, y). \tag{3.29}$$

2. Two simple entropies based on marginal probability distributions:

$$S(X) = -\sum_{x \in X} p_X(x) \log_2 p_X(x), \tag{3.30}$$

$$S(Y) = -\sum_{y \in Y} p_Y(y) \log_2 p_Y(y). \tag{3.31}$$

3. Two conditional entropies defined in terms of weighted averages of local conditional entropies:

$$S(X \mid Y) = -\sum_{y \in Y} p_Y(y) \sum_{x \in X} p(x \mid y) \log_2 p(x \mid y), \tag{3.32}$$

$$S(Y \mid X) = -\sum_{x \in X} p_X(x) \sum_{y \in Y} p(y \mid x) \log_2 p(y \mid x). \tag{3.33}$$

In addition to these three types of Shannon entropies, the functional

$$T_S(X, Y) = S(X) + S(Y) - S(X \times Y) \tag{3.34}$$

is often used in the literature as a measure of the strength of the relationship (in the probabilistic sense) between elements of sets $X$ and $Y$. This functional is called an information transmission. It is analogous to the functional defined by Eq. (2.31) for the Hartley measure: it can be generalized to more than two sets in the same way.

It remains to examine the relationship among the various types of entropies and the information transmission. The key properties of this relationship are expressed by the next several theorems.

Theorem 3.3

$$S(X \mid Y) = S(X \times Y) - S(Y). \tag{3.35}$$

Proof

$$\begin{aligned}
S(X \mid Y) &= -\sum_{y \in Y} p_Y(y) \sum_{x \in X} p(x \mid y) \log_2 p(x \mid y) \\
&= -\sum_{y \in Y} p_Y(y) \sum_{x \in X} \frac{p(x, y)}{p_Y(y)} \log_2 \frac{p(x, y)}{p_Y(y)} \\
&= -\sum_{x \in X} \sum_{y \in Y} p(x, y) \log_2 \frac{p(x, y)}{p_Y(y)} \\
&= -\sum_{x \in X} \sum_{y \in Y} p(x, y) \log_2 p(x, y) + \sum_{x \in X} \sum_{y \in Y} p(x, y) \log_2 p_Y(y) \\
&= S(X \times Y) + \sum_{x \in X} \sum_{y \in Y} p(x, y) \log_2 p_Y(y) \\
&= S(X \times Y) + \sum_{y \in Y} \log_2 p_Y(y) \sum_{x \in X} p(x, y) \\
&= S(X \times Y) + \sum_{y \in Y} p_Y(y) \log_2 p_Y(y) \\
&= S(X \times Y) - S(Y). \qquad \blacksquare
\end{aligned}$$
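The entropies in Eqs. (3.29) to (3.33) and the identity of Theorem 3.3 can be evaluated on a small example. The sketch below is illustrative only: the 2 × 2 joint distribution `joint` and the helper names are assumptions, not taken from the text, and the conditional entropy is computed directly from Eq. (3.32) so that the comparison with Eq. (3.35) is not circular.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical joint distribution p(x, y): rows index x, columns index y.
joint = [[0.30, 0.10],
         [0.20, 0.40]]

# Marginal distributions p_X(x) and p_Y(y).
p_x = [sum(row) for row in joint]
p_y = [sum(col) for col in zip(*joint)]

s_joint = entropy([p for row in joint for p in row])   # Eq. (3.29)
s_x = entropy(p_x)                                     # Eq. (3.30)
s_y = entropy(p_y)                                     # Eq. (3.31)


def conditional_entropy_x_given_y(joint, p_y):
    """Eq. (3.32): average over y of the entropy of p(x | y)."""
    total = 0.0
    for j, py in enumerate(p_y):
        if py == 0:
            continue  # a y that never occurs contributes nothing
        local = entropy([row[j] / py for row in joint])
        total += py * local
    return total


s_x_given_y = conditional_entropy_x_given_y(joint, p_y)

# Theorem 3.3: both numbers should agree up to rounding error.
print(s_x_given_y, s_joint - s_y)
```

Swapping the roles of rows and columns gives the corresponding check for the conditional entropy of Y given X.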

The same theorem can obviously be proved for the conditional entropy of $Y$ given $X$ as well:

$$S(Y \mid X) = S(X \times Y) - S(X). \tag{3.36}$$

The theorem can be generalized to more than two sets. The general form, which can be derived from either Eq. (3.35) or Eq. (3.36), is

$$S(X_1 \times X_2 \times \cdots \times X_n) = S(X_1) + S(X_2 \mid X_1) + S(X_3 \mid X_1 \times X_2) + \cdots + S(X_n \mid X_1 \times X_2 \times \cdots \times X_{n-1}). \tag{3.37}$$

This equation is valid for any permutation of the sets involved.

Theorem 3.4

$$S(X \times Y) \le S(X) + S(Y). \tag{3.38}$$

Proof

$$S(X) = -\sum_{x \in X} p_X(x) \log_2 p_X(x) = -\sum_{x \in X} \sum_{y \in Y} p(x, y) \log_2 \sum_{y' \in Y} p(x, y'),$$

$$S(Y) = -\sum_{y \in Y} p_Y(y) \log_2 p_Y(y) = -\sum_{x \in X} \sum_{y \in Y} p(x, y) \log_2 \sum_{x' \in X} p(x', y),$$

$$\begin{aligned}
S(X) + S(Y) &= -\sum_{x \in X} \sum_{y \in Y} p(x, y) \left[\log_2 \sum_{y' \in Y} p(x, y') + \log_2 \sum_{x' \in X} p(x', y)\right] \\
&= -\sum_{\langle x, y \rangle \in X \times Y} p(x, y) \left[\log_2 p_X(x) + \log_2 p_Y(y)\right] \\
&= -\sum_{\langle x, y \rangle \in X \times Y} p(x, y) \log_2 \left[p_X(x) \cdot p_Y(y)\right].
\end{aligned}$$

By Gibbs' theorem we have

$$\begin{aligned}
S(X \times Y) &= -\sum_{\langle x, y \rangle \in X \times Y} p(x, y) \log_2 p(x, y) \\
&\le -\sum_{\langle x, y \rangle \in X \times Y} p(x, y) \log_2 \left[p_X(x) \cdot p_Y(y)\right] = S(X) + S(Y). \qquad \blacksquare
\end{aligned}$$
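The proof of Theorem 3.4 applies Gibbs' theorem with the product of the marginals in the role of $q$, so the gap between the two sides of Eq. (3.28) is exactly the information transmission of Eq. (3.34). The sketch below checks this numerically; the function names `transmission` and `gibbs_gap` and both example distributions are assumptions made only for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def marginals(joint):
    """Row sums give p_X(x); column sums give p_Y(y)."""
    return [sum(row) for row in joint], [sum(col) for col in zip(*joint)]


def transmission(joint):
    """Eq. (3.34): T_S(X, Y) = S(X) + S(Y) - S(X x Y)."""
    p_x, p_y = marginals(joint)
    s_joint = entropy([p for row in joint for p in row])
    return entropy(p_x) + entropy(p_y) - s_joint


def gibbs_gap(joint):
    """Right side minus left side of (3.28) with q(x, y) = p_X(x) * p_Y(y)."""
    p_x, p_y = marginals(joint)
    gap = 0.0
    for i, row in enumerate(joint):
        for j, p in enumerate(row):
            if p > 0:
                gap += p * math.log2(p / (p_x[i] * p_y[j]))
    return gap


# Hypothetical examples: an interactive and a noninteractive joint distribution.
dependent = [[0.30, 0.10],
             [0.20, 0.40]]
independent = [[a * b for b in (0.6, 0.4)] for a in (0.5, 0.5)]

print(transmission(dependent), gibbs_gap(dependent))
print(transmission(independent))
```

For the noninteractive example the transmission is zero up to rounding error, which corresponds to the equality case of Gibbs' theorem and to the additivity axiom (S6).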