Mikles J., Fikar M. Process Modelling, Identification, and Control


6.2 Identification from Step Responses 229

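$$
\begin{aligned}
\dot y(t) &= Z\,\frac{\omega_0}{P}\,e^{-\zeta\omega_0 t}\left[\zeta \sin(\omega_0 P t + \varphi) - P \cos(\omega_0 P t + \varphi)\right] \qquad (6.32)\\
&= Z\,\frac{\omega_0}{P}\,e^{-\zeta\omega_0 t}\sin(\omega_0 P t) \qquad (6.33)
\end{aligned}
$$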

where the formula for $\sin(a - \varphi)$ was used. The following holds at the local extrema

$$ \dot y(t_n) = 0 \iff \sin(\omega_0 P t_n) = 0 \;\Rightarrow\; t_n = \frac{n\pi}{\omega_0 P} \qquad (6.34) $$

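Substituting for $t_n$ into the process output equation (6.31) yields

$$
\begin{aligned}
y(t_n) &= Z\left[1 - \frac{1}{P}\,e^{-\frac{1}{P} n\pi\zeta}\sin(n\pi + \varphi)\right] \qquad (6.35)\\
&= Z\left[1 - (-1)^n e^{-\frac{1}{P}\pi\zeta n}\right] \qquad (6.36)\\
&= Z\left(1 - (-1)^n M^n\right), \quad M = e^{-\frac{1}{P}\pi\zeta} \qquad (6.37)
\end{aligned}
$$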
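The extremum formulas (6.34) and (6.37) can be checked numerically. The sketch below assumes that the output equation (6.31), which is not reproduced in this excerpt, has the standard underdamped form $y(t) = Z\left[1 - \frac{1}{P}e^{-\zeta\omega_0 t}\sin(\omega_0 P t + \varphi)\right]$ with $P = \sqrt{1-\zeta^2}$ and $\varphi = \arccos\zeta$; this form is consistent with (6.32) and (6.35). The function names and the numeric values are illustrative only.

```python
import math

def step_response(t, Z, zeta, omega0):
    """Underdamped second-order step response (assumed form of eq. 6.31)."""
    P = math.sqrt(1.0 - zeta**2)
    phi = math.acos(zeta)  # cos(phi) = zeta, sin(phi) = P
    return Z * (1.0 - math.exp(-zeta * omega0 * t) * math.sin(omega0 * P * t + phi) / P)

def extremum(n, Z, zeta, omega0):
    """Time (6.34) and value (6.37) of the n-th local extremum."""
    P = math.sqrt(1.0 - zeta**2)
    M = math.exp(-math.pi * zeta / P)
    t_n = n * math.pi / (omega0 * P)
    y_n = Z * (1.0 - (-1.0) ** n * M**n)
    return t_n, y_n

# Illustrative parameter values, not taken from the text
Z, zeta, omega0 = 0.33, 0.5, 0.25
for n in (1, 2, 3):
    t_n, y_n = extremum(n, Z, zeta, omega0)
    # The closed-form extremum value should match the response evaluated at t_n
    print(n, round(t_n, 3), round(y_n, 5), round(step_response(t_n, Z, zeta, omega0), 5))
```

Odd $n$ give maxima (overshoot above $Z$) and even $n$ give minima, which is what the identification procedure exploits.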

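The identification procedure is then as follows, where $[t_1, y_1]$ and $[t_2, y_2]$ denote the first maximum and the first minimum of the normalised step response:

1. $Z = y(\infty)$,
2. $y_1 = Z(1 + M)$, $y_2 = Z(1 - M^2)$ $\Rightarrow$ $M = \dfrac{y_1 - y_2}{y_1}$,
3. $M = e^{-\frac{1}{P}\pi\zeta}$ $\Rightarrow$ $\zeta = \dfrac{-\ln M}{\sqrt{\pi^2 + \ln^2 M}}$ (positive because $0 < M < 1$),
4. $t_1 = \dfrac{\pi}{\omega_0 P}$, $t_2 = \dfrac{2\pi}{\omega_0 P}$ $\Rightarrow$ $\omega_0 = \dfrac{\pi}{(t_2 - t_1)\sqrt{1 - \zeta^2}}$, $T = 1/\omega_0$.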

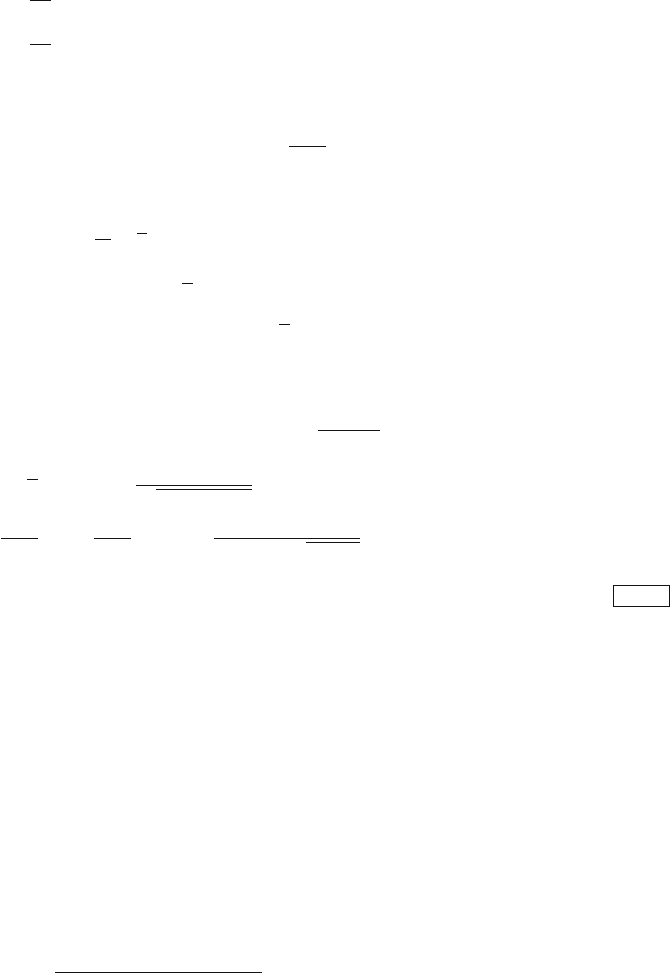

Example 6.2: Underdamped system


Consider the measured step response shown in the left part of Fig. 6.4. It was recorded starting from a steady state with the input variable at $u_0 = 0.2$, which was then changed to $u_\infty = -0.3$. Such a step response can be obtained, for example, from a U-tube manometer after a step change of the measured pressure.

The measured step response is first shifted to the origin by the value $y_0 = -2.3608$ and then normalised, i.e. divided by the step change of the input $\Delta u = 0.5$. The resulting normalised step response $y$ is shown by the solid line on the right side of Fig. 6.4.

The first maximum and minimum are found at $[15.00;\ 0.38]$ and $[30.50;\ 0.32]$, respectively. The identification procedure described above yields the estimated parameters $Z = 0.33$, $\zeta = 0.51$, and $T = 4.22$. The approximated transfer function is then of the form

$$ G(s) = \frac{0.33}{17.8084 s^2 + 4.3044 s + 1} $$

The step response $y_n$ of the approximated transfer function is shown by the dashed line on the right side of Fig. 6.4.
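The numbers of this example can be reproduced with a few lines of code. The following is a minimal sketch of steps 2 to 4 of the procedure, not the authors' implementation; the function name is hypothetical. Because $0 < M < 1$, $\ln M$ is negative, so the code uses $-\ln M$ to obtain a positive damping ratio. The denominator is formed as $T^2 s^2 + 2\zeta T s + 1$, which matches the printed coefficients ($4.22^2 = 17.8084$, $2 \cdot 0.51 \cdot 4.22 = 4.3044$).

```python
import math

def identify_underdamped(t1, y1, t2, y2):
    """Estimate zeta and T from the first maximum [t1, y1] and first minimum [t2, y2]."""
    M = (y1 - y2) / y1                                  # step 2
    ln_M = math.log(M)                                  # negative for 0 < M < 1
    zeta = -ln_M / math.sqrt(math.pi**2 + ln_M**2)      # step 3
    omega0 = math.pi / ((t2 - t1) * math.sqrt(1.0 - zeta**2))  # step 4
    return zeta, 1.0 / omega0

# Extrema read from the normalised response in Example 6.2
zeta, T = identify_underdamped(t1=15.00, y1=0.38, t2=30.50, y2=0.32)
denominator = (T**2, 2.0 * zeta * T, 1.0)   # coefficients of s^2, s, 1

print(round(zeta, 3), round(T, 3))
print([round(c, 3) for c in denominator])
```

The computed values agree with $\zeta = 0.51$ and $T = 4.22$ up to small differences, which are to be expected because the extrema in the text are printed to two decimal places.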

230 6 Process Identification

Fig. 6.4. Measured step response of an underdamped system (left). Normalised and approximated step response (right). Both panels plot $y$ against $t$; the right panel shows $y$ and $y_n$.

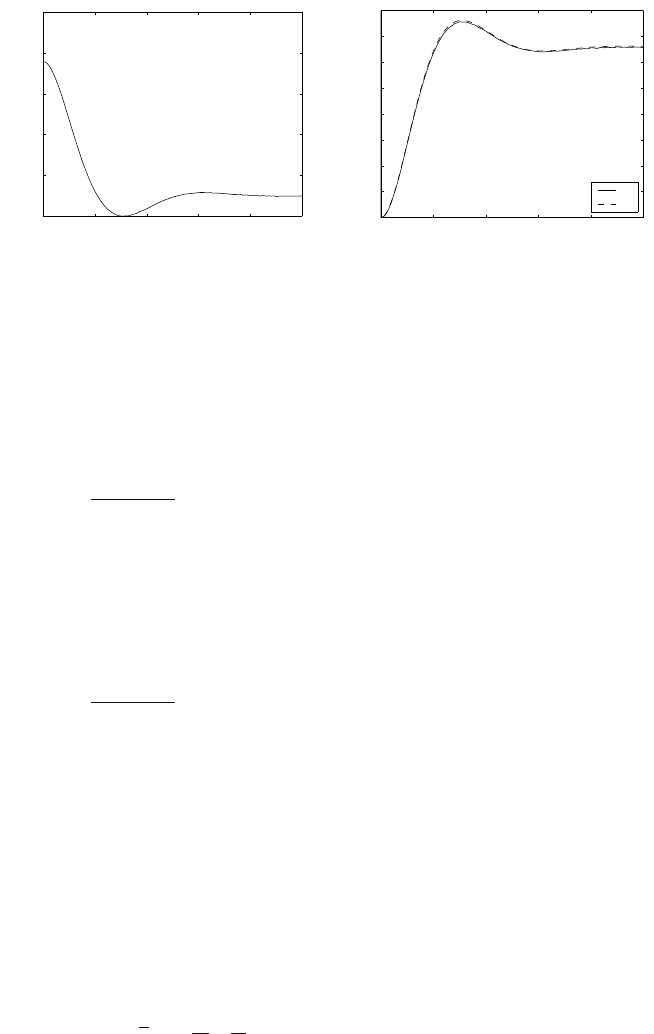

6.2.3 System of a Higher Order

Strejc Method

Consider an approximation of a process by the $n$th order system

$$ G(s) = \frac{Z}{(Ts+1)^n}\,e^{-T_d s} \qquad (6.38) $$

where $Z$ is the process gain, $T$ the time constant, $T_d$ the time delay, and $n$ the system order, all of which are to be estimated. Two process characteristics are determined from the step response: the times $T_n$, $T_u$ (see page 257) obtained from the tangent at the inflexion point.

Consider now the properties of the normalised step response if $T_d = 0$ (Fig. 6.5)

$$ G(s) = \frac{1}{(Ts+1)^n} \qquad (6.39) $$

The following facts will be used for the derivation:

1. The tangent of the step response at the inflexion point $[t_i, y_i]$ is given by the equation of a line $p$: $y = a + bt$,
2. The line $p$ passes through the points $[T_u, 0]$, $[T_u + T_n, 1]$, $[t_i, y_i]$. The following holds: $b = 1/T_n$ and $a = -T_u b$,
3. The slope of the line $p$ is given as $b = \dot y(t_i)$,
4. $\ddot y(t_i) = 0$ (inflexion point).

The step response can be obtained by means of the inverse Laplace transform and is given as

$$ y(t) = 1 - e^{-\frac{t}{T}} \sum_{k=0}^{n-1} \frac{1}{k!}\left(\frac{t}{T}\right)^k \qquad (6.40) $$

Fig. 6.5. Step response of a higher order system

We calculate the first and the second derivative of the output $y$ with respect to time

$$ \dot y(t) = \frac{1}{T^n (n-1)!}\, t^{n-1} e^{-\frac{t}{T}} \qquad (6.41) $$

$$ \ddot y(t) = \frac{1}{T^n}\left[\frac{t^{n-2}}{(n-2)!} - \frac{1}{T}\,\frac{t^{n-1}}{(n-1)!}\right] e^{-\frac{t}{T}} \qquad (6.42) $$

At the inflexion point $\ddot y(t_i) = 0$ holds, hence

$$ t_i = T(n-1) \qquad (6.43) $$

Evaluating $\dot y$ at time $t_i$ gives

$$ \dot y(t_i) = \frac{(n-1)^{n-1}}{T\,(n-1)!}\, e^{-(n-1)} \qquad (6.44) $$

It can be seen from the figure that $\dot y(t_i) = 1/T_n$, thus

$$ \frac{T}{T_n} = \frac{(n-1)^{n-1}}{(n-1)!}\, e^{-(n-1)} = g(n) \qquad (6.45) $$

We can see that this function depends only on $n$.

Further, it can be shown that the relation between $T_u$ and $T_n$ is again only a function of $n$

$$ \frac{T_u}{T_n} = e^{-(n-1)}\left[\frac{(n-1)^n}{(n-1)!} + \sum_{k=0}^{n-1} \frac{1}{k!}(n-1)^k\right] - 1 = f(n) \qquad (6.46) $$

The following table can then be constructed:

| $n$ | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| $f(n)$ | 0.000 | 0.104 | 0.218 | 0.319 | 0.410 | 0.493 |
| $g(n)$ | 1.000 | 0.368 | 0.271 | 0.224 | 0.195 | 0.175 |
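The table entries follow directly from (6.45) and (6.46), so they can be regenerated rather than looked up. The short sketch below evaluates both formulas; the function names `f` and `g` simply mirror the notation of the text. Evaluating (6.45) at $n = 6$ gives $g(6) \approx 0.175$.

```python
import math

def g(n):
    """T / T_n for n equal time constants, eq. (6.45)."""
    m = n - 1
    return m**m / math.factorial(m) * math.exp(-m)

def f(n):
    """T_u / T_n for n equal time constants, eq. (6.46)."""
    m = n - 1
    series = sum(m**k / math.factorial(k) for k in range(n))  # k = 0, ..., n-1
    return math.exp(-m) * (m**n / math.factorial(m) + series) - 1.0

for n in range(1, 7):
    print(n, f"{f(n):.3f}", f"{g(n):.3f}")
```

The same two functions can be used to extend the table to higher orders if a step response yields a quotient $T_u/T_n$ above the tabulated range.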

The identification procedure is then as follows:

1. The values of $Z = y(\infty)$, $T_{us}$, $T_n$ are read from the step response,
2. The quotient $f_s = T_{us}/T_n$ is calculated,
3. The degree $n_0$ is chosen from the table in such a way that the following holds: $f(n_0) \le f_s < f(n_0 + 1)$,
4. The time delay $T_d$ can be determined as the difference between the real and theoretical time $T_u$: $T_d = [f_s - f(n_0)]\,T_n$, because $T_u = T_n f(n)$,
5. The process time constant $T$ can be read from the row of $g(n)$ for the corresponding $n_0$. $T$ is obtained from the definition of $g$: $T = T_n\, g(n_0)$.

Broida method

The assumption that all time constants of the process are the same may not always hold. Broida considered the transfer function of the form

$$ G(s) = \frac{Z}{\prod_{k=1}^{n}\left(\frac{T}{k}s + 1\right)}\,e^{-T_d s} \qquad (6.47) $$

In the same way as in the Strejc method, the table for $f(n)$, $g(n)$ can be derived.

| $n$ | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| $f(n)$ | 0.000 | 0.096 | 0.192 | 0.268 | 0.331 | 0.385 |
| $g(n)$ | 1.000 | 0.500 | 0.440 | 0.420 | 0.410 | 0.400 |

The identification procedure is the same as with the Strejc method.

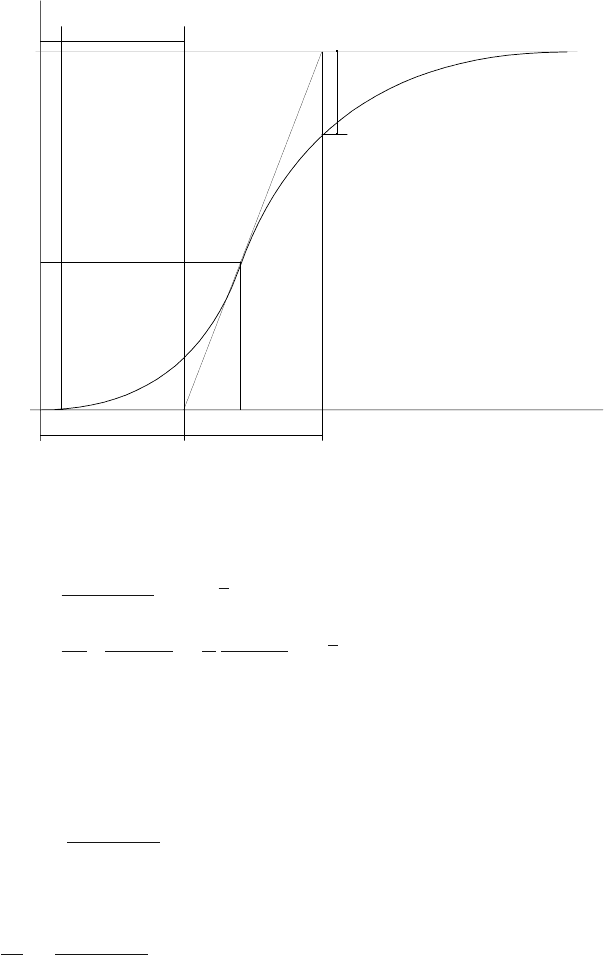

Example 6.3: Higher order system approximation


Consider again identification of the step response of the chemical reactor from Example 6.1. The inflexion point was determined, the tangent through it was drawn, and the times $T_u = 0.2451$ and $T_n = 1.9979$ were read (Fig. 6.6 left).

Both the Strejc and the Broida method were applied. The resulting transfer functions are

$$ G_{\text{Strejc}}(s) = \frac{0.027}{0.54 s^2 + 1.47 s + 1}\,e^{-0.037 s} $$

$$ G_{\text{Broida}}(s) = \frac{0.027}{0.5 s^2 + 1.5 s + 1}\,e^{-0.053 s} $$
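Both results can be retraced from the tabulated $f(n)$, $g(n)$ values and the five-step procedure. The sketch below is one possible implementation under stated assumptions: the helper names are hypothetical, the tables are truncated to the orders needed for the lookup, and the Broida time constants are taken as $T/k$ for $k = 1, \dots, n_0$, a reading of (6.47) that reproduces the printed denominator.

```python
import numpy as np

# Tabulated values from the text: f(1)..f(6) and g(1)..g(5)
F_STREJC = [0.000, 0.104, 0.218, 0.319, 0.410, 0.493]
G_STREJC = [1.000, 0.368, 0.271, 0.224, 0.195]
F_BROIDA = [0.000, 0.096, 0.192, 0.268, 0.331, 0.385]
G_BROIDA = [1.000, 0.500, 0.440, 0.420, 0.410]

def identify(T_u, T_n, f_table, g_table):
    """Return order n0, time constant T and delay T_d (steps 2 to 5)."""
    f_s = T_u / T_n
    for n0 in range(1, len(f_table)):
        if f_table[n0 - 1] <= f_s < f_table[n0]:
            T_d = (f_s - f_table[n0 - 1]) * T_n
            T = T_n * g_table[n0 - 1]
            return n0, T, T_d
    raise ValueError("f_s lies outside the tabulated range")

def denominator(time_constants):
    """Coefficients (descending powers of s) of the product of (tc*s + 1)."""
    den = np.array([1.0])
    for tc in time_constants:
        den = np.polymul(den, [tc, 1.0])
    return den

T_u, T_n = 0.2451, 1.9979   # values read in Example 6.3

n_s, T_s, Td_s = identify(T_u, T_n, F_STREJC, G_STREJC)
den_strejc = denominator([T_s] * n_s)

n_b, T_b, Td_b = identify(T_u, T_n, F_BROIDA, G_BROIDA)
den_broida = denominator([T_b / k for k in range(1, n_b + 1)])

print(n_s, np.round(den_strejc, 2), round(Td_s, 3))
print(n_b, np.round(den_broida, 2), round(Td_b, 3))
```

Both methods select a second order model here because $f_s = T_u/T_n \approx 0.123$ lies between $f(2)$ and $f(3)$ in either table.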

The step responses together with the original one are shown in Fig. 6.6 (right). Both methods approximate the process dynamics better than the first order transfer function. However, neither the Strejc nor the Broida method can capture the small overshoot, as both are suitable only for aperiodic, monotone step responses.

Fig. 6.6. Normalised step response of the chemical reactor with lines drawn to estimate $T_u$, $T_n$ (left), approximated step response (right). Both panels plot $x_1$ against $t$; the right panel compares the original, Strejc, and Broida responses.

6.3 Least Squares Methods

The most widely used methods nowadays are based on minimising the squares of errors between the measured and the estimated process output: the least squares (LS) method. Its main advantages include the possibility of recursive calculation, the ability to track time-variant parameters, and various modifications that make the algorithm robust. The method was originally derived for the estimation of parameters of discrete-time systems. We will also show its modification for continuous-time systems.

Consider the ARX model (see page 224)

$$ y(k) = -a_1 y(k-1) - \cdots - a_{n_a} y(k-n_a) + b_1 u(k-1) + \cdots + b_{n_b} u(k-n_b) + \xi(k) \qquad (6.48) $$

We will introduce the notation

$$ \theta^T = (a_1, \dots, a_{n_a}, b_1, \dots, b_{n_b}) $$

$$ z^T(k) = (-y(k-1), \dots, -y(k-n_a), u(k-1), \dots, u(k-n_b)) $$

Hence

$$ y(k) = \theta^T z(k) + \xi(k) \qquad (6.49) $$

The aim of identification is to determine the parameter vector $\theta$ from the measured process output $y(k)$ and the data vector $z(k)$.

Assume that equation (6.49) holds at times $1, \dots, k$

$$
\begin{aligned}
y(1) &= -\sum_{i=1}^{n_a} a_i y(1-i) + \sum_{i=1}^{n_b} b_i u(1-i) + \xi(1) = z^T(1)\theta + \xi(1)\\
y(2) &= -\sum_{i=1}^{n_a} a_i y(2-i) + \sum_{i=1}^{n_b} b_i u(2-i) + \xi(2) = z^T(2)\theta + \xi(2)\\
&\;\;\vdots\\
y(k) &= -\sum_{i=1}^{n_a} a_i y(k-i) + \sum_{i=1}^{n_b} b_i u(k-i) + \xi(k) = z^T(k)\theta + \xi(k)
\end{aligned}
\qquad (6.50)
$$

where $k > n_a + n_b$ and denote

$$ Y = \begin{pmatrix} y(1)\\ y(2)\\ \vdots\\ y(k) \end{pmatrix}, \quad \xi = \begin{pmatrix} \xi(1)\\ \xi(2)\\ \vdots\\ \xi(k) \end{pmatrix} \qquad (6.51) $$

$$ Z = \begin{pmatrix} z^T(1)\\ z^T(2)\\ \vdots\\ z^T(k) \end{pmatrix} \qquad (6.52) $$

We look for an estimate $\hat\theta$ that minimises the sum of squares of errors between the measured and modelled outputs, i.e.

$$ I(\theta) = \sum_{i=1}^{k} \left(y(i) - z^T(i)\theta\right)^2 \qquad (6.53) $$

This equation can be rewritten in vector notation

$$ I(\theta) = (Y - Z\theta)^T (Y - Z\theta) \qquad (6.54) $$

The minimum is found where the gradient of this function with respect to $\theta$ is equal to zero

$$ (Z^T Z)\hat\theta = Z^T Y \qquad (6.55) $$

If the matrix $Z^T Z$ is invertible, then

$$ \hat\theta = (Z^T Z)^{-1} Z^T Y \qquad (6.56) $$

The matrix $P = (Z^T Z)^{-1}$ is called the covariance matrix if the stochastic part has unit variance.

In general, the estimate $\hat\theta$ is unbiased (its expectation is equal to $\theta$) if $\xi(k)$ is white noise.
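As an illustration of (6.52) and (6.56), the sketch below stacks the data vectors $z^T(k)$ into a matrix and solves the least squares problem for a simulated first order ARX model. The model coefficients, the random input, and the helper name `arx_regressors` are assumptions made for this example. `numpy.linalg.lstsq` is used instead of forming $(Z^TZ)^{-1}$ explicitly, which is numerically preferable but gives the same estimate when $Z^TZ$ is invertible.

```python
import numpy as np

def arx_regressors(y, u, na, nb):
    """Stack rows z^T(k) and targets y(k) for every k with a full history."""
    start = max(na, nb)
    rows, targets = [], []
    for k in range(start, len(y)):
        past_y = [-y[k - i] for i in range(1, na + 1)]
        past_u = [u[k - i] for i in range(1, nb + 1)]
        rows.append(past_y + past_u)
        targets.append(y[k])
    return np.array(rows), np.array(targets)

# Noise-free data from y(k) = 0.8 y(k-1) + 0.5 u(k-1), i.e. a1 = -0.8, b1 = 0.5
rng = np.random.default_rng(0)
N = 200
u = rng.standard_normal(N)
y = np.zeros(N)
for k in range(1, N):
    y[k] = 0.8 * y[k - 1] + 0.5 * u[k - 1]

Zmat, Y = arx_regressors(y, u, na=1, nb=1)
theta_hat, *_ = np.linalg.lstsq(Zmat, Y, rcond=None)           # least squares solve
theta_normal = np.linalg.solve(Zmat.T @ Zmat, Zmat.T @ Y)      # normal equations (6.55)

print(theta_hat)   # estimates of (a1, b1)
```

Note the sign convention: because the ARX model carries $-a_1 y(k-1)$, the simulated coefficient $0.8$ corresponds to $a_1 = -0.8$.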

6.3.1 Recursive Least Squares Method

In recursive least squares (RLS), the estimated parameters are improved with each new data point. This means that the estimate $\hat\theta(k)$ can be obtained by some simple manipulations from the estimate $\hat\theta(k-1)$ based on the data available up to time $k-1$.

Characteristic features of recursive methods are as follows:

• Their requirements for computer memory are very modest as not all measured data up to the current time are needed.

• They form an important part of adaptive systems where actual controllers are based on current process estimates.

• They are easily modifiable for real-time data treatment and for time-variant parameters.

To better understand the derivation of a recursive identification method, let us consider the following example.

Example 6.4: Recursive mean value calculation

Consider a model of the form

$$ y(k) = a + \xi(k) $$

where $\xi(k)$ is a disturbance with a standard deviation of one. It is easy to show that the best estimate of $a$ in the least squares sense, based on information up to time $k$, is the mean of all measurements

$$ \hat a(k) = \frac{1}{k}\sum_{i=1}^{k} y(i) $$

This equation can be rewritten as

$$
\begin{aligned}
\hat a(k) &= \frac{1}{k}\left[\sum_{i=1}^{k-1} y(i) + y(k)\right] = \frac{1}{k}\left[(k-1)\hat a(k-1) + y(k)\right]\\
&= \hat a(k-1) + \frac{1}{k}\left[y(k) - \hat a(k-1)\right]
\end{aligned}
$$

The result says that the estimate of the parameter $a$ at time $k$ is equal to the estimate at time $k-1$ plus a correction term. The correction term depends linearly on the error between the new measurement $y(k)$ and its prediction $\hat a(k-1)$. The proportionality factor is $1/k$, which means that the magnitude of the changes decreases with increasing time as $\hat a(k-1)$ approaches the true value of $a$.

Similarly, it can be shown that the covariance matrix is given as $P(k) = 1/k$. This relation can be rewritten recursively as

$$ P(k) = \frac{P(k-1)}{1 + P(k-1)} $$
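The two recursions of this example fit into a few lines. The sketch below starts from the first measurement with $P(1) = 1$ and then applies $P(k) = P(k-1)/(1 + P(k-1))$ together with the mean update, using $P(k) = 1/k$ as the gain. The function name and the sample data are illustrative.

```python
def recursive_mean(samples):
    """Running mean via the recursive form; returns the estimate and P(k)."""
    it = iter(samples)
    a_hat = next(it)        # a_hat(1) = y(1)
    P = 1.0                 # P(1) = 1/1
    for y in it:
        P = P / (1.0 + P)               # P(k) from P(k-1), equals 1/k
        a_hat += P * (y - a_hat)        # correction by the prediction error
    return a_hat, P

data = [2.0, 4.0, 9.0, 1.0]
a_hat, P = recursive_mean(data)
print(a_hat, P)
```

Only the previous estimate and the scalar $P$ are stored between steps, which is the memory advantage mentioned for recursive methods.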

The following matrix inversion lemma will be used to derive the recursive least squares method.

Theorem 6.1. Assume that $M = A + BC^{-1}D$. If the matrices $A$, $C$ are nonsingular, then

$$ M^{-1} = A^{-1} - A^{-1}B(DA^{-1}B + C)^{-1}DA^{-1} \qquad (6.57) $$

Proof. Consider inversion of a matrix $X$

$$ X^{-1} = \begin{pmatrix} A & B\\ -D & C \end{pmatrix}^{-1} = \begin{pmatrix} P_1 & P_2\\ P_3 & P_4 \end{pmatrix} = P \qquad (6.58) $$

Because $XP = I$, multiplication of all submatrices gives

$$ AP_1 + BP_3 = I, \quad AP_2 + BP_4 = 0 \qquad (6.59) $$

$$ -DP_1 + CP_3 = 0, \quad -DP_2 + CP_4 = I \qquad (6.60) $$

We assume that $A$, $C$ are nonsingular. Hence

$$
\begin{aligned}
P_3 &= C^{-1}DP_1, & P_2 &= -A^{-1}BP_4\\
P_1 &= [A + BC^{-1}D]^{-1}, & P_4 &= [C + DA^{-1}B]^{-1}
\end{aligned}
\qquad (6.61)
$$

As it also holds that $PX = I$ we can write

$$ P_1 A - P_2 D = I \;\Rightarrow\; P_1 = A^{-1} + P_2 DA^{-1} \qquad (6.62) $$

Comparison of the last three equations yields the desired result.

If we consider $C = 1$, $B = b$, $D = b^T$ then the matrix inversion lemma yields

$$ M^{-1} = (A + bb^T)^{-1} = A^{-1} - A^{-1}b(b^T A^{-1} b + 1)^{-1} b^T A^{-1} \qquad (6.63) $$

where the term that has to be inverted is only a scalar.
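The rank-one form (6.63) is easy to verify numerically. The sketch below compares it with a direct inversion for a randomly generated matrix; the matrix size and the random seed are arbitrary choices, and $A$ is constructed to be symmetric positive definite only to guarantee that it is nonsingular.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 4
S = rng.standard_normal((n, n))
A = S @ S.T + n * np.eye(n)          # symmetric positive definite, hence nonsingular
b = rng.standard_normal((n, 1))      # column vector

A_inv = np.linalg.inv(A)
scalar = 1.0 / (b.T @ A_inv @ b + 1.0).item()     # only a scalar is inverted
M_inv_lemma = A_inv - scalar * (A_inv @ b) @ (b.T @ A_inv)
M_inv_direct = np.linalg.inv(A + b @ b.T)

print(np.max(np.abs(M_inv_lemma - M_inv_direct)))   # difference at rounding level
```

In the RLS derivation $A^{-1}$ plays the role of $P(k)$ and $b$ the role of $z(k+1)$, so no matrix inversion is needed at any step.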

To derive the RLS method, assume that the parameter estimate at time $k$, denoted by $\hat\theta(k)$ and given by (6.56), and the covariance matrix $P(k) = (Z^T(k)Z(k))^{-1}$ are known. The aim is to derive recursive relations for $\hat\theta(k+1)$ and $P(k+1)$. When the measurement at time $k+1$ is known, then

$$ Y(k+1) = \begin{pmatrix} Y(k)\\ y(k+1) \end{pmatrix}, \quad Z(k+1) = \begin{pmatrix} Z(k)\\ z^T(k+1) \end{pmatrix}, \quad Z^T(k+1) = \begin{pmatrix} Z^T(k) & z(k+1) \end{pmatrix} $$

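The covariance matrix $P(k+1)$ is given as

$$
\begin{aligned}
P(k+1) &= \left(Z^T(k+1)Z(k+1)\right)^{-1} \qquad (6.64)\\
&= \left[\begin{pmatrix} Z^T(k) & z(k+1) \end{pmatrix}\begin{pmatrix} Z(k)\\ z^T(k+1) \end{pmatrix}\right]^{-1} \qquad (6.65)\\
&= \left[Z^T(k)Z(k) + z(k+1)z^T(k+1)\right]^{-1} \qquad (6.66)\\
&= \left[P(k)^{-1} + z(k+1)z^T(k+1)\right]^{-1} \qquad (6.67)
\end{aligned}
$$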

Using the matrix inversion lemma yields

$$ P(k+1) = P(k) - P(k)z(k+1)\left[z^T(k+1)P(k)z(k+1) + 1\right]^{-1} z^T(k+1)P(k) \qquad (6.68) $$

Denote $\gamma(k+1) = [z^T(k+1)P(k)z(k+1) + 1]^{-1}$. This can be manipulated as

$$ \gamma(k+1) = 1 - \gamma(k+1)z^T(k+1)P(k)z(k+1) \qquad (6.69) $$

This relation will be used later.

Therefore, the update of the covariance matrix $P$ is given as

$$ P(k+1) = P(k) - \gamma(k+1)P(k)z(k+1)z^T(k+1)P(k) \qquad (6.70) $$

Derivation of a new parameter estimate $\hat\theta(k+1)$ is similar and makes use of (6.69), (6.70):

$$
\begin{aligned}
\hat\theta(k+1) &= P(k+1)Z^T(k+1)Y(k+1)\\
&= P(k+1)\begin{pmatrix} Z^T(k) & z(k+1) \end{pmatrix}\begin{pmatrix} Y(k)\\ y(k+1) \end{pmatrix}\\
&= P(k+1)\left[Z^T(k)Y(k) + z(k+1)y(k+1)\right]\\
&= \left[P(k) - \gamma(k+1)P(k)z(k+1)z^T(k+1)P(k)\right] \times \left[Z^T(k)Y(k) + z(k+1)y(k+1)\right]\\
&= \hat\theta(k) - \gamma(k+1)P(k)z(k+1)z^T(k+1)\hat\theta(k) + P(k)z(k+1)y(k+1)\\
&\quad - \gamma(k+1)P(k)z(k+1)z^T(k+1)P(k)z(k+1)y(k+1)\\
&= \hat\theta(k) - \gamma(k+1)P(k)z(k+1)z^T(k+1)\hat\theta(k)\\
&\quad + P(k)z(k+1)\left[1 - \gamma(k+1)z^T(k+1)P(k)z(k+1)\right]y(k+1)\\
&= \hat\theta(k) - \gamma(k+1)P(k)z(k+1)z^T(k+1)\hat\theta(k) + \gamma(k+1)P(k)z(k+1)y(k+1)\\
&= \hat\theta(k) + \gamma(k+1)P(k)z(k+1)\left[y(k+1) - z^T(k+1)\hat\theta(k)\right] \qquad (6.71)
\end{aligned}
$$

To conclude, equations for recursive least squares are given as

$$
\begin{aligned}
\varepsilon(k+1) &= y(k+1) - z^T(k+1)\hat\theta(k)\\
\gamma(k+1) &= \left[1 + z^T(k+1)P(k)z(k+1)\right]^{-1}\\
L(k+1) &= \gamma(k+1)P(k)z(k+1)\\
P(k+1) &= P(k) - \gamma(k+1)P(k)z(k+1)z^T(k+1)P(k)\\
\hat\theta(k+1) &= \hat\theta(k) + L(k+1)\varepsilon(k+1)
\end{aligned}
\qquad (6.72)
$$
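The five relations of (6.72) translate almost line by line into code. The following is a minimal sketch, assuming a simulated noise-free first order ARX process; the function name `rls_step`, the model coefficients, and the choice $P(0) = 10^6 I$ are illustrative and not part of the text.

```python
import numpy as np

def rls_step(theta, P, z, y):
    """One update of (6.72); theta and z are 1-D arrays, P is a square matrix."""
    eps = y - z @ theta                        # prediction error eps(k+1)
    Pz = P @ z
    gamma = 1.0 / (1.0 + z @ Pz)               # scalar gamma(k+1)
    L = gamma * Pz                             # gain vector L(k+1)
    P_new = P - gamma * np.outer(Pz, z @ P)    # covariance update
    return theta + L * eps, P_new

# Illustrative data: y(k) = 0.8 y(k-1) + 0.5 u(k-1), i.e. a1 = -0.8, b1 = 0.5
rng = np.random.default_rng(0)
N = 200
u = rng.standard_normal(N)
y = np.zeros(N)
for k in range(1, N):
    y[k] = 0.8 * y[k - 1] + 0.5 * u[k - 1]

theta = np.zeros(2)          # theta_hat(0) = 0
P = 1e6 * np.eye(2)          # P(0) = c I with a large constant c
for k in range(1, N):
    z = np.array([-y[k - 1], u[k - 1]])   # data vector z(k)
    theta, P = rls_step(theta, P, z, y[k])

print(theta)   # approaches (a1, b1)
```

Each step costs only matrix-vector products and one scalar division, and no past data need to be stored.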

Every recursive algorithm needs some initial conditions. In our case these are $\hat\theta(0)$ and $P(0)$. The value of $P(0)$ can be thought of as the uncertainty of the estimate $\hat\theta(0)$. Both initial conditions have some influence on the convergence properties of RLS. As will be shown below, the cost function minimised by RLS is not given by (6.53), but as

$$ I_{k+1}(\theta) = [\theta - \hat\theta(0)]^T P^{-1}(0)[\theta - \hat\theta(0)] + \sum_{i=1}^{k+1} [y(i) - z^T(i)\theta]^2 \qquad (6.73) $$

Compared with the original cost function, (6.73) includes a term characterising the effects of the initial conditions. To minimise these effects we usually choose $\hat\theta(0) = 0$ and $P(0) = cI$ where $c$ is some large constant, for example $10^5$ to $10^{10}$.
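The role of the initial conditions can be checked numerically. Setting the gradient of (6.73) to zero gives $[Z^TZ + P^{-1}(0)]\theta = P^{-1}(0)\hat\theta(0) + Z^TY$, and the sketch below compares the solution of this linear system with the result of running the recursion. The random regressors, the deliberately small $P(0) = 10I$, and the nonzero $\hat\theta(0)$ are assumptions chosen so that the influence of the initial conditions is visible.

```python
import numpy as np

rng = np.random.default_rng(2)
N, p = 50, 3
Zmat = rng.standard_normal((N, p))                     # rows are z^T(i)
Y = Zmat @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.standard_normal(N)

theta0 = np.array([0.3, 0.3, 0.3])                     # theta_hat(0), deliberately nonzero
P0 = 10.0 * np.eye(p)                                  # P(0), deliberately small

# Recursive least squares
theta, P = theta0.copy(), P0.copy()
for z, y in zip(Zmat, Y):
    Pz = P @ z
    gamma = 1.0 / (1.0 + z @ Pz)
    theta = theta + gamma * Pz * (y - z @ theta)
    P = P - gamma * np.outer(Pz, Pz)                   # valid because P is symmetric

# Direct minimiser of the cost function with the initial-condition term
P0_inv = np.linalg.inv(P0)
theta_direct = np.linalg.solve(Zmat.T @ Zmat + P0_inv, P0_inv @ theta0 + Zmat.T @ Y)
theta_plain = np.linalg.lstsq(Zmat, Y, rcond=None)[0]  # minimiser of (6.53)

print(np.max(np.abs(theta - theta_direct)))   # rounding level
print(np.max(np.abs(theta - theta_plain)))    # small but nonzero
```

With a larger constant $c$ in $P(0) = cI$ the second difference shrinks, which is the reason for the recommended choice of a large $c$.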

For completeness we will give the proof of the above mentioned statements.

Theorem 6.2. Minimisation of the cost function (6.73) leads to (6.72).

Proof. The cost function (6.73) can be rewritten in vector notation

$$
\begin{aligned}
I_{k+1}(\theta) &= [\theta - \hat\theta(0)]^T P^{-1}(0)[\theta - \hat\theta(0)]\\
&\quad + [Y(k+1) - Z(k+1)\theta]^T [Y(k+1) - Z(k+1)\theta]
\end{aligned}
\qquad (6.74)
$$

Setting its partial derivative with respect to $\theta$ equal to zero gives

$$ [Z^T(k+1)Z(k+1) + P^{-1}(0)]\theta = P^{-1}(0)\hat\theta(0) + Z^T(k+1)Y(k+1) \qquad (6.75) $$

Data up to time $k+1$ gives $\hat\theta(k+1)$, thus

$$ \hat\theta(k+1) = [Z^T(k+1)Z(k+1) + P^{-1}(0)]^{-1}[P^{-1}(0)\hat\theta(0) + Z^T(k+1)Y(k+1)] \qquad (6.76) $$

Recursion of (6.67) gives the relation

$$ P^{-1}(k+1) = P^{-1}(0) + Z^T(k+1)Z(k+1) \qquad (6.77) $$

and for the estimate of the parameters $\hat\theta(k+1)$ holds

$$
\begin{aligned}
\hat\theta(k+1) &= P(k+1)[P^{-1}(0)\hat\theta(0) + Z^T(k+1)Y(k+1)] \qquad (6.78)\\
&= P(k+1)[P^{-1}(0)\hat\theta(0) + Z^T(k)Y(k) + z(k+1)y(k+1)] \qquad (6.79)
\end{aligned}
$$

Equation (6.76) shifted to time k is given as