Korb K.B., Nicholson A.E. Bayesian Artificial Intelligence

Подождите немного. Документ загружается.

Property 1: Domain knowledge (first-order knowledge), which can be measured

by the frequency with which one is inclined to correctly assert

or

— i.e., by predictive accuracy. For example, in sports betting the

more often you can identify the winning team, the better off you are.

Property 2: Calibration (meta-knowledge), the tendency of the bettor to put

close to the objective probability (or, actual frequency). That

betting reward is maximized by perfect calibration is proven as Theorem 6.1.2

in Cover and Thomas’s Elements of Information Theory [60]

.

With Property 1 comes a greater ability to predict target states; with Property 2 comes

an improved ability to assess the probability that those predictions are in error. These

two are not in a trade-off relationship: they can be jointly maximized.

If we happen to have in hand the true probability distribution over the target vari-

ables, then we can use Kullback-Leibler divergence (from

3.6.5) to measure how

different the model’s posterior distribution is from the true distribution. That is, we

can use

(10.3)

This is just the expected log likelihood ratio:

(10.4)

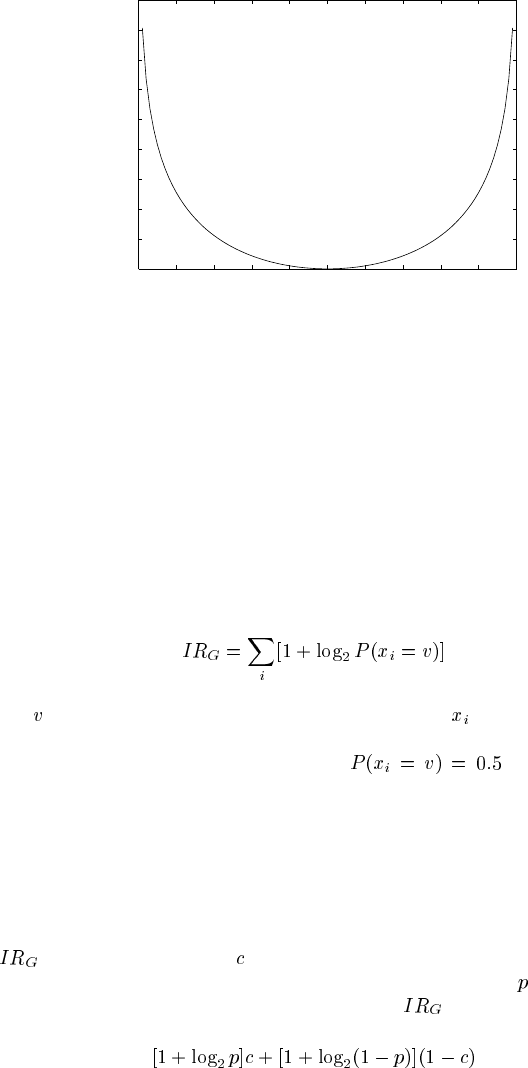

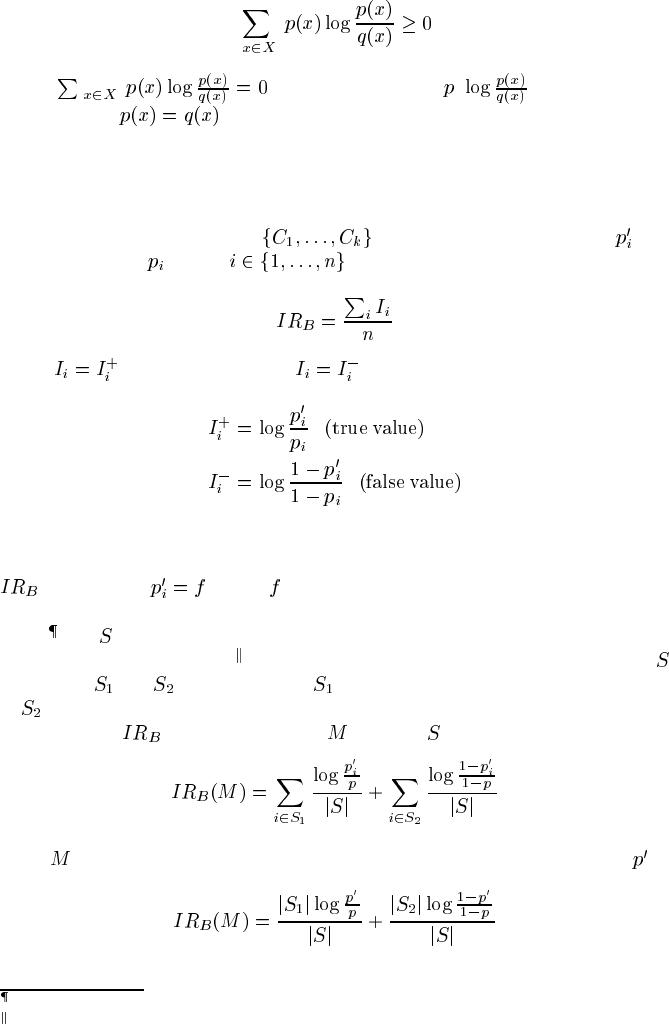

Kullback-Leibler divergence is a measure which has the two properties of betting

reward in reverse: that is, by minimizing KL divergence you maximize betting re-

ward, and vice versa. Consider, the KL divergence from the binomial distribution

for various , as in Figure 10.5. Clearly, this KL divergence is

minimized when

. This is proved for all positive distributions in Technical

Notes

10.8. Of course, implies that the model is perfectly calibrated. But it

also implies Property 1 above: clearly there is no more domain knowledge to be had

(disregarding causal structure, at any rate) once you have exactly the true probability

distribution in the model.

KL divergence is the right measure when there is a preferred “point of view” —

when the true probability distribution is known,

in equation (10.3), then KL di-

vergence reports how close a model’s distribution

is to the generating distribution.

This provides an evaluation measure for a model that increases monotonically as

the model’s distribution diverges from the truth. The drawback to KL divergence is

simply that when assessing models for real-world processes, the true model is nec-

essarily unknown. Were it known, we would have no need to collect observational

data and measure KL divergence in the first place. In consequence, we want some

This is recognized in David Lewis’s “Principal Principle,” which asserts that one’s subjective probability

for an event, conditioned upon the knowledge of a physical probability of that event, should equal the

latter [171].

© 2004 by Chapman & Hall/CRC Press LLC

0

2

4

6

8

10

12

14

16

18

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

q

KL divergence

FIGURE 10.5

KL divergence.

other performance measure, sharing with KL the same two properties of being op-

timized with maximal domain knowledge and calibration, while not depending on

some privileged access to the truth.

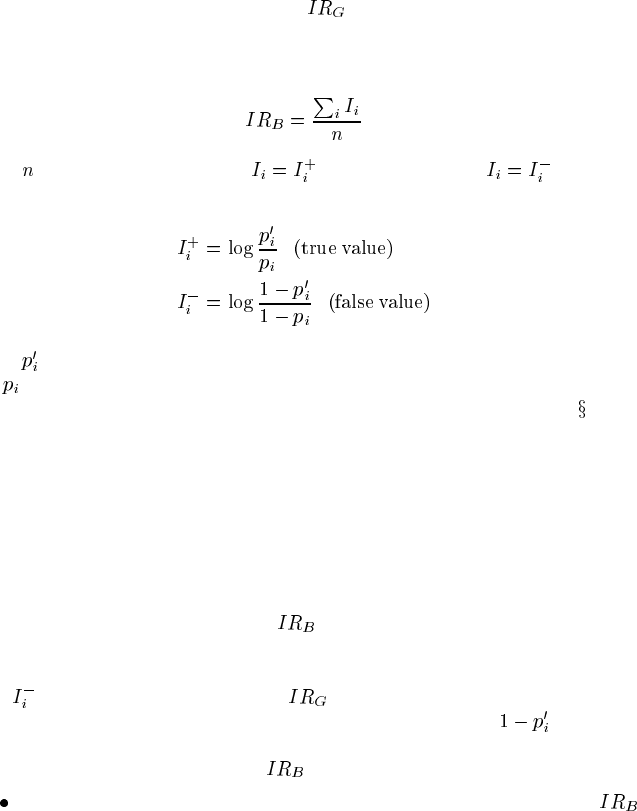

10.5.4 Information reward

Information reward, due to I.J. Good [95], is just such a measure. Good invented

it as a cost neutral assessment of gambling expertise for binomial prediction tasks.

Good’s definition is:

(10.5)

where

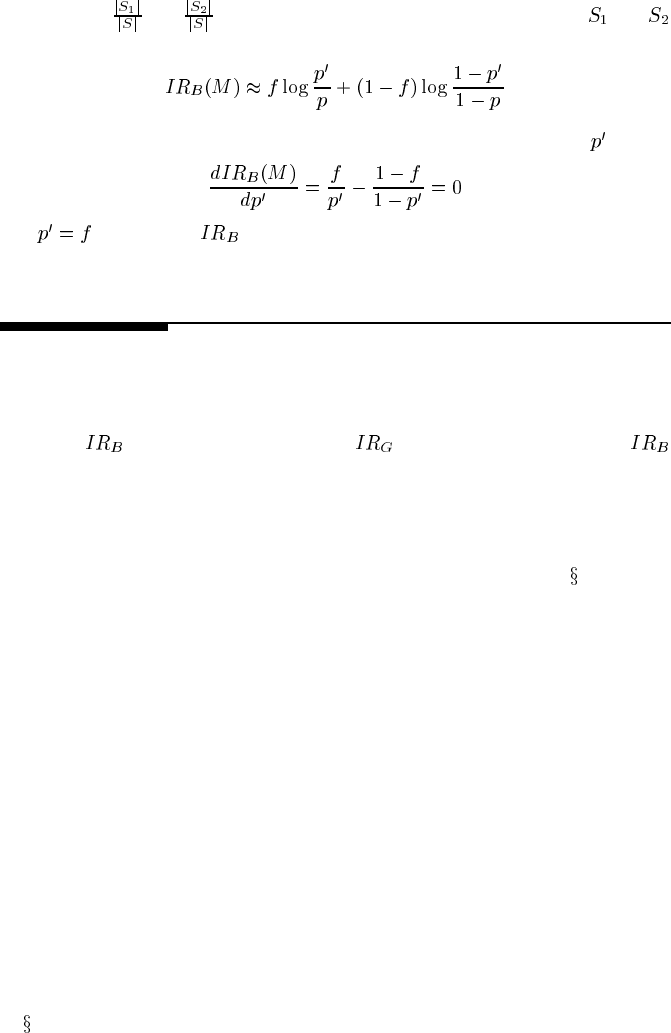

is whichever truth value is correct for the case of . Good introduced the

constant 1 in order to fix the reward in case of prior ignorance to zero, which he

assumed would be represented by predicting

. This reward has

a clear and useful information-theoretic interpretation (see Figure 10.6): it is one

minus the length of a message conveying the actual event in a language efficient for

agents with the model’s beliefs (i.e., a language that minimizes entropy for them).

Note that such a message is infinite if it is attempting to communicate an event that

has been deemed to be impossible, that is, to have probability zero. Alternatively,

message length is minimized, and information reward maximized, when the true

class is predicted with maximum probability, namely 1. This gives us Property 1.

also has Property 2. Let be the chance (physical probability, or its stand-in,

frequency in the test set) that some test case is in the target class and

the probability

estimated by the machine learner. Then the expected

is:

© 2004 by Chapman & Hall/CRC Press LLC

-3.5

-3

-2.5

-2

-1.5

-1

-0.5

0

0.5

1

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

reward

probability

FIGURE 10.6

Good’s information reward.

To find the maximum we take the derivative with respect to

and set it to zero:

which last equation reports that perfect calibration maximizes reward.

All of this has some interesting general implications for machine learning research

and evaluation methodology. For one thing, machine learning algorithms might be

usefully modified to monitor their own miscalibration and use that negative feed-

back to improve their calibration performance while also building up their domain

knowledge.

In experimental work, we have shown that standard machine learning problems

issue differing verdicts as to which algorithm or model is best, when those verdicts

are based on information reward instead of predictive accuracy [157]. In other words,

reliance upon the predictive accuracy metric is demonstrably causing errors in the

judgments of performance showing up in the machine learning literature.

10.5.5 Bayesian information reward

There are two respects in which Good’s information reward is deficient, leading to

potential errors in evaluating models. The first is that

assumes a uniform prior

probability over the target classes. If the base rate for a disease, for example, is far

© 2004 by Chapman & Hall/CRC Press LLC

lower than 50% in a population, say 1%, then a model which reflects no understand-

ing of the domain at all — beyond that base rate — can accumulate quite a high

information reward simply by predicting a 1% chance of disease for all future cases.

Rather than Good’s intended zero reward for ignorance, we have quite a high reward

for ignorance. As a Bayesian reward function, we propose one which takes the prior

into account and, in particular, which rewards with zero any prediction at the prior

probability for the target class.

The second deficiency is simply that

is limited to binomial predictions.

Bayesian networks are very commonly applied to more complex prediction prob-

lems, so we propose the Bayesian information reward which applies to multino-

mial prediction:

(10.6)

where

is the number of test cases, for the true class and otherwise,

and

where is the model’s probability for the variable taking the particular value at issue

and

is the prior probability of the variable taking that value. Note that this version

of information reward is directly related to Kullback-Leibler divergence (

3.6.5),

where the prior probability takes the role of the reference probability, but with two

differences. First, the weighting is done implicitly by the frequency with which the

different values arise in the test set, presumably corresponding to the prior proba-

bility of the different values. Second, the ratio between the model probability and

the prior is inverted, to reflect the idea that this is a reward for the model to diverge

from the reference prior, rather than to converge on it — so long as that divergence

is approaching the truth more closely than does the prior. This latter distinction, re-

warding divergence toward the truth and penalizing divergence away from the truth,

is enforced by the distinction between

for true value and for false values.

This generalizes Good’s information reward to cover multinomial prediction by

introducing a reward value for values which the variable in the test case fails to take

(i.e.,

). In the case of the binomial this was handled implicitly, since the

probability of the alternative to the true value is constrained to be

.However,

for multinomials there is more than one alternative to the true value and they must be

treated individually, as we do above.

has the following meritorious properties:

If a model predicts any value with that value’s prior probability, then the

is zero. That is, ignorance is never rewarded. This raises the question of where

the priors for the test cases should come from. The simplest, and usually

satisfactory, answer is to use the frequency of the value for the variable within

the training cases.

© 2004 by Chapman & Hall/CRC Press LLC

Property 1 is satisfied: as predictions become more extreme, close to one or

zero, for values which are, respectively, true or false, the reward increases

asymptotically to one. As incorrect predictions become more extreme, the

negative reward increases without bound. Both of these are strictly in accord

with the information-theoretic interpretation of the reward.

Property 2 is satisfied: is maximized under perfect calibration (see 10.8).

10.6 Summary

Sensitivity analysis can be applied to model parameters (utilities and conditional

probability tables) as well as inputs (observations) to determine the impact of large or

small changes to decisions and/or query nodes. It is well suited to obtaining feedback

from a domain expert and can be used throughout the prototyping development of

Bayesian networks. Case-based evaluation is again well suited to obtaining expert

judgment on how a particular set of cases is handled by the model. Explanation

methods can be applied both to enhance user understanding of models and to support

their initial development.

Validation methods are applicable when a reasonable set of observational samples

are available for the domain. In evaluating Bayesian networks the causal structure

needs to be taken into account explicitly, since the validation metrics do not do so

of their own accord. A plausible approach is to examine the probability distribution

over a subnetwork of query and evidence nodes. Four validation methods are:

Predictive accuracy. This is the most commonly used and generally the easiest

to compute. It can provide a biased assessment in that it fails to take into

account the probabilistic nature of prediction; in particular, it fails to penalize

miscalibration.

Expected value, or cost-sensitive methods. This is the most relevant and effec-

tive evaluation metric for any particular domain, so long as the costs associated

with misclassification and the benefits for correct classification are available.

Kullback-Leibler divergence. This is an idealized metric, one which requires

that the true distribution is already known. It illustrates the virtues which we

would like a good metric to have.

Bayesian information reward. This is a metric which has those virtues, but

does not require prior knowledge of the truth. It is suboptimal in that it is

entirely insensitive to the utilities of (mis)classification; however, it will de-

liver a generally useful assessment of the betting adequacy of the model for

the domain.

© 2004 by Chapman & Hall/CRC Press LLC

10.7 Bibliographic notes

Coup ’s Ph.D. thesis [55] provides a very thorough coverage of the issues involved

in undertaking sensitivity analysis with respect to parameters, for both Bayesian net-

works and decision networks.

For an example of recent work on cost-sensitive classification, see papers by

Turney [276] and Provost [224]; there was also some early work by Pearl [214].

Kononenko and Bratko also criticize predictive accuracy for evaluating algorithms

and models [155]; furthermore, they too offer a scoring function which takes the prior

probabilities of target classes into account. Unfortunately, their metric, while begin-

ning with an information-theoretic intuition, does not have a proper information-

theoretic interpretation. See our [110] for a criticism of the Kononenko-Bratko met-

ric (however, please note that this paper’s metric itself contains an error;

in the

text is a further improvement).

10.8 Technical notes

Kullback-Leibler divergence

We prove that KL divergence is minimized at zero if and only if the model under

evaluation is perfectly calibrated (restricting our consideration to strictly positive

distributions).

Theorem 10.1 Let

and of Equation 10.3 be strictly positive probability distribu-

tions. Then

and if and only if .

Proof. (See [25, A.15.1].)

First, a lemma:

(10.7)

This is because (since )

Since,

© 2004 by Chapman & Hall/CRC Press LLC

it follows that

(10.8)

And if

, then by positivity of , must everywhere

be zero; hence

everywhere.

Bayesian information reward

We provide a restricted proof that Bayesian information reward is maximized

when the estimated probabilities are calibrated. The Bayesian information reward

for a classification into classes

with estimated probabilities and

prior probabilities

,where ,is

(10.9)

where

for the true class and otherwise, and

Theorem 10.2 Given a batch classification learner (which does not alter its proba-

bility estimates after seeing the training data) and a binary classification problem,

is maximal if ,where is the frequency of the target class in the test set.

Proof

.Let be a test set such that all data items have the same attributes (other than

the binary target class value).

To the classifier these items are indistinguishable.

is split into and with all items in belonging to the target class and all items

in

belonging to the complement class.

The average

for machine learner on items is:

(10.10)

Since

is a batch machine learner, it will respond with the same probability to

each item. So,

(10.11)

With assistance from Lucas Hope.

If the actual test set is not of this kind, we can partition it so that each subset is. Since the theorem holds

for each subset, it will also hold for the union.

© 2004 by Chapman & Hall/CRC Press LLC

The fractions

and approximate the true probabilities of being in and

respectively, so replacing these we get:

(10.12)

Since we want to find the maximum reward, differentiate with respect to

and set

to 0:

(10.13)

thus

for maximal .

10.9 Problems

Problem 1

Prove that

is a proper generalization of — in other words, prove that

in the binomial case is equivalent to Good’s information reward (assuming that the

prior probability is uniform).

Problem 2

Start with the decision network for Julia’s manufacturing problem from

4.9. In case

it isn’t already, parameterize it so that the decision (between production, marketing,

further development) is relatively insensitive to the exact probability of a high vs. low

product quality. Then reparameterize it so that the decision is sensitive to product

quality. Comment on these results.

Problem 3

In Table 9.4 we gave a utility function for the missing car problem, for the decision

network shown in Figure 9.17, with expected utility results in Table 9.5. Investigate

the effects on the decision in the following situations:

1. The same relative ordering of outcome preferences is kept, but the quantitative

numbers are all made positive.

2. The preference ordering is changed so that most priority is given to not wasting

police time.

3. The rate of car theft in the area is much higher, say 1 in 500.

Problem 4

In

10.3.1 we described how it is possible to compute the entropy reduction due to

evidence about a pair of observations. Write code to do this using the Netica API.

© 2004 by Chapman & Hall/CRC Press LLC

11

KEBN Case Studies

11.1 Introduction

In this chapter we describe three applications of Bayesian networks. These are, in

particular, applications we were involved in developing, and we choose them because

we are familiar with the history of their development, including design choices illus-

trating KEBN (or not), which otherwise must go unreported.

11.2 Bayesian poker revisited

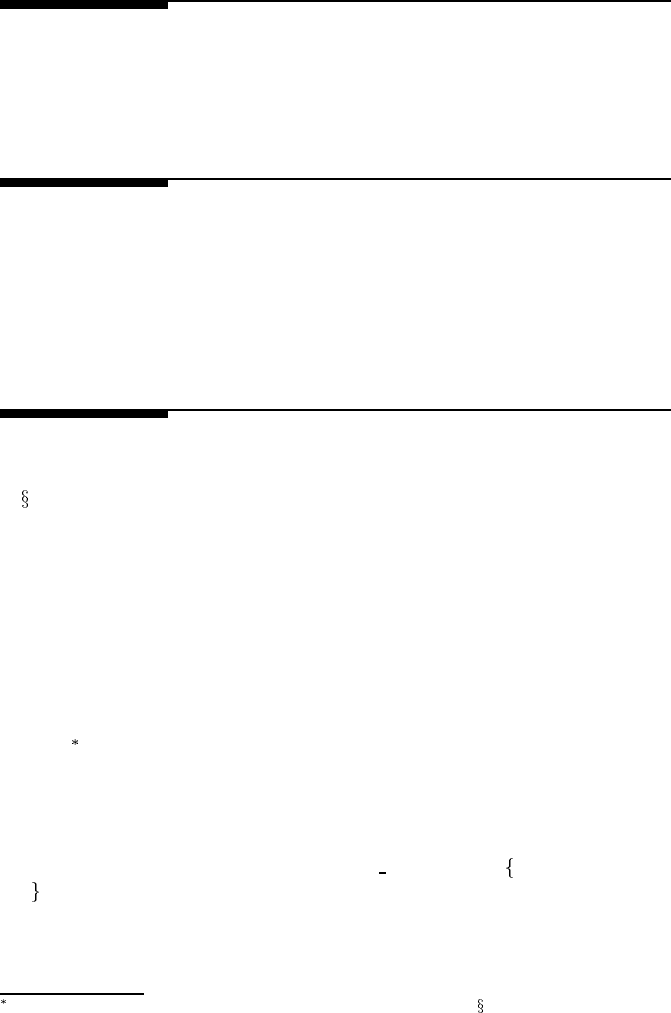

In 5.3, we described a Bayesian decision network for the game of 5-card stud poker.

The details of the network structure and betting strategies used by the Bayesian Poker

Player (BPP) are a more recent version of a system that has been evolving since

1993. Here we present details of that evolution as an interesting case study in the

knowledge engineering of Bayesian networks.

11.2.1 The initial prototype

The initial prototype developed in 1993 [132] used a simple polytree structure. This

was largely for pragmatic reasons, as the message passing algorithms for polytrees

were simple to implement, and at that time, BN software tools were not widely

available

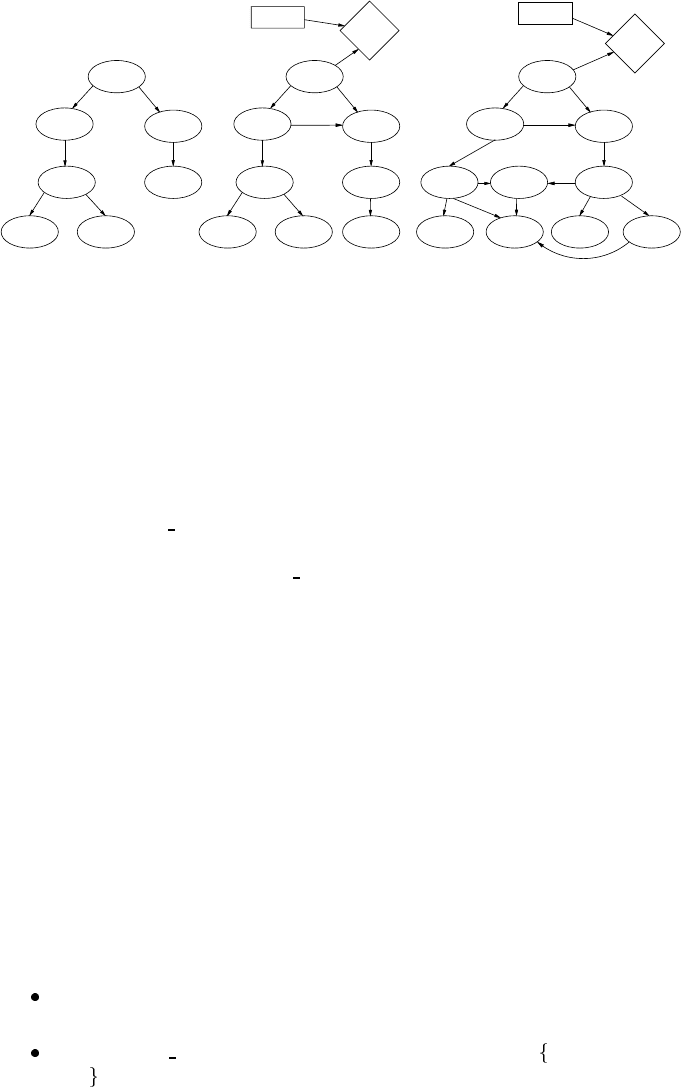

. The original network structure is shown in Figure 11.1(a). The polytree

structure meant that we were making the (clearly incorrect) assumption that the final

hand types are independent.

In the initial version, the nodes representing hand types were initially given values

which divided hands into the nine categories. The size of the model was also limited

by compressing the possible values for the OPP

Action node to pass/call, bet/raise,

fold

. This produced a level of play comparable only to a weak amateur. Since

any busted hands, for example, were treated as equal to any other, BPP would bet

inappropriately strongly on middling busted hands and inappropriately weakly on

Early non-commercial tools included IDEAL [266] and Caben [58]; see B.2 for a brief history of BN

software development.

© 2004 by Chapman & Hall/CRC Press LLC

(b) (c)(a)

BPP_Win

BPP_Final

OPP_Final

OPP_Current

BPP_Current

OPP_Upcards

OPP_Action OPP_Action

OPP_Current

OPP_Final

BPP_Win

BPP_Action

Winnings

BPP_Final

OPP_Upcards

BPP_Current

BPP_Upcards

OPP_Current

OPP_Upcards

OPP_Action

Bluffing

OPP_Final

BPP_Final

BPP_Current

BPP_Action

BPP_Upcards

BPP_Win

BPP_Action

Winnings

FIGURE 11.1

The evolution of network structures for BPP: (a) polytree used for both 1993 and

1999 version; (b) 2000 decision network version; (c) proposed new structure 2003.

Ace-high hands. The lack of refinement of paired hands also hurt its performance.

Finally, the original version of BPP was a hybrid system, using a BN to estimate

the probability of winning, and then making the betting decision using randomized

betting curves, which were hand constructed. Bluffing was introduced as a random

low probability (5%) of bluffing on any individual bet in the final round. The prob-

abilities for the OPP

Action node were adapted over time based on observations of

opponents’ actions. Information about the opponent’s betting actions in different

situations was used to learn the OPP

Action CPT.

11.2.2 Subsequent developments

During 1995, alternative granularities for busted and pair hand types were investi-

gated [274]. At this stage, we introduced an automatic opponent that would estimate

its winning probability by dealing out a large sample of final hands from the current

situation, and then use simple rules for betting.

In the 1999 version [160] we kept the same polytree structure, but opted for a

modest refinement of the hand types, moving from the original 9 hand types to 17, by

subdividing busted hands and pairs into low (9 high or lower), medium (10 or J high),

queen, king and ace. In this version, the parameters for the betting curves were found

using a stochastic greedy search. This version of BPP was evaluated experimentally

against: a simple rule-based system; a program which depends exclusively on hand

probabilities (i.e., without opponent modeling); and human players with experience

playing poker.

A number of individual changes to the system were investigated individually dur-

ing 2000 [38]. These changes included:

Expansion of the hand type granularity from 17 to 24, by explicitly including

all 9 different pair hands.

Allowing OPP Action to take all four different action values, fold, pass, call,

raise

.

© 2004 by Chapman & Hall/CRC Press LLC