Bunt H., Beun R.-J., Borghuis T. (eds.) Multimodal Human-Computer Communication. Systems, Techniques, and Experiments

Подождите немного. Документ загружается.

Object Reference in Task-Oriented Keyboard Dialogues 291

communication that was used. In order to type as few words as possible, typists

omitted determiners.

Since the latter group of misunderstandings was a direct result of the available

modalities of communication, they can be omitted from the comparison between

keyboard and spoken dialogues. The percentage of misunderstandings due to

focus then becomes 77% (13 out of 17 cases), which is close to the 83% found in

spoken dialogues.

To summarize, more or less the same percentage of focus-related misunder-

standings occurred in keyboard dialogues as in spoken dialogues. However, the

total percentage of misunderstandings in keyboard dialogues was greater since

more misunderstandings occurred due to mistakes and, most importantly, due

to omitting determiners in descriptions. This result stresses the importance of

determiners as providing information about the accessibility of a referent (see

Piwek, Beun and Cremers, 1995).

5 Discussion and Conclusions

The differences between the uses of referential expressions and gestures in key-

board and spoken dialogues can be explained to a large extent by the differences

in the respective experimental paradigms as illustrated by the DENK triangle.

A direct consequence of typing rather than speaking is the length of the ref-

erential expressions used. Since it takes more effort to type than to speak, fewer

words were used in referential expressions in keyboard dialogues. However, since

the difference not very great, the largest reduction of words occurred in the

non-referential parts of the utterances. Furthermore, participants in keyboard

dialogues were not found to use fewer gestures than those in spoken dialogues.

The total number of gestures was the same, although the distribution over ac-

companying features was different. These results may be domain-dependent,

since objects that are more difficult to describe are expected to be pointed at

more often.

The difference found in the distribution of gestures is a direct consequence

of the difficulty to coordinate verbal and non-verbal information in keyboard

dialogues. Since it is not possible to gesture and type at the same time, hardly any

occurrences of short referential expressions were found, such as demonstratives

or absolute features only. In spoken dialogues the demonstratives and absolute

features that accompanied gestures can be said to have the function of either

attracting the attention of the partner to an area in the domain or of keeping

the conversation flowing by avoiding silences. In keyboard dialogues the latter

function is not prevalent, since there is less time pressure (Beun and Bunt, 1987).

Participants in keyboard dialogues do not have the possibility to apply the former

function, i.e. to attract attention. However, these participants were observed to

point with more emphasis, i.e. repeatedly or for a longer period than participants

in spoken dialogues did. The emphasis can be interpreted as a means to make

sure that the partner has observed the gesture.

292 Anita Cremers

A second consequence related to the coordination of modalities is the fact that

typing and simultaneously inspecting the domain was difficult. This resulted in

difficulty in keeping track of the current focus area. This difficulty was reflected

in the same number of references to objects in focus and to objects out of focus

(in spoken dialogues far more references to objects in focus occurred).

As a consequence of the difficulty to change turns in keyboard dialogues, fewer

verbal turns took place. However, the loss of verbal turns was compensated by

more non-verbal turns. There was no indication that more information was given

in the first utterance to try to avoid having to use more turns. However, this

could be a consequence of the relatively simple objects used in the experiment.

It was probably not necessary to use more words to indicate a certain object un-

ambiguously. Although more miscommunications occurred in an absolute sense,

they did not affect the mean number of turns used to reach agreement that the

target object had been identified.

The differences between keyboard and spoken dialogues were all found to

relate to the principle of minimal cooperative total effort. In a situation where

different modalities of communication are available which have different charac-

teristics and possibilities, other means have to be found to minimize effort. The

main difference with respect to spoken dialogues was in the use of gestures to

refer to objects, the same numbers of gestures were used in spoken dialogues

and keyboard dialogues, but they were used at different moments. At moments

where participants in spoken dialogues used limited information, participants in

keyboard dialogues tended to use more pointing gestures.

From these findings some implications can be drawn for the design of a mul-

timodal interface, such as the DENK interface. First, in our domain we did not

find a great reduction of words in referential expressions, but we did find a great

reduction in the rest of the utterances, i.e. in the part were the action that has

to be carried out is formulated. Further research should be conducted to figure

out whether this reduction causes more or other types of miscommunication.

In the design of a multimodal interface special attention should be devoted

to the coordination of verbal and non-verbal information. Procedures should be

developed to make links between verbal expressions, especially longer ones, and

gestures that axe meant to refer to the same objects but do not occur at the

same time. This is necessary in order to avoid confusions about whether in these

cases only one object or two separate objects are referred to.

In keyboard dialogues participants apparently did not make use of the cur-

rent focus area as often as participants in the spoken dialogues, but reduced

expressions referring to objects in the current focus area still occurred regularly.

This means that the interface should adopt a notion of focus area in order to

enable these expressions to be understood.

Finally, the interface should allow users to change turns quickly since almost

the only type of feedback that was provided in keyboard dialogues consisted

of gestures or actions in the domain. It is probably easier for the interface to

understand verbal feedback than to analyse the meaning of gestures and actions.

However, provisions should be made for listing the verbal and non-verbal turns

Object Reference in Task-Oriented Keyboard Dialogues 293

in a convenient way so that no confusions will arise, because the correct order

of turns is not clear.

References

Ahn, R.M.C., Beun, R.J., Borghuis, T., Bunt, H.C. & Overveld, C.W.A.M. van (1995)

The DENK-architecture: a Fundamental Approach to User-Interfaces. Artificial In-

telligence Review, 8, 431-445.

Beun, R.J. and Bunt, H.C. (1987) Investigating Linguistic Behaviour in Information

Dialogues with a Computer. IPO Annual Progress Report, 22, 77-86.

Bunt, H.C. (1997) Issues in Multimodal Human-Computer Communication. This vol-

ume.

Bunt, H.C., Ahn, R.M.C., Beun, R.J., Borghuis, T., & Overveld, C.W.A.M. van (1997)

Cooperative Multimodal Communication in the DENK System. This volume.

Clark, H.H. and Wilkes-Cibbs, D. (1986) Referring as a collaborative process. Cogni-

tion, 22, 1-39.

Cohen, P.R. (1984) The Pragmatics of Referring and the Modality of Communication.

Computational Linguistics, 10(2), 97-125.

Cremers, A.H.M. (1995) The Process of Cooperative Object Reference in a Shared

Domain. In: E. Fava (ed.) Speech acts and linguistic research: proceedings of the

workshop, July 15-17, 1994. First International Summer Institute in Cognitive Sci-

ence, Center for Cognitive Science, State University of New York at Buffalo, NY.

Padova: edizione nemo, 139-153.

Cremers, A.H.M. and Beun, R.J. (1985) Object Reference m a Shared Domain o/Con-

versation. Eindhoven, Institute for Perception Research, manuscript no. 1089.

Hauptmann, A.G. and Rudnicky, A.I. (1988) Talking to Computers: an Empirical In-

vestigation. International Journal of Man-Machine Studies, 28, 583-604.

Oviatt, S.L. and Cohen, P.R. (1991) Discourse Structure and Performance Efficiency

in Interactive and Non-interactive Spoken Modalities. Computer Speech and Lan-

guage, 5, 297-326.

Piwek, P., Beun, R.J. and Cremers, A.H.M. (1995) Deictic Use of Dutch Demonstra-

tives. IPO Annual Progress Report, 30, 99-108.

Referent Identification Requests in

Multi-Modal Dialogs

Tsuneaki Kato and Yukiko I. Nakano

NTT Information and Communication Systems Labs.

1-2356 Take, Yokosuka-shi, Kanagawa 238-03 JAPAN

kato@nttnly, ntt. j p

Abstract.

This paper describes an empirical study on what kinds of

information are appropriate for referent identification requests in multi-

modal dialogs, and how that information should be communicated in

order to achieve the request desired. We conduct experiments in which

experts explain the installation of a telephone in four situations: spoken-

mode monolog; spoken-mode dialog; multi-modal monolog; and multi-

modal dialog. Referent identification requests could be well analyzed

from two perspectives: information communicated and the style of goal

achievement. We find that there is a close relationship between the infor-

mation conveyed via different communicative modes, and sketch a model

that explains these results. In the model, information cannot be divided

into the semantic content conveyed and the communicative modes em-

ployed, and is treated as the primitive unit for consideration. Pointing

is considered as information in this sense. We also find that in dialogs,

especially in spoken-mode dialogs, the speakers realize identification re-

quests as series of fine-grained steps, and try to achieve them step by

step.

1 Introduction

Two kinds of actions are ubiquitous in every dialog: referent identification, by

which the hearer identifies the object described linguistically by a noun phrase or

pointed to through a physical action, and referent identification request, by which

the speaker makes the hearer identify the referent. Especially in instruction-

giving dialogs on physical objects, these actions appear frequently and play a

central role. As a consequence, all multi-modal dialog systems have to have a

framework to realize referent identification requests.

An interesting point is that there is no agreement between researchers on

how referent identification requests should be realized. According to the rela-

tionship between communicative modes, COMET's media coordinator decides

which portion of a given semantic content should be realized in which mode

(Feiner and McKeown, 1990). The relationship of communicative modes is lim-

ited to only the aspect of how to communicate a given content. By contrast,

in the framework proposed by Maybury, during planning for achieving a mode-

independent rhetorical goal, mode allocation and content selection are achieved

Referent Identification Requests in Multi-Modal Dialogs 295

simultaneously, and can co-constrain (Maybury, 1993). In the examples shown in

Maybury (1993), however, he used the same schema for linguistic identification

requests, independently of whether visual actions could be used. Based on this

example only, he looks to treat visual identification requests such as pointing

actions as just supplemental; they have no effect on linguistic requests. On the

other hand, WIP uses cross-modal references, the references between modes that

are possible only when the system can utilize more than one mode (Andr@ and

Rist, 1994; Wahlster et al., 1991).

Several systems have different criteria on what reference expression should

be preferred. For example, Neal and Shapiro (1991) claim that graphic/pictorial

presentation is always desirable, and that natural language can always be used

as a last resort. In Claassen (1992), contextual factors such as salience play an

important role, while whether the object is currently visible or not is taken into

account towards the bottom of the decision tree. Although it is obvious that

these criteria depend on the domains that the systems are concerned with and

do not need to be identical, some criteria based on empirical studies is needed.

The objective of our research is to empirically determine what kinds of infor-

mation are appropriate for referent identification requests in multi-modal dialogs,

and how that information should be communicated. The long term goal of this

study is to provide useful suggestions for designing more sophisticated multi-

modal dialog systems. Cohen also picked up referent identification requests and

compared the kinds of speech acts used to achieve them for two dialog situations:

keyboard dialog and telephone dialog (Cohen, 1984). Our research not only ex-

tends the situations considered to the multi-modal one in which conversants have

audio and visual channels, but also considers the kinds and amounts of infor-

mation used for referent identification and clarifies how they are influenced by

the communicative modes and contextual factors. Moreover, elaboration related

phenomena and the roles of the addressee are also examined.

2 The Experiments and the Corpus

Experiments were conducted to obtain the corpus needed to design multi-modal

dialog systems. The task is the installation of a telephone with an answering

machine feature. In this task, the telephone set is unpacked, then eight settings,

such as checking the volume, adjusting the clock and recording a response mes-

sage, are accomplished. Finally, some function buttons are explained.

In order to consider the effect of communicative modes and level of interac-

tivity, we recorded explanations in four situations: SD (Spoken-mode Dialog),

MD (Multi-modal Dialog), SM (Spoken-mode Monolog) and MM (Multi-modal

Monolog). In all situations, the experts were able to handle the telephones in

front of them freely. In the dialog situations, SD and MD, each expert conversed

with a remote apprentice to lead him/her through installation. In the monolog

situations, SM and MM, the experts verbalized the instructions with no audi-

ence in the setting that an apprentice will follow his/her instruction afterwards

by listening to an audiotape in SM or watching a videotape in MM.

296 Tsuneaki Kato and Yukiko I. Nakano

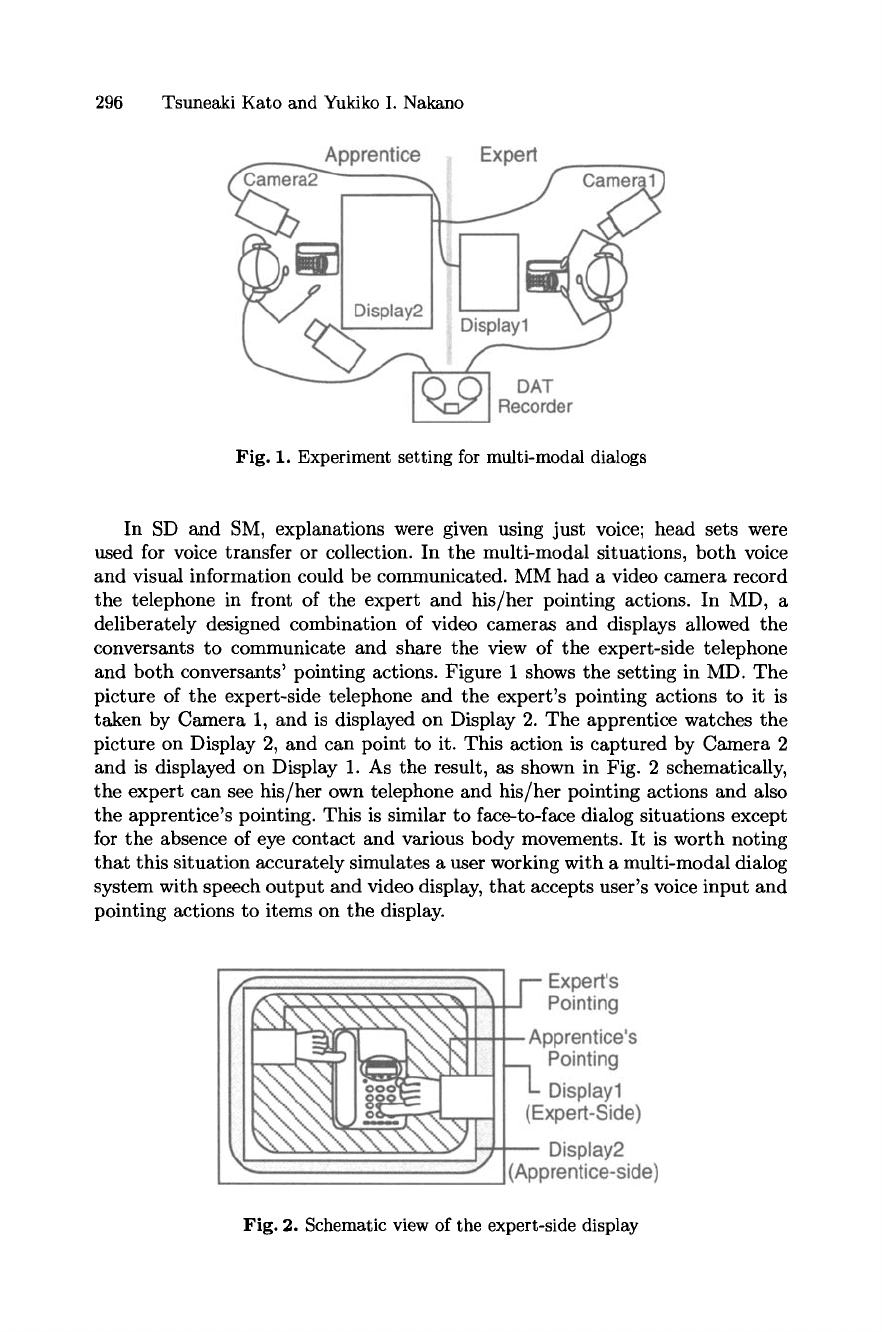

Fig. 1. Experiment setting for multi-modal dialogs

In SD and SM, explanations were given using just voice; head sets were

used for voice transfer or collection. In the multi-modal situations, both voice

and visual information could be communicated. MM had a video camera record

the telephone in front of the expert and his/her pointing actions. In MD, a

deliberately designed combination of video cameras and displays allowed the

conversants to communicate and share the view of the expert-side telephone

and both conversants' pointing actions. Figure 1 shows the setting in MD. The

picture of the expert-side telephone and the expert's pointing actions to it is

taken by Camera 1, and is displayed on Display 2. The apprentice watches the

picture on Display 2, and can point to it. This action is captured by Camera 2

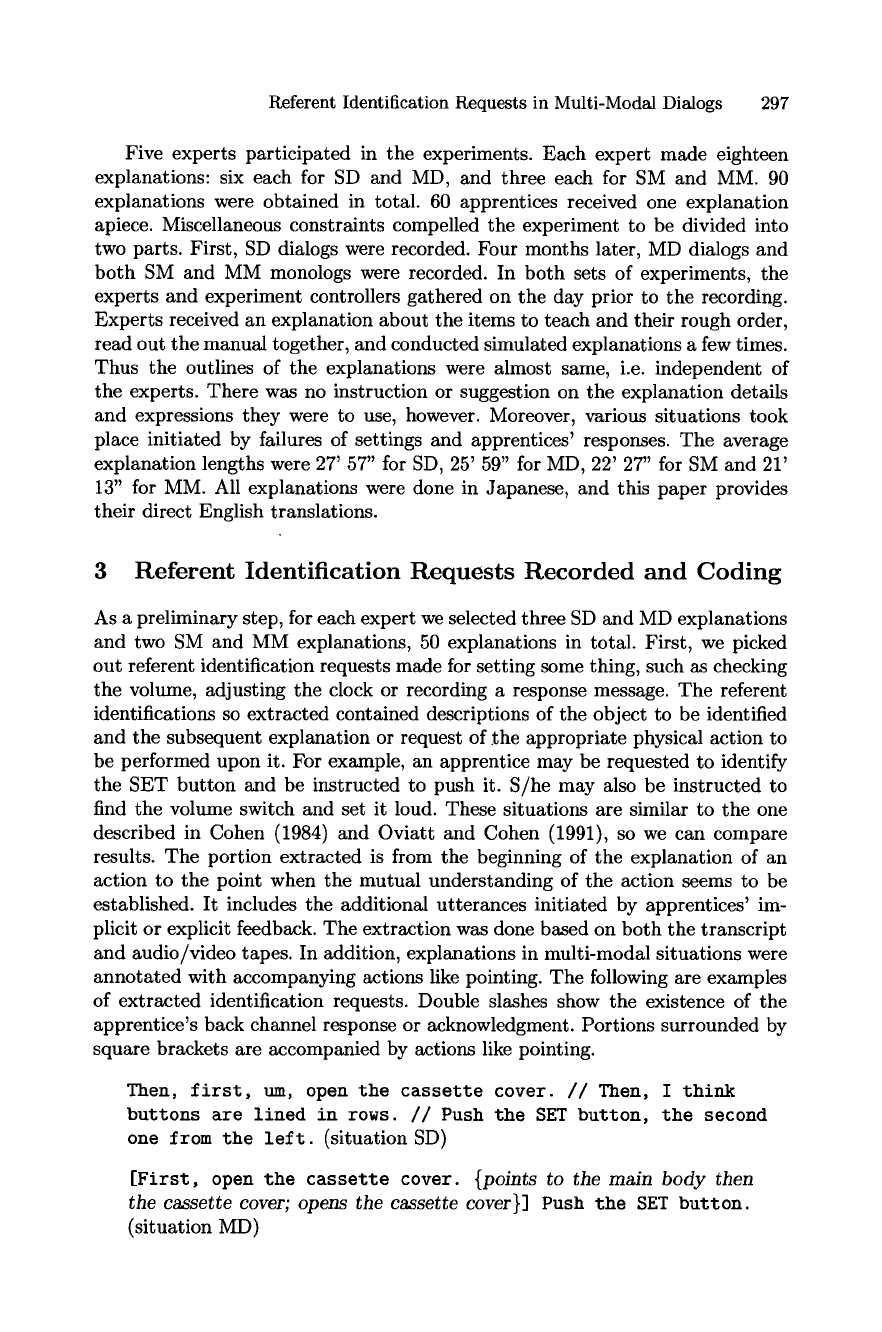

and is displayed on Display 1. As the result, as shown in Fig. 2 schematically,

the expert can see his/her own telephone and his/her pointing actions and also

the apprentice's pointing. This is similar to face-to-face dialog situations except

for the absence of eye contact and various body movements. It is worth noting

that this situation accurately simulates a user working with a multi-modal dialog

system with speech output and video display, that accepts user's voice input and

pointing actions to items on the display.

Fig. 2. Schematic view of the expert-side display

Referent Identification Requests in Multi-Modal Dialogs 297

Five experts participated in the experiments. Each expert made eighteen

explanations: six each for SD and MD, and three each for SM and MM. 90

explanations were obtained in total. 60 apprentices received one explanation

apiece. Miscellaneous constraints compelled the experiment to be divided into

two parts. First, SD dialogs were recorded. Four months later, MD dialogs and

both SM and MM monologs were recorded. In both sets of experiments, the

experts and experiment controllers gathered on the day prior to the recording.

Experts received an explanation about the items to teach and their rough order,

read out the manual together, and conducted simulated explanations a few times.

Thus the outlines of the explanations were almost same, i.e. independent of

the experts. There was no instruction or suggestion on the explanation details

and expressions they were to use, however. Moreover, various situations took

place initiated by failures of settings and apprentices' responses. The average

explanation lengths were 27' 57" for SD, 25' 59" for MD, 22' 27" for SM and 21'

13" for MM. All explanations were done in Japanese, and this paper provides

their direct English translations.

3 Referent Identification Requests Recorded and Coding

As a preliminary step, for each expert we selected three SD and MD explanations

and two SM and MM explanations, 50 explanations in total. First, we picked

out referent identification requests made for setting some thing, such as checking

the volume, adjusting the clock or recording a response message. The referent

identifications so extracted contained descriptions of the object to be identified

and the subsequent explanation or request of .the appropriate physical action to

be performed upon it. For example, an apprentice may be requested to identify

the SET button and be instructed to push it. S/he may also be instructed to

find the volume switch and set it loud. These situations are similar to the one

described in Cohen (1984) and Oviatt and Cohen (1991), so we can compare

results. The portion extracted is from the beginning of the explanation of an

action to the point when the mutual understanding of the action seems to be

established. It includes the additional utterances initiated by apprentices' im-

plicit or explicit feedback. The extraction was done based on both the transcript

and audio/video tapes. In addition, explanations in multi-modal situations were

annotated with accompanying actions like pointing. The following are examples

of extracted identification requests. Double slashes show the existence of the

apprentice's back channel response or acknowledgment. Portions surrounded by

square brackets are accompanied by actions like pointing.

Then, first, um, open the cassette cover. // Then, I think

buttons are lined in rows. // Push the SET button, the second

one from the left. (situation SD)

[First, open the cassette cover.

{points to the main body then

the

cassette cover; opens the

cassette

cover}]

Push the SET button.

(situation MD)

298 Tsuneaki Kato and Yukiko I. Nakano

The extracted and annotated referent identification requests were scored and

analyzed from the following two perspectives.

3.1 Information Communicated

What kind of and what amount of information was communicated in each iden-

tification process? In this study, information was captured in its widest sense.

First, a linguistic description of the proposition that concerns the features of the

object is regarded as the piece of information communicated. Such type of infor-

mation includes descriptions of its shape, size, position, and so on. In addition,

usage of a pronominal, description of contextual information, and description

of landmark objects are all regarded as communicating some piece of informa-

tion. As a pointing action conveys some visual information, it is also regarded

as information in itself. Information was divided into the following categories.

pronominals pronominals including pronouns e.g. "this", "it".

context reference an indication that the object was already identified in the

current discourse, e.g. "that we used before"

general name an object category name that identifies the type of thing but

does not clearly identify which thing is being referred to. e.g. "button",

"switch"

proper name a name that distinguishes the object from others, e.g. "the SET

button", "the telephone circuit selector"

shape/size a description of the color, size and shape of the object, e.g. "red",

"big"

position a description about where the object is. e.g. "on upper right of the

main body"

characters/marks a description of the characters or marks attached on/by the

object, e.g. "with the characters, OUT"

functions a description about the function(s) the object has. e.g. "for control-

ling the volume"

related objects a description about a set that contains the object or a land-

mark around the object, e.g. "a group of buttons"

others other linguistic information such as features of the set containing the

object, e.g. "lined in two rows"

pointing a pointing action to the object.

All information except pointing were communicated through the audio chan-

nel, and were captured in the transcript. The appropriate portions were picked

from the extracted referent identification requests, and were classified. In this

study, we define the amount of information as the number of such portions and

the existence of accompanying pointing. In the case of pronominals, general name

and proper name, the number of tokens is used. In other cases, number of types

is used. That is, in the latter case, a literal repetition that conveys the same con-

tent is counted just once. Obviously, it is considered as two pieces of information

communicated in the case that two types of information of the same category,

Referent Identification Requests in Multi-Modal Dialogs 299

such as "on upper left of the body" and "above the dial buttons", appear in a

referent identification request. In the case of pointing, only its usage was checked

so that its amount is always zero or one regardless of its continuation time.

This examination and enumeration also revealed that almost all usages of

"this" in MD and MM were accompanied with pointing, and could be regarded

as cross-modal references.

3.2 Goal Achievement

What type of surface speech act form was used in a referent identification request,

and through what process was the identification request expressed and achieved?

The first characteristic examined in this perspective is what type of surface

speech act form was used in a referent identification request. This characteristic

was also examined in Cohen (1984). We examined whether a referent identifi-

cation request is subsumed in the following action request as its case element

without any clue, or is to be regarded as an explicit goal and realized as a

sentence. In the latter case, what kind of sentence is used was also examined.

Utterance forms were divided into the following categories. Referent identifi-

cation is considered an explicit goal when NP fragment, Existential Prop., or

Perception-base is used. This is not the case when Case is used.

Case an unmarked noun phrase describing the object appearing in another

request, e.g.

"Push the SET button".

NP fragment an utterance consisting of an isolated noun phrase describing

the object, e.g. "The

SET button,

this is it, push it".

Existential Prop. an utterance that asserts object existence, e.g. "There

is

the SET button on upper right of the main body".

Perception-base an utterance that requests the addressee perform an action

to make him/her aware of the object, such as looking and opening, e.g.

"Look

at the upper right of the dial buttons.

Push the SET button there".

Others other types of utterances.

The second characteristic examined is whether confirmation such as use of a

tag question and an interrogative sentence, was made to check the accomplish-

ment of the request

The third characteristic is how often the expert used expressions closely re-

lated to elaboration. The style and amount of elaboration relate the processes

in 6rder to achieve the referent identification requests, and were also examined

in Clark and Wilkes-Gibbs (1990) and Oviatt and Cohen (1991). In our study,

elaboration related expression were divided into the following categories.

Apposition an appositive expression, that is a noun phrase explaining the ob-

ject expanded by a subsequent parenthetical noun phrase.

Supplement a supplementary copula or a noun phrase fragment that explains

the object and follows a sentence or clause that contains a noun phrase

explaining that object. In Japanese, as a main verb follows its case element,

300 Tsuneaki Kato and Yukiko I. Nakano

appositions and supplements can be distinguished easily. The following two

Japanese expressions, for an example, both have the almost same meaning

as the English expression, "Push the OUT button, the red button.", one

contains an apposition and the other contains a supplement.

Apposition

rusu botan, akai botan, wo oshite-kudasai.

OUT button, red button, Obj push please.

Supplement

rusu botan wo oshite-kudasai, akai botan.

OUT button Obj push please, red button.

Replacement expressions that have the same syntactic structure as apposi-

tions or supplements but whose semantic content contradicts that of the one

uttered immediately before and replaces it. For example, "push the leftmost

button, rightmost one". Articulation level errors, phonetic repair in Levelt

(1983), are not included.

The above definition does not take it into account whether there is an utter-

ance boundary somewhere in those expressions, though, as Clark and Wilkes-

Gibbs (1990) pointed out, the existence of utterance boundary and the appren-

tice's implicit request of refashioning can be a clue for determining whether an

elaboration is reactive or previously designed. Experts' intonation was also not

taken into consideration.

The fourth and last characteristic examined is the apprentices' contribu-

tions in dialog situations. The number and categories of apprentices' utterances

were examined. The utterances were categorized into five categories: acknowl-

edgments, which include back channel responses; repetitions with down into-

nation; follow-up questions including repetitions with rising intonation; sponta-

neous elaboration; and replacements.

4 Results

This section shows the results on how the characteristics of referent identification

requests described in the previous section are influenced by the available modes

of communication and the level of interactivity. It is obvious that identification

requests depend on the contextual factor of the referent. That is, initial iden-

tification, which is used to make the first effective reference to an object must

show different characteristics from other identifications, which are used to refer

to objects already introduced. Accordingly, we first analyzed how objects were

first introduced in order to examine, independently of the contextual factors, the

communicative modes available and interactivity dependency. Second, how ref-

erent identification requests depend on position in the discourse was examined.

4.1 Initial Identification

How objects were first introduced was analyzed in order to examine, indepen-

dently of the contextual factors, the communicative modes available and inter-

activity dependency. We examined the introduction of twelve objects.