Alfred DeMaris - Regression with Social Data, Modeling Continuous and Limited Response Variables


two. Thus, given some type of violence, the odds that it is “intense male violence” is .036/.099 = .364. In general, for an M-category variable, there are $M(M-1)/2$ nonredundant odds that can be contrasted, but only $M-1$ independent odds.
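The counting rule, and the fact that the extra odds carry no new information, can be checked numerically. The sketch below uses only the Python standard library; the helper name `count_odds` is hypothetical, and the inputs are the rounded marginal proportions quoted in this section (.036, .099, and .864 for the three violence profiles). It enumerates the pairwise contrasts for M categories and shows that the odds of “intense male violence” vs. “physical aggression” is just the ratio of the two odds formed against “nonviolence.”

```python
from itertools import combinations

def count_odds(m):
    """Return (number of pairwise odds, number of independent odds) for m categories."""
    return len(list(combinations(range(m), 2))), m - 1

# Rounded marginal proportions for the three violence profiles (from the text)
p_im, p_pa, p_nv = 0.036, 0.099, 0.864

odds_im_nv = p_im / p_nv   # first independent odds (baseline: nonviolence)
odds_pa_nv = p_pa / p_nv   # second independent odds (baseline: nonviolence)
odds_im_pa = p_im / p_pa   # third odds: redundant, a function of the first two

print(count_odds(3), count_odds(4))
print(round(odds_im_pa, 3))
print(abs(odds_im_pa - odds_im_nv / odds_pa_nv) < 1e-12)
```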

Modeling (M − 1) Log Odds

As before, we typically wish to model the log odds as functions of one or more explanatory variables. This time, however, we require $M-1$ equations, one for each independent log odds. Each equation is equivalent to a binary logistic regression model in which the response is a conditional log odds: the log odds of being in one vs. another category of the response variable, given location in one of these two categories. Each odds is the ratio of the probabilities of being in the respective categories. Equations for the remaining $M(M-1)/2 - (M-1)$ dependent log odds are functions of the parameters for the independent log odds and therefore do not need to be estimated from the data. Typically, we choose one category of the response variable, say the Mth, as the baseline and contrast all other categories with it (i.e., the probability of being in the baseline category forms the denominator of each odds). With $\pi_1, \pi_2, \ldots, \pi_M$ representing the probabilities of being in category 1, category 2, ..., category M of the response variable, respectively, the multinomial logistic regression model with K predictors is

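$$
\begin{aligned}
\log\left(\frac{\pi_1}{\pi_M}\right) &= \beta_0^{1} + \beta_1^{1}X_1 + \cdots + \beta_K^{1}X_K\\
\log\left(\frac{\pi_2}{\pi_M}\right) &= \beta_0^{2} + \beta_1^{2}X_1 + \cdots + \beta_K^{2}X_K\\
&\;\;\vdots\\
\log\left(\frac{\pi_{M-1}}{\pi_M}\right) &= \beta_0^{M-1} + \beta_1^{M-1}X_1 + \cdots + \beta_K^{M-1}X_K,
\end{aligned}
\tag{8.1}
$$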

where the superscripts on the betas indicate that effects of the regressors can change,

depending on which log odds is being modeled.
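Because every equation in (8.1) is a log odds against the same baseline, the M category probabilities can be recovered by exponentiating the $M-1$ linear predictors and normalizing, with the baseline's linear predictor fixed at 0. The following is a minimal plain-Python sketch of that inversion; the function name `baseline_category_probs` and the coefficient values are hypothetical, chosen only for illustration.

```python
import math

def baseline_category_probs(betas, x):
    """Invert model (8.1) and return [pi_1, ..., pi_M].

    betas: M - 1 coefficient lists [b0, b1, ..., bK], one per non-baseline category
    x:     the K regressor values [x1, ..., xK]
    The Mth (baseline) category has its linear predictor fixed at 0.
    """
    etas = [b[0] + sum(bk * xk for bk, xk in zip(b[1:], x)) for b in betas]
    denom = 1.0 + sum(math.exp(eta) for eta in etas)
    return [math.exp(eta) / denom for eta in etas] + [1.0 / denom]

# Hypothetical coefficients for M = 3 categories and K = 2 regressors
betas = [[-1.0, 0.5, -0.2],   # log(pi_1 / pi_3)
         [0.3, -0.4, 0.1]]    # log(pi_2 / pi_3)
x = [2.0, 1.0]
probs = baseline_category_probs(betas, x)
print([round(p, 4) for p in probs])
```

Taking $\log(\pi_j/\pi_M)$ of the returned values gives back each linear predictor exactly, which is what the model asserts.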

Estimation. As before, parameters are estimated via maximum likelihood. Because the model consists of a series of binary logistic regression equations, one way to estimate it, particularly in the absence of multinomial logistic regression software, is via limited information maximum likelihood (LIML) estimation. In the current example, this would be accomplished by selecting all cases characterized by either “intense male violence” or “nonviolence” and ignoring those exhibiting “physical aggression.” One would then estimate a binary logistic regression for “intense male violence” versus “nonviolence,” using only the cases selected. Next, one would select only the cases characterized by either “physical aggression” or “nonviolence” and, for this group, estimate a binary logistic regression for “physical aggression” versus “nonviolence.” The two resulting sets of estimates would then constitute

MULTINOMIAL MODELS 295

c08.qxd 8/27/2004 2:55 PM Page 295

the multinomial logistic regression estimates. This approach, originally proposed by Begg and Gray (1984), produces estimates that are consistent and asymptotically normal. However, they are not as efficient as those produced by maximizing the joint likelihood function for all the parameters (across the $M-1$ equations), given the data [see Hosmer and Lemeshow (2000) for an expression for this likelihood]. The latter approach is what is commonly employed for estimating the multinomial logistic regression model. Maximization of the joint likelihood function using all the data is referred to as full information maximum likelihood (FIML) estimation.
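The LIML recipe above translates directly into code. The sketch below assumes NumPy and simulated data; the function names are hypothetical and it is not the SAS procedure used in the text. Each non-baseline category gets its own Newton–Raphson binary logit, fit only to cases in that category or the baseline. It works because, conditional on being in one of those two categories, the probability of the non-baseline category is a logistic function of that category's linear predictor. FIML would instead maximize the joint likelihood of all $M-1$ equations at once, which this sketch does not do.

```python
import numpy as np

def fit_binary_logit(X, y, max_iter=50, tol=1e-10):
    """Binary logit MLE by Newton-Raphson; X already contains an intercept column."""
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        gradient = X.T @ (y - p)
        hessian = X.T @ (X * (p * (1.0 - p))[:, None])
        step = np.linalg.solve(hessian, gradient)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta

def liml_multinomial(X, y, baseline):
    """LIML in the spirit of Begg and Gray (1984): one binary logit per
    non-baseline category, fit only to cases in that category or the baseline."""
    estimates = {}
    for category in np.unique(y):
        if category == baseline:
            continue
        keep = (y == category) | (y == baseline)
        estimates[int(category)] = fit_binary_logit(X[keep], (y[keep] == category).astype(float))
    return estimates

# Simulated (hypothetical) data: categories 0 and 1 contrasted with baseline 2
rng = np.random.default_rng(0)
n = 20000
X = np.column_stack([np.ones(n), rng.normal(size=n)])
true_betas = {0: np.array([-1.0, 0.8]), 1: np.array([-0.5, -0.4])}
expo = np.column_stack([np.exp(X @ true_betas[0]), np.exp(X @ true_betas[1]), np.ones(n)])
probs = expo / expo.sum(axis=1, keepdims=True)
# Draw each case's category by comparing a uniform draw with cumulative probabilities
y = np.minimum((rng.random(n)[:, None] > probs.cumsum(axis=1)).sum(axis=1), 2)

est = liml_multinomial(X, y, baseline=2)
for category, beta in est.items():
    print(category, np.round(beta, 3))
```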

In SAS, one can use the procedures LOGISTIC or CATMOD for estimating the FIML model. Because SAS’s CATMOD (employed for this chapter) automatically chooses the highest value of the response variable as the baseline, one controls the choice of baseline by coding the variable accordingly. In the current example, I wanted “nonviolence” to be the baseline category, so the variable couple violence profile was coded 0 for “intense male violence,” 1 for “physical aggression,” and 2 for “nonviolence.” Table 8.5 presents the results of estimating the multinomial model of couple violence profile using the regressors in model 2 of Table 8.4. Shown are the equations for the two independent log odds (contrasting “intense male violence” with “nonviolence” and contrasting “physical aggression” with “nonviolence”), based on either FIML or LIML. The estimates in the equation for the third log odds, which contrasts “intense male violence” with “physical aggression,” are simply the differences in the

296 ADVANCED TOPICS IN LOGISTIC REGRESSION

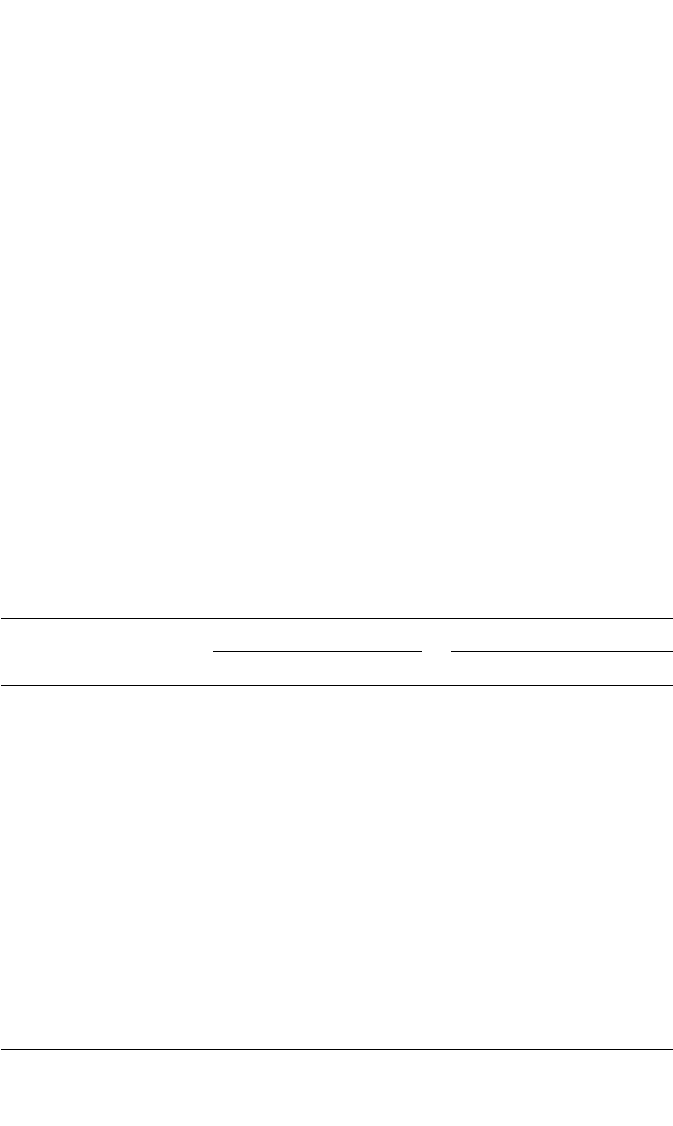

Table 8.5 Multinomial Logistic Regression Models for Violence Profile as a Function of Couple Characteristics

| Predictor | FIML: IM vs. NV | FIML: PA vs. NV | LIML: IM vs. NV | LIML: PA vs. NV |
|---|---|---|---|---|
| Intercept | −4.024*** | −2.810*** | −4.046*** | −2.794*** |
| Cohabitingᵃᵇ | 1.368*** | .591 | 1.430*** | .645* |
| Minority couple | .255 | .191 | .251 | .149 |
| Female’s age at union | −.029* | −.011 | −.029* | −.010 |
| Male’s isolation | .028 | .008 | .030 | .008 |
| Economic disadvantage | .037* | .005 | .035* | .006 |
| Alcohol/drug problemᵇ | .867** | .579** | .913*** | .585** |
| Relationship durationᵇ | −.066*** | −.049*** | −.068*** | −.048*** |
| (Relationship duration)²ᵇ | .0005 | .0016*** | .0005 | .0016*** |
| Economic disadvantage × alcohol/drug problem | .048 | .067 | .055 | .072* |
| Open disagreementᵇ | .058** | .065*** | .058** | .069*** |
| Positive communicationᵇ | −.536*** | −.408*** | −.534*** | −.406*** |
| Model $\chi^2$ | 475.738*** (both equations jointly) | | 224.211*** | 296.482*** |
| df | 22 (both equations jointly) | | 11 | 11 |
| $R^2_L$ | .122 (both equations jointly) | | .180 | .113 |

ᵃ Significant discriminator of intense male violence (IM) versus physical aggression (PA).
ᵇ Significant global effect on both log odds.
\* p < .05. \*\* p < .01. \*\*\* p < .001.


first two sets of estimates. To see why, let $\pi_0$ be the probability of “intense male violence,” $\pi_1$ be the probability of “physical aggression,” and $\pi_2$ be the probability of “nonviolence.” Then, with K predictors $X_1, X_2, \ldots, X_K$ in the model, the equation for the odds of “intense male violence” vs. “physical aggression” is

$$
\frac{\pi_0}{\pi_1} = \frac{\pi_0/\pi_2}{\pi_1/\pi_2}
= \frac{\exp(\beta_0^{1})\exp(\beta_1^{1}X_1)\cdots\exp(\beta_K^{1}X_K)}{\exp(\beta_0^{2})\exp(\beta_1^{2}X_1)\cdots\exp(\beta_K^{2}X_K)}
= \exp(\beta_0^{1}-\beta_0^{2})\exp[(\beta_1^{1}-\beta_1^{2})X_1]\cdots\exp[(\beta_K^{1}-\beta_K^{2})X_K],
$$

where superscript 1 marks the coefficients of the equation for $\log(\pi_0/\pi_2)$ and superscript 2 those of the equation for $\log(\pi_1/\pi_2)$.

Of course, this third equation can easily be generated via SAS simply by changing the coding of the response. In this case, I would switch the coding so that 1 represents “nonviolence” and 2 represents “physical aggression.” Then the first set of estimates shown in the output would be for the log odds of “intense male violence” versus “physical aggression.” However, it is usually less confusing to show only the equations for the independent log odds, after selecting the most appropriate group as the baseline category.

Interpretation of Coefficients. Model coefficients are interpreted just as they are in the binary case, except that now more than two outcome categories are being compared. For example, according to the FIML estimates, cohabitors have higher odds of “intense male violence” (versus “nonviolence”) than marrieds by a factor of exp(1.368) = 3.927. They are not at higher risk than marrieds, however, for “physical aggression.” Their odds of “physical aggression,” compared to the odds for marrieds, are inflated by 100[exp(.591) − 1] = 80.6%, but this effect is not significant. Given violence, the odds that it is “intense male violence” versus “physical aggression” is exp(1.368 − .591) = exp(.777) = 2.175 times higher for cohabitors than for marrieds. Is this effect significant? One could pose the question in one of two ways. First, we could ask whether the effect of cohabiting on the log odds of “intense male violence” is different from its effect on the log odds of “physical aggression.” This would involve a test of the difference between the coefficients for cohabiting in the two equations. Alternatively, we can estimate the log odds of “intense male violence” vs. “physical aggression” (the third, nonindependent equation) and ask whether the cohabiting effect is significant in that equation. It is (results not shown). The latter perspective is equivalent to asking whether cohabiting discriminates “intense male violence” from “physical aggression.” In fact, cohabiting is the only significant discriminator in the model, as indicated by footnote a in Table 8.5.

Several other effects in the FIML model are significant. Having an alcohol or drug problem and more frequent disagreements elevate the odds of both “intense male violence” and “physical aggression.” More positive communication and a longer relationship duration reduce the odds of both types of violence. The female’s age at union, living in an economically disadvantaged neighborhood, and the nonlinear effect of relationship duration, however, each affect the odds of only one type of violence. Nevertheless, none of these variables’ effects are significantly different for “intense male violence” as opposed to “physical aggression.”
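The interpretive arithmetic above (exponentiated coefficients, percent changes, and coefficients of the dependent equation obtained as differences) is easy to script. The sketch below uses only Python's `math` module and a few FIML coefficients copied from Table 8.5. The dictionary layout is an illustrative choice, and the differenced coefficients are point estimates only: their standard errors require the covariance between the two equations, which is not shown here. The printed values should match the text's 3.927, 80.6%, and 2.175.

```python
import math

# FIML coefficients from Table 8.5: (IM vs. NV, PA vs. NV)
fiml = {
    "cohabiting": (1.368, 0.591),
    "alcohol/drug problem": (0.867, 0.579),
    "open disagreement": (0.058, 0.065),
    "positive communication": (-0.536, -0.408),
}

# Coefficients of the dependent equation (IM vs. PA) are differences of the two sets
im_vs_pa = {name: b_im - b_pa for name, (b_im, b_pa) in fiml.items()}

b_im, b_pa = fiml["cohabiting"]
or_im_nv = math.exp(b_im)                    # odds ratio, IM vs. NV
pct_pa_nv = 100 * (math.exp(b_pa) - 1)       # percent change in odds, PA vs. NV
or_im_pa = math.exp(im_vs_pa["cohabiting"])  # odds ratio, IM vs. PA

print(round(or_im_nv, 3), round(pct_pa_nv, 1), round(or_im_pa, 3))
for name, b in im_vs_pa.items():
    print(f"{name}: IM vs. PA coefficient {b:+.3f}")
```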


The LIML estimates are generally quite close to the FIML ones. This should usually be the case, as the LIML estimates are nearly as efficient as their FIML counterparts (Begg and Gray, 1984). One might wonder why we would bother with LIML if FIML software is available, as it generally is. As Hosmer and Lemeshow (2000) point out, the LIML approach has some specific advantages. First, it allows the model for each log odds to be different if we so choose, that is, to contain different regressors or different functions of regressors, an approach not possible with FIML. Second, it allows one to take advantage of features that may be offered in binary logistic regression software but not in multinomial logistic regression software, such as weighting by case weights or diagnosing influential observations. Third, means of assessing empirical consistency, such as the Hosmer–Lemeshow $\chi^2$, are not yet well developed for the multinomial model (Hosmer and Lemeshow, 2000). Using the LIML approach, however, empirical consistency can be assessed for each equation separately, as discussed in Chapter 7. In fact, for the model in Table 8.5, the Hosmer–Lemeshow $\chi^2$ is 11.823 for the equation for “intense male violence” and 6.652 for the equation for “physical aggression.” Both values are nonsignificant, suggesting an acceptable model fit.
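Because each LIML equation is an ordinary binary logit, the Hosmer–Lemeshow statistic can be computed for it directly. The sketch below assumes NumPy and the common convention of g = 10 groups of nearly equal size formed after sorting cases by fitted probability, referred to a chi-squared distribution with g − 2 degrees of freedom. Chapter 7's exact grouping rule is not reproduced here, and the function name is hypothetical. The toy data are constructed so that observed and expected event counts agree in every group, so the statistic is zero.

```python
import numpy as np

def hosmer_lemeshow(y, p, groups=10):
    """Hosmer-Lemeshow chi-squared for one fitted binary logit equation.

    y: 0/1 outcomes; p: fitted probabilities. Cases are sorted by p and split
    into `groups` nearly equal-sized groups. Returns (statistic, df = groups - 2).
    """
    y = np.asarray(y, dtype=float)
    p = np.asarray(p, dtype=float)
    stat = 0.0
    for idx in np.array_split(np.argsort(p), groups):
        n_g = idx.size
        observed = y[idx].sum()
        expected = p[idx].sum()
        pbar = expected / n_g
        # Equals (O - E)^2 / E for events plus the matching term for non-events
        stat += (observed - expected) ** 2 / (n_g * pbar * (1.0 - pbar))
    return stat, groups - 2

# Perfectly calibrated toy data: each risk group's event count equals its expected count
p_toy = np.repeat(np.arange(1, 10) / 10, 10)
y_toy = np.concatenate([[1] * k + [0] * (10 - k) for k in range(1, 10)])
print(hosmer_lemeshow(y_toy, p_toy, groups=9))
```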

Inferences. In multinomial logistic regression, there are several statistical tests of interest. First, as in binary logistic regression, there is a test of whether the model as a whole exhibits any predictive efficacy. The null hypothesis is that all $K(M-1)$ of the regression coefficients (i.e., the betas, excluding the intercepts) in equation group (8.1) equal zero. Once again, the test statistic is the model chi-squared, equal to $-2\log(L_0/L_1)$, where $L_0$ is the likelihood function evaluated at the MLEs for a model containing only the intercepts and $L_1$ is the likelihood function evaluated at the MLEs for the hypothesized model. This test is not automatically output in CATMOD. However, as the program always prints $-2\log L$ for the current model, the test can be readily computed by first estimating a model with no predictors and then recovering $-2\log L_0$ from the printout (it is the value of “−2 log likelihood” for the last iteration on the printout). The test statistic is then $-2\log L_0 - (-2\log L_1)$. For the model in Table 8.5, $-2\log L_0$ was 3895.2458, while $-2\log L_1$ was 3419.5082. The test statistic was therefore 3895.2458 − 3419.5082 = 475.7376, with 11(2) = 22 degrees of freedom, a highly significant result. (The LIML equations each have their own model $\chi^2$, as shown in the table.)
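The text reports only whether chi-squared statistics clear a significance threshold. To attach an exact tail probability without extra dependencies, the sketch below implements the standard evaluation of the regularized incomplete gamma function (a power series for small arguments, a modified-Lentz continued fraction otherwise), which gives the chi-squared upper tail. The function name `chi2_sf` is hypothetical; in practice one would usually call `scipy.stats.chi2.sf`. It is applied to the model chi-squared computed above and to the 23.51 (11 df) collapsibility statistic reported later in this section.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for X ~ chi-squared(df).

    Evaluates the regularized incomplete gamma function: a series when
    x/2 < df/2 + 1, otherwise a continued fraction (modified Lentz), so very
    small tail areas are computed directly rather than as 1 minus a number near 1.
    """
    a, z = df / 2.0, x / 2.0
    if z <= 0.0:
        return 1.0
    log_front = a * math.log(z) - z - math.lgamma(a)
    if z < a + 1.0:
        term = total = 1.0 / a
        n = 0
        while abs(term) > abs(total) * 1e-15:
            n += 1
            term *= z / (a + n)
            total += term
        return 1.0 - total * math.exp(log_front)
    tiny = 1e-300
    b = z + 1.0 - a
    c = 1.0 / tiny
    d = 1.0 / b
    h = d
    for i in range(1, 1000):
        an = -i * (i - a)
        b += 2.0
        d = an * d + b
        d = tiny if abs(d) < tiny else d
        c = b + an / c
        c = tiny if abs(c) < tiny else c
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-15:
            break
    return math.exp(log_front) * h

# Model chi-squared for the FIML model in Table 8.5
neg2ll_null, neg2ll_model = 3895.2458, 3419.5082
model_chi2 = neg2ll_null - neg2ll_model
model_df = 11 * (3 - 1)   # K(M - 1) with K = 11 regressors and M = 3 categories
print(round(model_chi2, 4), model_df, chi2_sf(model_chi2, model_df))

# Collapsibility test reported in the text: 23.51 with 11 df
print(chi2_sf(23.51, 11))
```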

Second, the test statistic, using FIML, for the global effect on the response variable of a given predictor, say $X_k$, is not a single-degree-of-freedom test statistic as in the binary case. For multinomial models, there are $M-1$ $\beta_k$’s representing the global effect of $X_k$, one for each of the log odds in equation group (8.1). Therefore, the test statistic is for the null hypothesis that all $M-1$ of these $\beta_k$’s equal zero.

There are two ways to construct the test statistic. One is to run the model with and without $X_k$ and note the value of $-2\log L$ in each case. Then, if the null hypothesis is true, the difference in $-2\log L$ for the models with and without $X_k$ is asymptotically distributed as chi-squared with $M-1$ degrees of freedom. This test, however, requires running several different models, excluding one of the predictors on each run. Instead, most software packages, including SAS, provide an asymptotically equivalent Wald chi-squared test statistic [see Long (1997) for its formula] that performs the same function. Predictors having significant global effects on violence types, according to this test, are flagged with a superscript b in Table 8.5.
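The Wald version of this global test is a quadratic form in the predictor's $M-1$ coefficients and their estimated covariance matrix, which must include the covariance between the two equations' estimates (something a joint FIML fit supplies). The sketch below assumes NumPy; the function name and the coefficient and covariance values are hypothetical, and Long (1997) should be consulted for the formula as SAS applies it. With $M-1 = 2$, as in the violence example, the chi-squared upper tail has the closed form $\exp(-W/2)$.

```python
import math
import numpy as np

def wald_global_test(b, V):
    """Wald chi-squared for H0: all M - 1 coefficients of one predictor are zero.

    b: the predictor's M - 1 coefficient estimates (one per log odds in (8.1))
    V: their (M - 1) x (M - 1) estimated covariance matrix
    Returns (W, df).
    """
    b = np.asarray(b, dtype=float)
    V = np.asarray(V, dtype=float)
    W = float(b @ np.linalg.solve(V, b))   # b' V^{-1} b without forming the inverse
    return W, b.size

# Hypothetical estimates for one predictor in a three-category model (M - 1 = 2)
b_k = [0.40, 0.25]
V_k = [[0.010, 0.004],
       [0.004, 0.012]]
W, df = wald_global_test(b_k, V_k)
p_value = math.exp(-W / 2.0)   # chi-squared(2) upper tail; valid only because df == 2
print(round(W, 3), df, p_value)
```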

A third test statistic is for the effect of a predictor on a particular log odds. This is simply the ratio of a given coefficient to its asymptotic standard error, which, as in the binary case, is a z-test statistic. Fourth, it may be desirable to test effects of predictors on the nonindependent log odds (the odds of “intense male violence” versus “physical aggression,” in the current example). As noted above, these tests are easily obtained by rerunning the program with the response variable recoded. Fifth, tests of nested models with FIML are accomplished the same way as in the binary case. That is, if model B is nested inside model A (because, for example, the predictors in B are a subset of those in A), $-2\log(L_B/L_A) = -2\log L_B - (-2\log L_A)$ is a chi-squared test for the significance of the difference in fit of the two models, with degrees of freedom equal to the difference in the number of parameters.

Finally, there is a test of collapsibility of outcome categories. Two categories of the outcome variable are collapsible with respect to the predictors if the predictor set is unable to discriminate between them. In the current example, we saw that only one predictor, cohabiting, discriminates significantly between “intense male violence” and “physical aggression.” A global chi-squared test for the collapsibility of these two categories of violence can be conducted as follows. First, I select only the couples experiencing one or the other of these types of violence, a total of 555 couples. Then I estimate a binary logistic regression model for the odds of “intense male violence” versus “physical aggression.” The test of collapsibility is the usual likelihood-ratio chi-squared test that all of the betas (apart from the intercept) in this binary model are zero. Under the null hypothesis that the predictors do not discriminate between these types of violence, this statistic is asymptotically distributed as chi-squared (Long, 1997). The test turns out to have the value 23.51, which, with 11 degrees of freedom, is significant at p < .05 (though not at p < .01). Apparently, the model covariates do generally discriminate between “intense male violence” and “physical aggression,” with cohabiting being the primary discriminator, as noted above.

Estimating Probabilities. The probabilities of being in each category of the response are readily estimated from the estimated log odds. That is, if U is the estimated log odds of “intense male violence” (versus “nonviolence”) for a given couple and V is the estimated log odds of “physical aggression” (versus “nonviolence”) for that couple, the estimated probabilities of each response for that couple are

$$
\begin{aligned}
P(\text{intense male violence}) &= \frac{e^U}{1 + e^U + e^V},\\
P(\text{physical aggression}) &= \frac{e^V}{1 + e^U + e^V},\\
P(\text{nonviolence}) &= \frac{1}{1 + e^U + e^V}.
\end{aligned}
\tag{8.2}
$$


Table 8.6 presents the probabilities for each category of couple violence profile, based on the FIML estimates in Table 8.5. The focus in Table 8.6 is on the effect of alcohol/drug problem in particular. This effect is evaluated at three settings of the other covariates: low risk, average risk, and high risk. Low risk represents a covariate profile that predicts low probabilities of violence. For this profile, I set cohabiting and minority couple each to 0; male’s isolation, economic disadvantage, and open disagreement to 1 standard deviation below the mean; and female’s age at union, relationship duration, and positive communication to 1 standard deviation above the mean. For average risk, all covariates (apart from alcohol/drug problem) are set to zero; because the continuous covariates are centered at their means, zero corresponds to the mean. High-risk couples have cohabiting and minority couple each set to 1; male’s isolation, economic disadvantage, and open disagreement set to 1 standard deviation above the mean; and female’s age at union, relationship duration, and positive communication set to 1 standard deviation below the mean. (Remember that the model also contains an interaction between alcohol/drug problem and economic disadvantage as well as a quadratic effect of relationship duration.) Let’s calculate the probabilities for the first row of Table 8.6 to see how equations (8.2) work. Recalling that 0 represents “intense male violence” and 1 represents “physical aggression,” and employing several decimal places for the coefficients, we have the following estimated log odds (numbers in parentheses are the standard deviations of the continuous regressors, signed according to the profile). The log odds of “intense male violence” for low-risk couples with alcohol or drug problems is

$$
\begin{aligned}
\ln O_0 = {} & -4.024 - .0293(7.0807) + .0275(-6.2958) + .0372(-5.1283)\\
& + .8672 - .0662(12.8226) + .000475(12.8226^2) + .0479(1)(-5.1283)\\
& + .0575(-4.0237) - .5359(1.3788) = -5.7148,
\end{aligned}
$$

and the log odds of “physical aggression” for low-risk couples with alcohol or drug

problems is

$$
\begin{aligned}
\ln O_1 = {} & -2.8099 - .0114(7.0807) + .00815(-6.2958) + .00532(-5.1283)\\
& + .579 - .0489(12.8226) + .00162(12.8226^2) + .0665(1)(-5.1283)\\
& + .0654(-4.0237) - .4084(1.3788) = -3.9182.
\end{aligned}
$$

300 ADVANCED TOPICS IN LOGISTIC REGRESSION

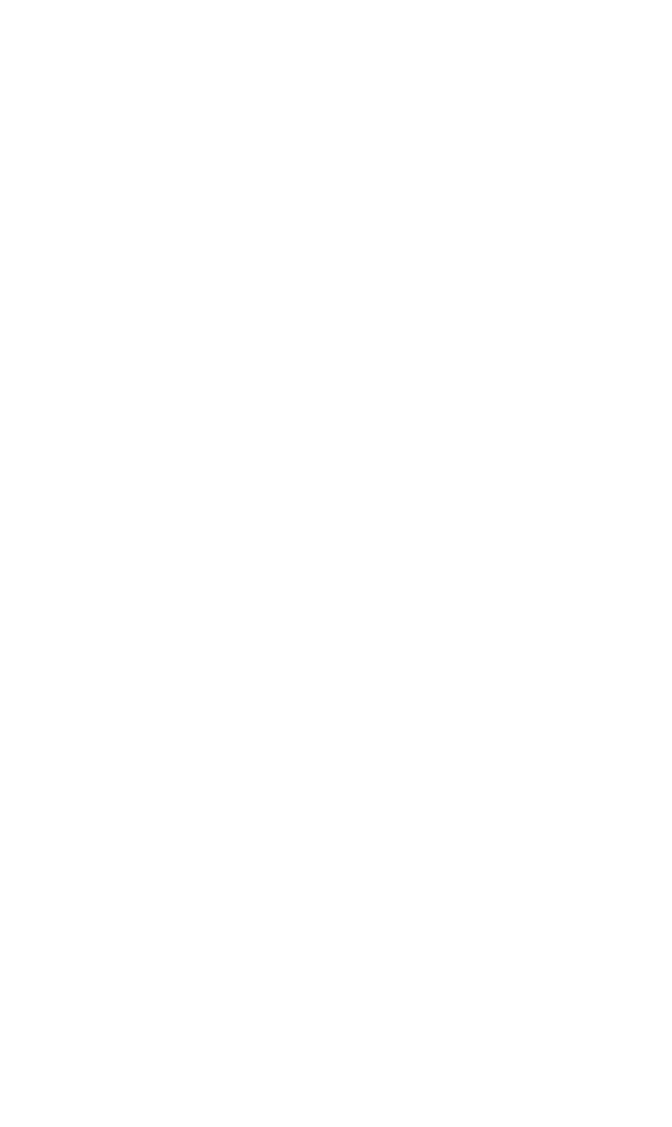

Table 8.6 Predicted Probabilities for Intense Male Violence (IM), Physical Aggression (PA), and Nonviolence (NV), as a Function of Alcohol/Drug Problems

| Settings of Other Covariates | Alcohol/Drug Problems | P(IM) | P(PA) | P(NV) |
|---|---|---|---|---|
| Low risk | Yes | .00322 | .01943 | .97735 |
| | No | .00174 | .01540 | .98286 |
| Average risk | Yes | .03701 | .09342 | .86957 |
| | No | .01659 | .05585 | .92756 |
| High risk | Yes | .50758 | .33663 | .15579 |
| | No | .36520 | .29371 | .34109 |


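The estimated probabilities for each category of couple violence profile are, therefore,

$$
\begin{aligned}
P(\text{intense male violence}) &= \frac{\exp(-5.7148)}{1 + \exp(-5.7148) + \exp(-3.9182)} = .0032,\\
P(\text{physical aggression}) &= \frac{\exp(-3.9182)}{1 + \exp(-5.7148) + \exp(-3.9182)} = .0194,\\
P(\text{nonviolence}) &= \frac{1}{1 + \exp(-5.7148) + \exp(-3.9182)} = .9774.
\end{aligned}
$$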
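The worked calculation can be reproduced by treating each log odds as a sum of coefficient × covariate products and then applying equations (8.2). The plain-Python sketch below copies the coefficients used in the worked example. Cohabiting and minority couple carry the three-decimal Table 8.5 values, but they drop out because both are set to 0 in the low-risk profile. As in the text, the continuous covariates are assumed to be mean-centered, so "±1 SD" means plugging in plus or minus the standard deviation. The dictionary keys are illustrative labels.

```python
import math

# FIML coefficients used in the worked example: (IM vs. NV, PA vs. NV)
coefficients = {
    "intercept":              (-4.024,   -2.8099),
    "cohabiting":             (1.368,    0.591),
    "minority couple":        (0.255,    0.191),
    "female age at union":    (-0.0293,  -0.0114),
    "male isolation":         (0.0275,   0.00815),
    "economic disadvantage":  (0.0372,   0.00532),
    "alcohol/drug problem":   (0.8672,   0.579),
    "duration":               (-0.0662,  -0.0489),
    "duration squared":       (0.000475, 0.00162),
    "econ x alcohol/drug":    (0.0479,   0.0665),
    "open disagreement":      (0.0575,   0.0654),
    "positive communication": (-0.5359,  -0.4084),
}

# Low-risk profile with an alcohol/drug problem (centered covariates at +/- 1 SD)
econ, duration = -5.1283, 12.8226
profile = {
    "intercept": 1.0, "cohabiting": 0.0, "minority couple": 0.0,
    "female age at union": 7.0807, "male isolation": -6.2958,
    "economic disadvantage": econ, "alcohol/drug problem": 1.0,
    "duration": duration, "duration squared": duration ** 2,
    "econ x alcohol/drug": econ * 1.0, "open disagreement": -4.0237,
    "positive communication": 1.3788,
}

U = sum(coefficients[k][0] * v for k, v in profile.items())  # log odds, IM vs. NV
V = sum(coefficients[k][1] * v for k, v in profile.items())  # log odds, PA vs. NV
denom = 1.0 + math.exp(U) + math.exp(V)                       # equations (8.2)
p_im, p_pa, p_nv = math.exp(U) / denom, math.exp(V) / denom, 1.0 / denom
print(round(U, 4), round(V, 4), round(p_im, 5), round(p_pa, 5), round(p_nv, 5))
```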

At this point it is instructive to compare the odds ratios generated by the FIML model in Table 8.5 with the probabilities in Table 8.6, for two reasons. First, odds ratios based on the FIML model must agree with odds ratios generated from ratios of probabilities in Table 8.6. This helps us understand the meaning of the odds ratios. Second, odds ratios convey a different impression than probabilities, particularly in multinomial models. It is therefore important to understand the differences between the “story” told by odds ratios and the “story” told by the probabilities. For example, the probabilities of each type of violence are increased markedly for high-risk as opposed to low- or average-risk couples. For high-risk couples with alcohol or drug problems, the probabilities associated with either type of violence are especially high. In fact, the chances of a high-risk couple with alcohol or drug problems experiencing “intense male violence” are better than 50–50. One might be tempted to infer that alcohol or drug problems are especially predictive of violence for high-risk couples. As high-risk couples are those who are, among other things, 1 standard deviation above the mean on economic disadvantage, we might conclude that alcohol or drug problems have the strongest effect on violence at high (i.e., 1 standard deviation above the mean) economic disadvantage.

The odds ratios, however, present a different picture. Consider the effect of alcohol/drug problem on “intense male violence” versus “physical aggression.” That is, given violence, what is the impact of alcohol/drug problem on the odds that it is of the “intense-male” type? Employing four decimal places for increased accuracy, the coefficient for the main effect of alcohol/drug problem (from Table 8.5) is .8672 − .5790 = .2882. The coefficient for economic disadvantage × alcohol/drug problem is .0479 − .0665 = −.0186. Therefore, the effect of alcohol/drug problem on the log odds of “intense male violence” (versus “physical aggression”) is .2882 − .0186 × economic disadvantage. Equivalently, the effect on the odds is

$$
\psi_{\text{alc/drug}} = \exp(.2882)\,[\exp(-.0186)]^{\text{ecndisad}} = 1.33402\,(.98157)^{\text{ecndisad}}.
$$

This suggests that each unit increase in economic disadvantage reduces the effect of alcohol/drug problem by a factor of .98157, approximately a 2% reduction. In other words, the effect of alcohol/drug problem actually diminishes with greater economic disadvantage. In particular, at 1 standard deviation below mean economic disadvantage, the effect of alcohol/drug problem is

$$
\psi_{\text{alc/drug}} = 1.33402\,(.98157)^{-5.1283} = 1.46755.
$$

At mean economic disadvantage the effect is

$$
\psi_{\text{alc/drug}} = 1.33402.
$$

At 1 standard deviation above mean economic disadvantage, the effect is

$$
\psi_{\text{alc/drug}} = 1.33402\,(.98157)^{5.1283} = 1.21264.
$$
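Because the interaction makes the odds ratio a function of economic disadvantage, it helps to write it as a small function and evaluate it at the three settings used above. The sketch below uses Python's `math` module and the four-decimal coefficient differences from the text; the function name is hypothetical, and economic disadvantage is assumed to be mean-centered, as the text's "mean = 0" evaluation implies. Small discrepancies in the fifth decimal relative to the text come from the text's rounding of 1.33402 and .98157.

```python
import math

# Differences of FIML coefficients (IM vs. NV minus PA vs. NV), four decimals as in the text
b_alc = 0.8672 - 0.5790     # main effect of alcohol/drug problem on log odds IM vs. PA
b_inter = 0.0479 - 0.0665   # economic disadvantage x alcohol/drug problem

def psi_alc_drug(econ_disadvantage):
    """Odds ratio for alcohol/drug problem (IM vs. PA) at a centered economic disadvantage."""
    return math.exp(b_alc + b_inter * econ_disadvantage)

sd_econ = 5.1283
for label, value in [("-1 SD", -sd_econ), ("mean", 0.0), ("+1 SD", sd_econ)]:
    print(label, round(psi_alc_drug(value), 5))
```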

These figures agree with the probabilities in Table 8.6. Taking ratios of probabilities, the effect of alcohol/drug problem for low-risk couples (who are at −1 SD on economic disadvantage) is

$$
\psi_{\text{alc/drug}} = \frac{.00322/.01943}{.00174/.01540} = 1.46674.
$$

The effect of alcohol/drug problem for average-risk couples (who are at mean economic disadvantage) is

$$
\psi_{\text{alc/drug}} = \frac{.03701/.09342}{.01659/.05585} = 1.33369,
$$

and the effect of alcohol/drug problem for high-risk couples (who are at +1 SD on economic disadvantage) is

$$
\psi_{\text{alc/drug}} = \frac{.50758/.33663}{.36520/.29371} = 1.21266.
$$

These values agree with those based on the FIML model, within rounding error.

The point of this exercise is that odds ratios reflect a comparison of odds rather than probabilities. In that capacity, they can indicate a declining effect even in the presence of increasing probabilities, because it is a ratio of probabilities (i.e., the odds) that is being compared. Thus, even though the probability of “intense male violence” increases dramatically for those with alcohol or drug problems across degrees of couple risk, the effect of alcohol or drug problems on the odds that violence, given that it occurs, is of the “intense-male” type is declining. Because the presentation of the equations for the nonindependent log odds can lead to some confusion of this type, I prefer to focus on the equations for the independent log odds. If an appropriate baseline category is chosen, these are usually sufficient to describe the results.
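Both stories can be read off Table 8.6 at once. The plain-Python sketch below, which uses only the tabled probabilities, computes, for each risk profile, the odds of "intense male violence" vs. "physical aggression" among couples with alcohol or drug problems, along with the odds ratio for alcohol/drug problem. The odds rise across the profiles while the odds ratio falls, which is the contrast the paragraph describes. The dictionary layout is an illustrative choice.

```python
# Predicted probabilities from Table 8.6: (P(IM), P(PA)) with and without alcohol/drug problems
table_8_6 = {
    "low risk":     {"yes": (0.00322, 0.01943), "no": (0.00174, 0.01540)},
    "average risk": {"yes": (0.03701, 0.09342), "no": (0.01659, 0.05585)},
    "high risk":    {"yes": (0.50758, 0.33663), "no": (0.36520, 0.29371)},
}

results = {}
for profile, rows in table_8_6.items():
    odds_yes = rows["yes"][0] / rows["yes"][1]   # odds of IM vs. PA, given violence
    odds_no = rows["no"][0] / rows["no"][1]
    results[profile] = (odds_yes, odds_yes / odds_no)
    print(f"{profile:13s} odds(yes)={odds_yes:.4f}  odds ratio={odds_yes / odds_no:.5f}")
```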


Ordered Categorical Variables

When the values of a categorical variable are ordered, it is usually wise to take advantage of that information in model specification. For example, the trichotomous categorization of violence used for the analyses in Tables 8.5 and 8.6 represents different degrees of violence severity, as mentioned previously. In this section, therefore, I treat couple violence profile as an ordinal variable. The ordered logit model is a variant of logistic regression specifically designed for ordinal-level dependent variables. Although there is more than one way to form logits for ordinal variables [see, e.g., Agresti (1984, 1989) for other formulations], I focus on cumulative logits. These are especially appropriate if the dimension represented by the ordinal measure could theoretically be regarded as continuous (Agresti, 1989). As I have already argued, this is the case for couple violence profile. Cumulative logits are defined as follows. Suppose that the response variable consists of J ordered categories coded 1, 2, ..., J. The jth cumulative odds is the ratio of the probability of being in category j or lower on Y to the probability of being in category j + 1 or higher. That is, if $O_{\le j}$ represents the jth cumulative odds, and $\pi_j$ is the probability of being in category j on Y, then

$$
O_{\le j} = \frac{\pi_1 + \pi_2 + \cdots + \pi_j}{\pi_{j+1} + \pi_{j+2} + \cdots + \pi_J}.
$$

Cumulative odds are therefore constructed by utilizing J − 1 bifurcations of Y. In each one, the probability of being lower on Y (the sum of probabilities that Y ≤ j) is contrasted with the probability of being higher on Y (the sum of probabilities that Y > j). This strategy for forming odds makes sense only if the values of Y are ordered. With regard to violence, the first cumulative odds, $O_{\le 0}$, is the ratio of the probability that couple violence profile is 0 (“intense male violence”) to the probability that couple violence profile is 1 (“physical aggression”) or 2 (“nonviolence”). Using the marginal probabilities of each type of violence given above, the marginal sample value is .036/(.099 + .864) = .037. The second cumulative odds, $O_{\le 1}$, is the ratio of the probability that couple violence profile is 0 or 1 to the probability that it is 2, with marginal value (.036 + .099)/.864 = .156. In other words, each odds is the odds of more severe vs. less severe violence, with “more severe” and “less severe” being defined by a different value of j (the cutpoint; Agresti, 1989) in each case. The jth cumulative logit is just the log of this odds. For a J-category variable, there are a total of J − 1 such logits that can be constructed. These logits are ordered, because the probabilities in the numerator of the odds keep accumulating (and those in the denominator keep shrinking) as we go from the first through the (J − 1)th logit. That is, if $U_j$ is the jth cumulative logit, then $U_1 \le U_2 \le \cdots \le U_{J-1}$.
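The cumulative odds are simple partial sums, so the two marginal values above and their ordering can be checked in a few lines. The sketch below is plain Python; the function name is hypothetical, and the inputs are the rounded marginal proportions quoted earlier, ordered from most to least severe (0 = intense male violence, 1 = physical aggression, 2 = nonviolence).

```python
# Rounded marginal proportions, ordered from most to least severe (0 = IM, 1 = PA, 2 = NV)
probs = [0.036, 0.099, 0.864]

def cumulative_odds(p):
    """Return the J - 1 cumulative odds P(Y <= j) / P(Y > j) for the ordered categories."""
    out = []
    for j in range(len(p) - 1):
        lower = sum(p[: j + 1])   # probability at or below cutpoint j
        upper = sum(p[j + 1 :])   # probability above cutpoint j
        out.append(lower / upper)
    return out

odds = cumulative_odds(probs)
print([round(o, 3) for o in odds])
```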

One model for the cumulative logits, based on a set of K explanatory variables, is

$$
\log O_{\le j} = \beta_0^{j} + \beta_1^{j}X_1 + \beta_2^{j}X_2 + \cdots + \beta_K^{j}X_K, \tag{8.3}
$$

where the superscripts on the coefficients of the regressors indicate that the effects of the regressors can change, depending on the cutpoint, j. This model has heuristic value as a starting point. Strictly speaking, however, it is not legitimate for an ordinal response. The reason is that at any given setting of the covariate vector, $\mathbf{x}$, it must be the case that $P(Y \le j \mid \mathbf{x}) \le P(Y \le j+1 \mid \mathbf{x})$ for all j. If covariates’ effects can vary over cutpoints, however, it is possible that $P(Y \le j \mid \mathbf{x}) > P(Y \le j+1 \mid \mathbf{x})$ for some j, which is logically untenable if Y is ordered. (I wish to thank Alan Agresti for bringing this model flaw to my attention.) At any rate, model (8.3) is easily estimated using binary logistic regression software, as it is just a binary logistic regression based on bifurcating Y at the jth cutpoint. Table 8.7 presents the results of estimating this model for couple violence profile. Estimates in the second column, for the log odds of “violence” versus “nonviolence,” are just the estimates from model 2 in Table 8.4, repeated here for completeness. Estimates in the first column are for the log odds of “intense male violence” versus any other response.
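The flaw just described is easy to exhibit numerically. The plain-Python sketch below uses hypothetical cutpoint-specific coefficients for a three-category response with one regressor (categories coded 1, 2, 3 as in the general definition). Because the slopes differ across cutpoints, the two fitted cumulative probabilities cross. At x = 2, the model implies $P(Y \le 1 \mid x) > P(Y \le 2 \mid x)$, which no ordered response can satisfy. Constraining each slope to be equal across cutpoints, with ordered intercepts, rules this crossing out.

```python
import math

def logistic(eta):
    return 1.0 / (1.0 + math.exp(-eta))

# Hypothetical cutpoint-specific coefficients for model (8.3), J = 3, one regressor
b_cut1 = (-1.0, 2.0)   # logit P(Y <= 1 | x) = -1.0 + 2.0 x
b_cut2 = (0.0, 0.5)    # logit P(Y <= 2 | x) =  0.0 + 0.5 x

def cumulative_probs(x):
    """Return (P(Y <= 1 | x), P(Y <= 2 | x)) implied by the two separate binary logits."""
    return (logistic(b_cut1[0] + b_cut1[1] * x), logistic(b_cut2[0] + b_cut2[1] * x))

for x in (-1.0, 0.0, 2.0):
    p1, p2 = cumulative_probs(x)
    status = "ok" if p1 <= p2 else "violates P(Y<=1|x) <= P(Y<=2|x)"
    print(f"x={x:+.1f}  P(Y<=1)={p1:.3f}  P(Y<=2)={p2:.3f}  {status}")
```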

Invariance to the Cutpoint. For the most part, results suggest that regressors have the same effects on the log odds of more severe versus less severe violence, regardless of the cutpoint used to make this distinction. For example, a unit increase in open disagreement elevates the odds of “intense male violence” versus any other response by a factor of exp(.041) = 1.042, whereas it raises the odds of “any violence” versus “no


Table 8.7 Ordered Logit Models for Violence Profile as a Function of Couple Characteristics

| Predictor | Intense Male Violence vs. Other Response | Violence vs. Nonviolence | More vs. Less Violence |
|---|---|---|---|
| Intercept | −4.008*** | −2.553*** | |
| Intercept₁ | | | −4.106*** |
| Intercept₂ | | | −2.528*** |
| Cohabiting | 1.202*** | .867*** | .909*** |
| Minority couple | .207 | .206 | .182 |
| Female’s age at union | −.029* | −.016 | −.017* |
| Male’s isolation | .026 | .013 | .013 |
| Economic disadvantage | .036* | .014 | .018 |
| Alcohol/drug problem | .724** | .654*** | .639*** |
| Relationship duration | −.058*** | −.052*** | −.052*** |
| (Relationship duration)² | .0001 | .0015*** | .0014*** |
| Economic disadvantage × alcohol/drug problem | .012 | .061* | .048 |
| Open disagreement | .041 | .064*** | .062*** |
| Positive communication | −.443*** | −.441*** | −.435*** |
| Model $\chi^2$ | 177.792*** | 453.110*** | 460.817*** |
| Model df | 11 | 11 | 11 |
| Score test | | | 12.457 |
| Score df | | | 11 |
| $R^2_L$ | .139 | .140 | .118 |

\* p < .05. \*\* p < .01. \*\*\* p < .001.
