Accardi L., Freudenberg W., Ohya M. (Eds.) Quantum Bio-informatics IV: From Quantum Information to Bio-informatics


Although these divergences look very natural, it is usually difficult to compute them unless the operators $\varphi$ and $\omega$ commute, in which case both can be written as

$$V_{l_r}(\omega;\varphi) = (\varphi-\omega)(1) - \omega\big(l_r(\varphi/\omega)\big), \tag{14}$$

with $l_{1/2}(q) = 2\big(q^{1/2}-1\big)$ for $r=1/2$, and for $r=1$ with

$$l_1(q) = \tfrac12\big(q-1-|q-1|\big) \equiv \min(q-1,\,0) \qquad \forall q\ge 0. \tag{15}$$

Note that the operator function $l_{1/2}$ is the square-root case of the Rényi logarithm

$$l_r(q) = \frac1r\big(e^{r\ln q}-1\big) = \frac1r\big(q^r-1\big), \qquad l_0(q) = \ln q, \tag{16}$$

or $r$-logarithm, which is well defined for any $0\le r<1$ as a smooth, strictly monotone and concave operator function of $q>0$, including the limiting case $r\to0$, in which $l_0=\lim_{r\to0}l_r$ is the natural logarithm $l_0=\ln$. It can be naturally extended to a proper concave function on $\mathbb R$ by $l(q)=-\infty$ for $q\le0$. It has finite, strictly negative values for $0<q<1$, satisfies $l(1)=0$ with the normalized derivative $l'(1)=1$ at $q=1$, and is strictly positive for $q>1$.
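The properties of the Rényi logarithm (16) listed above are easy to check numerically. The following Python sketch is only an illustration: the name `renyi_log` and the finite-difference step are our own choices, and it works with scalar arguments (eigenvalues of a positive operator) rather than with operators.

```python
import math


def renyi_log(q: float, r: float) -> float:
    """Renyi r-logarithm l_r(q) = (q**r - 1)/r for 0 <= r < 1, with l_0 = ln.

    Uses the proper concave extension l_r(q) = -inf for q <= 0.
    """
    if q <= 0:
        return -math.inf
    if r == 0:
        return math.log(q)
    # expm1(r * ln q) = q**r - 1, computed stably for small r
    return math.expm1(r * math.log(q)) / r


def derivative_at_one(r: float, h: float = 1e-6) -> float:
    # central finite difference for l_r'(1), expected to be close to 1
    return (renyi_log(1 + h, r) - renyi_log(1 - h, r)) / (2 * h)


for r in (0.0, 0.25, 0.5, 0.9):
    print(r, renyi_log(0.5, r), renyi_log(1.0, r), renyi_log(2.0, r),
          round(derivative_at_one(r), 6))
```

The `r == 0` branch implements the limiting case $l_0=\ln$ explicitly, while `expm1` keeps small positive $r$ numerically close to that limit.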

However, in the case $r=1$ the Rényi "logarithm" $l(q)=q-1$ is not concave but only affine: substituting it for $l_1$ in (14) gives the trivial divergence $d(\omega,\varphi)=0$. This is why in the case $r=1$ we redefine the divergence by another monotone concave function (15), which, however, is neither strictly monotone nor strictly concave, and is not smooth at $q=1$.
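In the commutative case, (14) and (15) act eigenvalue by eigenvalue, so they can be evaluated on lists of eigenvalues of $\omega$ and $\varphi$ taken in a common eigenbasis. The sketch below (helper names are hypothetical) checks that $l_{1/2}$ gives $\sum_i(\sqrt{\omega_i}-\sqrt{\varphi_i})^2$, that (15) gives half the $\ell^1$ distance for equally normalized states, and that the affine $l(q)=q-1$ gives the trivial divergence.

```python
import math


def l_half(q: float) -> float:
    # Renyi logarithm with r = 1/2: l_{1/2}(q) = 2(sqrt(q) - 1)
    return 2.0 * (math.sqrt(q) - 1.0)


def l_one(q: float) -> float:
    # concave but non-smooth contrast function (15) used for r = 1: min(q - 1, 0)
    return min(q - 1.0, 0.0)


def divergence(omega, phi, l):
    """Commutative l-divergence (14): (phi - omega)(1) - omega(l(phi/omega)).

    omega, phi: positive eigenvalues of commuting densities in a common eigenbasis.
    """
    shift = sum(phi) - sum(omega)
    return shift - sum(w * l(p / w) for w, p in zip(omega, phi))


omega = [0.5, 0.3, 0.2]
phi = [0.2, 0.3, 0.5]
hellinger = sum((math.sqrt(w) - math.sqrt(p)) ** 2 for w, p in zip(omega, phi))
half_l1 = 0.5 * sum(abs(w - p) for w, p in zip(omega, phi))
print(divergence(omega, phi, l_half), hellinger)
print(divergence(omega, phi, l_one), half_l1)
print(divergence(omega, phi, lambda q: q - 1.0))  # affine l: trivial divergence
```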

The information divergence $V(\omega;\varphi)$ of $\omega$ from $\varphi$ is usually defined as a positive negaentropy $V_l=-S_l$ by the semifinite relative entropy

$$S_l(\omega;\varphi) = \omega\big(l(\varphi/\omega)\big). \tag{17}$$

Here $\omega(m)=(m,\omega)$, and $l$ is usually taken to be the Rényi logarithm (16), $r\in[0,1[$, for which $\ln(\varphi/\omega)$ is usually understood as $\ln\varphi-\ln\omega$.

3.2. The general information divergences

The general information divergence $V(\omega;\varphi)$ of states on a matrix algebra $M$ can be defined, like a distance, to take only positive values. Unlike a distance, however, it is not assumed to be symmetric or to satisfy the triangle inequality, and it is usually allowed to take the infinite value $+\infty$, say, for some central states $\omega\ne\varphi$ on an infinite-dimensional $M\subseteq\mathcal B(\mathcal H)$.

The reference state $\varphi$, or weight as an unnormalized state, is said to be tracial, or central, if the density operator $\varphi$ commutes with all $\omega\in\mathcal S_M$. Obviously this is the case if $\varphi=1$, corresponding to the standard trace $\varphi(m)=(m,1)=\mathrm{Tr}[m]$, which majorizes, as $1\ge\omega$, any state $\omega$ normalized as $\tau[\omega]:=(1,\omega)=1$ $\forall\omega\in\mathcal S_M$ with respect to the trace $\tau$ on $M_*$.

If the state $\omega$ is not too different from the reference $\varphi$, in the sense that it is dominated by the equally normalized $\varphi$, i.e. if $\varphi(1)=\omega(1)$ and $\omega\le\lambda\varphi$ for some $\lambda>0$, a finite information divergence $V(\omega;\varphi)$ of $\omega$ from $\varphi$ is usually defined as a positive negaentropy $V_l=-S_l$ by the semifinite relative entropy (17).

More generally, we shall define a positive $l$-divergence $V_l$ as dual to a relative $l$-entropy in the sense

$$V_l(\omega,\varphi)+S_l(\omega,\varphi) = (\varphi-\omega)(r_l), \qquad r_l=l'(1), \tag{18}$$

for a suitable contrast function $l$.

One can always take $l(q)=e^{r\ln q}-1\equiv r\,l_r(q)$, having $r_l=l'(1)=r$, or the Rényi logarithm $l=l_r$, $0\le r<1$, normalized by $l_r'(1):=\partial_ql_r(1)=1$, but $l$ can be any operator-monotone concave function $l:\mathbb R_+\to\mathbb R$ that is positive on $(1,\infty)$, negative on $(0,1)$ and smooth at $q=1$.

Note that the last condition excludes the function (15) corresponding to the trace distance $V=d_1$, for which the entropy $S$ is not well defined by (18). It can, however, be taken to be zero, $S_{l_1}(\omega,\varphi)=0$ for any $\omega\le\varphi$, by choosing the subderivative $r_l\in\partial l_1(1)=[0,1]$ as $r_l=1$.
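In the commuting case the choice $r_l=1$ can be illustrated directly. In this minimal sketch (hypothetical helper names, densities given by eigenvalues in a common basis) the divergence built from (15) reduces to $\sum_i(\varphi_i-\omega_i)_+$, and the entropy obtained from (18) vanishes whenever $\omega\le\varphi$ and becomes negative otherwise.

```python
def trace_distance_divergence(omega, phi):
    # V from (14) with the contrast function (15): sum of positive parts of phi - omega
    return sum(max(p - w, 0.0) for w, p in zip(omega, phi))


def entropy_via_duality(omega, phi, r_l=1.0):
    # duality (18): S = r_l * (phi - omega)(1) - V, here with the subderivative r_l = 1
    return r_l * (sum(phi) - sum(omega)) - trace_distance_divergence(omega, phi)


print(entropy_via_duality([0.2, 0.1, 0.3], [0.5, 0.3, 0.4]))  # omega <= phi
print(entropy_via_duality([0.6, 0.1], [0.5, 0.3]))            # omega not dominated by phi
```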

The above properties of the contrast function are derived from the following divergence axioms if they hold on the whole cone $\mathfrak M$ of $\omega$, or at least on the convex subset $\mathfrak M_0=\{\omega\ge0:\ \omega(1)\le1\}$ containing $0\in\mathfrak M$.

By UCP we denote the properties of unitality, $\Lambda(1)=1$, and complete positivity, $[\Lambda(b_i^*b_k)]\ge0$, of a linear normal map $\Lambda:\mathbb B\to M$.

(1) $V(\omega;\varphi)\ge0$, with $V=0\Leftrightarrow\omega=\varphi$. (Strict positivity and distinguishability)

(2) $V\big(\oplus\lambda_i\omega_i,\oplus\lambda_i\varphi_i\big)=\sum\lambda_iV(\omega_i,\varphi_i)$ $\forall\lambda_i\ge0$, $\sum\lambda_i=1$. (Direct affinity)

(3) $V(\omega\circ\Lambda;\varphi\circ\Lambda)\le V(\omega;\varphi)$ for any UCP map $\Lambda$. (Operational monotonicity)

In addition, we say that $V(\omega;\varphi)$ is semifinite if

(4) $V(\omega;\varphi)<\infty$ if $\omega\le\lambda\varphi$ for a positive $\lambda$;

that it is smooth (differentiable) if

(5) the function $d(s,t)=V(\omega+s\vartheta;\varphi+t\vartheta)$ is smooth;

and that it is a negaentropy defining the entropy $S=(\varphi-\omega)(r)-V$ if

(6) $S(\omega;\varphi)\le S(\omega;\varphi_1)$ for every $\varphi\le\varphi_1$ and some $r>0$.
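A commutative illustration of axioms (1)-(3), assuming $l=\ln$ and densities given by positive eigenvalues: here a unital positive map between commutative algebras is modelled by a row-stochastic matrix (the names `v_ln` and `pull_back` are ours). This is a sanity check on random data, not a proof.

```python
import math
import random


def v_ln(omega, phi):
    # commutative logarithmic divergence: (phi - omega)(1) - omega(ln(phi/omega))
    return sum(p - w + w * math.log(w / p) for w, p in zip(omega, phi))


def pull_back(state, T):
    """omega o Lambda for the map (Lambda b)_i = sum_j T[i][j] b_j from C^n to C^k.

    T is a k x n row-stochastic matrix, so Lambda(1) = 1; on commutative
    algebras positivity already implies complete positivity.
    """
    n = len(T[0])
    return [sum(state[i] * T[i][j] for i in range(len(T))) for j in range(n)]


random.seed(0)
omega = [random.uniform(0.1, 1.0) for _ in range(4)]
phi = [random.uniform(0.1, 1.0) for _ in range(4)]
rows = [[random.random() for _ in range(3)] for _ in range(4)]
T = [[x / sum(row) for x in row] for row in rows]

print(v_ln(omega, phi) > 0, v_ln(omega, omega) == 0.0)                  # axiom (1)
print(v_ln(pull_back(omega, T), pull_back(phi, T)) <= v_ln(omega, phi))  # axiom (3)
```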

Note that in the commutative case $[\omega,\varphi]=0$ the above axioms define the $l$-entropy (17) and the corresponding divergence uniquely up to the choice of the function $l$, similarly to the entropic function $g$ in Refs. 18, 19, if they hold on the whole cone $\mathfrak M$ of $\omega$, or at least on the convex subset $\mathfrak M_0=\{\omega\ge0:\ \omega(1)\le1\}$ containing $0\in\mathfrak M$.

Given a relative entropy $S$, the function $l$ can easily be deduced as $l(q)=S(1;q)$, corresponding to the case $\omega=0\oplus1$, $\varphi=0\oplus q$, and $V(\omega;\varphi)$ is uniquely defined by such $l$ for the completely decomposable $\omega=\oplus\lambda_i$ and $\varphi=\oplus\lambda_iq_i$ due to the direct additivity. In what follows we shall always choose $r=1$ by normalizing $l$ such that $r_l=l'(1)=1$.

3.3. The relative $l$-entropies of types A&B

If $\varphi$ and $\omega$ do not commute, $\varphi/\omega$ is not uniquely defined. In the logarithmic case $l=\ln$ the naive convention $\ln(\varphi/\omega)=\ln\varphi-\ln\omega$ gives the Araki-Umegaki relative entropy

$$S^{(A)}_{\ln}(\omega;\varphi) = \tau\big[\omega^{1/2}(\ln\varphi-\ln\omega)\,\omega^{1/2}\big] = \omega[\ln\varphi-\ln\omega]. \tag{19}$$

Note that for noncommuting $\varphi$ and $\omega$ this convention does not lead to the natural generalization of the classical formula $S_{\ln}(\omega;\varphi)=\varphi\big(h(\omega/\varphi)\big)$ in terms of the logarithmic entropic function $h(p)=-p\ln p$ of the Radon-Nikodym (RN) density $p$ of $\omega$ with respect to $\varphi$.

One can also use this convention to produce the relative $l$-entropy of A-type for any contrast function $l$, although it may look even less natural, as in the case of the A-type Rényi entropy

$$S^{(A)}_r(\omega;\varphi) = \tau\big[\omega^{1/2}\big(e^{r(\ln\varphi-\ln\omega)}-1\big)\omega^{1/2}\big] = \omega\big(e^{r(\ln\varphi-\ln\omega)}-1\big),$$

corresponding to $l(q)=q^r-1$.
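Numerically, the A-type entropies only need matrix logarithms and exponentials of Hermitian operators. The sketch below assumes NumPy, computes these functions through `numpy.linalg.eigh`, and uses randomly generated full-rank densities; the helper names are hypothetical. It evaluates (19) and the A-type Rényi entropy with $l(q)=q^r-1$, whose ratio by $r$ approaches (19) as $r\to0$.

```python
import numpy as np


def herm_fn(a, f):
    """Apply a scalar function f to a Hermitian matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh((a + a.conj().T) / 2)
    return (vecs * f(vals)) @ vecs.conj().T


def random_density(d, rng):
    """Random full-rank density matrix with unit trace (illustrative data only)."""
    g = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
    rho = g @ g.conj().T + 0.1 * np.eye(d)
    return rho / np.trace(rho).real


def entropy_A_ln(omega, phi):
    # Araki-Umegaki form (19): omega[ln phi - ln omega]
    diff = herm_fn(phi, np.log) - herm_fn(omega, np.log)
    return float(np.trace(omega @ diff).real)


def entropy_A_renyi(omega, phi, r):
    # A-type Renyi entropy with l(q) = q**r - 1: omega(exp(r(ln phi - ln omega)) - 1)
    diff = herm_fn(phi, np.log) - herm_fn(omega, np.log)
    x = herm_fn(r * diff, np.exp) - np.eye(phi.shape[0])
    return float(np.trace(omega @ x).real)


rng = np.random.default_rng(1)
omega, phi = random_density(3, rng), random_density(3, rng)
print(entropy_A_ln(omega, phi))                  # <= 0 for equally normalized states
print(entropy_A_renyi(omega, phi, 1e-6) / 1e-6)  # close to the logarithmic value
```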

Moreover, if $\varphi$ is not dominated by $\omega$, this entropy is ill-defined, while $h(\omega/\varphi)$ may still be well defined as a function of the positive bounded operator $\varphi^{-1/2}\omega\varphi^{-1/2}$ for any $\omega$ dominated by $\varphi$. Therefore, it is more natural to define the B-type relative $l$-entropy

$$S^{(B)}_l(\omega;\varphi) = \tilde\varphi\big(h(\omega_\varphi)\big) \tag{20}$$

in terms of the weight $\tilde\varphi=\tau\circ\pi_\varphi$, $\pi_\varphi(p)=\varphi^{1/2}p\,\varphi^{1/2}$, and the contravariant RN-density $\omega_\varphi=\varphi^{-1/2}\omega\varphi^{-1/2}$ of $\omega$ with respect to the weight $\varphi$. Here $h(p)=p\,l(p^{-1})$ is the $l$-entropic function which, for any contrast function $l$, is positive and concave on $[0,1]$ with $h(0)=0=h(1)$; for a smooth $l$ it attains its maximum $h(p_0)=l'(p_0^{-1})>0$ at $p_0=q_0^{-1}$, where $q_0$ is the unique solution of the equation $q\,l'(q)=l(q)$.
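For the Rényi logarithm $l=l_r$ one has $h(p)=(p^{1-r}-p)/r$, and the condition $q\,l'(q)=l(q)$ gives $q_0^r=1/(1-r)$, i.e. $p_0=(1-r)^{1/r}$ with maximal value $h(p_0)=p_0^{1-r}$ ($p_0=e^{-1}$ in the limit $r\to0$). The following Python sketch locates the maximum by golden-section search and compares it with this closed form; it is a scalar check only, with function names of our own choosing.

```python
import math


def renyi_log(q, r):
    # l_r(q) = (q**r - 1)/r for 0 < r < 1, and l_0 = ln
    return math.log(q) if r == 0 else (q ** r - 1.0) / r


def h_entropic(p, r):
    # l-entropic function h(p) = p * l_r(1/p) on [0, 1], with h(0) = 0 by continuity
    return 0.0 if p == 0 else p * renyi_log(1.0 / p, r)


def argmax_h(r, tol=1e-10):
    # golden-section search for the maximiser of the concave function h on [0, 1]
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = 0.0, 1.0
    c, d = b - g * (b - a), a + g * (b - a)
    while b - a > tol:
        if h_entropic(c, r) < h_entropic(d, r):
            a, c = c, d
            d = a + g * (b - a)
        else:
            b, d = d, c
            c = b - g * (b - a)
    return (a + b) / 2.0


def predicted_argmax(r):
    # p0 = 1/q0 with q0 solving q l'(q) = l(q); for l_r this gives q0**r = 1/(1 - r)
    return math.exp(-1.0) if r == 0 else (1.0 - r) ** (1.0 / r)


for r in (0.0, 0.3, 0.6):
    print(r, argmax_h(r), predicted_argmax(r))
```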

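The B-type relative entropy was introduced by Belavkin and Staszewski in 1986 for the case $l=\ln$. One can rewrite it in a form similar to (19) as

$$S^{(B)}_{\ln}(\omega;\varphi) = -\tau\big[\ln(\omega\varphi^{-1})\,\omega\big], \tag{21}$$

where $\ln(\omega\varphi^{-1})\,\omega=\omega\ln(\varphi^{-1}\omega)$ is understood as

$$\omega\ln(\varphi^{-1}\omega) = \omega^{1/2}\ln\big(\omega^{1/2}\varphi^{-1}\omega^{1/2}\big)\,\omega^{1/2}. \tag{22}$$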

Ohya and Petz proved that the Belavkin-Staszewski divergence distinguishes $\omega$ from $\varphi$ better than the Araki-Umegaki divergence, in the sense that $V^{(B)}_{\ln}\ge V^{(A)}_{\ln}$, and that it satisfies all the axioms required above.
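The B-type entropy (20), its rewritten form (21)-(22) and the Ohya-Petz comparison can be checked on random matrices. This is a minimal NumPy sketch under the assumptions of full-rank densities and $l=\ln$; all helper names are hypothetical. For commuting arguments the A- and B-type values coincide.

```python
import numpy as np


def herm_fn(a, f):
    """Apply a scalar function f to a Hermitian matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh((a + a.conj().T) / 2)
    return (vecs * f(vals)) @ vecs.conj().T


def random_density(d, rng):
    g = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
    rho = g @ g.conj().T + 0.1 * np.eye(d)
    return rho / np.trace(rho).real


def entropy_A_ln(omega, phi):
    # Araki-Umegaki (19): omega[ln phi - ln omega]
    return float(np.trace(omega @ (herm_fn(phi, np.log) - herm_fn(omega, np.log))).real)


def entropy_B_ln(omega, phi):
    # B-type (20): tilde-phi(h(omega_phi)), h(p) = -p ln p, omega_phi = phi^{-1/2} omega phi^{-1/2}
    inv_sqrt, sqrt_phi = herm_fn(phi, lambda x: x ** -0.5), herm_fn(phi, np.sqrt)
    rn = inv_sqrt @ omega @ inv_sqrt
    return float(np.trace(sqrt_phi @ herm_fn(rn, lambda x: -x * np.log(x)) @ sqrt_phi).real)


def entropy_B_ln_alt(omega, phi):
    # form (21)-(22): -tau[omega^{1/2} ln(omega^{1/2} phi^{-1} omega^{1/2}) omega^{1/2}]
    sw = herm_fn(omega, np.sqrt)
    inner = sw @ herm_fn(phi, lambda x: 1.0 / x) @ sw
    return float(-np.trace(sw @ herm_fn(inner, np.log) @ sw).real)


rng = np.random.default_rng(7)
omega, phi = random_density(3, rng), random_density(3, rng)
s_a, s_b = entropy_A_ln(omega, phi), entropy_B_ln(omega, phi)
print(s_a, s_b, entropy_B_ln_alt(omega, phi))
print(-s_b >= -s_a)  # V^(B) >= V^(A) for equally normalized states
```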

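Note that the Hellinger divergences $V^{(\cdot)}_{l_{1/2}}(\omega;\varphi)$ of A&B-type can be written as (13) in terms of the corresponding nonsymmetric A&B-type fidelities $f^{(\cdot)}_{1/2}(\omega;\varphi)$, and that

$$f^{(A)}_r = \omega\big(e^{r(\ln\varphi-\ln\omega)}\big), \qquad f^{(B)}_r = \tilde\varphi\big[(\varphi^{-1/2}\omega\varphi^{-1/2})^{1-r}\big]$$

define the relative $l_r$-entropies of the corresponding type as

$$S^{(\cdot)}_{l_r}(\omega;\varphi) = \frac1r\big(f^{(\cdot)}_r(\omega;\varphi)-\omega(1)\big).$$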

3.4. The entropy increase, its concavity and additivity

The relative entropy $S$, defined by the general divergence as $S(\omega;\varphi)=\varphi(1)-\omega(1)-V(\omega;\varphi)$, has almost all the properties of $V$ except the positivity (1), unless $\varphi\ge\omega$. Indeed, taking the standard normalization $r=1$ in the definition (6) of $S$, we have $S(\omega;\varphi)\le\varphi(1)-\omega(1)<0$ if $(\varphi-\omega)(1)<0$, since $V(\omega;\varphi)\ge0$. Thus the relative entropy $S$, unlike the divergence $V$, can be used to distinguish only states with equal normalization $\omega(1)=\varphi(1)$, when $S=-V$.

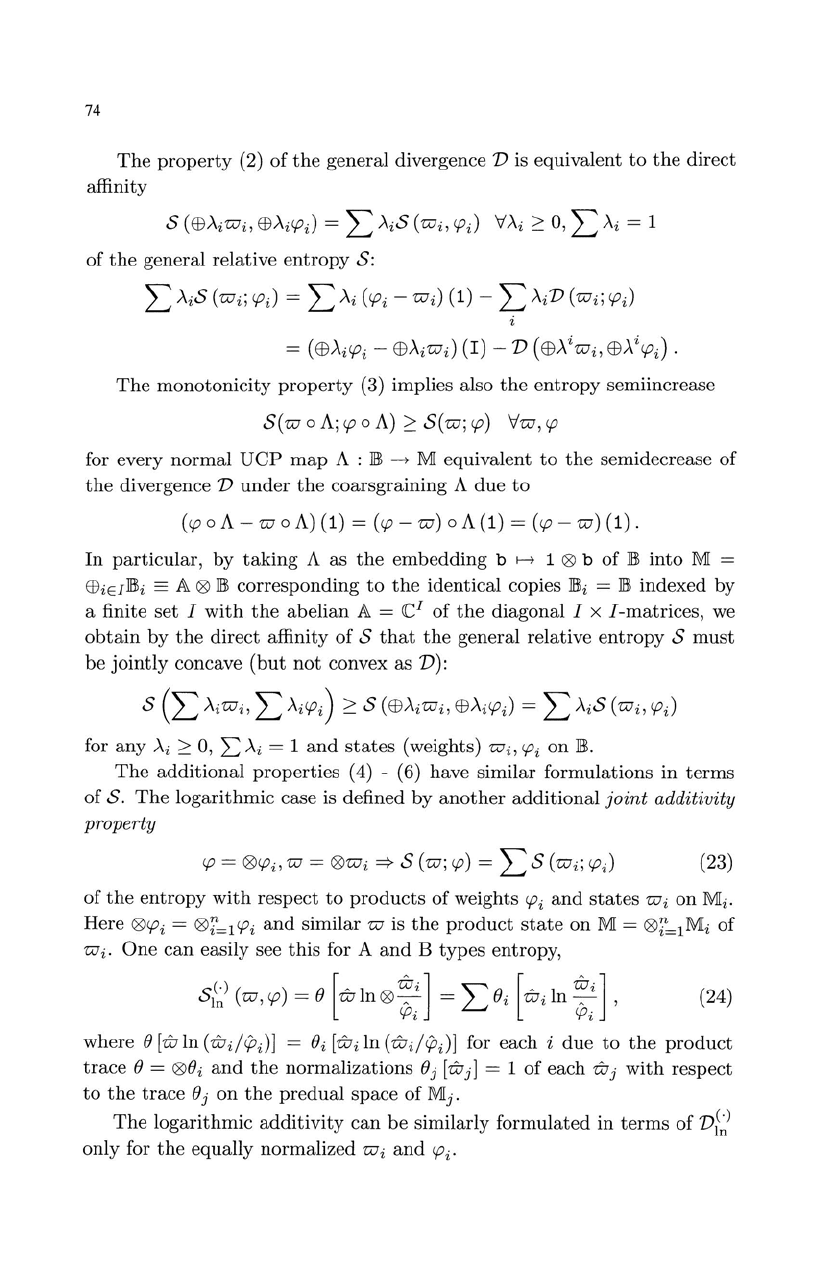

74

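The property (2) of the general divergence $V$ is equivalent to the direct affinity

$$S\big(\oplus\lambda_i\omega_i,\oplus\lambda_i\varphi_i\big)=\sum\lambda_iS(\omega_i,\varphi_i) \qquad \forall\lambda_i\ge0,\ \sum\lambda_i=1$$

of the general relative entropy $S$:

$$\sum\lambda_iS(\omega_i;\varphi_i) = \sum\lambda_i(\varphi_i-\omega_i)(1)-\sum\lambda_iV(\omega_i;\varphi_i) = \big(\oplus\lambda_i\varphi_i-\oplus\lambda_i\omega_i\big)(1)-V\big(\oplus\lambda_i\omega_i,\oplus\lambda_i\varphi_i\big).$$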

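The monotonicity property (3) also implies the entropy semi-increase

$$S(\omega\circ\Lambda;\varphi\circ\Lambda)\ge S(\omega;\varphi) \qquad \forall\omega,\varphi$$

for every normal UCP map $\Lambda:\mathbb E\to M$. This is equivalent to the semi-decrease of the divergence $V$ under the coarse-graining $\Lambda$, because $(\varphi\circ\Lambda-\omega\circ\Lambda)(1)=(\varphi-\omega)\circ\Lambda(1)=(\varphi-\omega)(1)$.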

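In particular, taking $\Lambda$ as the embedding $b\mapsto1\otimes b$ of $\mathbb E$ into $M=\oplus_{i\in I}\mathbb E_i\equiv A\otimes\mathbb E$, corresponding to identical copies $\mathbb E_i=\mathbb E$ indexed by a finite set $I$ with the abelian algebra $A=\mathbb C^I$ of diagonal $I\times I$ matrices, we obtain from the direct affinity of $S$ that the general relative entropy $S$ must be jointly concave (whereas $V$ is jointly convex):

$$S\Big(\sum\lambda_i\omega_i,\sum\lambda_i\varphi_i\Big)\ge S\big(\oplus\lambda_i\omega_i,\oplus\lambda_i\varphi_i\big)=\sum\lambda_iS(\omega_i,\varphi_i)$$

for any $\lambda_i\ge0$, $\sum\lambda_i=1$ and states (weights) $\omega_i,\varphi_i$ on $\mathbb E$.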
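A commutative check of the two facts just used, direct affinity and joint concavity of $S$, with $l=\ln$ and $r=1$, so that $S(\omega;\varphi)=\sum_i\omega_i\ln(\varphi_i/\omega_i)$. The block-diagonal direct sum and the convex mixture are represented by concatenating and by summing weighted lists; the names are illustrative.

```python
import math
import random


def s_ln(omega, phi):
    # commutative relative entropy S = (phi - omega)(1) - V = omega(ln(phi/omega)), r = 1
    return sum(w * math.log(p / w) for w, p in zip(omega, phi))


def mix(states, lams):
    # convex combination sum_i lam_i * state_i
    return [sum(l * s[k] for l, s in zip(lams, states)) for k in range(len(states[0]))]


def direct_sum(states, lams):
    # oplus_i lam_i * state_i on the block-diagonal algebra A (x) E with A = C^I
    return [l * x for l, s in zip(lams, states) for x in s]


random.seed(3)
oms = [[random.uniform(0.05, 1.0) for _ in range(5)] for _ in range(3)]
phs = [[random.uniform(0.05, 1.0) for _ in range(5)] for _ in range(3)]
lams = [0.2, 0.5, 0.3]
affine = sum(l * s_ln(o, p) for l, o, p in zip(lams, oms, phs))
print(s_ln(direct_sum(oms, lams), direct_sum(phs, lams)), affine)  # direct affinity
print(s_ln(mix(oms, lams), mix(phs, lams)) >= affine)             # joint concavity
```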

The additional properties (4)-(6) have similar formulations in terms of $S$.

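The logarithmic case is singled out by an additional joint additivity property

$$\varphi=\otimes\varphi_i,\ \omega=\otimes\omega_i\ \Longrightarrow\ S(\omega;\varphi)=\sum S(\omega_i;\varphi_i) \tag{23}$$

of the entropy with respect to products of weights $\varphi_i$ and states $\omega_i$ on $M_i$. Here $\otimes\varphi_i=\otimes_{i=1}^n\varphi_i$ and, similarly, $\omega$ is the product state of the $\omega_i$ on $M=\otimes_{i=1}^nM_i$. One can easily see this for the A- and B-type entropies,

$$S(\omega;\varphi) = -\tau\big[\omega\ln(\omega/\varphi)\big] = -\sum_i\tau\big[\omega\ln(\omega_i/\varphi_i)\big] = \sum_iS(\omega_i;\varphi_i), \tag{24}$$

where $\tau[\omega\ln(\omega_i/\varphi_i)]=\tau_i[\omega_i\ln(\omega_i/\varphi_i)]$ for each $i$, due to the product trace $\tau=\otimes\tau_i$ and the normalizations $\tau_j[\omega_j]=1$ of each $\omega_j$ with respect to the trace $\tau_j$ on the predual space of $M_j$. The logarithmic additivity can be similarly formulated in terms of $V_{\ln}$ only for equally normalized $\omega_i$ and $\varphi_i$.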
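The additivity (23) for the A- and B-type logarithmic entropies can be tested with Kronecker products. In this NumPy sketch the states $\omega_i$ have unit trace while the weights $\varphi_i$ are deliberately left unnormalized; the helper names are our own and the data are random.

```python
import numpy as np


def herm_fn(a, f):
    """Apply a scalar function f to a Hermitian matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh((a + a.conj().T) / 2)
    return (vecs * f(vals)) @ vecs.conj().T


def random_density(d, rng):
    """Random full-rank density matrix with unit trace (illustrative data)."""
    g = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
    rho = g @ g.conj().T + 0.1 * np.eye(d)
    return rho / np.trace(rho).real


def entropy_A_ln(omega, phi):
    # A-type (19): omega[ln phi - ln omega]
    return float(np.trace(omega @ (herm_fn(phi, np.log) - herm_fn(omega, np.log))).real)


def entropy_B_ln(omega, phi):
    # B-type (20) with h(p) = -p ln p and omega_phi = phi^{-1/2} omega phi^{-1/2}
    inv_sqrt, sqrt_phi = herm_fn(phi, lambda x: x ** -0.5), herm_fn(phi, np.sqrt)
    rn = inv_sqrt @ omega @ inv_sqrt
    return float(np.trace(sqrt_phi @ herm_fn(rn, lambda x: -x * np.log(x)) @ sqrt_phi).real)


rng = np.random.default_rng(11)
w1, w2 = random_density(2, rng), random_density(3, rng)
p1, p2 = 1.7 * random_density(2, rng), 0.4 * random_density(3, rng)  # unnormalized weights
for entropy in (entropy_A_ln, entropy_B_ln):
    joint = entropy(np.kron(w1, w2), np.kron(p1, p2))
    print(entropy.__name__, joint, entropy(w1, p1) + entropy(w2, p2))
```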

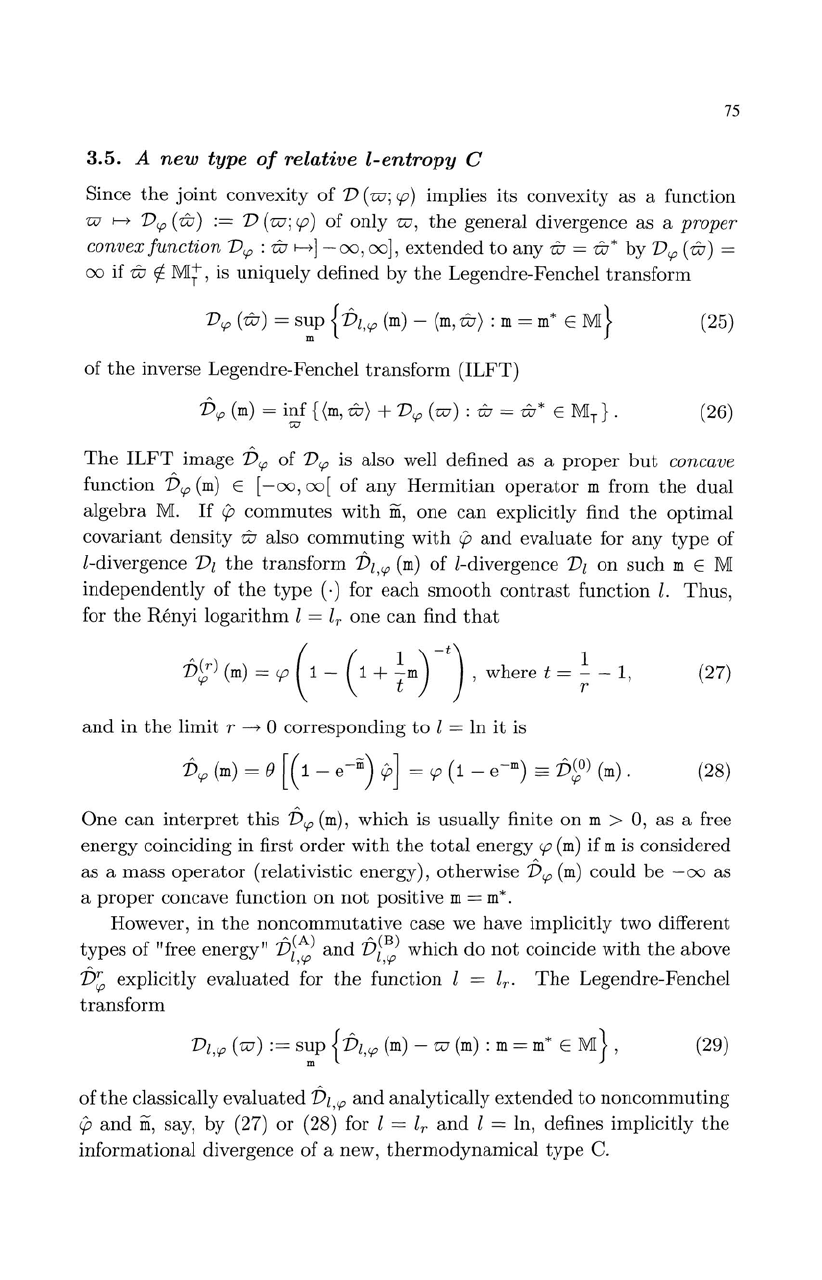

75

3.5. A new type of relative $l$-entropy C

Since the joint convexity of $V(\omega;\varphi)$ implies its convexity as a function $\omega\mapsto V_\varphi(\omega):=V(\omega;\varphi)$ of $\omega$ alone, the general divergence, as a proper convex function $V_\varphi(\omega)\in\,]-\infty,\infty]$ extended to any Hermitian $\omega=\omega^*$ by $V_\varphi(\omega)=\infty$ if $\omega\notin\mathfrak M$, is uniquely defined by the Legendre-Fenchel transform

$$V_\varphi(\omega) = \sup_m\big\{\hat V_\varphi(m)-(m,\omega):\ m=m^*\in M\big\} \tag{25}$$

of its inverse Legendre-Fenchel transform (ILFT)

$$\hat V_\varphi(m) = \inf_\omega\big\{(m,\omega)+V_\varphi(\omega):\ \omega=\omega^*\in M_*\big\}. \tag{26}$$

The ILFT image $\hat V_\varphi$ of $V_\varphi$ is also well defined, as a proper but concave function $\hat V_\varphi(m)\in[-\infty,\infty[$ of any Hermitian operator $m$ from the dual algebra $M$.

If $\varphi$ commutes with $m$, one can explicitly find the optimal covariant density $\omega$, also commuting with $\varphi$, and evaluate the transform $\hat V_{l,\varphi}(m)$ of the $l$-divergence $V_l$ on such $m\in M$ for each smooth contrast function $l$, independently of the type $(\cdot)$ of $V_l$. Thus, for the Rényi logarithm $l=l_r$ one finds

$$\hat V^{(r)}_\varphi(m) = \varphi\big(1-(1+m/t)^{-t}\big), \qquad t=\frac1r-1, \tag{27}$$

and in the limit $r\to0$, corresponding to $l=\ln$, it is

$$\hat V^{(0)}_\varphi(m) = \varphi\big(1-e^{-m}\big). \tag{28}$$

One can interpret this $\hat V_\varphi(m)$, which is usually finite for $m>0$, as a free energy coinciding to first order with the total energy $\varphi(m)$ if $m$ is considered as a mass operator (relativistic energy); otherwise $\hat V_\varphi(m)$ can be $-\infty$, as a proper concave function, on non-positive $m=m^*$.
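The closed forms (27) and (28) can be compared with a direct numerical minimization of (26) in the scalar commutative case, where $\varphi$, $m$ and $\omega$ are single eigenvalues and $V$ is given by (14). The sketch assumes $m\ge0$, so that the optimal $\omega=\varphi(1+m/t)^{-1/r}$ lies in the search interval $(0,10\varphi]$; the ternary search is a convenience of this illustration, not a method used in the text.

```python
import math


def renyi_log(q, r):
    # l_r(q) = (q**r - 1)/r, with l_0 = ln
    return math.log(q) if r == 0 else (q ** r - 1.0) / r


def divergence_scalar(omega, phi, r):
    # commutative l_r-divergence (14) for a single pair of eigenvalues
    return phi - omega - omega * renyi_log(phi / omega, r)


def ilft_numeric(m, phi, r, iters=300):
    # inf over omega > 0 of m*omega + V(omega; phi); the objective is convex in omega,
    # so a ternary search on a bracketing interval suffices for this illustration
    lo, hi = 1e-12, 10.0 * phi
    f = lambda w: m * w + divergence_scalar(w, phi, r)
    for _ in range(iters):
        a, b = lo + (hi - lo) / 3, hi - (hi - lo) / 3
        if f(a) < f(b):
            hi = b
        else:
            lo = a
    return f((lo + hi) / 2)


def ilft_closed(m, phi, r):
    # (27) with t = 1/r - 1, and its r -> 0 limit (28)
    if r == 0:
        return phi * (1.0 - math.exp(-m))
    t = 1.0 / r - 1.0
    return phi * (1.0 - (1.0 + m / t) ** (-t))


for r in (0.0, 0.3, 0.7):
    print(r, ilft_numeric(1.0, 2.0, r), ilft_closed(1.0, 2.0, r))
```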

However, in the noncommutative case we implicitly have two different types of "free energy", $\hat V^{(A)}_{l,\varphi}$ and $\hat V^{(B)}_{l,\varphi}$, which do not coincide with the above $\hat V_\varphi$ explicitly evaluated for the function $l=l_r$.

The Legendre-Fenchel transform

$$V^{(C)}_{l,\varphi}(\omega) := \sup_m\big\{\hat V_{l,\varphi}(m)-\omega(m):\ m=m^*\in M\big\} \tag{29}$$

of the classically evaluated $\hat V_{l,\varphi}$, analytically extended to noncommuting $\varphi$ and $m$, say, by (27) or (28) for $l=l_r$ and $l=\ln$, implicitly defines the information divergence of a new, thermodynamical type C.

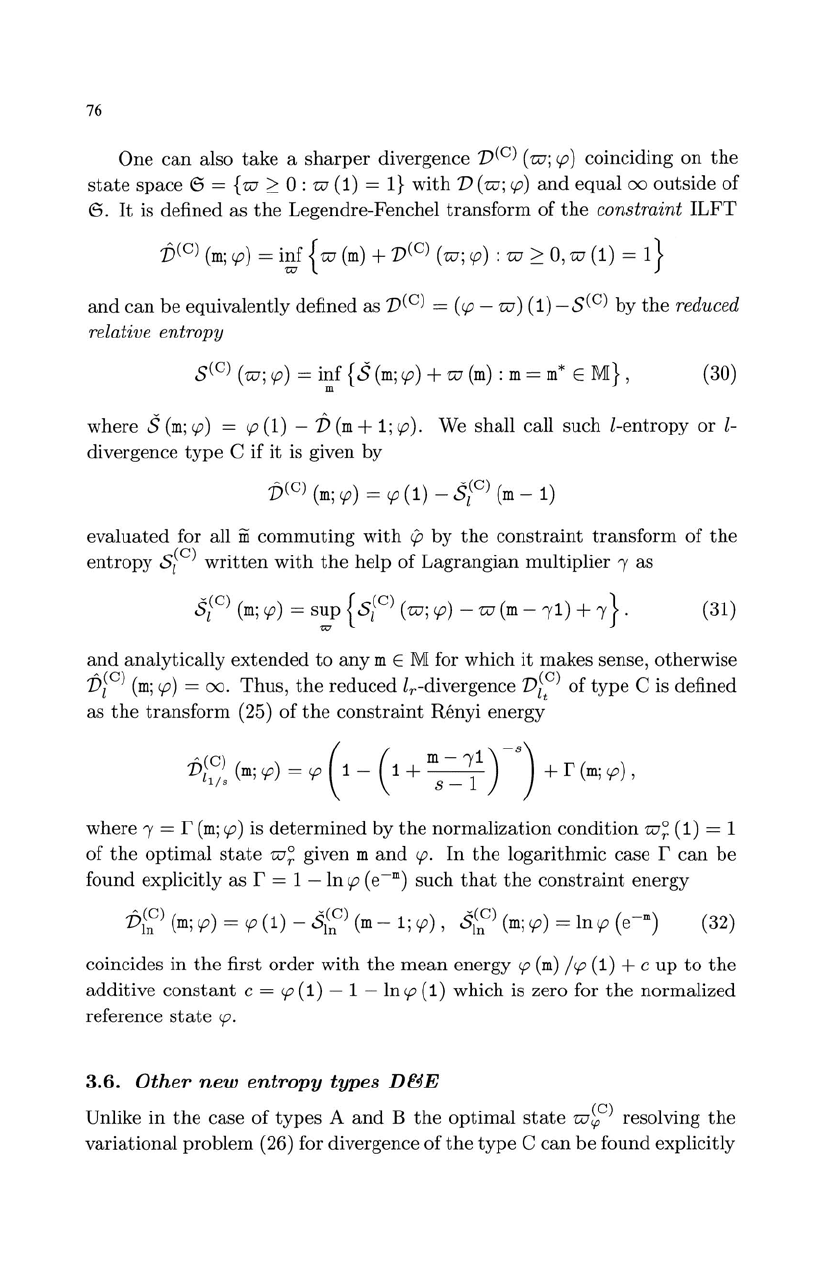

76

One can also take a sharper divergence $V^{(C)}(\omega;\varphi)$ that coincides with $V(\omega;\varphi)$ on the state space $\mathfrak S=\{\omega\ge0:\ \omega(1)=1\}$ and equals $\infty$ outside of $\mathfrak S$. It is defined as the Legendre-Fenchel transform of the constraint ILFT

$$\hat V^{(C)}(m;\varphi) = \inf_\omega\big\{\omega(m)+V^{(C)}(\omega;\varphi):\ \omega\ge0,\ \omega(1)=1\big\}$$

and can be equivalently defined as $V^{(C)}=(\varphi-\omega)(1)-S^{(C)}$ by the reduced relative entropy

$$S^{(C)}(\omega;\varphi) = \inf_m\big\{\hat S(m;\varphi)+\omega(m):\ m=m^*\in M\big\}, \tag{30}$$

where $\hat S(m;\varphi)=\varphi(1)-\hat V(m+1;\varphi)$. We shall call such an $l$-entropy or $l$-divergence of type C if it is given by $\hat V^{(C)}_l(m;\varphi)=\varphi(1)-\hat S^{(C)}_l(m-1;\varphi)$, evaluated for all $m$ commuting with $\varphi$ by the constraint transform of the entropy $S^{(C)}_l$, written with the help of a Lagrange multiplier $\gamma$ as

$$\hat S^{(C)}_l(m;\varphi) = \sup_\omega\big\{S^{(C)}_l(\omega;\varphi)-\omega(m-\gamma1)-\gamma\big\}, \tag{31}$$

and analytically extended to any $m\in M$ for which it makes sense; otherwise $\hat V^{(C)}_l(m;\varphi)=-\infty$.

Thus the reduced $l_r$-divergence $V^{(C)}_{l_r}$ of type C is defined as the transform (25) of the constraint Rényi energy

$$\hat V^{(C)}_{l_r}(m;\varphi) = \varphi\Big(1-\Big(1+\frac{m-\gamma+1}{t}\Big)^{-t}\Big)+\gamma-1,$$

where $\gamma=\gamma(m;\varphi)$ is determined by the normalization condition $\omega_\gamma(1)=1$ of the optimal state $\omega_\gamma$ given $m$ and $\varphi$.

In the logarithmic case $\gamma$ can be found explicitly as $\gamma=1-\ln\varphi(e^{-m})$, such that the constraint energy

$$\hat V^{(C)}_{\ln}(m;\varphi) = \varphi(1)-\hat S^{(C)}_{\ln}(m-1;\varphi), \qquad \hat S^{(C)}_{\ln}(m;\varphi)=\ln\varphi\big(e^{-m}\big), \tag{32}$$

coincides to first order with the mean energy $\varphi(m)/\varphi(1)+c$, up to the additive constant $c=\varphi(1)-1-\ln\varphi(1)$, which is zero for a normalized reference state $\varphi$.
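In the commutative case, (32) is the familiar Gibbs variational principle. The pure-Python sketch below (lists of eigenvalues in a common eigenbasis, hypothetical names) checks that the normalized state $\varphi e^{-m}/\varphi(e^{-m})$, that is $e^{\gamma-1}\varphi e^{-m}$ with $\gamma=1-\ln\varphi(e^{-m})$, attains the value in (32), that random normalized states do not go below it, and the first-order agreement with $\varphi(m)$ for a normalized $\varphi$.

```python
import math
import random


def v_ln(omega, phi):
    # commutative logarithmic divergence: (phi - omega)(1) + omega(ln(omega/phi))
    return sum(p - w + w * math.log(w / p) for w, p in zip(omega, phi))


def objective(omega, m, phi):
    # omega(m) + V_ln(omega; phi), to be minimized over normalized states omega
    return sum(w * x for w, x in zip(omega, m)) + v_ln(omega, phi)


def partition(m, phi):
    # phi(e^{-m}) for commuting phi and m
    return sum(p * math.exp(-x) for p, x in zip(phi, m))


def constraint_energy(m, phi):
    # (32): phi(1) - 1 - ln phi(e^{-m})
    return sum(phi) - 1.0 - math.log(partition(m, phi))


def optimal_state(m, phi):
    # e^{gamma - 1} phi e^{-m} with gamma = 1 - ln phi(e^{-m})
    z = partition(m, phi)
    return [p * math.exp(-x) / z for p, x in zip(phi, m)]


random.seed(5)
phi = [random.uniform(0.1, 1.0) for _ in range(4)]  # an unnormalized weight
m = [random.uniform(-1.0, 2.0) for _ in range(4)]
best = objective(optimal_state(m, phi), m, phi)
print(best, constraint_energy(m, phi))
```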

3.6. Other new entropy types D&E

Unlike the case of types A and B, the optimal state $\omega^{(C)}$ resolving the variational problem (26) for the divergence of type C can be found explicitly even for noncommuting $\varphi$ and $m$, at least in the logarithmic case $l=\ln$. Indeed, it is given as

$$\omega^{(C)}_{\mathrm{free}} = \int_0^1 e^{(s-1)m}\,\varphi\,e^{-sm}\,\mathrm ds, \qquad \omega^{(C)}_{\mathrm{nor}} = e^{\gamma-1}\int_0^1 e^{(s-1)m}\,\varphi\,e^{-sm}\,\mathrm ds,$$

by solving the dual problem (25) for the free (28) or the constraint (32) logarithmic energy, respectively, using the ordering index method for the noncommuting variation $\delta m$ of $m$.
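The free state $\omega^{(C)}_{\mathrm{free}}$ is the gradient of the free energy (28), $\hat V(m)=\tau[\varphi(1-e^{-m})]$, with respect to $m$, as Duhamel's formula for the derivative of $e^{-m}$ shows. The NumPy sketch below approximates the $s$-integral by Gauss-Legendre quadrature (our choice), and checks the trace identity $\tau[\omega^{(C)}_{\mathrm{free}}]=\varphi(e^{-m})$ and a finite-difference directional derivative for a randomly chosen noncommuting pair $\varphi$, $m$; helper names are hypothetical.

```python
import numpy as np


def herm_fn(a, f):
    """Apply a scalar function f to a Hermitian matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh((a + a.conj().T) / 2)
    return (vecs * f(vals)) @ vecs.conj().T


def free_energy(m, phi):
    # (28) extended to noncommuting phi and m: tau[phi (1 - e^{-m})]
    d = m.shape[0]
    return float(np.trace(phi @ (np.eye(d) - herm_fn(m, lambda x: np.exp(-x)))).real)


def omega_free_C(m, phi, nodes=40):
    # int_0^1 e^{(s-1)m} phi e^{-sm} ds, Gauss-Legendre nodes mapped from [-1, 1] to [0, 1]
    x, wts = np.polynomial.legendre.leggauss(nodes)
    vals, vecs = np.linalg.eigh(m)
    out = np.zeros(phi.shape, dtype=complex)
    for xk, wk in zip(x, wts):
        s = (xk + 1.0) / 2.0
        left = (vecs * np.exp((s - 1.0) * vals)) @ vecs.conj().T
        right = (vecs * np.exp(-s * vals)) @ vecs.conj().T
        out += 0.5 * wk * (left @ phi @ right)
    return out


rng = np.random.default_rng(3)
g = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
m = (g + g.conj().T) / 2                # Hermitian "energy" operator
phi = g @ g.conj().T + 0.2 * np.eye(3)  # positive weight, generically not commuting with m
omega = omega_free_C(m, phi)
z = np.trace(phi @ herm_fn(m, lambda x: np.exp(-x))).real  # phi(e^{-m})
print(np.trace(omega).real, z)
```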

This implicitly defines the entropic function $h^{(C)}_{\ln}(\omega:\varphi)$ of the C-type relative density of the state $\omega$ with respect to $\varphi$, presenting the solution

$$S^{(C)}_{\ln}(\omega;\varphi) = \tau\big(\varphi^{1/2}h^{(C)}_{\ln}(\omega:\varphi)\,\varphi^{1/2}\big) = \varphi\big(h^{(C)}_{\ln}(\omega:\varphi)\big) \tag{33}$$

of the variational problem (30), which coincides on $\mathfrak S$ with the unreduced logarithmic relative entropy $S_\varphi(\omega)$. It must satisfy the stationarity differential equation $\varphi^{1/2}h'^{(C)}_{\ln}(\omega:\varphi)\,\varphi^{1/2}=m-\gamma1$ in terms of the variational derivative $h'^{(C)}_{\ln}(\omega:\varphi)=\delta h^{(C)}_{\ln}(\omega:\varphi)/\delta\omega$, resolving (31) for (33).

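Similarly, we can define two other interesting logarithmic relative entropies, of types D and E, in the form

$$S^{(\cdot)}_{\ln}(\omega;\varphi) = \tau\big(\varphi^{1/2}h^{(\cdot)}_{\ln}(\omega:\varphi)\,\varphi^{1/2}\big) = \varphi\big(h^{(\cdot)}_{\ln}(\omega:\varphi)\big).$$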

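They are determined, respectively, by the logarithmic entropic functions $h^{(D)}_{\ln}(\omega:\varphi)$ and $h^{(E)}_{\ln}(\omega:\varphi)$ as the solutions of the stationarity differential equations $\varphi^{1/2}h'^{(\cdot)}_{\ln}(\omega:\varphi)\,\varphi^{1/2}=m-\gamma1$, defined by the variational derivatives $h'^{(\cdot)}_{\ln}(\omega:\varphi)=\delta h^{(\cdot)}_{\ln}(\omega:\varphi)/\delta\omega$ in terms of the RN derivative $\omega^{(D)}_\varphi=\omega_\varphi$ (D-type) and of the exponential relative density $\omega^{(E)}_\varphi=\exp[\ln\omega-\ln\varphi]$ (E-type):

$$\varphi^{1/2}h'^{(D)}_{\ln}(\omega:\varphi)\,\varphi^{1/2} = h'\big(\omega^{(D)}_\varphi\big), \qquad \omega^{(D)}_\varphi=\varphi^{-1/2}\omega\varphi^{-1/2},$$

$$\varphi^{1/2}h'^{(E)}_{\ln}(\omega:\varphi)\,\varphi^{1/2} = h'\big(\omega^{(E)}_\varphi\big) = h'\big(\exp[\ln\omega-\ln\varphi]\big).$$

Here $h'(p)=-\ln p-1$ is the derivative of the logarithmic entropic function $h(p)=-p\ln p$.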

Unfortunately, the free (28) or constraint (32) logarithmic energy of types D and E is in general difficult to evaluate explicitly if $\varphi$ does not commute with $m$. However, the optimal states resolving the corresponding minimization problems (26) can easily be found from the stationarity differential equation, with $\gamma=1$ for the free case and $\gamma$ fixed by the normalization $\omega(1)=1$ for the normalized case:

$$\omega^{(E)}_{\mathrm{free}} = e^{\ln\varphi-m}, \qquad \omega^{(E)}_{\mathrm{nor}} = e^{\gamma-1}e^{\ln\varphi-m}.$$
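For type E the stationarity equation can be checked directly. In this NumPy sketch (hypothetical names, random full-rank $\varphi$ and Hermitian $m$) the free and normalized E-type states are built as above, $h'(\exp[\ln\omega-\ln\varphi])=m-\gamma1$ is verified with $\gamma=1$ and with $\gamma=1-\ln\tau[e^{\ln\varphi-m}]$ respectively, and the normalizing constants of types E and C are compared through the Golden-Thompson inequality $\tau[e^{\ln\varphi-m}]\le\tau[\varphi e^{-m}]$.

```python
import numpy as np


def herm_fn(a, f):
    """Apply a scalar function f to a Hermitian matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh((a + a.conj().T) / 2)
    return (vecs * f(vals)) @ vecs.conj().T


def omega_E(m, phi, normalized=False):
    # free E-type state exp(ln phi - m); the normalized one is rescaled by e^{gamma - 1}
    # so that its trace equals 1
    w = herm_fn(herm_fn(phi, np.log) - m, np.exp)
    return w / np.trace(w).real if normalized else w


def h_prime_E(omega, phi):
    # h'(exp[ln omega - ln phi]) with h'(p) = -ln p - 1, i.e. -(ln omega - ln phi) - 1
    eye = np.eye(phi.shape[0])
    return -(herm_fn(omega, np.log) - herm_fn(phi, np.log)) - eye


rng = np.random.default_rng(4)
g = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
m = (g + g.conj().T) / 4
phi = g @ g.conj().T + 0.2 * np.eye(3)
eye = np.eye(3)
z_e = np.trace(omega_E(m, phi)).real                         # tau[e^{ln phi - m}]
z_c = np.trace(phi @ herm_fn(m, lambda x: np.exp(-x))).real  # phi(e^{-m})
gamma = 1.0 - np.log(z_e)
print(np.allclose(h_prime_E(omega_E(m, phi), phi), m - eye))                # gamma = 1
print(np.allclose(h_prime_E(omega_E(m, phi, True), phi), m - gamma * eye))  # normalized
print(z_e <= z_c)  # Golden-Thompson inequality
```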

4. Quantum Mutual Information and Encodings

4.1. Entangled mutual information

Here we define the symmetric mutual information in a quantum compound state $\omega$ achieved by an entanglement $\pi:\mathbb B\to A_*$, or, equivalently, by $\pi^{\mathrm T}:A\to\mathbb B_*$, as the general information divergence of the entangled state $\omega$ with respect to the product state $\varphi=\varrho\otimes\varsigma$ on $M=A\otimes\mathbb B$:

$$I_{A,\mathbb B}(\pi) = V(\omega;\varrho\otimes\varsigma). \tag{34}$$

In particular, $I^{\ln}_{A,\mathbb B}(\pi)=V_{\ln}(\omega;\varrho\otimes\varsigma)$ is the usual logarithmic quantum mutual information of a particular type.

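Theorem 4.1. Let $\Lambda:\mathbb B\to A^\circ_*$ be an entanglement of the state $\varsigma(b)=(1_{\mathcal G^\circ},\Lambda(b))$ on $\mathbb B$ to $(A^\circ,\varrho^\circ)$, with $A^\circ\subseteq\mathcal B(\mathcal G^\circ)$, as a CP map such that $\varrho^\circ=\Lambda(1_{\mathbb B})$, and let $\pi=K^{\mathrm T}\circ\Lambda$ be the entanglement to the state $\varrho=\varrho^\circ\circ K$ on $A\subseteq\mathcal B(\mathcal G)$, defined as the composition of $\Lambda$ with a channel $K^{\mathrm T}:A^\circ_*\to A_*$, the predual of a normal UCP map $K:A\to A^\circ$. Then the following monotonicity holds:

$$I^{(\cdot)}_{A,\mathbb B}(\pi)\le I^{(\cdot)}_{A^\circ,\mathbb B}(\Lambda). \tag{35}$$

Proof: This follows simply from the monotonicity of $V$.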

It is interesting to compute and compare the different types of entangled mutual information $V_l(\omega;\varrho\otimes\varsigma)$ for particular choices of the contrast function $l$. In particular, one may compute the Rényi and the usual logarithmic information $V_{\ln}(\omega;\varrho\otimes\varsigma)$ for special, say quantum Gaussian, entangled states $\omega$, and compare them with the well-known Gaussian entangled information of type A.

4.2. The proper quantum entropies

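Applying the monotonicity property to the standard entanglement $\Lambda=\pi_q\equiv\sigma$ of $\varsigma$ to $\varrho^\circ=\tilde\varsigma$, and decomposing every $\pi^{\mathrm T}$ by Theorem 4.1 as $\pi^{\mathrm T}=\tilde\sigma\circ K$, we immediately obtain the explicit solution $A=\tilde{\mathbb B}$, $\pi^{\mathrm T}=\tilde\sigma:=\pi_q^{\mathrm T}$ to the optimization problem

$$\sup\big\{I_{A,\mathbb B}(\pi):\ \pi^{\mathrm T}\in\mathcal K_q(\varsigma)\big\} = I_{\tilde{\mathbb B},\mathbb B}(\tilde\sigma)\equiv\mathcal H_{\mathbb B}(\varsigma). \tag{36}$$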

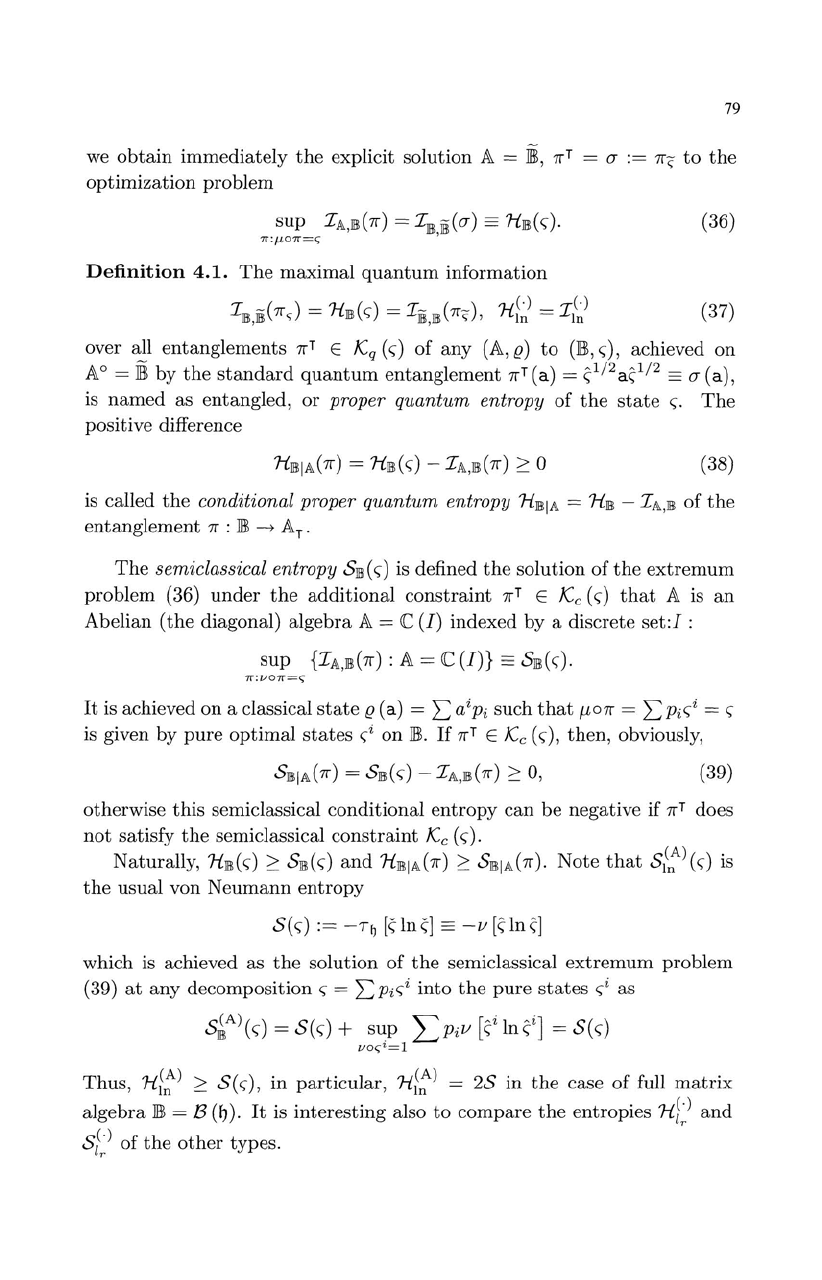

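Definition 4.1. The maximal quantum information

$$\mathcal H^{(\cdot)}_{\mathbb B}(\varsigma) = \sup_{\pi^{\mathrm T}\in\mathcal K_q(\varsigma)}I^{(\cdot)}_{A,\mathbb B}(\pi) = I^{(\cdot)}_{\tilde{\mathbb B},\mathbb B}\big(\pi_q^{\mathrm T}\big) \tag{37}$$

over all entanglements $\pi^{\mathrm T}\in\mathcal K_q(\varsigma)$ of any $(A,\varrho)$ to $(\mathbb B,\varsigma)$, achieved on $A^\circ=\tilde{\mathbb B}$ by the standard quantum entanglement $\pi_q^{\mathrm T}(a)=\varsigma^{1/2}a\,\varsigma^{1/2}\equiv\tilde\sigma(a)$, is called the entangled, or proper, quantum entropy of the state $\varsigma$.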

The positive difference

$$\mathcal H_{\mathbb B|A}(\pi) = \mathcal H_{\mathbb B}(\varsigma)-I_{A,\mathbb B}(\pi) \tag{38}$$

is called the conditional proper quantum entropy of the entanglement $\pi:\mathbb B\to A_*$.

The semiclassical entropy $S_{\mathbb B}(\varsigma)$ is defined as the solution of the extremum problem (36) under the additional constraint $\pi^{\mathrm T}\in\mathcal K_c(\varsigma)$ that $A$ is an Abelian (diagonal) algebra $A=\mathbb C(I)$ indexed by a discrete set $I$:

$$\sup\big\{I_{A,\mathbb B}(\pi):\ \pi^{\mathrm T}\in\mathcal K_q(\varsigma),\ A=\mathbb C(I)\big\}\equiv S_{\mathbb B}(\varsigma).$$

It is achieved on a classical state $\varrho(a)=\sum a_ip_i$ such that the decomposition $\varsigma=\sum p_i\varsigma_i$ is given by pure optimal states $\varsigma_i$ on $\mathbb B$.

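If $\pi^{\mathrm T}\in\mathcal K_c(\varsigma)$, then, obviously,

$$S_{\mathbb B|A}(\pi) = S_{\mathbb B}(\varsigma)-I_{A,\mathbb B}(\pi)\ge0; \tag{39}$$

otherwise this semiclassical conditional entropy can be negative, if $\pi^{\mathrm T}$ does not satisfy the semiclassical constraint $\mathcal K_c(\varsigma)$.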

Naturally, $\mathcal H_{\mathbb B}(\varsigma)\ge S_{\mathbb B}(\varsigma)$ and $\mathcal H_{\mathbb B|A}(\pi)\ge S_{\mathbb B|A}(\pi)$.

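Note that $S^{(A)}_{\ln}(\varsigma)$ is the usual von Neumann entropy, which is achieved as the solution of the semiclassical extremum problem defining $S_{\mathbb B}(\varsigma)$ at any decomposition $\varsigma=\sum p_i\varsigma_i$ into pure states $\varsigma_i$:

$$S^{(A)}_{\mathbb B}(\varsigma) = S(\varsigma)+\sup\sum_ip_i\,\tau[\varsigma_i\ln\varsigma_i] = S(\varsigma),$$

since $\tau[\varsigma_i\ln\varsigma_i]=0$ for pure $\varsigma_i$.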

Thus $\mathcal H^{(A)}_{\ln}(\varsigma)\ge S(\varsigma)$; in particular, $\mathcal H^{(A)}_{\ln}=2S$ in the case of the full matrix algebra $\mathbb B=\mathcal B(\mathcal H)$.
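The value $\mathcal H^{(A)}_{\ln}=2S$ can be checked on a pure compound state of $\mathbb C^d\otimes\mathbb C^d$, where the logarithmic A-type divergence from the product of the marginals is computed directly. This NumPy sketch assumes a randomly drawn pure state with full Schmidt rank and uses hypothetical helper names; it illustrates only this special case.

```python
import numpy as np


def herm_fn(a, f):
    """Apply a scalar function f to a Hermitian matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh((a + a.conj().T) / 2)
    return (vecs * f(vals)) @ vecs.conj().T


def von_neumann(rho):
    # S(rho) = -tau[rho ln rho] with the convention 0 ln 0 = 0
    vals = np.clip(np.linalg.eigvalsh(rho), 0.0, None)
    nz = vals[vals > 1e-15]
    return float(-np.sum(nz * np.log(nz)))


def mutual_information_ln(omega, d):
    # V_ln(omega; rho (x) sigma) = tau[omega ln omega] - tau[omega ln(rho (x) sigma)]
    # for a normalized state on C^d (x) C^d, since (phi - omega)(1) = 0 here
    w4 = omega.reshape(d, d, d, d)
    rho = np.einsum('ijkj->ik', w4)    # marginal on the first factor
    sigma = np.einsum('ijil->jl', w4)  # marginal on the second factor
    cross = np.trace(omega @ herm_fn(np.kron(rho, sigma), np.log)).real
    return -von_neumann(omega) - cross, sigma


rng = np.random.default_rng(2)
d = 3
psi = rng.normal(size=d * d) + 1j * rng.normal(size=d * d)
psi /= np.linalg.norm(psi)
omega = np.outer(psi, psi.conj())  # pure entangled state on C^3 (x) C^3
info, sigma = mutual_information_ln(omega, d)
print(info, 2 * von_neumann(sigma))
```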

It is also interesting to compare the entropies $\mathcal H^{(\cdot)}_l$ and $S^{(\cdot)}_l$ of the other types.