Nof S.Y. Springer Handbook of Automation


Collaborative Human–Automation Decision Making 26.4 Conclusion and Open Challenges 445

is (2, −1, 2) as neither the moderator nor decider roles changed from interface 2, although the generator's did. The three interfaces were evaluated with 20 US Navy personnel who would use such a tool in an operational setting. While the full experimental details can be found elsewhere [26.18], in terms of overall performance, operators performed best with interfaces 1 and 2, which were not statistically different from each other (p = 0.119). Interface 3, the one with predominantly automation-led collaboration, produced statistically worse performance compared with both interfaces 1 and 2 (p = 0.011 and 0.031, respectively). Table 26.4 summarizes the HACT categorization for the three interfaces, along with their relative performance rankings. The results indicate that, because the moderator and decider roles were held constant, the degraded performance of operators using interface 3 can be attributed to differences in the generator aspect of the decision-making process. Furthermore, the decline in performance occurred when the LOC was weighted towards the automation. When solution generation was either human-led or of equal contribution, operators performed no differently. However, when solution generation was automation-led, operators struggled.

While there are many other factors that likely affect these results (trust, visualization design, etc.), the HACT taxonomy is helpful in first deconstructing the automation components of the decision-making process. This allows for more specific analyses across different levels of collaboration between humans and automation, something that other LOA scales have not articulated. Moreover, as demonstrated in the previous example, when comparing systems, such a categorization will also pinpoint which LOCs are helpful or, at the very least, not detrimental. In addition, while not explicitly illustrated here, the HACT taxonomy can also provide designers with some guidance on system design, i.e., on how to improve the performance of a system; for example, in interface 3, it may be better to increase the moderator LOC instead of lowering the generator LOC.

Table 26.4 Interface performance and HACT three-tuples; M – moderator; G – generator; D – decider

| Interface | HACT three-tuple (M, G, D) | Performance |
|---|---|---|
| Interface 1 | (2, 1, 2) | Best |
| Interface 2 | (2, 0, 2) | Best |
| Interface 3 | (2, −1, 2) | Worst |
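The role-by-role reasoning applied to Table 26.4 can be made mechanical. The Python sketch below is only an illustration under stated assumptions: the names `HACT`, `lead`, and `changed_roles` are hypothetical; the five-level range −2 to 2 follows the taxonomy description; and reading positive LOCs as human-led and negative ones as automation-led is inferred from the examples in this chapter (e.g., a decider LOC of 2 meaning that only the human makes the final decision).

```python
from typing import Dict, NamedTuple, Tuple

LOC_LEVELS = range(-2, 3)  # five levels of collaboration, 0 = balanced


class HACT(NamedTuple):
    """A HACT three-tuple (moderator, generator, decider)."""
    moderator: int
    generator: int
    decider: int

    def validate(self) -> "HACT":
        """Raise ValueError if any role lies outside the five-level scale."""
        for role, loc in zip(self._fields, self):
            if loc not in LOC_LEVELS:
                raise ValueError(f"{role} LOC {loc} is outside -2..2")
        return self


def lead(loc: int) -> str:
    """Assumed sign convention: positive = human side, negative = automation side."""
    if loc > 0:
        return "human-led"
    if loc < 0:
        return "automation-led"
    return "balanced"


def changed_roles(a: HACT, b: HACT) -> Dict[str, Tuple[int, int]]:
    """Map each role whose LOC differs between a and b to (LOC in a, LOC in b)."""
    return {role: (x, y) for role, x, y in zip(HACT._fields, a, b) if x != y}


# Three-tuples (M, G, D) from Table 26.4
interfaces = {
    1: HACT(2, 1, 2).validate(),
    2: HACT(2, 0, 2).validate(),
    3: HACT(2, -1, 2).validate(),
}

diff = changed_roles(interfaces[2], interfaces[3])
print(diff)                                                   # {'generator': (0, -1)}
print({role: lead(new) for role, (_, new) in diff.items()})   # {'generator': 'automation-led'}
```

Comparing interface 2 with interface 3 isolates the generator role as the only change, and that change moves it to the automation side, which mirrors the argument used to explain the performance drop.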

In summary, the application of HACT is meant to elucidate human–computer collaboration in terms of an information-processing theoretic framework. By deconstructing either a single decision support system or competing ones with the HACT framework, a designer can better understand how humans and computers collaborate across different dimensions, in order to identify possible problem areas in need of redesign. For example, in the case of the Patriot missile system, with its (−2, −2, 1) three-tuple and demonstrated poor performance, designers could change the decider role to 2 (only the human makes the final decision; the automation cannot veto), as well as move towards a more truly collaborative solution-generation LOC. Because missile intercept is a time-pressured task, it is important that the automation moderate the task, but because the automation cannot always make correct recommendations, more collaboration is needed in the solution-generation role, with no automation authority in the decider role. Used in this manner, HACT aids designers in understanding the multiagent roles in human–computer collaboration tasks, as well as in identifying areas for possible improvement across these roles.

26.4 Conclusion and Open Challenges

The human–automation collaboration taxonomy (HACT) presented here builds on previous research by expanding the information processing model of Parasuraman et al. [26.1], specifically its decision-making component. Instead of defining a simple level of automation for decision making, we deconstruct the process into three distinct roles: the moderator (the agent that ensures the decision-making process moves forward), the generator (the agent that is primarily responsible for generating a solution or set of possible solutions), and the decider (the agent that decides the final solution and holds veto authority). These three distinct (but not necessarily mutually exclusive) roles can each be scaled across five levels indicating degrees of collaboration, with the center value of 0 in each scale representing balanced collaboration. These levels of collaboration (LOCs) form a three-tuple that can be analyzed to evaluate system collaboration and possibly to identify areas for design intervention.


446 Part C Automation Design: Theory, Elements, and Methods

As with all such levels, scales, and taxonomies, there are limitations. First, HACT as outlined here does not address all aspects of collaboration that could be considered when evaluating the collaborative nature of a system, such as the type of communication and its possible latencies, whether or not the LOCs should be dynamic, the transparency of the automation, the type of information used (i.e., low-level detail as opposed to higher, more abstract concepts), and finally how adaptable the system is across all of these attributes. While these issues have been discussed in earlier work [26.7], more work is needed to incorporate them into a comprehensive yet useful application.

In addition, HACT is descriptive rather than prescriptive, which means that it can describe a system and identify post hoc where designs may be problematic, but cannot indicate how the system should be designed to achieve some predicted outcome. To this end, more research is needed on the application of HACT and the interrelation of the entries within each three-tuple, as well as on more general relationships across three-tuples. Regarding the within-three-tuple issue, more research is needed to determine the impact and relative importance of each of the three roles; for example, if the moderator is at a high LOC but the generator is at a low LOC, are there generalizable principles that can be seen across different decision support systems? In terms of the between-three-tuple issue, more research is needed to determine under what conditions certain three-tuples produce consistently poor (or superior) performance, and whether these findings generalize within particular contexts; for example, in high-risk, time-critical supervisory control domains such as nuclear power plant operations, a three-tuple of (−2, −2, −2) may be necessary. However, even in this case, given flawed automated algorithms such as those seen in the Patriot missile, the question could be raised of whether it is ever feasible to design a safe (−2, −2, −2) system.

Despite these limitations, HACT provides more detailed information about the collaborative nature of systems than did previous level-of-automation scales. Given the increasing presence of intelligent automation both in complex supervisory control systems and in everyday life, such as global positioning system (GPS) navigation, this sort of taxonomy can support more in-depth analysis and provide a common point of comparison across competing systems. Other future areas of research that could prove useful would be determining how levels of collaboration apply in the other information processing stages (data acquisition and action implementation), and what the impact on human performance would be if different collaboration levels were mixed across the stages. Lastly, one often overlooked area that deserves much more attention is the ethical and social impact of human–computer collaboration. Higher levels of automation authority can reduce an operator's awareness of critical events [26.19] as well as reduce their sense of accountability [26.20]. Systems that promote collaboration with an automated agent could alleviate the offloading of attention and accountability to the automation, or collaboration may further distance operators from their tasks and actions and promote these biases. There has been very little research in this area, and given the vital nature of many time-critical systems that have some degree of human–computer collaboration (e.g., air-traffic control and military command and control), the social impact of such systems should not be overlooked.

References

26.1 R. Parasuraman, T.B. Sheridan, C.D. Wickens: A model for types and levels of human interaction with automation, IEEE Trans. Syst. Man Cybern. – Part A: Systems and Humans 30(3), 286–297 (2000)

26.2 P. Dillenbourg, M. Baker, A. Blaye, C. O'Malley: The evolution of research on collaborative learning. In: Learning in Humans and Machines: Towards an Interdisciplinary Learning Science, ed. by P. Reimann, H. Spada (Pergamon, London 1995) pp. 189–211

26.3 J. Roschelle, S. Teasley: The construction of shared knowledge in collaborative problem solving. In: Computer Supported Collaborative Learning, ed. by C. O'Malley (Springer, Berlin 1995) pp. 69–97

26.4 P.J. Smith, E. McCoy, C. Layton: Brittleness in the design of cooperative problem-solving systems: the effects on user performance, IEEE Trans. Syst. Man Cybern. 27(3), 360–370 (1997)

26.5 H.A. Simon, G.B. Dantzig, R. Hogarth, C.R. Plott, H. Raiffa, T.C. Schelling, R. Thaler, K.A. Shepsle, A. Tversky, S. Winter: Decision making and problem solving, Paper presented at the Research Briefings 1986: Report of the Research Briefing Panel on Decision Making and Problem Solving, Washington D.C. (1986)

26.6 P.M. Fitts (ed.): Human Engineering for an Effective Air Navigation and Traffic Control System (National Research Council, Washington D.C. 1951)

26.7 S. Bruni, J.J. Marquez, A. Brzezinski, C. Nehme, Y. Boussemart: Introducing a human–automation collaboration taxonomy (HACT) in command and control decision-support systems, Paper presented at the 12th Int. Command Control Res. Technol. Symp., Newport (2007)

26.8 M.P. Linegang, H.A. Stoner, M.J. Patterson, B.D. Seppelt, J.D. Hoffman, Z.B. Crittendon, J.D. Lee: Human–automation collaboration in dynamic mission planning: a challenge requiring an ecological approach, Paper presented at the Human Factors and Ergonomics Society 50th Ann. Meet., San Francisco (2006)

26.9 Y. Qinghai, Y. Juanqi, G. Feng: Human–computer collaboration control in the optimal decision of FMS scheduling, Paper presented at the IEEE Int. Conf. Ind. Technol. (ICIT '96), Shanghai (1996)

26.10 R.E. Valdés-Pérez: Principles of human–computer collaboration for knowledge discovery in science, Artif. Intell. 107(2), 335–346 (1999)

26.11 B.G. Silverman: Human–computer collaboration, Hum.–Comput. Interact. 7(2), 165–196 (1992)

26.12 L.G. Terveen: An overview of human–computer collaboration, Knowl.-Based Syst. 8(2–3), 67–81 (1995)

26.13 G. Johannsen: Mensch-Maschine-Systeme (Human–Machine Systems) (Springer, Berlin 1993), in German

26.14 J. Rasmussen: Skills, rules, and knowledge; signals, signs, and symbols, and other distinctions in human performance models, IEEE Trans. Syst. Man Cybern. 13(3), 257–266 (1983)

26.15 T.B. Sheridan, W. Verplank: Human and Computer Control of Undersea Teleoperators (MIT, Cambridge 1978)

26.16 M.R. Endsley, D.B. Kaber: Level of automation effects on performance, situation awareness and workload in a dynamic control task, Ergonomics 42(3), 462–492 (1999)

26.17 V. Riley: A general model of mixed-initiative human–machine systems, Paper presented at the Human Factors Society 33rd Ann. Meet., Denver (1989)

26.18 S. Bruni, M.L. Cummings: Tracking resource allocation cognitive strategies for strike planning, Paper presented at the COGIS 2006, Paris (2006)

26.19 K.L. Mosier, L.J. Skitka: Human decision makers and automated decision aids: made for each other? In: Automation and Human Performance: Theory and Applications, ed. by R. Parasuraman, M. Mouloua (Lawrence Erlbaum, Mahwah 1996) pp. 201–220

26.20 M.L. Cummings: Automation and accountability in decision support system interface design, J. Technol. Stud. 32(1), 23–31 (2006)



27. Teleoperation

Luis Basañez, Raúl Suárez

This chapter presents an overview of the teleoperation of robotic systems, starting with a historical background and including the description of an up-to-date teleoperation scheme as a representative example to illustrate the typical components and functional modules of these systems. Some specific topics in the field are discussed in particular, for instance, control algorithms, communication channels, the use of graphical simulation and task planning, the usefulness of virtual and augmented reality, and the problem of dexterous grasping. The second part of the chapter describes the most typical application fields, such as industry and construction, mining, underwater, space, surgery, assistance, humanitarian demining, and education, where some of the pioneering, significant, and latest contributions are briefly presented. Finally, some conclusions and the trends in the field close the chapter.

The topics of this chapter are closely related to the contents of other chapters such as those on Communication in Automation, Including Networking and Wireless (Chap. 13), Virtual Reality and Automation (Chap. 15), and Collaborative Human–Automation Decision Making (Chap. 26).

27.1 Historical Background and Motivation

27.2 General Scheme and Components

27.2.1 Operation Principle

27.3 Challenges and Solutions

27.3.1 Control Algorithms

27.3.2 Communication Channels

27.3.3 Sensory Interaction and Immersion

27.3.4 Teleoperation Aids

27.3.5 Dexterous Telemanipulation

27.4 Application Fields

27.4.1 Industry and Construction

27.4.2 Mining

27.4.3 Underwater

27.4.4 Space

27.4.5 Surgery

27.4.6 Assistance

27.4.7 Humanitarian Demining

27.4.8 Education

27.5 Conclusion and Trends

References

The term teleoperation is formed as a combination of the Greek word τηλε- (tele-, offsite or remote) and the Latin word operatĭo, -ōnis (operation, something done). So, teleoperation means performing some work or action from some distance away. Although in this sense teleoperation could be applied to any operation performed at a distance, the term is most commonly associated with robotics and mobile robots and indicates the driving of one of these machines from a place far from the machine's location.

There are many topics involved in a teleoperated robotic system, including human–machine interaction, distributed control laws, communications, graphic simulation, task planning, virtual and augmented reality, and dexterous grasping and manipulation. The fields of application of these systems are also very wide, and teleoperation offers great possibilities for profitable applications. All these topics and applications are dealt with in some detail in this chapter.


27.1 Historical Background and Motivation

Since ancient times, human beings have used a range of tools to increase their manipulation capabilities. In the beginning these tools were simple tree branches, which evolved into long poles with tweezers, such as the blacksmith's tools that help to handle hot pieces of iron. These developments were the ancestors of master–slave robotic systems, where the slave robot reproduces the master motions controlled by a human operator. Teleoperated robotic systems allow humans to interact with robotic manipulators and vehicles and to handle objects located in a remote environment, extending human manipulation capabilities to far-off locations, allowing the execution of quite complex tasks, and keeping the operator out of dangerous situations.

The beginnings of teleoperation can be traced back to the beginnings of radio communication, when Nikola Tesla developed what can be considered the first teleoperated apparatus, dated 8 November 1898. This development was reported in US patent 613809, Method of and Apparatus for Controlling Mechanism of Moving Vessels or Vehicles. However, bilateral teleoperation systems did not appear until the late 1940s. The first bilateral manipulators were developed for handling radioactive materials. Outstanding pioneers were Raymond Goertz and his colleagues at the Argonne National Laboratory outside of Chicago, and Jean Vertut at a counterpart nuclear engineering laboratory near Paris. The first mechanisms were mechanically coupled, and the slave manipulator mimicked the master motions, both being very similar mechanisms (Fig. 27.1). It was not until the mid 1950s that Goertz presented the first electrically coupled master–slave manipulator (Fig. 27.2) [27.1].

In the 1960s applications were extended to underwater teleoperation, where submersible devices carried cameras and the operator could watch the remote robot and its interaction with the submerged environment. Space teleoperation dates from the 1970s, and in this application the presence of time delay started to cause instability problems.

Technology has evolved with giant steps, resulting

in better robotic manipulators and, in particular, in-

creasing the communication means, from mechanical to

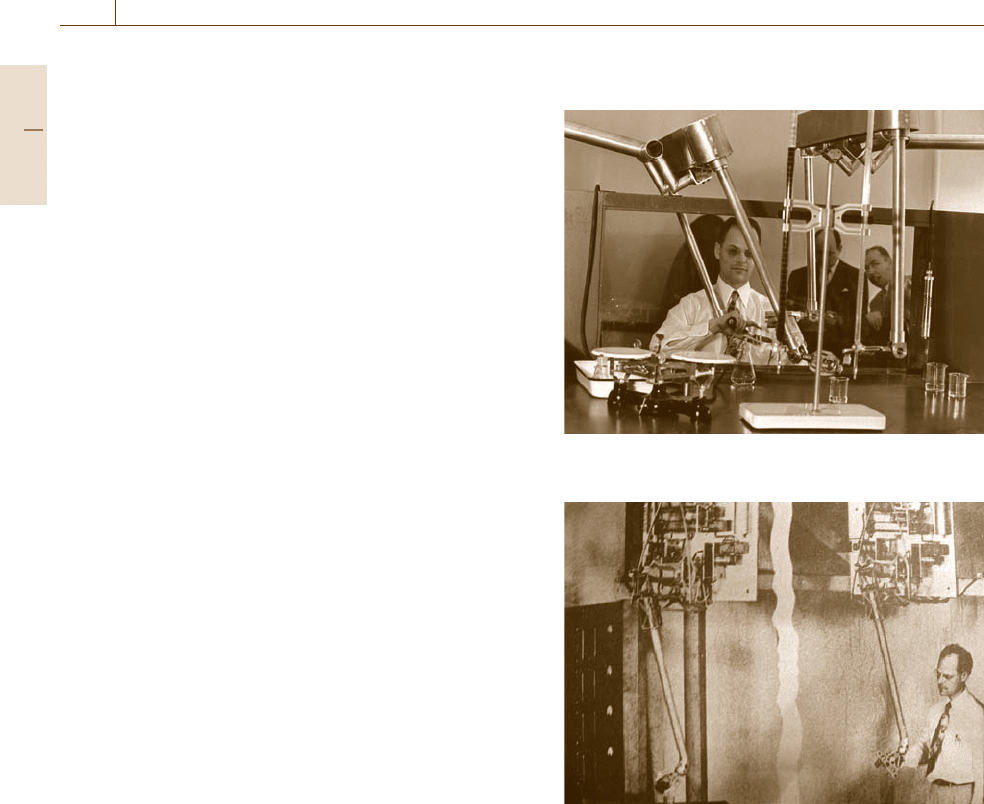

Fig. 27.1 Raymond Goertz with the first mechanically cou-

pled teleoperator (Source: Argonne National Labs)

Fig. 27.2 Raymond Goertz with an electrically coupled

teleoperator (Source: Argonne National Labs)

electrical transmission, using optic wires, radio signals,

and the Internet which practically removes any distance

limitation.

Today, teleoperation systems are applied in a large number of fields. The most illustrative are space, underwater, medicine, and hazardous environments, which are described, among others, in Sect. 27.4.


27.2 General Scheme and Components

A modern teleoperation system is composed of several functional modules, depending on the aim of the system. As a paradigm of an up-to-date teleoperated robotic system, the one developed at the Robotics Laboratory of the Institute of Industrial and Control Engineering (IOC), Technical University of Catalonia (UPC), Spain, is described below [27.2].

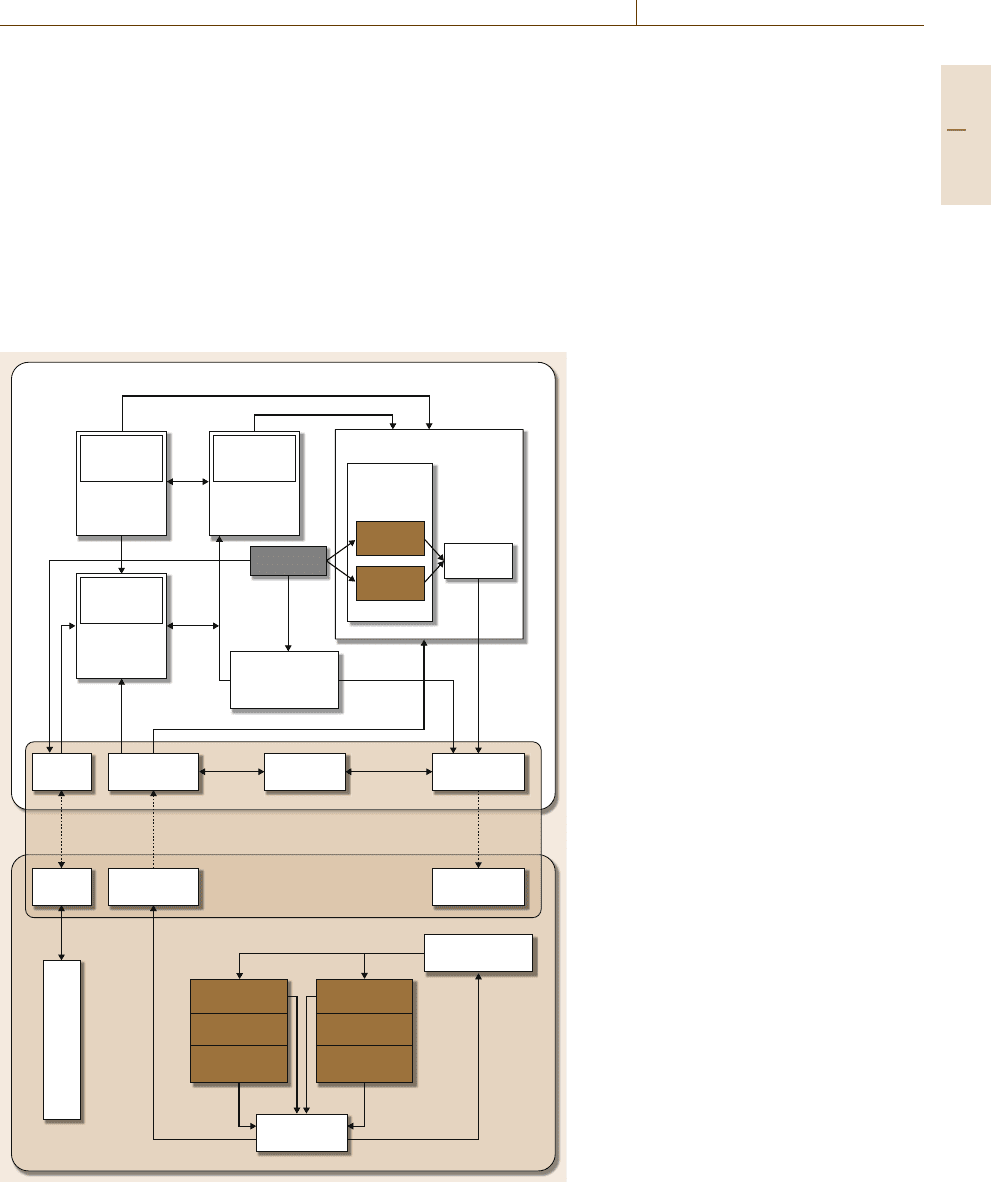

The outline of the IOC teleoperation system is represented in Fig. 27.3. The diagram contains two large blocks that correspond to the local station, where the human operator and the master robots (haptic devices) are located, and the remote station, which includes two industrial manipulators as slave robots. The system contains the following modules.

Fig. 27.3 A general scheme of a teleoperation system (courtesy of IOC-UPC). Local station: operator, haptic devices 1 and 2, haptic representation module (haptic representation engine, geometric conversion), relational positioning module, local planning module, simulation module (collision detection), augmented reality and visualization. Remote station: remote planning module, controllers I and II, robots I and II, force sensors I and II, audio and video. Communication module: command and state codification/decodification, network monitoring.

Relational positioning module: This module provides the operator with a means to define geometric relationships that should be satisfied by the part manipulated by the robots with respect to the objects in the environment. These relationships can completely define the position of the manipulated part, and thus fix all the robots' degrees of freedom (DOFs), or they can partially determine its position and orientation and therefore fix only some DOFs. In the latter case, the remaining degrees of freedom are those that the operator will be able to control by means of one or more haptic devices (master robots). The output of this module is the solution subspace in which the constraints imposed by the relationships are satisfied. This output is sent to the augmented-reality module (for visualization), the command codification submodule (to define the possible motions in the solution subspace), and the planning module (to incorporate the motion constraints into the haptic devices).
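As a simplified illustration of the solution subspace, the sketch below assumes that every geometric relationship fixes one of the six pose coordinates directly (x, y, z, roll, pitch, yaw). Real relational positioning handles general geometric constraints, so this axis-aligned model, the DOF names, and the functions `free_dofs` and `compose_pose` are hypothetical.

```python
from typing import Dict, List, Sequence

# Pose coordinates of the manipulated part (assumed axis-aligned constraint model)
DOF_NAMES = ("x", "y", "z", "roll", "pitch", "yaw")


def free_dofs(fixed: Dict[str, float]) -> List[str]:
    """Return the DOFs left to the operator once the constrained ones are fixed."""
    unknown = set(fixed) - set(DOF_NAMES)
    if unknown:
        raise ValueError(f"unknown DOFs: {sorted(unknown)}")
    return [d for d in DOF_NAMES if d not in fixed]


def compose_pose(fixed: Dict[str, float], operator_input: Sequence[float]) -> List[float]:
    """Build a full 6-DOF pose: constrained values plus haptic input for the free DOFs."""
    free = free_dofs(fixed)
    if len(operator_input) != len(free):
        raise ValueError(f"expected {len(free)} operator values, got {len(operator_input)}")
    values = dict(fixed)
    values.update(zip(free, operator_input))
    return [values[d] for d in DOF_NAMES]


# Example relationship: the part lies flat on a table plane at z = 0.1 m
on_table = {"z": 0.1, "roll": 0.0, "pitch": 0.0}
print(free_dofs(on_table))                         # ['x', 'y', 'yaw'] (subspace of dimension 3)
print(compose_pose(on_table, [0.4, -0.2, 1.57]))   # [0.4, -0.2, 0.1, 0.0, 0.0, 1.57]
```

Keeping a part flat on a plane leaves three free DOFs for the haptic device, consistent with the plane example given later for the command codification submodule.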

Haptic representation module: This module consists of the haptic representation engine and the geometric conversion submodule. The haptic representation engine is responsible for calculating the force to be fed back to the operator as a combination of the following forces:

• Restriction force: This is calculated by the planning module to ensure that, during the manipulation of the haptic device by the operator, the motion constraints determined by the relational positioning module are satisfied.

• Simulated force: This is calculated by the simulation module as a reaction to the detection of potential collision situations.

• Reflected force: This is the force signal sent from the remote station to the local station through the communication module; it corresponds to the forces of the robots' actuators and to those measured by the force/torque sensors in the robots' wrists as a result of the interaction with the environment.
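The text only states that the fed-back force is a combination of the restriction, simulated, and reflected forces. The sketch below assumes the simplest such combination, a weighted vector sum clipped to a maximum device force; the weights, the 3 N limit, and the function name are hypothetical tuning choices, not parameters of the IOC system.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def combine_forces(restriction: Sequence[float],
                   simulated: Sequence[float],
                   reflected: Sequence[float],
                   weights: Tuple[float, float, float] = (1.0, 1.0, 1.0),
                   max_force: float = 3.0) -> Vec3:
    """Weighted sum of the three feedback forces, clipped to the device's force limit.

    Weights and max_force (in newtons) are hypothetical tuning values.
    """
    w_r, w_s, w_f = weights
    total = [w_r * r + w_s * s + w_f * f
             for r, s, f in zip(restriction, simulated, reflected)]
    norm = math.sqrt(sum(c * c for c in total))
    if norm > max_force:
        # Keep the direction of the combined force, limit only its magnitude.
        total = [c * max_force / norm for c in total]
    return (total[0], total[1], total[2])


print(combine_forces((0.0, 0.0, -1.0), (0.5, 0.0, 0.0), (0.0, 0.0, 0.25)))  # (0.5, 0.0, -0.75)
print(combine_forces((4.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 3.0, 0.0)))    # (2.4, 1.8, 0.0)
```

Clipping the magnitude rather than each component separately preserves the direction of the combined force, so the operator still feels where the constraint or contact is pushing.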

The geometric conversion submodule is in charge of the conversion between the coordinates of the haptic devices and those of the robots.
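One common way to implement such a conversion is a scaled rigid transformation between the device workspace and the robot workspace. The sketch below assumes a uniform scale factor, a rotation about the vertical axis only, and a translation; the function names and parameter values are hypothetical, and a full implementation would also convert orientations and the forces sent back to the device.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def haptic_to_robot(p: Vec3, scale: float, yaw: float, origin: Vec3) -> Vec3:
    """Scale a device position, rotate it about the vertical axis, then translate it."""
    x, y, z = (scale * v for v in p)
    c, s = math.cos(yaw), math.sin(yaw)
    return (origin[0] + c * x - s * y,
            origin[1] + s * x + c * y,
            origin[2] + z)


def robot_to_haptic(q: Vec3, scale: float, yaw: float, origin: Vec3) -> Vec3:
    """Inverse mapping: robot workspace position back to device coordinates."""
    dx, dy, dz = (q[i] - origin[i] for i in range(3))
    c, s = math.cos(yaw), math.sin(yaw)
    # The inverse of a rotation is its transpose.
    return ((c * dx + s * dy) / scale,
            (-s * dx + c * dy) / scale,
            dz / scale)


# Hypothetical setup: 10x motion scaling, device frame rotated 90 degrees, robot offset.
params = dict(scale=10.0, yaw=math.pi / 2, origin=(0.6, 0.0, 0.3))
target = haptic_to_robot((0.05, 0.0, 0.02), **params)
print(target)                              # approximately (0.6, 0.5, 0.5)
print(robot_to_haptic(target, **params))   # approximately (0.05, 0.0, 0.02)
```

The inverse mapping matters because positions and constraints defined on the robot side (for example, a plane to which motion is restricted) must be expressed in device coordinates before they can be rendered haptically.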

Augmented-reality module: This module is in charge of displaying the view of the remote station to the user, with the following information added:

• Motion restrictions imposed by the operator. This information helps the operator understand and control the unrestricted degrees of freedom that can be commanded by means of the haptic device (for example, it can display a plane to which the motions of the robot end-effector are restricted).

• Graphical models of the robots in the last configuration received from the cell. This gives the operator visual feedback of the robots' state at the remote station at a higher rate than the transmission of the whole image would allow, since the robots' graphical models can be updated locally from the values of their six joint variables.

This module receives as inputs: (1) the image of the cell, (2) the state (pose) of the robots, (3) the model of the cell, and (4) the motion constraints imposed by the operator. It is responsible for maintaining the coherence of the data and for updating the model of the cell.
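A back-of-the-envelope estimate shows why updating the graphical models locally from joint values is so much cheaper than transmitting images. The figures here are hypothetical and deliberately crude: one 64-bit float per joint, an uncompressed 640 × 480 RGB frame, a 1 Mbit/s link, and no protocol overhead. Real video streams are compressed, so the actual gap is smaller than this sketch suggests.

```python
# Hypothetical, deliberately crude figures for a back-of-the-envelope comparison.
JOINTS = 6                              # six joint variables per robot (from the text)
BYTES_PER_JOINT = 8                     # assumption: one 64-bit float per joint
WIDTH, HEIGHT, CHANNELS = 640, 480, 3   # assumption: uncompressed VGA RGB frame
LINK_BPS = 1_000_000                    # assumption: 1 Mbit/s link, no protocol overhead

state_bytes = JOINTS * BYTES_PER_JOINT
image_bytes = WIDTH * HEIGHT * CHANNELS


def max_update_rate(bandwidth_bps: float, payload_bytes: int) -> float:
    """Upper bound on messages per second if the link carried nothing else."""
    return bandwidth_bps / (8 * payload_bytes)


print(state_bytes, image_bytes, image_bytes // state_bytes)             # 48 921600 19200
print(f"{max_update_rate(LINK_BPS, state_bytes):.1f} state updates/s")  # 2604.2 state updates/s
print(f"{max_update_rate(LINK_BPS, image_bytes):.2f} images/s")         # 0.14 images/s
```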

Simulation module: This module is used to detect possible collisions of the robots and the manipulated pieces with the environment, and to feed the corresponding force back to the operator so that he or she can react quickly to these potential collision situations.
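One simple way to turn collision detection into a feedback force is a penalty (virtual spring) model. The sketch below assumes obstacles approximated by spheres, a safety margin `d_safe` and a stiffness `k` with made-up values, and a force that grows linearly as the tool enters the margin; none of these choices is taken from the IOC system.

```python
import math
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]
Sphere = Tuple[Vec3, float]   # (centre, radius)


def repulsive_force(tool: Vec3, obstacles: Iterable[Sphere],
                    d_safe: float = 0.05, k: float = 200.0) -> Vec3:
    """Penalty force pushing the tool away from obstacles closer than d_safe.

    For each obstacle the force is k * (d_safe - d) along the outward normal,
    where d is the distance from the tool to the sphere's surface.
    """
    force = [0.0, 0.0, 0.0]
    for centre, radius in obstacles:
        diff = [t - c for t, c in zip(tool, centre)]
        dist_centre = math.sqrt(sum(v * v for v in diff))
        if dist_centre == 0.0:
            continue  # normal direction undefined at the centre; ignored in this sketch
        d = dist_centre - radius
        if d < d_safe:
            magnitude = k * (d_safe - d)
            for i in range(3):
                force[i] += magnitude * diff[i] / dist_centre
    return (force[0], force[1], force[2])


obstacle = ((0.0, 0.0, 0.0), 0.1)
print(repulsive_force((0.0, 0.0, 0.13), [obstacle]))  # approximately (0.0, 0.0, 4.0)
print(repulsive_force((0.0, 0.0, 0.30), [obstacle]))  # (0.0, 0.0, 0.0)
```

Because the force starts at zero on the edge of the margin and rises smoothly, the operator feels the obstacle approaching before any contact occurs.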

Local planning module: The planning module of the local station computes the forces that should guide the operator to a position where the geometric relationships he or she has defined are satisfied, as well as the forces necessary to prevent the operator from violating the corresponding restrictions.
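The guidance and restriction forces can be pictured as a virtual spring-damper attached to the constraint. The sketch below assumes the constraint is a plane, given by a point and a normal, and pulls the haptic device back onto it while leaving motion within the plane free; the gains `k` and `b` and the function names are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def plane_guidance_force(p: Vec3, v: Vec3, point_on_plane: Vec3, normal: Vec3,
                         k: float = 300.0, b: float = 5.0) -> Vec3:
    """Virtual spring-damper acting only along the plane normal.

    The spring term pulls the device back onto the plane (guidance); the damper
    resists velocity that would take it off the plane (restriction). Motion within
    the plane produces no force.
    """
    n_len = dot(normal, normal) ** 0.5
    n = [c / n_len for c in normal]
    offset = dot(n, [pi - qi for pi, qi in zip(p, point_on_plane)])  # signed distance
    normal_speed = dot(n, v)
    magnitude = -k * offset - b * normal_speed
    return (magnitude * n[0], magnitude * n[1], magnitude * n[2])


plane_point, plane_normal = (0.0, 0.0, 0.1), (0.0, 0.0, 1.0)
print(plane_guidance_force((0.2, 0.1, 0.12), (0.0, 0.0, 0.0), plane_point, plane_normal))
# approximately (0.0, 0.0, -6.0): pulled back down onto the plane
print(plane_guidance_force((0.3, 0.1, 0.10), (0.1, 0.0, 0.0), plane_point, plane_normal))
# zero force (values may print as -0.0): sliding along the plane is free
```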

Remote planning module: The planning module of the remote station is in charge of reconstructing the trajectories traced by the operator with the haptic device. This module includes a position and force feedback loop that allows motions to be executed safely with compliance.

Communication module: This module is in charge of communications between the local and the remote stations through the communication channel in use (e.g., the Internet or Internet2). It consists of the following submodules for information processing in the local and remote stations:

• Command codification/decodification: These submodules are responsible for the codification and decodification of the motion commands sent from the local station to the remote station. These commands should contain the information on the degrees of freedom constrained to satisfy the geometric relationships and the motion variables of the unrestricted ones, following the movements specified by the operator by means of the haptic devices (for instance, if the motion is constrained to be on a plane, this information will be transferred, and the commands will then be the three variables that define the motion on that plane). For each robot, the following three qualitatively different situations are possible:

– The motion subspace satisfying the constraints defined by the relationships fixed by the operator has dimension zero. This means that the constraints completely determine the position and orientation (pose) of the manipulated object. In this case the command is this pose.

– The motion subspace has dimension six, i.e., the operator has not fixed any relationship. In this case the operator can manipulate the six degrees of freedom of the haptic device, and the command sent to the remote station is composed of the values of the six joint variables.

– The motion subspace has a dimension from one to five. In this case the commands are composed of the information on this subspace and the variables that describe the motion inside it, calculated from the coordinates introduced by the operator through the haptic device or determined by the local planning module.

• State codification/decodification: These submodules generate and interpret the messages between the remote and the local stations. The robot state is coded as the combination of the position and force information.

• Network monitoring system: This submodule analyzes the quality of service (QoS) of the communication channel in real time in order to properly adapt the teleoperation parameters and the sensory feedback.

Fig. 27.4 Physical architecture of a teleoperation system (courtesy of IOC-UPC). Local station: two PHANToM haptic devices (Ghost, on Windows and Linux), network monitor, 3-D visualization, and audio. Remote station: two TX90 robots with CS8 controllers, SP1 hand-held unit, force sensors, grippers, cameras, video server, audio, and Tornado II. The stations are connected through Ethernet switches and the Internet.

A scheme depicting the physical architecture of the whole teleoperation system is shown in Fig. 27.4.
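The three cases for the command messages, plus the position-and-force state message, can be sketched with Python's standard `struct` module. The wire format is entirely hypothetical: a one-byte message type; six doubles for a full pose or joint vector; and, for a subspace of dimension one to five, a `subspace_id` standing in for the subspace description (assumed to be known to both stations) followed by one double per free DOF. The robot state is assumed to be six joint positions plus a six-component force/torque reading.

```python
import struct
from typing import List, Sequence, Tuple

# Hypothetical one-byte message-type tags
POSE, JOINTS, SUBSPACE = 0, 1, 2


def encode_command(dim: int, values: Sequence[float], subspace_id: int = 0) -> bytes:
    """Encode a motion command according to the dimension of the motion subspace."""
    if dim == 0:                      # constraints fix the pose completely: send the pose
        kind = POSE
    elif dim == 6:                    # no relationship fixed: send six joint values
        kind = JOINTS
    elif 1 <= dim <= 5:               # subspace reference + one variable per free DOF
        if len(values) != dim:
            raise ValueError(f"expected {dim} subspace variables, got {len(values)}")
        return struct.pack(f"<BHB{dim}d", SUBSPACE, subspace_id, dim, *values)
    else:
        raise ValueError("subspace dimension must be between 0 and 6")
    if len(values) != 6:
        raise ValueError(f"expected 6 values, got {len(values)}")
    return struct.pack("<B6d", kind, *values)


def decode_command(msg: bytes) -> Tuple[int, int, List[float]]:
    """Return (message type, subspace id, values); subspace id is 0 for POSE/JOINTS."""
    kind = msg[0]
    if kind in (POSE, JOINTS):
        return kind, 0, list(struct.unpack_from("<6d", msg, 1))
    if kind == SUBSPACE:
        subspace_id, dim = struct.unpack_from("<HB", msg, 1)
        return kind, subspace_id, list(struct.unpack_from(f"<{dim}d", msg, 4))
    raise ValueError(f"unknown message type {kind}")


def encode_state(joints: Sequence[float], wrench: Sequence[float]) -> bytes:
    """Robot state = 6 joint positions followed by a 6-component force/torque reading."""
    if len(joints) != 6 or len(wrench) != 6:
        raise ValueError("expected 6 joint values and 6 force/torque values")
    return struct.pack("<12d", *joints, *wrench)


def decode_state(msg: bytes) -> Tuple[List[float], List[float]]:
    values = struct.unpack("<12d", msg)
    return list(values[:6]), list(values[6:])


cmd = encode_command(3, [0.4, -0.2, 1.57], subspace_id=7)   # e.g. motion on a plane
print(len(cmd), decode_command(cmd))   # 28 (2, 7, [0.4, -0.2, 1.57])
```

For motion on a plane, the command carries only three doubles plus a few header bytes, which keeps messages small when the network monitoring reports a degraded channel.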

27.2.1 Operation Principle

In order to perform a robotized task with the described teleoperation system, the operator should carry out the following steps:

• Define the motion constraints for each phase of the task, specifying the relative position of the manipulated objects or tools with respect to the environment.

• Move the haptic devices to control the motions of the robots in the subspace that satisfies the imposed constraints. By means of the force feedback applied to the operator, the haptic devices are capable of:

– guiding the operator's motions so that they satisfy the imposed constraints

– detecting collision situations and trying to avoid undesired impacts

• Supervise the execution of the task using an image of the scene displayed with three-dimensional augmented reality and additional information (such as the graphical representation of the motion subspace, the graphical model of the robots updated with the last received data, and other information relevant to the good performance of the task).

27.3 Challenges and Solutions

During the development of modern teleoperation systems, such as the one described in Sect. 27.2, many challenges have to be faced. Most of these challenges now have a partial or complete solution, and the main ones are reviewed in the following subsections.

27.3.1 Control Algorithms

A control algorithm for a teleoperation system has two main objectives: telepresence and stability. Obviously, the minimum requirement for a control scheme is to preserve stability despite the existence of time delay and the behavior of the operator and the environment. Telepresence means that the information about the remote environment is displayed to the operator in a natural manner, which implies a feeling of presence at the remote site (immersion). Good telepresence makes the remote manipulation task easier to carry out. The degree of telepresence associated with a teleoperation system is called transparency.

Scattering-based control has dominated the field of teleoperation control ever since it was first proposed by Anderson and Spong [27.3], laying the basis of modern teleoperation system control. Their approach was to render the communications passive using the analogy of a lossless transmission line with scattering theory. They showed that the scattering transformation ensures passivity of the communications despite any constant time delay. Building on this scattering approach, it was proved [27.4] that, by matching the impedances of the local and remote robot controllers with the impedance of the virtual transmission line, wave reflections are avoided. These were the beginnings of a series of developments for bilateral teleoperators. The reader may refer to [27.5, 6] for two advanced surveys on this topic.
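The scattering idea is often written in terms of wave variables. With a wave impedance b > 0, velocity ẋ and force F are mapped to u = (bẋ + F)/√(2b) and v = (bẋ − F)/√(2b), so that the transmitted power is Fẋ = (u² − v²)/2. The sketch below implements this transformation and checks numerically that a channel which only delays u and v by a constant number of samples never releases more energy than it has received. The function names, the value of b, and the discretization are assumptions for illustration, not the formulation of any specific paper cited here.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple


def to_wave(xdot: float, force: float, b: float) -> Tuple[float, float]:
    """Velocity/force pair -> wave variables (u, v) for wave impedance b > 0."""
    s = math.sqrt(2.0 * b)
    return (b * xdot + force) / s, (b * xdot - force) / s


def from_wave(u: float, v: float, b: float) -> Tuple[float, float]:
    """Inverse transformation: wave variables -> (velocity, force)."""
    s = math.sqrt(2.0 * b)
    return (u + v) / s, (u - v) * s / 2.0


def wave_power(u: float, v: float) -> float:
    """Power carried by the wave pair; equals force * velocity."""
    return 0.5 * (u * u - v * v)


def channel_energy(u_master: Sequence[float], v_slave: Sequence[float],
                   delay_steps: int, dt: float) -> List[float]:
    """Energy stored in a channel that delays u (master->slave) and v (slave->master)."""
    forward = deque([0.0] * delay_steps)    # u waves in flight towards the slave
    backward = deque([0.0] * delay_steps)   # v waves in flight towards the master
    energy, history = 0.0, []
    for u_m, v_s in zip(u_master, v_slave):
        forward.append(u_m)
        u_s = forward.popleft()             # u arriving at the slave, delayed
        backward.append(v_s)
        v_m = backward.popleft()            # v arriving at the master, delayed
        p_in = wave_power(u_m, v_m)         # power entering the channel at the master port
        p_out = wave_power(u_s, v_s)        # power leaving the channel at the slave port
        energy += (p_in - p_out) * dt
        history.append(energy)
    return history


u, v = to_wave(0.3, 2.0, b=10.0)
print(from_wave(u, v, b=10.0))   # close to (0.3, 2.0)
print(wave_power(u, v))          # close to 0.6 = 2.0 * 0.3
steps = range(2000)
h = channel_energy([math.sin(0.01 * k) for k in steps],
                   [0.5 * math.cos(0.02 * k) for k in steps], delay_steps=50, dt=0.001)
print(min(h) >= -1e-12)          # True: the delayed channel never generates energy
```

The stored energy telescopes to half the sum of the squared waves still in flight, which is why it stays non-negative for any input sequence and any constant delay.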

Various control schemes for teleoperated robotic systems have been proposed in the literature. A brief description of the most representative approaches is presented below.
