Korb K.B., Nicholson A.E. Bayesian Artificial Intelligence


cost provides a measure that allows us to compare different junction trees, obtained from different triangulations.

While the size of the compound nodes in the junction tree can be prohibitive, in terms of memory as well as computational cost, it is often the case that many of the table entries are zeros. Compression techniques can be used to store the tables more efficiently, without these zero entries.
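Here is a minimal sketch of the idea of dropping zero entries. It assumes a clique table is a Python dict from assignments to probabilities. The three-variable table and its deterministic relationship C = A AND B are hypothetical. Real junction tree implementations use their own, more elaborate storage schemes.

```python
from itertools import product


def dense_clique_table():
    """Hypothetical clique table over binary (A, B, C) with C = A AND B.

    The deterministic relationship makes half of the 8 entries zero.
    """
    table = {}
    for a, b, c in product((0, 1), repeat=3):
        p_ab = 0.5 * 0.5  # A and B independent and uniform (assumed)
        table[(a, b, c)] = p_ab if c == (a & b) else 0.0
    return table


def compress(table):
    """Keep only the non-zero entries."""
    return {key: p for key, p in table.items() if p != 0.0}


def lookup(sparse_table, key):
    """Entries missing from the compressed table are implicitly zero."""
    return sparse_table.get(key, 0.0)


dense = dense_clique_table()
sparse = compress(dense)
print(len(dense), len(sparse))
```

Every lookup in the compressed table gives the same answer as in the dense one. Only the storage shrinks.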

3.6 Approximate inference with stochastic simulation

In general, exact inference in belief networks is computationally complex, or more precisely, NP hard [52]. In practice, for most small to medium sized networks (up to three dozen nodes or so), the current best exact algorithms, using clustering, are good enough. For larger networks, or networks that are densely connected, approximate algorithms must be used.

One approach to approximate inference for multiply-connected networks is stochastic simulation. Stochastic simulation uses the network to generate a large number of cases from the network distribution. The posterior probability of a target node is estimated using these cases. By the Law of Large Numbers from statistics, as more cases are generated, the estimate converges to the exact probability.

As with exact inference, there is a computational complexity issue: approximating to within an arbitrary tolerance is also NP hard [64]. However, in practice, if the evidence being conditioned upon is not too unlikely, these approximate approaches converge fairly quickly.

Numerous other approximation methods have been developed, which rely on modifying the representation rather than on simulation. Coverage of these methods is beyond the scope of this text (see Bibliographic Notes §3.10 for pointers).

3.6.1 Logic sampling

The simplest sampling algorithm is that of logic sampling (LS) [106] (Algorithm 3.3). This generates a case by randomly selecting values for each node, weighted by the probability of that value occurring. The nodes are traversed from the root nodes down to the leaves, so at each step the weighting probability is either the prior or the CPT entry for the sampled parent values. When all the nodes have been visited, we have a “case,” i.e., an instantiation of all the nodes in the BN. To estimate $P(X \mid E)$ with a sample value $P'(X \mid E)$, we compute the ratio of cases where both $X$ and $E$ are true to the number of cases where just $E$ is true. So after the generation of each case, these combinations are counted, as appropriate.

The Hugin software uses such a compression method for junction tree tables.

© 2004 by Chapman & Hall/CRC Press LLC

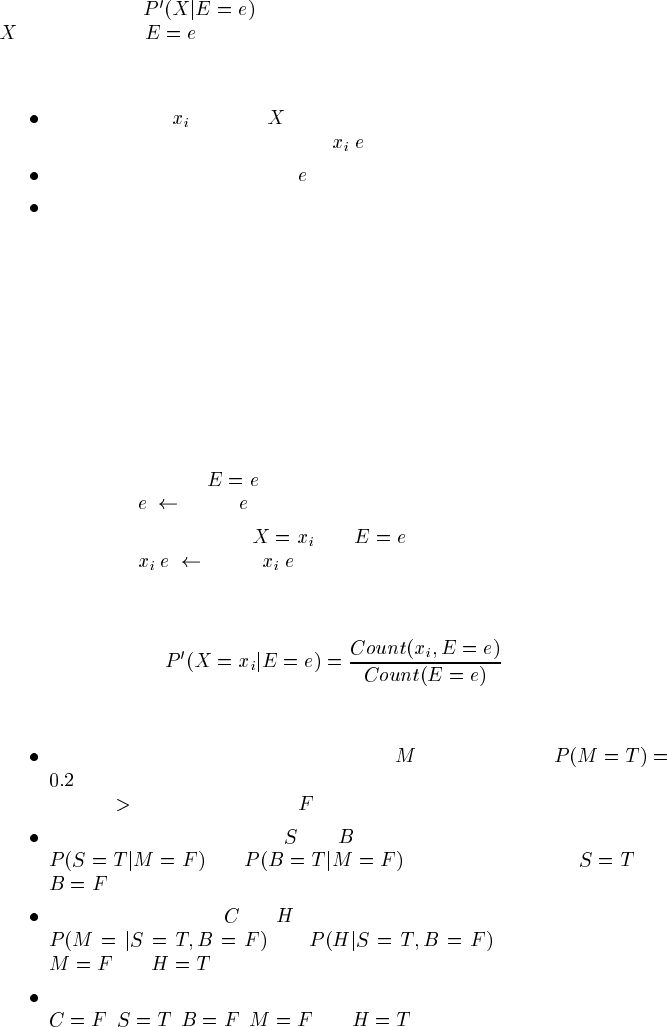

ALGORITHM 3.3
Logic Sampling (LS) Algorithm

Aim: to compute $P'(X = x_i \mid E = e)$ as an estimate of the posterior probability for node $X$ given evidence $E = e$.

Initialization
  For each value $x_i$ for node $X$
    Create a count variable $\mathrm{Count}(x_i, e)$
  Create a count variable $\mathrm{Count}(e)$
  Initialize all count variables to 0

For each round of simulation
  1. For all root nodes
       Randomly choose a value for it, weighting the choice by the priors.
  2. Loop
       Choose values randomly for children, using the conditional probabilities given the known values of the parents.
     Until all the leaves are reached
  3. Update run counts:
       If the case includes $E = e$
         $\mathrm{Count}(e) \leftarrow \mathrm{Count}(e) + 1$
       If this case includes both $X = x_i$ and $E = e$
         $\mathrm{Count}(x_i, e) \leftarrow \mathrm{Count}(x_i, e) + 1$

Current estimate for the posterior probability
  $P'(X = x_i \mid E = e) = \mathrm{Count}(x_i, e) / \mathrm{Count}(e)$

Let us work through one round of simulation for the metastatic cancer example. The only root node for this network is node $M$, which has prior $P(M = T) = 0.2$. The random number generator produces a value between 0 and 1; any number $> 0.2$ means the value $F$ is selected. Suppose that is the case.

Next, the values for children $S$ and $B$ must be chosen, using the CPT entries $P(S = T \mid M = F)$ and $P(B = T \mid M = F)$. Suppose that values $S = T$ and $B = F$ are chosen randomly.

Finally, the values for $C$ and $H$ must be chosen weighted with the probabilities $P(C = T \mid S = T, B = F)$ and $P(H = T \mid B = F)$. Suppose that values $C = F$ and $H = T$ are selected.

Then the full “case” for this simulation round is the combination of values: $C = F$, $S = T$, $B = F$, $M = F$ and $H = T$.

If we were trying to update the beliefs for a person having metastatic cancer or not (i.e., $Bel(M)$) given they had a severe headache (i.e., evidence that $H = T$), then this case would add one to the count variable $\mathrm{Count}(H = T)$ and one to $\mathrm{Count}(M = F, H = T)$, but nothing to $\mathrm{Count}(M = T, H = T)$.

The LS algorithm is easily generalized to more than one query node. The main problem with the algorithm is that when the evidence $E$ is unlikely, most of the cases have to be discarded, as they don’t contribute to the run counts.
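The following Python sketch implements Algorithm 3.3 on the metastatic cancer example. It is an illustration under assumptions, not code from the text. The network structure (M → S, M → B, S and B → C, B → H) follows the worked example. The CPT numbers should be treated as assumed values, and the helper names are hypothetical. Besides the estimate, the function reports the fraction of cases that pass the evidence check. That fraction is what collapses when the evidence is unlikely.

```python
import random

# Metastatic cancer network: M -> S, M -> B, (S, B) -> C, B -> H.
# Each entry is P(node = True | parent values); the numbers are assumed.
ORDER = ["M", "S", "B", "C", "H"]  # topological order: roots first
PARENTS = {"M": (), "S": ("M",), "B": ("M",), "C": ("S", "B"), "H": ("B",)}
CPT = {
    "M": {(): 0.2},
    "S": {(True,): 0.8, (False,): 0.2},
    "B": {(True,): 0.2, (False,): 0.05},
    "C": {(True, True): 0.8, (True, False): 0.8,
          (False, True): 0.8, (False, False): 0.05},
    "H": {(True,): 0.8, (False,): 0.6},
}


def sample_case(rng):
    """Steps 1-2: sample every node, roots first, given its sampled parents."""
    case = {}
    for node in ORDER:
        p_true = CPT[node][tuple(case[p] for p in PARENTS[node])]
        case[node] = rng.random() < p_true
    return case


def logic_sampling(query, evidence, n_rounds, seed=0):
    """Estimate P(query = True | evidence) as Count(x, e) / Count(e).

    Returns the estimate and the fraction of cases that matched the
    evidence; all other cases are discarded.
    """
    rng = random.Random(seed)
    count_e = count_xe = 0
    for _ in range(n_rounds):
        case = sample_case(rng)
        if all(case[v] == val for v, val in evidence.items()):
            count_e += 1  # Count(e) <- Count(e) + 1
            if case[query]:
                count_xe += 1  # Count(x, e) <- Count(x, e) + 1
    if count_e == 0:
        raise ValueError("no sampled case matched the evidence")
    return count_xe / count_e, count_e / n_rounds


est, kept = logic_sampling("M", {"H": True}, 100_000)
print(f"P'(M=T | H=T) = {est:.3f}, using {kept:.1%} of the cases")
```

Under the assumed CPTs, P(H=T) = 0.616, so roughly 62% of the cases are kept. The estimate should approach the exact value 0.128/0.616 ≈ 0.208. For the less likely evidence B=T, C=F (probability 0.016 under the same numbers), only about 1.6% of the cases would be kept.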

3.6.2 Likelihood weighting

A modification to the LS algorithm called likelihood weighting (LW) [87, 248] (Algorithm 3.4) overcomes the problem with unlikely evidence by always employing the observed value for each evidence node. However, the same straightforward counting would result in posteriors that did not reflect the actual BN model. So, instead of adding “1” to the run count, the CPTs for the evidence node (or nodes) are used to determine how likely that evidence combination is. That fractional likelihood is the number added to the run count.

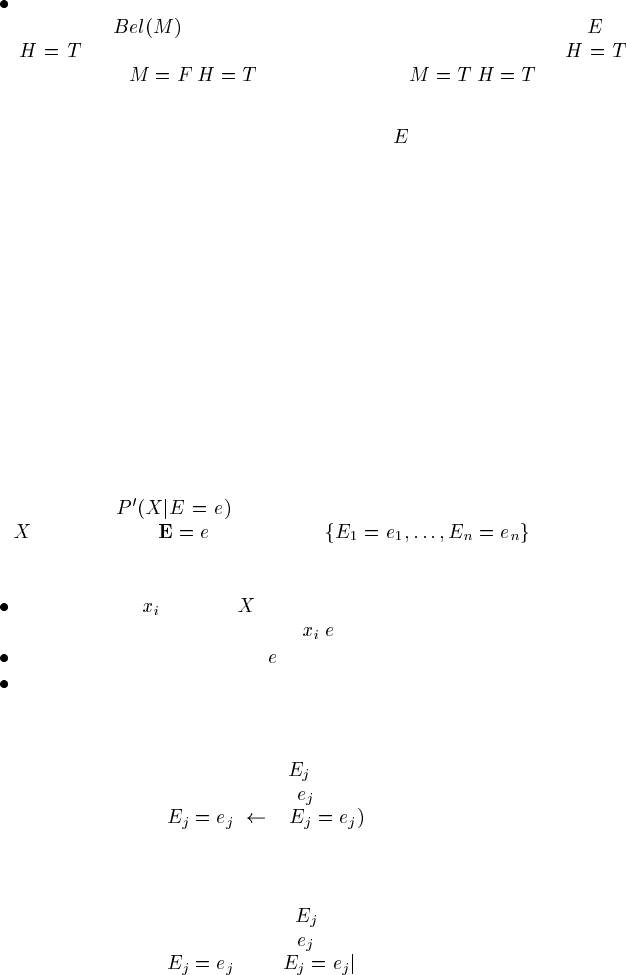

ALGORITHM 3.4
Likelihood Weighting (LW) Algorithm

Aim: to compute $P'(X = x_i \mid E)$, an approximation to the posterior probability for node $X$ given evidence $E$, consisting of $\{E_1 = e_1, \ldots, E_n = e_n\}$.

Initialization
  For each value $x_i$ for node $X$
    Create a count variable $\mathrm{Count}(x_i, E)$
  Create a count variable $\mathrm{Count}(E)$
  Initialize all count variables to 0

For each round of simulation
  1. For all root nodes
       If a root is an evidence node, $E_j$,
         choose the evidence value, $e_j$,
         $\mathrm{likelihood}(E_j = e_j) \leftarrow P(E_j = e_j)$
       Else
         Choose a value for the root node, weighting the choice by the priors.
  2. Loop
       If a child is an evidence node, $E_j$,
         choose the evidence value, $e_j$,
         $\mathrm{likelihood}(E_j = e_j) \leftarrow P(E_j = e_j \mid \text{chosen parent values})$
       Else
         Choose values randomly for children, using the conditional probabilities given the known values of the parents.
     Until all the leaves are reached
  3. Update run counts, where $\mathrm{likelihood}(E) = \prod_j \mathrm{likelihood}(E_j = e_j)$:
       If the case includes $E$
         $\mathrm{Count}(E) \leftarrow \mathrm{Count}(E) + \mathrm{likelihood}(E)$
       If this case includes both $X = x_i$ and $E$
         $\mathrm{Count}(x_i, E) \leftarrow \mathrm{Count}(x_i, E) + \mathrm{likelihood}(E)$

Current estimate for the posterior probability
  $P'(X = x_i \mid E) = \mathrm{Count}(x_i, E) / \mathrm{Count}(E)$

Let’s look at how this works for another metastatic cancer example, where the evidence is $B = T$, and we are interested in the probability of the patient going into a coma. So, we want to compute an estimate for $P(C = T \mid B = T)$.

Choose a value for $M$ with prior $P(M = T) = 0.2$. Assume we choose $M = F$.

Next we choose a value for $S$ from the distribution $P(S \mid M = F)$, where $P(S = T \mid M = F) = 0.2$. Assume $S = T$ is chosen.

Look at $B$. This is an evidence node that has been set to $T$, and $P(B = T \mid M = F) = 0.05$. So this run counts as 0.05 of a complete run.

Choose a value for $C$ randomly with $P(C = T \mid S = T, B = T) = 0.8$. Assume $C = T$.

So, we have completed a run with likelihood 0.05 that reports $C = T$ given $B = T$. Hence, both $\mathrm{Count}(C = T, B = T)$ and $\mathrm{Count}(B = T)$ are incremented by 0.05.
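Algorithm 3.4 can be sketched the same way. The sketch reuses the metastatic cancer structure, but the CPT numbers are assumptions for illustration, and `likelihood_weighting` is a hypothetical helper. Evidence nodes are clamped and multiply the case weight instead of being sampled. Unlike the hand-worked round above, it also samples H, which does not affect the estimate for C.

```python
import random

# Metastatic cancer network (structure as in the example; numbers assumed).
# Each entry is P(node = True | parent values).
ORDER = ["M", "S", "B", "C", "H"]
PARENTS = {"M": (), "S": ("M",), "B": ("M",), "C": ("S", "B"), "H": ("B",)}
CPT = {
    "M": {(): 0.2},
    "S": {(True,): 0.8, (False,): 0.2},
    "B": {(True,): 0.2, (False,): 0.05},
    "C": {(True, True): 0.8, (True, False): 0.8,
          (False, True): 0.8, (False, False): 0.05},
    "H": {(True,): 0.8, (False,): 0.6},
}


def likelihood_weighting(query, evidence, n_rounds, seed=0):
    """Estimate P(query = True | evidence) as in Algorithm 3.4.

    Evidence nodes are set to their observed values, and each case is
    weighted by the product of P(e_j | sampled parent values).
    """
    rng = random.Random(seed)
    count_e = 0.0   # Count(E): sum of case weights
    count_xe = 0.0  # Count(x, E): weights of cases with query = True
    for _ in range(n_rounds):
        case, likelihood = {}, 1.0
        for node in ORDER:
            p_true = CPT[node][tuple(case[p] for p in PARENTS[node])]
            if node in evidence:
                case[node] = evidence[node]
                likelihood *= p_true if evidence[node] else 1.0 - p_true
            else:
                case[node] = rng.random() < p_true
        count_e += likelihood
        if case[query]:
            count_xe += likelihood
    return count_xe / count_e


p_coma = likelihood_weighting("C", {"B": True}, 50_000)
p_cancer = likelihood_weighting("M", {"B": True}, 50_000)
print(f"P'(C=T | B=T) = {p_coma:.3f}, P'(M=T | B=T) = {p_cancer:.3f}")
```

Both estimates can be checked directly against exact values under the assumed CPTs. P(C=T | B=T) = 0.8, because C's CPT gives 0.8 whenever B=T. P(M=T | B=T) = 0.04/0.08 = 0.5.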

3.6.3 Markov Chain Monte Carlo (MCMC)

Both the logic sampling and likelihood weighting sampling algorithms generate each sample individually, starting from scratch. MCMC, on the other hand, generates a sample by making a random change to the previous sample. It does this by randomly sampling a value for one of the non-evidence nodes $X_i$, conditioned on the current value of the nodes in its Markov blanket. The Markov blanket consists of the parents, children and children’s parents (see §2.2.2).

The technical details of why MCMC returns consistent estimates for the posterior probabilities are beyond the scope of this text (see [238] for details). Note that different uses of MCMC are presented elsewhere in this text, namely Gibbs sampling (for parameter learning) in §7.3.2.1 and Metropolis search (for structure learning) in §8.6.2.
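The text does not fix a particular sampling scheme. This sketch uses Gibbs sampling with systematic sweeps over the non-evidence nodes, one common MCMC variant, as an assumption. The CPT numbers are again assumed for illustration. For brevity it obtains the Markov blanket conditional by evaluating the full joint with the node set to each value. Factors outside the blanket cancel in the normalization, so the result is the same, only less efficient.

```python
import random

# Metastatic cancer network (structure as in the example; numbers assumed).
ORDER = ["M", "S", "B", "C", "H"]
PARENTS = {"M": (), "S": ("M",), "B": ("M",), "C": ("S", "B"), "H": ("B",)}
CPT = {
    "M": {(): 0.2},
    "S": {(True,): 0.8, (False,): 0.2},
    "B": {(True,): 0.2, (False,): 0.05},
    "C": {(True, True): 0.8, (True, False): 0.8,
          (False, True): 0.8, (False, False): 0.05},
    "H": {(True,): 0.8, (False,): 0.6},
}


def joint(case):
    """P(case) for a full assignment: one CPT factor per node."""
    p = 1.0
    for node in ORDER:
        p_true = CPT[node][tuple(case[q] for q in PARENTS[node])]
        p *= p_true if case[node] else 1.0 - p_true
    return p


def gibbs(query, evidence, n_sweeps, burn_in=1_000, seed=0):
    """Estimate P(query = True | evidence) with Gibbs sampling."""
    rng = random.Random(seed)
    hidden = [n for n in ORDER if n not in evidence]
    case = {n: False for n in ORDER}  # arbitrary starting state ...
    case.update(evidence)             # ... consistent with the evidence
    hits = 0
    for sweep in range(burn_in + n_sweeps):
        for node in hidden:
            # Resample one non-evidence node given all the others; only its
            # Markov blanket factors depend on its value, the rest cancel.
            case[node] = True
            p_t = joint(case)
            case[node] = False
            p_f = joint(case)
            case[node] = rng.random() < p_t / (p_t + p_f)
        if sweep >= burn_in and case[query]:
            hits += 1
    return hits / n_sweeps


est = gibbs("M", {"H": True}, 40_000)
print(f"P'(M=T | H=T) = {est:.3f}")  # exact under these CPTs: 0.128/0.616
```

Choosing a node at random at each step, as the text describes, would also work. The systematic sweep is simply an easy choice.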

3.6.4 Using virtual evidence

There are two straightforward alternatives for using virtual evidence with sampling inference algorithms.

1. Use a virtual node: add an explicit virtual node V to the network, as described in §3.4, and run the algorithm with evidence V=T.

2. In the likelihood weighting algorithm, we already weight each sample by the likelihood. We can set the likelihood for the node receiving virtual evidence to the normalized likelihood ratio.

Thanks to Bob Welch and Kevin Murphy for these suggestions.
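Alternative 2 can be sketched as follows. This minimal illustration rests on several assumptions. It reuses the assumed metastatic cancer CPTs. It places hypothetical virtual evidence on node B, with likelihood ratio 4:1 in favour of B=T. With no other evidence, it samples every node from the network and weights each case by the normalized likelihood-ratio entry for the sampled value of B. Normalizing does not change the estimate, because the weights appear in both numerator and denominator.

```python
import random

# Metastatic cancer network (structure as in the example; numbers assumed).
ORDER = ["M", "S", "B", "C", "H"]
PARENTS = {"M": (), "S": ("M",), "B": ("M",), "C": ("S", "B"), "H": ("B",)}
CPT = {
    "M": {(): 0.2},
    "S": {(True,): 0.8, (False,): 0.2},
    "B": {(True,): 0.2, (False,): 0.05},
    "C": {(True, True): 0.8, (True, False): 0.8,
          (False, True): 0.8, (False, False): 0.05},
    "H": {(True,): 0.8, (False,): 0.6},
}


def lw_virtual(query, node, likelihood_ratio, n_rounds, seed=0):
    """Estimate P(query = True | virtual evidence on `node`).

    `likelihood_ratio` is (lambda_T, lambda_F); it is normalized to sum to 1
    and used as the weight of each sampled case. No other evidence is
    assumed, so every node is sampled from its CPT.
    """
    lam_t, lam_f = likelihood_ratio
    total = lam_t + lam_f
    weight_of = {True: lam_t / total, False: lam_f / total}
    rng = random.Random(seed)
    count_e = count_xe = 0.0
    for _ in range(n_rounds):
        case = {}
        for n in ORDER:
            p_true = CPT[n][tuple(case[p] for p in PARENTS[n])]
            case[n] = rng.random() < p_true
        w = weight_of[case[node]]
        count_e += w
        if case[query]:
            count_xe += w
    return count_xe / count_e


est = lw_virtual("M", "B", (4, 1), 100_000)
print(f"P'(M=T | virtual evidence on B) = {est:.3f}")
```

Alternative 1 yields the same weights. Take a virtual child V of B with P(V=T | B=T) = 0.8 and P(V=T | B=F) = 0.2, observed as V=T. In likelihood weighting it contributes exactly these factors. Under these assumed numbers the exact posterior is 0.064/0.248 ≈ 0.258.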

3.6.5 Assessing approximate inference algorithms

In order to assess the performance of a particular approximate inference algorithm, and to compare algorithms, we need a measure for the quality of the solution at any particular time. One possible measure is the Kullback-Leibler divergence between a true distribution $P$ and the estimated distribution $Q$ of a node with states $i$, given by:

Definition 3.1 Kullback-Leibler divergence

$$KL(P, Q) = \sum_i P(i) \log \frac{P(i)}{Q(i)}$$

Note that when $P$ and $Q$ are the same, the KL divergence is zero (which is proven in §10.8). When $P(i)$ is zero, the convention is to take the summand to have a zero value. And, KL divergence is undefined when $Q(i) = 0$; standardly, it is taken as infinity (unless also $P(i) = 0$, in which case the summand is 0).

Alternatively, we can put this measure in terms of the updated belief for query node $X$:

$$KL(Bel(X), Bel'(X)) = \sum_{x_i} Bel(X = x_i) \log \frac{Bel(X = x_i)}{Bel'(X = x_i)} \qquad (3.7)$$

where $Bel(X)$ is computed by an exact algorithm and $Bel'(X)$ by the approximate algorithm. Of course, this measure can only be applied when the network is such that the exact posterior can be computed.

When there is more than one query node, we should use the marginal KL divergence over all the query nodes. For example, if $X$ and $Y$ are query nodes, and $E$ the evidence, we should use $KL(P(X, Y \mid E), P'(X, Y \mid E))$. Often the average or the sum of the KL divergences for the individual query nodes is used to estimate the error measure, which is not exact. Problem 3.11 involves plotting the KL divergence to compare the performance of approximate inference algorithms.
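A toy example shows why summing per-node divergences is not exact. Both distributions in this sketch are hypothetical. The “exact” posterior over two binary query nodes X and Y makes them strongly correlated. The “approximate” one has the right marginals but treats X and Y as independent. The per-node KL divergences are then zero, yet the joint KL divergence is not.

```python
import math
from itertools import product


def kl(p, q):
    """KL(P, Q) for distributions stored as dicts over the same outcomes.

    Assumes Q > 0 wherever P > 0; outcomes with P = 0 contribute nothing.
    """
    return sum(pv * math.log(pv / q[k]) for k, pv in p.items() if pv > 0)


def marginal(joint, axis):
    """Marginal distribution of one query node from a joint over (X, Y)."""
    out = {}
    for key, pv in joint.items():
        out[key[axis]] = out.get(key[axis], 0.0) + pv
    return out


# Hypothetical exact posterior P(X, Y | E): X and Y agree 90% of the time.
exact = {(True, True): 0.45, (True, False): 0.05,
         (False, True): 0.05, (False, False): 0.45}
# Hypothetical approximation: correct marginals, but X and Y independent.
approx = {xy: 0.25 for xy in product((True, False), repeat=2)}

joint_kl = kl(exact, approx)
sum_of_node_kls = (kl(marginal(exact, 0), marginal(approx, 0))
                   + kl(marginal(exact, 1), marginal(approx, 1)))
print(f"joint KL = {joint_kl:.3f}, sum of per-node KLs = {sum_of_node_kls:.3f}")
```
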

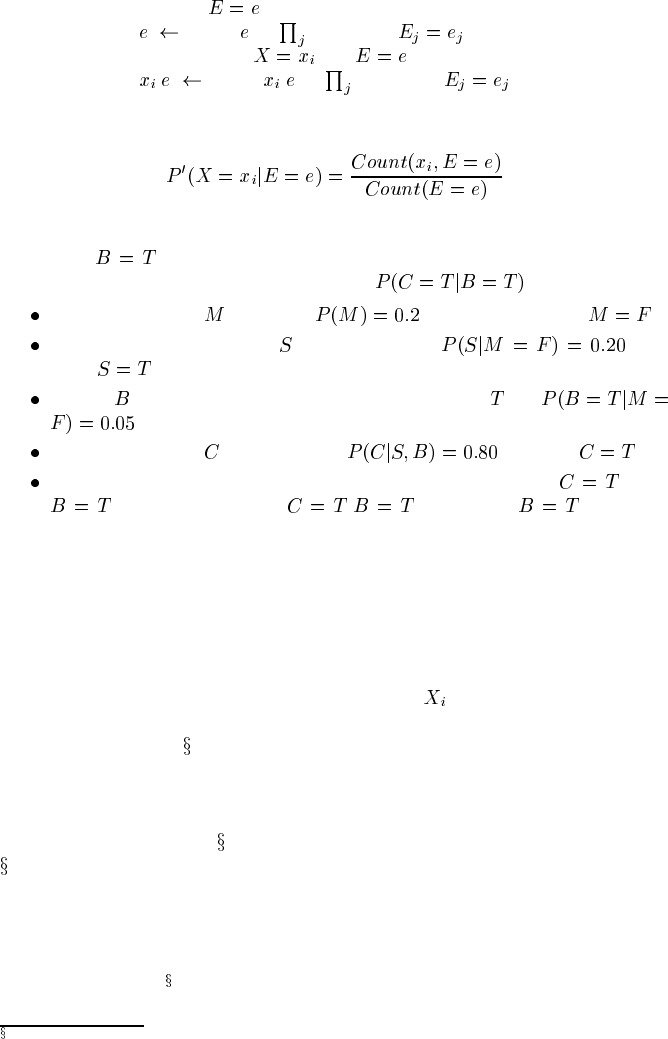

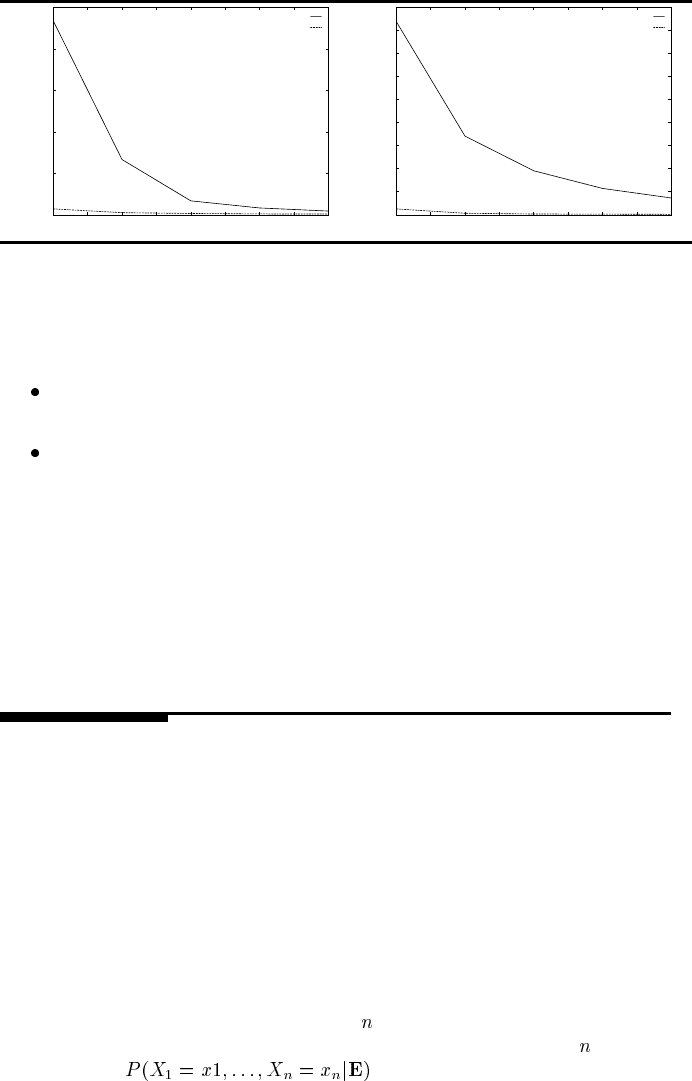

An example of this use of KL divergence is shown in Figure 3.12. These graphs show the results of an algorithm comparison experiment [205]. The test network contained 99 nodes and 131 arcs, and the LS and LW algorithms were compared for two cases:

Experiment 1: evidence added for 1 root node, while query nodes were all 35 leaf nodes.

Experiment 2: evidence added for 1 leaf node, while query nodes were all 29 root nodes.

[Figure 3.12: two panels, each plotting KL distance (vertical axis) against Iterations (x 50) (horizontal axis) for LS and LW.]

FIGURE 3.12
Comparison of the logic sampling and likelihood-weighting approximate inference algorithms.

“Kullback-Leibler distance” is the commonly employed term. However, the KL measure is asymmetric: it measures the difference from the point of view of one or the other of the distributions, so it is not a true distance.

As well as confirming the faster convergence of LW compared to LS, these and other results show that stochastic simulation methods perform better when evidence is nearer to root nodes [205]. In many real domains when the task is one of diagnosis, evidence tends to be near leaves, resulting in poorer performance of the stochastic simulation algorithms.

3.7 Other computations

In addition to the standard BN inference described in this chapter to date (computing the posterior probability for one or more query nodes), other computations are also of interest and provided by some BN software.

3.7.1 Belief revision

It is sometimes the case that rather than updating beliefs given evidence, we are more interested in the most probable values for a set of query nodes, given the evidence. This is sometimes called belief revision [217, Chapter 5]. The general case of finding a most probable instantiation of a set of $n$ variables is called MAP (maximum a posteriori probability). MAP involves finding the assignment for the $n$ variables that maximizes $P(X_1 = x_1, \ldots, X_n = x_n \mid E)$. Finding MAPs was first shown to be NP hard [254], and more recently NP complete [213]; approximating MAPs is also NP hard [1].

A special case of MAP is finding an instantiation of all the non-evidence nodes, also known as computing a most probable explanation (MPE). The “explanation” of the evidence is a complete assignment of all the non-evidence nodes, $\{Y_1 = y_1, \ldots, Y_m = y_m\}$. Computing the MPE means finding the assignment that maximizes $P(Y_1 = y_1, \ldots, Y_m = y_m \mid E)$. MPE can be calculated efficiently with a method similar to probability updating (see [128] for details). Most BN software packages have a feature for calculating MPE but not MAP.
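For a network this small, MPE and MAP can be checked by brute force. This sketch is purely illustrative. It reuses the assumed metastatic cancer CPTs and enumerates every assignment, which is exponential in the number of nodes, so it is not the efficient MPE method referred to above. `mpe` maximizes over all non-evidence nodes. The hypothetical helper `map_query` first sums out the non-target nodes and then maximizes.

```python
from itertools import product

# Metastatic cancer network (structure as in the example; numbers assumed).
ORDER = ["M", "S", "B", "C", "H"]
PARENTS = {"M": (), "S": ("M",), "B": ("M",), "C": ("S", "B"), "H": ("B",)}
CPT = {
    "M": {(): 0.2},
    "S": {(True,): 0.8, (False,): 0.2},
    "B": {(True,): 0.2, (False,): 0.05},
    "C": {(True, True): 0.8, (True, False): 0.8,
          (False, True): 0.8, (False, False): 0.05},
    "H": {(True,): 0.8, (False,): 0.6},
}


def joint(case):
    """P(case) for a full assignment: one CPT factor per node."""
    p = 1.0
    for node in ORDER:
        p_true = CPT[node][tuple(case[q] for q in PARENTS[node])]
        p *= p_true if case[node] else 1.0 - p_true
    return p


def mpe(evidence):
    """Argmax over all non-evidence nodes of P(y, e).

    Maximizing P(y, e) is equivalent to maximizing P(y | e): P(e) is fixed.
    """
    hidden = [n for n in ORDER if n not in evidence]
    best, best_p = None, -1.0
    for values in product((True, False), repeat=len(hidden)):
        p = joint({**dict(zip(hidden, values)), **evidence})
        if p > best_p:
            best, best_p = dict(zip(hidden, values)), p
    return best, best_p


def map_query(targets, evidence):
    """MAP over `targets`: sum out the other non-evidence nodes, then maximize."""
    hidden = [n for n in ORDER if n not in evidence]
    scores = {}
    for values in product((True, False), repeat=len(hidden)):
        case = {**dict(zip(hidden, values)), **evidence}
        key = tuple(case[t] for t in targets)
        scores[key] = scores.get(key, 0.0) + joint(case)
    best = max(scores, key=scores.get)
    return dict(zip(targets, best)), scores[best]


print(mpe({"H": True}))
print(map_query(["M"], {"H": True}))
```
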

3.7.2 Probability of evidence

When performing belief updating, it is usually the case that the probability of the evidence, $P(E)$, is available as a by-product of the inference procedure. For example, in polytree updating, the normalizing constant $\alpha$ is just $1/P(E)$. Clearly, there is a problem should $P(E)$ be zero, indicating that this combination of values is impossible in the domain. If that impossible combination of values is entered as evidence, the inference algorithm must detect and flag it.

The BN user must decide between the following alternatives.

1. It is indeed the case that the evidence is impossible in their domain, and therefore the data are incorrect, due to errors in either gathering or entering the data.

2. The evidence should not be impossible, and the BN incorrectly represents the domain.

This notion of possible incoherence in data has been extended from impossible evidence to unlikely combinations of evidence. A conflict measure has been proposed to detect possible incoherence in evidence [130, 145]. The basic idea is that correct findings from a coherent case covered by the model support each other and would therefore be expected to be positively correlated. Suppose we have a set of evidence $E = \{E_1 = e_1, \ldots, E_m = e_m\}$. A conflict measure on $E$ is

$$conf(E) = \log \frac{P(E_1 = e_1) \times \cdots \times P(E_m = e_m)}{P(E)}$$

A positive $conf(E)$ indicates that the evidence may be conflicting. The higher the conflict measure, the greater the discrepancy between the BN model and the evidence. This discrepancy may be due to errors in the data, or it may just be a rare case. If the conflict is due to flawed data, it is possible to trace the conflicts.
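Both computations in this subsection can be sketched by brute-force enumeration on the assumed metastatic cancer CPTs. `prob_evidence` sums the joint to get P(E) and rejects impossible evidence. `conflict` implements the measure above with the natural logarithm, an assumed base; the base only rescales the value, and the sign is what matters. Under these CPTs, serum calcium and coma are positively related. So S=T together with C=F comes out as conflicting, while S=T with C=T does not.

```python
import math
from itertools import product

# Metastatic cancer network (structure as in the example; numbers assumed).
ORDER = ["M", "S", "B", "C", "H"]
PARENTS = {"M": (), "S": ("M",), "B": ("M",), "C": ("S", "B"), "H": ("B",)}
CPT = {
    "M": {(): 0.2},
    "S": {(True,): 0.8, (False,): 0.2},
    "B": {(True,): 0.2, (False,): 0.05},
    "C": {(True, True): 0.8, (True, False): 0.8,
          (False, True): 0.8, (False, False): 0.05},
    "H": {(True,): 0.8, (False,): 0.6},
}


def joint(case):
    """P(case) for a full assignment: one CPT factor per node."""
    p = 1.0
    for node in ORDER:
        p_true = CPT[node][tuple(case[q] for q in PARENTS[node])]
        p *= p_true if case[node] else 1.0 - p_true
    return p


def prob_evidence(evidence):
    """P(E = e): sum of the joint over all values of the non-evidence nodes."""
    hidden = [n for n in ORDER if n not in evidence]
    return sum(joint({**dict(zip(hidden, values)), **evidence})
               for values in product((True, False), repeat=len(hidden)))


def conflict(evidence):
    """conf(E) = log(P(E_1 = e_1) x ... x P(E_m = e_m) / P(E))."""
    p_e = prob_evidence(evidence)
    if p_e == 0.0:
        raise ValueError("impossible evidence: P(E) = 0")
    p_product = 1.0
    for node, value in evidence.items():
        p_product *= prob_evidence({node: value})
    return math.log(p_product / p_e)


agree = conflict({"S": True, "C": True})   # findings support each other
clash = conflict({"S": True, "C": False})  # possible conflict
print(f"conf(S=T, C=T) = {agree:.3f}, conf(S=T, C=F) = {clash:.3f}")
```
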


3.8 Causal inference

There is no consensus in the community of Bayesian network researchers about the proper understanding of the relation between causality and Bayesian networks. The majority opinion is that there is nothing special about a causal interpretation, that is, one which asserts that corresponding to each (non-redundant) direct arc in the network there is not only a probabilistic dependency but also a causal dependency. As we saw in Chapter 2, after all, by reordering the variables and applying the network construction algorithm we can get the arcs turned around! Yet, clearly, both networks cannot be causal.

We take the minority point of view, however (one, incidentally, shared by Pearl [218] and Neapolitan [199]), that causal structure is what underlies all useful Bayesian networks. Certainly not all Bayesian networks are causal, but if they represent a real-world probability distribution, then some causal model is their source.

Regardless of how that debate falls out, however, it is important to consider how to do inferences with Bayesian networks that are causal. If we have a causal model, then we can perform inferences which are not available with a non-causal BN. This ability is important, for there is a large range of potential applications for specifically causal inferences, such as process control, manufacturing and decision support for medical intervention. For example, we may need to reason about what will happen to the quality of a manufactured product if we adopt a cheaper supplier for one of its parts. Non-causal Bayesian networks, and causal Bayesian networks using ordinary propagation, are currently used to answer just such questions; but this practice is wrong. Although the Bayesian network tools do not explicitly support causal reasoning, we will nevertheless now explain how to do it properly.

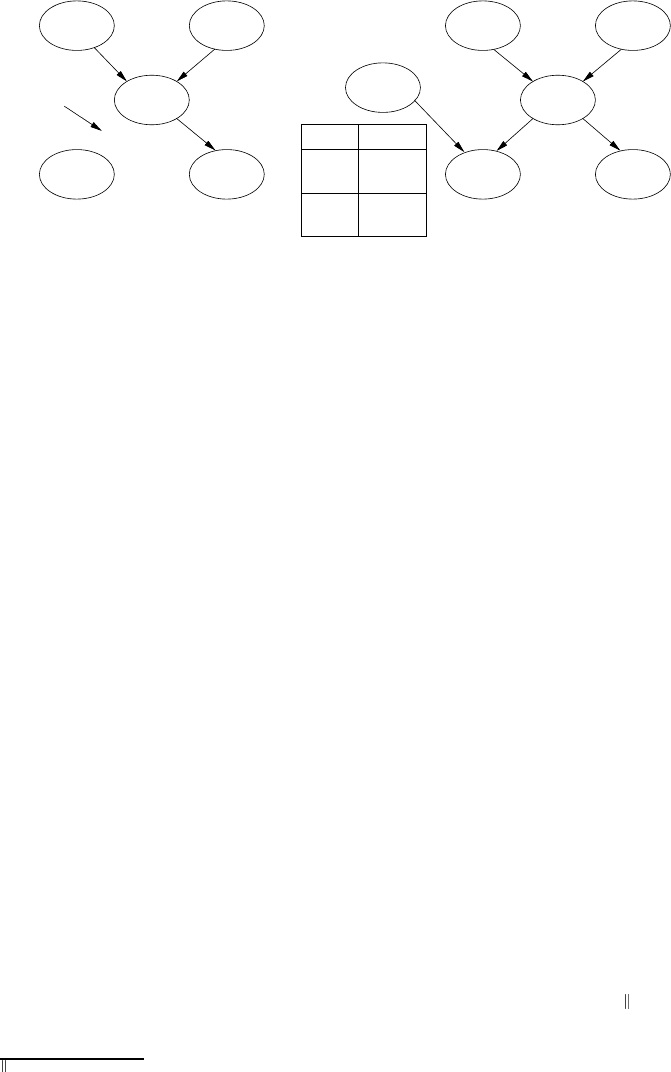

Consider again Pearl’s earthquake network of Figure 2.6. That network is intended to represent a causal structure: each link makes a specific causal claim. Since it is a Bayesian network (causal or not), if we observe that JohnCalls is true, then this will raise the probability of MaryCalls being true, as we know. However, if we intervene, somehow forcing John to call, this probability raising inference will no longer be valid. Why? Because the reason an observation raises the probability of Mary calling is that there is a common cause for both, the Alarm; so one provides evidence for the other. However, under intervention we have effectively cut off the connection between the Alarm and John’s calling. The belief propagation (message passing) from JohnCalls to Alarm and then down to MaryCalls is all wrong under causal intervention.

Judea Pearl, in his recent book Causality [218], suggests that we understand the “effectively cut off” above quite literally. He models causal intervention in a variable $X$ simply by (temporarily) cutting all arcs from $Parents(X)$ to $X$. If you do that with the earthquake example (see Figure 3.13(a)), then, of course, you will find that forcing John to call tells us nothing about earthquakes, burglaries, the Alarm or Mary, which is quite correct. This is the simplest way to model causal interventions and often will do the job.
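Pearl’s cut method can be checked by brute-force enumeration on the earthquake network. In this sketch the JohnCalls entries (0.90 and 0.05) are those shown in Figure 3.13(b). The remaining CPT values are assumptions for illustration, and `do` is a hypothetical helper name, not a library function. Observing JohnCalls=T raises the probability of MaryCalls. Intervening leaves it at its prior: the intervention cuts the arc from Alarm and fixes JohnCalls=T.

```python
from itertools import product

# Earthquake network: (Burglary, Earthquake) -> Alarm -> JohnCalls, MaryCalls.
# Entries are P(node = True | parent values).
ORDER = ["Burglary", "Earthquake", "Alarm", "JohnCalls", "MaryCalls"]
PARENTS = {"Burglary": (), "Earthquake": (), "Alarm": ("Burglary", "Earthquake"),
           "JohnCalls": ("Alarm",), "MaryCalls": ("Alarm",)}
CPT = {
    "Burglary": {(): 0.01},                             # assumed
    "Earthquake": {(): 0.02},                           # assumed
    "Alarm": {(True, True): 0.95, (True, False): 0.94,  # assumed
              (False, True): 0.29, (False, False): 0.001},
    "JohnCalls": {(True,): 0.90, (False,): 0.05},       # as in Figure 3.13(b)
    "MaryCalls": {(True,): 0.70, (False,): 0.01},       # assumed
}


def prob(target, evidence, parents, cpt):
    """P(target = True | evidence) by summing the joint over all assignments."""
    num = den = 0.0
    for values in product((True, False), repeat=len(ORDER)):
        case = dict(zip(ORDER, values))
        if any(case[v] != val for v, val in evidence.items()):
            continue
        p = 1.0
        for node in ORDER:
            p_true = cpt[node][tuple(case[q] for q in parents[node])]
            p *= p_true if case[node] else 1.0 - p_true
        den += p
        if case[target]:
            num += p
    return num / den


def do(node, value, parents, cpt):
    """Cut all arcs into `node` and make it take `value` with probability 1."""
    cut_parents = {**parents, node: ()}
    cut_cpt = {**cpt, node: {(): 1.0 if value else 0.0}}
    return cut_parents, cut_cpt


prior = prob("MaryCalls", {}, PARENTS, CPT)
observed = prob("MaryCalls", {"JohnCalls": True}, PARENTS, CPT)
cut_parents, cut_cpt = do("JohnCalls", True, PARENTS, CPT)
intervened = prob("MaryCalls", {"JohnCalls": True}, cut_parents, cut_cpt)
print(f"prior {prior:.3f}, observed {observed:.3f}, intervened {intervened:.3f}")
```
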

[Figure 3.13: (a) the earthquake network (Burglary, Earthquake, Alarm, JohnCalls, MaryCalls) with the arc from Alarm to JohnCalls marked as “cut”; (b) the same network augmented with a node Intervene JohnCalls (I) as an extra parent of JohnCalls, whose CPT is:

I   A   P(J=T|I,A)
T   T   1.0
T   F   1.0
F   T   0.90
F   F   0.05]

FIGURE 3.13
Modeling causal interventions: (a) Pearl’s cut method; (b) augmented model (CPT unchanged when no intervention).

There is, however, a more general approach to causal inference. Suppose that the causal intervention itself is only probabilistic. In the John calling case, we can imagine doing something which will simply guarantee John’s cooperation, like pulling out a gun. But suppose that we have no such guarantees. Say we are considering a middle-aged patient at genetic risk of cardiovascular disease. We would like to model life and health outcomes assuming we persuade the patient to give up Smoking. If we simply cut the connection between Smoking and its parents (assuming Smoking is caused!), then we are assuming our act of persuasion has a probability one of success. We might more realistically wish to model the effectiveness of persuasion with a different probability. We can do that by making an augmented model, adding a new parent of Smoking, say Intervene-on-Smoking. We can then instrument the CPT for Smoking to include whatever probabilistic impact the (attempted) intervention has. Of course, if the interventions are fully effective (i.e., with probability one), this can be put into the CPT, and the result will be equivalent to Pearl’s cut method (see Figure 3.13(b)). But with the full CPT available, any kind of intervention, including one which interacts with other parents, may be represented. Subsequent propagation with the new intervention node “observed” (but not its intervened-upon effect) will provide correct causal inference, given that we have a causal model of the process in question to begin with.
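The augmented model of Figure 3.13(b) can be sketched the same way, under several assumptions. The CPT values other than JohnCalls are illustrative. `effectiveness` is a hypothetical parameter for a partially effective intervention; the figure itself shows the fully effective case, P(J=T | I=T, A) = 1.0. Because Intervene is a root node whose only child is JohnCalls, clamping it to a value is the same as observing it. With effectiveness 1.0 the result coincides with the cut method. With a weaker intervention, seeing John call still carries some evidence about the Alarm.

```python
from itertools import product

P_BURGLARY, P_EARTHQUAKE = 0.01, 0.02                 # assumed
P_ALARM = {(True, True): 0.95, (True, False): 0.94,   # assumed
           (False, True): 0.29, (False, False): 0.001}
P_JOHN = {True: 0.90, False: 0.05}  # Figure 3.13(b) rows with I = F
P_MARY = {True: 0.70, False: 0.01}                    # assumed
NAMES = ["Burglary", "Earthquake", "Alarm", "JohnCalls", "MaryCalls"]


def bern(p_true, value):
    """Probability of a binary `value` when P(True) = p_true."""
    return p_true if value else 1.0 - p_true


def p_john(intervene, alarm, effectiveness):
    """P(JohnCalls = T | Intervene, Alarm) in the augmented model.

    Hypothetical parameterization: the intervention succeeds with
    probability `effectiveness`; otherwise John behaves as in the
    original CPT.
    """
    if not intervene:
        return P_JOHN[alarm]  # CPT unchanged when no intervention
    return effectiveness + (1.0 - effectiveness) * P_JOHN[alarm]


def prob(target, evidence, intervene, effectiveness=1.0):
    """P(target = T | evidence) with the root node Intervene clamped."""
    num = den = 0.0
    for values in product((True, False), repeat=len(NAMES)):
        c = dict(zip(NAMES, values))
        if any(c[v] != val for v, val in evidence.items()):
            continue
        p = (bern(P_BURGLARY, c["Burglary"])
             * bern(P_EARTHQUAKE, c["Earthquake"])
             * bern(P_ALARM[(c["Burglary"], c["Earthquake"])], c["Alarm"])
             * bern(p_john(intervene, c["Alarm"], effectiveness), c["JohnCalls"])
             * bern(P_MARY[c["Alarm"]], c["MaryCalls"]))
        den += p
        if c[target]:
            num += p
    return num / den


seen = prob("Alarm", {"JohnCalls": True}, intervene=False)
forced = prob("Alarm", {"JohnCalls": True}, intervene=True, effectiveness=1.0)
partial = prob("Alarm", {"JohnCalls": True}, intervene=True, effectiveness=0.5)
print(f"P(Alarm=T | John calls): observed {seen:.3f}, "
      f"forced {forced:.3f}, half-effective {partial:.3f}")
```

Clamping Intervene to F reproduces the original network exactly. This is the sense in which a zero prior on an intervention node leaves the original distribution intact.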

Adding intervention variables will unfortunately alter the original probability distribution over the unaugmented set of variables. That distribution can be recovered by setting the priors over the new variables to zero. Current Bayesian network tools, lacking support for explicit causal modeling, will not then allow those variables to be instantiated, because of the idea that this constitutes an impossible observation; an idea that is here misplaced. As a practical matter, using current tools, one could maintain two Bayesian networks, one with and one without intervention variables, in order to simplify moving between reasoning with and without interventions.

This point arose in conversation with Richard Neapolitan.


3.9 Summary

Reasoning with Bayesian networks is done by updating beliefs (that is, computing the posterior probability distributions) given new information, called evidence. This is called probabilistic inference. While both exact and approximate inference are theoretically computationally complex, a range of exact and approximate inference algorithms have been developed that work well in practice. The basic idea is that new evidence has to be propagated to other parts of the network; for simple tree structures an efficient message passing algorithm based on local computations is used. When the network structure is more complex, specifically when there are multiple paths between nodes, additional computation is required. The best exact method for such multiply-connected networks is the junction tree algorithm, which transforms the network into a tree structure before performing propagation. When the network gets too large, or is highly connected, even the junction tree approach becomes computationally infeasible. In that case the main approaches to performing approximate inference are based on stochastic simulation. In addition to the standard belief updating, other computations of interest include the most probable explanation and the probability of the evidence. Finally, Bayesian networks can be augmented for causal modeling, that is, for reasoning about the effect of causal interventions.

3.10 Bibliographic notes

Pearl [215] developed the message passing method for inference in simple trees. Kim [144] extended it to polytrees. The polytree message passing algorithm given here follows that of Pearl [217], using some of Russell’s notation [237].

Two versions of junction tree clustering were developed in the late 1980s. One version by Shafer and Shenoy [252] (described in [128]) suggested an elimination method resulting in a message passing scheme for their so-called join-tree structure, a term taken from the relational database literature. The other method was initially developed by Lauritzen and Spiegelhalter [169] as a two-stage method based on the running intersection property. This was soon refined to the message passing scheme in a junction tree [124, 127, 125] that is described in this chapter.

Thanks to Finn Jensen for providing a summary chronology of junction tree clustering.

The junction tree cost given here is an estimate, produced by Kanazawa [139, 70], of the complexity of the junction tree method.

Another approach to exact inference is cutset conditioning [216, 113], where the network is transformed into multiple, simpler polytrees, rather than the single, more