Kline R.B. Principles and Practice of Structural Equation Modeling

364 ADVANCED TECHNIQUES, AVOIDING MISTAKES

tic information about possible sources of model–data disagreement. Any respecification

suggested by the diagnostics must be theoretically justifiable. If such respecifications

result in subsequently passing the chi-square test, then diagnostic information about

the fit of the respecified model is still needed. This is because a passing model can still

have fit problems concerning its explanations of the observed covariances. If this diag-

nostic assessment indicates a problem, then the model should be rejected despite pass-

ing the chi-square test.

40. Rely solely on suggested thresholds for approximate fit indexes to justify retaining

the model. Results of recent studies indicate that rules of thumb from the 1980s–1990s

about cutoffs for approximate fit indexes that supposedly indicate “acceptable” fit are not

trustworthy. This mistake is compounded when (a) the model fails the chi-square test

when the sample size is not large and (b) the researcher neglects to report diagnostic

information about model fit.

41. Interpret “closer to fit” as “closer to truth.” Close model–data correspondence

could reflect any of the following (not all mutually exclusive) possibilities: (a) the model

accurately reflects reality; (b) the model is an equivalent or near-equivalent version of

one that corresponds to reality but itself is incorrect; (c) the model fits the data in a non-

representative sample but has poor fit in the population; or (d) the model has so many

freely estimated parameters that it cannot have poor fit even if it is grossly misspecified.

In a single study, it is usually impossible to determine which of these scenarios explains

the good fit of the researcher’s model. This is another way of saying that SEM is more

useful for rejecting a false model than for somehow “proving” whether a given model is

true (point 5).

42. Interpret good fit as meaning that the endogenous variables are strongly predicted.

If the exogenous and mediator variables account for a small proportion of the variances

of the ultimate outcome variables and a model accurately reflects this lack of predictive

validity, then the overall fit of the model may be good. Fit statistics in SEM indicate

whether the model can reproduce the observed covariances, not whether substantial

proportions of the variance of the endogenous variables are explained.

43. Rely solely on statistical criteria in model evaluation. Other important consid-

erations include model generality, parsimony, and theoretical plausibility. As noted by

Roberts and Pashler (2000), good statistical fit of a model indicates little about (a) theory

flexibility (e.g., what it cannot explain), (b) variability of the data (e.g., whether the data

can rule out what the theory cannot explain), and (c) the likelihood of other outcomes.

These authors also suggest that a better way to evaluate a model is to determine

(a) how well the theory limits outcomes (i.e., whether it can accurately predict), (b) how

closely the actual outcome agrees with those limits, and (c) if plausible alternative out-

comes would have been inconsistent with the theory (Sikström, 2001). That is, whether

a model is statistically beautiful involves not just numbers, but ideas, too.

44. Rely too much on statistical tests. This entry covers several kinds of errors. One is

to interpret statistical significance as evidence for effect size (especially in large samples)

or for importance (i.e., substantive significance). Another is to place too much emphasis

on statistical tests of individual parameters that may not be of central interest in hypoth-

How to Fool Yourself with SEM 365

esis testing (e.g., whether an error variance differs statistically from zero). A third is

to forget that statistical tests of individual effects tend to result in rejection of the null

hypothesis too often when non-normal data are analyzed by methods that assume nor-

mality. See point 27 for related misuses of statistical tests in SEM.

45. Interpret the standardized solution in inappropriate ways. This is a relatively com-

mon mistake in multiple-sample SEM—specifically, to compare standardized estimates

across groups that differ in their variabilities. In general, standardized solutions are fine

for comparisons within each group (e.g., the relative magnitudes of direct effects on the

same endogenous variable), but only unstandardized solutions are usually appropriate

for cross-group comparisons. A related error is to interpret group differences in the standardized

estimates of equality-constrained parameters: the unstandardized estimates of

such parameters are forced to be equal, but their standardized counterparts are typically

unequal if the groups have different variabilities.
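The point about equality-constrained parameters can be checked with simple arithmetic. The sketch below assumes the usual conversion from an unstandardized coefficient to a standardized one (multiply by the ratio of the predictor's standard deviation to the outcome's). The common coefficient of .50 and the group standard deviations are hypothetical values chosen for illustration, not results from any analysis in this book.

```python
def standardize(B, sd_x, sd_y):
    """Convert an unstandardized coefficient B to its standardized form."""
    return B * sd_x / sd_y


# Hypothetical values: the unstandardized effect is constrained to be equal
# across groups, but the groups differ in the variability of X.
B_common = 0.50
groups = {
    "group 1": {"sd_x": 10.0, "sd_y": 20.0},
    "group 2": {"sd_x": 20.0, "sd_y": 20.0},
}

std_estimates = {
    name: standardize(B_common, sds["sd_x"], sds["sd_y"])
    for name, sds in groups.items()
}

for name, b in std_estimates.items():
    print(f"{name}: unstandardized = {B_common:.2f}, standardized = {b:.2f}")
```

With these made-up numbers the same unstandardized effect yields different standardized estimates in the two groups, which is why a difference in the standardized values says nothing about a difference in the effect itself.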

46. Fail to consider equivalent or near-equivalent models. Essentially all structural

equation models have equivalent versions that generate the same predicted correlations

or covariances. For latent variable models, there may be infinitely many equivalent mod-

els. There are probably also near-equivalent versions that generate almost the same cova-

riances as those in the data matrix. Researchers must offer reasons why their models are

to be preferred over some obvious equivalent or near-equivalent versions of them.

47. Fail to consider (nonequivalent) alternative models. When there are competing

theories about the same phenomenon, it may be possible to specify alternative models

that reflect them. Not all of these alternatives may be equivalent versions of one another.

If the overall fits of some of these alternative models are comparable, then the researcher

must explain why a particular model is to be preferred.

48. Reify the factors. Believe that constructs represented in your model must corre-

spond to things in the real world. Perhaps they do, but do not assume it.

49. Believe that naming a factor means that it is understood (i.e., commit the naming

fallacy). Factor names are conveniences, not explanations. For example, if a three-factor

model fits the data, this does not prove that the verbal labels assigned by the researcher to the

factors are correct. Alternative interpretations of factors are often possible in many, if

not most, factor analyses.

50. Believe that a strong analytical method like SEM can compensate for poor study

design or slipshod ideas. No statistical procedure can make up for inherent logical or

design flaws. For example, expressing poorly thought out hypotheses with a path dia-

gram does not give them more credibility. The specification of direct and indirect effects

in a structural model cannot be viewed as a replacement for an experimental or longi-

tudinal design. As mentioned earlier, the inclusion of a measurement error term for an

observed variable that is psychometrically deficient cannot somehow transform it into

a good measure. Applying SEM in the absence of good design, measures, and ideas is

like using a chain saw to cut butter: one will accomplish the task, but without a more

substantial base, one is just as likely to make a big mess.

51. As the researcher, fail to report enough information so that your readers can repro-

duce your results. There are still too many reports in the literature where SEM was used

in which the authors do not give sufficient summary information for readers to re-create

the original analyses or evaluate models not considered by the authors. At minimum,

authors should generally report all relevant correlations, standard deviations, and

means. Also describe the specification of the model(s) in enough detail so that a reader

can reproduce the analysis.

52. Interpret estimates of relatively large direct effects in a structural model as “proof” of

causality. As discussed earlier, it would be almost beyond belief that all of the conditions

required for inference of causality from covariances have been met in a single study. In

general, it is better to view structural models as being “as if” models of causality that

may or may not correspond to causal sequences in the real world.

SUMMARY

So concludes this journey of discovery about SEM. As on any guided tour, you may have

found some places along the way more interesting than others. Also, you may decide

to revisit certain sites by using some of the related techniques in your work. Overall, I

hope that reading this book has given you new ways of looking at your data and testing

a broader range of hypotheses. Use SEM to address new questions or to provide new

perspectives on older ones, but use it guided by your good sense and knowledge of your

research area. Use it also as a means to reform methods of data analysis in the behavioral

sciences by focusing more on models instead of specific effects analyzed with traditional

statistical significance tests. The American politician Ivy Baker Priest once said: The

world is round and the place which may seem like the end may also be the beginning.

Go do yourself (and me, too) proud!

RECOMMENDED READINGS

These works all deal with the potential advantages and pitfalls of SEM. McCoach, Black, and

O’Connell (2007) outline various sources of inference error in drawing conclusions from SEM

analyses. Tomarken and Waller (2005) survey recent developments in SEM and describe

common misunderstandings. Tu (2009) addresses the application of SEM in epidemiology and

reminds us that there is no magic in SEM for inferring causality.

McCoach, D. B., Black, A. C., & O’Connell, A. A. (2007). Errors of inference in structural

equation modeling. Psychology in the Schools, 44, 461–470.

Tomarken, A. J., & Waller, N. G. (2005). Structural equation modeling: Strengths, limitations,

and misconceptions. Annual Review of Clinical Psychology, 1, 31–65.

Tu, Y.-K. (2009). Commentary: Is structural equation modelling a step forward for epidemiolo-

gists? International Journal of Epidemiology, 38, 549–551.

Suggested Answers to Exercises

CHAPTER 2

1. For the data in Table 2.1, $M_1 = 11.00$, $M_2 = 60.00$, $M_Y = 25.00$, $SD_1 = 6.2048$, $SD_2 = 14.5774$, $SD_Y = 4.6904$, $r_{Y1} = .6013$, $r_{Y2} = .7496$, and $r_{12} = .4699$, so:

$b_1 = [.6013 - .7496\,(.4699)]/(1 - .4699^2) = .3197$

$B_1 = .3197\,(4.6904/6.2048) = .2417$

$b_2 = [.7496 - .6013\,(.4699)]/(1 - .4699^2) = .5993$

$B_2 = .5993\,(4.6904/14.5774) = .1928$

$A = 25.00 - .2417\,(11.00) - .1928\,(60.00) = 10.7711$

$\hat{Y} = .2417\,X_1 + .1928\,X_2 + 10.7711$

$R^2_{Y \cdot 12} = .3197\,(.6013) + .5993\,(.7496) = .6415$; $R_{Y \cdot 12} = .8009$

In the unstandardized solution, a 1-point difference on working memory ($X_1$) predicts a .24-point difference on reading achievement ($Y$), holding phonics skills ($X_2$) constant; and the expected difference on reading achievement is .19 points given a difference on phonics skills of 1 point, with working memory held constant. When scores on both working memory and phonics skills are zero, the predicted reading achievement score is 10.77. In the standardized solution, a difference of a full standard deviation on working memory predicts a difference of .32 standard deviations on reading achievement controlling for phonics skills; and a difference of a full standard deviation on phonics skills predicts a .60 standard deviation difference on reading achievement controlling for working memory. The total proportion of variance in reading achievement explained by working memory and phonics skills together is .6415, or 64.15%.
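The hand calculations for this answer can be retraced in a few lines of code. The sketch below is only an illustration: the function name and the dictionary keys are my own labels, and it carries full floating-point precision, so its results agree with the values reported above only within rounding error.

```python
def two_predictor_regression(r_y1, r_y2, r_12, sd_1, sd_2, sd_y, m_1, m_2, m_y):
    """Two-predictor regression computed from summary statistics.

    Returns standardized weights (b), unstandardized weights (B),
    the intercept (A), and the squared multiple correlation (R2).
    """
    denom = 1 - r_12 ** 2
    b1 = (r_y1 - r_y2 * r_12) / denom   # standardized weight for X1
    b2 = (r_y2 - r_y1 * r_12) / denom   # standardized weight for X2
    B1 = b1 * sd_y / sd_1               # rescale to the metric of the raw scores
    B2 = b2 * sd_y / sd_2
    A = m_y - B1 * m_1 - B2 * m_2       # intercept
    R2 = b1 * r_y1 + b2 * r_y2          # proportion of explained variance
    return {"b1": b1, "b2": b2, "B1": B1, "B2": B2, "A": A, "R2": R2}


# Summary statistics for the data in Table 2.1, as listed in the answer above.
result = two_predictor_regression(
    r_y1=.6013, r_y2=.7496, r_12=.4699,
    sd_1=6.2048, sd_2=14.5774, sd_y=4.6904,
    m_1=11.00, m_2=60.00, m_y=25.00,
)

for name, value in result.items():
    print(f"{name} = {value:.4f}")
```

Small discrepancies in the fourth decimal place relative to the hand calculations are expected because the inputs here are already rounded.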

2 and 3. Unstandardized and standardized predicted scores and residuals for the data in Table

2.1 are listed next:

Case   $\hat{Y}$    $Y - \hat{Y}$   $z_1$      $z_2$      $z_Y$      $\hat{z}_Y$   $z_Y - \hat{z}_Y$

A      24.0309    –.0309     –1.2893    .3430      –.2132     –.2066     –.0066

B      22.3466    –2.3466    –.4835     –.6860     –1.0660    –.5657     –.5003

C      20.9015    1.0985     –.1612     –1.3720    –.6396     –.8738     .2342

D      27.8951    4.1049     .6447      .6860      1.4924     .6172      .8752

E      29.8260    –2.8260    1.2893     1.0290     .4264      1.0289     –.6025

If you enter these scores in the data editor of a computer program for general statistical

analyses, such as SPSS, you can show that the following results are correct within rounding

error:

$R_{Y \cdot 12} = r_{Y\hat{Y}}$

$r_{(Y - \hat{Y})1} = r_{(Y - \hat{Y})2} = r_{(z_Y - \hat{z}_Y)z_1} = r_{(z_Y - \hat{z}_Y)z_2} = 0$

4. Applying Equation 2.4 to $R^2_{Y \cdot 12} = .6415$ for the data in Table 2.1 gives us

$\hat{R}^2_{Y \cdot 12} = 1 - (1 - .6415)\,[4/(5 - 2 - 1)] = .2829$

The shrinkage-adjusted proportion of explained variance, or .28, is substantially less than

the observed proportion of explained variance, or .64, due to the small sample size (N = 5).
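The shrinkage correction is easy to explore for other sample sizes. The sketch below assumes the form of the correction used in the calculation above, with N cases and k predictors; the function name is my own, and the sample sizes other than N = 5 are hypothetical.

```python
def adjusted_r2(r2, n, k):
    """Shrinkage-adjusted R-squared for n cases and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Observed value for the data in Table 2.1 (N = 5, two predictors).
adj_small = adjusted_r2(.6415, n=5, k=2)
print(f"N = 5:   adjusted R2 = {adj_small:.4f}")

# Hypothetical larger samples with the same observed R2, for comparison.
for n in (50, 500):
    print(f"N = {n}: adjusted R2 = {adjusted_r2(.6415, n=n, k=2):.4f}")
```

The correction shrinks toward zero as N grows, so the adjusted and observed values converge in large samples.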

5. Given $r_{Y1} = .40$, $r_{Y2} = .50$, and $r_{12} = -.30$:

$b_1 = [.40 - .50\,(-.30)]/[1 - (-.30)^2] = .6044$

$b_2 = [.50 - .40\,(-.30)]/[1 - (-.30)^2] = .6813$

$R_{Y \cdot 12} = [.6044\,(.40) + .6813\,(.50)]^{1/2} = .7632$

There is a suppression effect because $b_1 > r_{Y1}$ and $b_2 > r_{Y2}$; specifically, this is reciprocal suppression.

6. Applying Equation 2.10 to $r_{XY} = .50$, $r_{XW} = .80$, and $r_{YW} = .60$ gives us

$r_{XY \cdot W} = [.50 - .80\,(.60)]/[(1 - .80^2)(1 - .60^2)]^{1/2} = .042$
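The same first-order partial correlation can be computed with a small helper. This is a minimal sketch of the formula applied above; the function and argument names are my own.

```python
import math


def partial_correlation(r_xy, r_xw, r_yw):
    """Correlation between X and Y with W partialed out of both."""
    numerator = r_xy - r_xw * r_yw
    denominator = math.sqrt((1 - r_xw ** 2) * (1 - r_yw ** 2))
    return numerator / denominator


r_xy_w = partial_correlation(r_xy=.50, r_xw=.80, r_yw=.60)
print(f"r_XY.W = {r_xy_w:.3f}")
```

Nearly all of the observed association between X and Y in this example is thus accounted for by their common association with W.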

7. This is a variation of the local Type I error fallacy. This particular 95% confidence interval, 75.25–84.60, either contains $\mu_1 - \mu_2$ or it does not. The “95%” applies only in the long run: Of the 95% confidence intervals from all random samples, we expect 95% to contain $\mu_1 - \mu_2$, but 5% will not. Given a particular interval, such as 75.25–84.60, we do not know whether it is one of the 95% of all random intervals that contains $\mu_1 - \mu_2$ or one of the 5% that does not.
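The long-run meaning of “95%” can be illustrated by simulation. The sketch below is an assumption-laden toy example rather than anything from the exercise: it draws two normal samples with a known common standard deviation, builds a z-based interval for the mean difference, and counts how often the interval captures the true difference. The population values, sample size, number of replications, and seed are all arbitrary choices.

```python
import random
import statistics


def ci_coverage(true_diff=5.0, sigma=10.0, n=50, reps=2000, seed=1):
    """Proportion of 95% intervals for mu1 - mu2 that contain the true value."""
    rng = random.Random(seed)
    se = sigma * (2 / n) ** 0.5  # standard error of M1 - M2 with sigma known
    hits = 0
    for _ in range(reps):
        m1 = statistics.fmean(rng.gauss(true_diff, sigma) for _ in range(n))
        m2 = statistics.fmean(rng.gauss(0.0, sigma) for _ in range(n))
        diff = m1 - m2
        # Each single interval either contains true_diff or it does not.
        if diff - 1.96 * se <= true_diff <= diff + 1.96 * se:
            hits += 1
    return hits / reps


coverage = ci_coverage()
print(f"Proportion of intervals containing the true difference: {coverage:.3f}")
```

The proportion should land near .95, but that figure describes the whole collection of intervals; it says nothing about whether any particular interval is one of the hits.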

8. The answer to this question depends on the particular definitions you selected, but here is an example for one I found on Wikipedia: “In statistics, a result is called statistically significant if it is unlikely to have occurred by chance.”¹ This is the odds-against-chance fallacy because p values do not estimate the likelihood that a particular result is due to chance. Under $H_0$, it is assumed that all results are due to chance.

¹ Retrieved February 4, 2009, from http://en.wikipedia.org/wiki/Statistical_significance

CHAPTER 3

1. First, derive the standard deviations, which are the square roots of the main diagonal

entries:

$SD_X = 42.25^{1/2} = 6.50$, $SD_Y = 148.84^{1/2} = 12.20$, and $SD_W = 376.36^{1/2} = 19.40$

Next, calculate each correlation by dividing the associated covariance by the product of the

corresponding standard deviations. For example:

$r_{XY} = 31.72/(6.50 \times 12.20) = .40$

The entire correlation matrix in lower diagonal form is presented next:

X Y W

X 1.00

Y .40 1.00

W .50 .35 1.00

2. The means are $M_X = 15.500$, $M_Y = 20.125$, and $M_W = 40.375$. Presented next are the correlations in lower diagonal form calculated for these data using each of the three options for handling missing data:

Listwise (N = 6)

          X       Y       W

X         1.000

Y         .134    1.000

W         .254    .610    1.000

Pairwise

          X       Y       W

X    r    1.000

     N    8

Y    r    .134    1.000

     N    6       8

W    r    .112    .645    1.000

     N    7       7       8

Mean Substitution (N = 10)

          X       Y       W

X         1.000

Y         .048    1.000

W         .102    .532    1.000

The results change depending on the missing data option used. For example, the correlation

between Y and W ranges from .532 to .645 across the three methods.

3. Given $cov_{XY} = 13.00$, $s^2_X = 12.00$, and $s^2_Y = 10.00$, the covariance can be expressed as follows:

$cov_{XY} = r_{XY}\,(12.00^{1/2})\,(10.00^{1/2}) = r_{XY}\,(10.9545) = 13.00$

Solving for the correlation gives us an out-of-bounds value:

$r_{XY} = 13.00/10.9545 = 1.19$

4. The covariances and effective sample sizes derived using pairwise deletion for the data in

Table 3.3 are presented next:

            X          Y          W

X    cov    86.400     –26.333    15.900

     N      6          4          5

Y    cov    –26.333    10.000     –10.667

     N      4          5          4

W    cov    15.900     –10.667    5.200

     N      5          4          6

I submitted the whole covariance matrix (without the sample sizes) to an online matrix cal-

culator. The eigenvalues are (98.229, 7.042, –3.671) and the determinant is –2,539.702. These

results indicate that the covariance matrix is nonpositive definite. The correlation matrix

implied by the covariance matrix for pairwise deletion is presented next in lower diagonal

form:

          X        Y         W

X         1.000

Y         –.896    1.000

W         .750     –1.479    1.000
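Readers without access to a matrix calculator can confirm the problem with the standard library alone. The sketch below does not compute eigenvalues; instead it assumes Sylvester's criterion (a symmetric matrix is positive definite only if every leading principal minor is positive) as an alternative check, and it also derives the implied correlations. The helper names are my own.

```python
import math

# Pairwise-deletion covariance matrix from the answer above.
cov = [
    [86.400, -26.333, 15.900],
    [-26.333, 10.000, -10.667],
    [15.900, -10.667, 5.200],
]


def det3(m):
    """Determinant of a 3 x 3 matrix by cofactor expansion along the first row."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def leading_minors(m):
    """Leading principal minors of orders 1, 2, and 3."""
    return [m[0][0], m[0][0] * m[1][1] - m[0][1] * m[1][0], det3(m)]


def cov_to_corr(m):
    """Divide each covariance by the product of the two standard deviations."""
    sds = [math.sqrt(m[i][i]) for i in range(len(m))]
    return [[m[i][j] / (sds[i] * sds[j]) for j in range(len(m))] for i in range(len(m))]


minors = leading_minors(cov)
positive_definite = all(minor > 0 for minor in minors)
corr = cov_to_corr(cov)

print("Leading principal minors:", [round(x, 3) for x in minors])
print("Positive definite:", positive_definite)
print("Implied r(Y, W):", round(corr[1][2], 3))
```

The third minor is the determinant, and its negative sign is enough to show that the matrix is nonpositive definite; the out-of-bounds correlation between Y and W is another symptom of the same problem.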

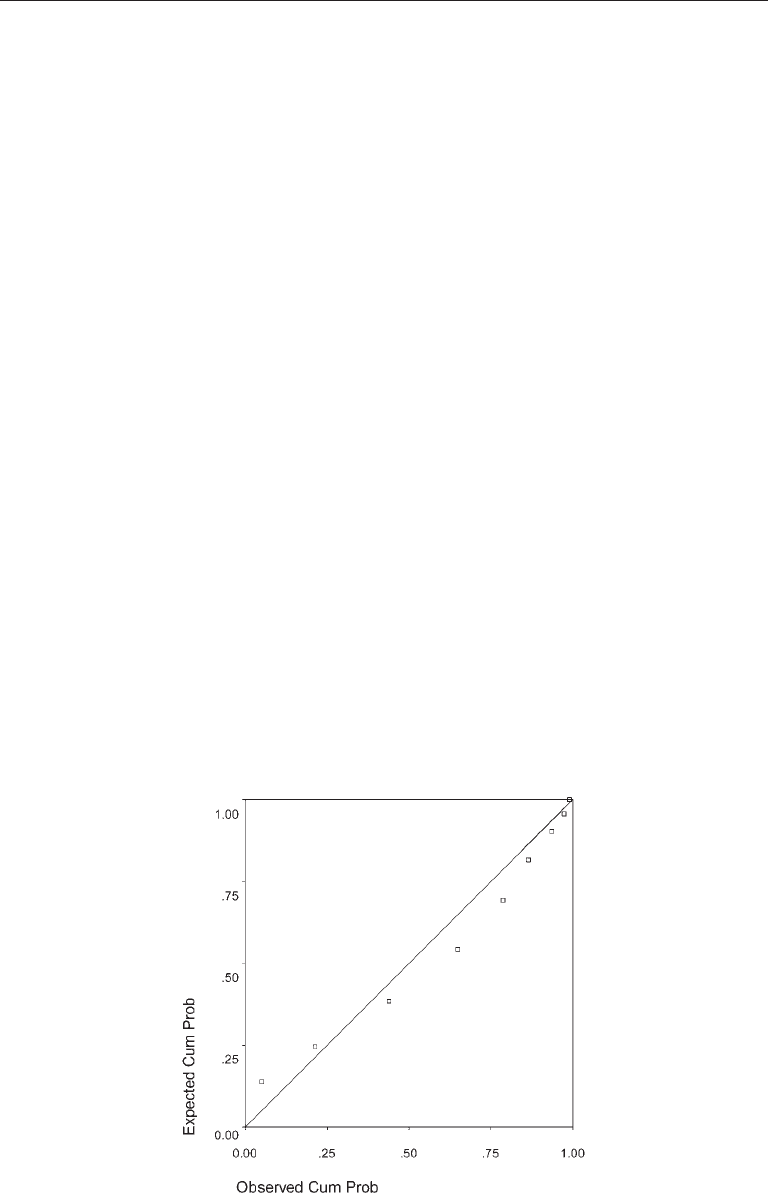

5. I used SPSS to generate the normal probability plot (P–P) presented next. The departure of

the data points in Figure 3.2 from a diagonal line indicates nonnormality:

6. For the data in Figure 3.2 with the outlier removed (N = 63), SI = .65 and KI = –.24. In con-

trast, SI = 3.10 and KI = 15.73 when the outlier is included (N = 64).

7. The square root is not defined for negative numbers, and logarithms are not defined for numbers ≤ 0. The two functions treat numbers between 0 and 1.00 differently than they do numbers > 1.00. Specifically, the square root makes numbers between 0 and 1.0 larger, whereas the logarithm converts them to negative values; both functions make numbers greater than 1.0 smaller.
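A quick way to see how each function behaves on either side of 1.0 is to tabulate a few values. The scores below are arbitrary illustrations, and the base-10 logarithm is an assumption on my part; natural logarithms show the same pattern.

```python
import math

# Arbitrary illustrative scores on both sides of 1.0.
scores = [0.04, 0.25, 1.0, 4.0, 100.0]

rows = [(x, math.sqrt(x), math.log10(x)) for x in scores]

print(f"{'score':>8} {'sqrt':>8} {'log10':>8}")
for x, root, log in rows:
    print(f"{x:>8.2f} {root:>8.3f} {log:>8.3f}")
```

Scores below 1.0 come out larger after the square root but negative after the logarithm, whereas scores above 1.0 are pulled downward by both functions. This is one reason to rescale the scores so that the minimum equals 1.0 before transforming.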

8. When the scores in Figure 3.2 are rescaled so that the lowest score is 1.0 before applying the

square root transformation, SI = 1.24 and KI = 4.12. If this transformation is applied directly

to the original scores in Figure 3.2, then SI = 2.31 and KI = 9.95. Thus, this transformation is

not as effective if applied when the minimum score does not equal 1.0. See Osborne (2002)

for additional examples.

9. The interitem correlations are presented next:

I1 I2 I3 I4 I5

I1 1.0000

I2 .3333 1.0000

I3 .1491 .1491 1.0000

I4 .3333 .3333 .1491 1.0000

I5 .3333 .3333 .1491 .3333 1.0000

Presented next are calculations for $\alpha_C$:

$\bar{r}_{ij} = [6\,(.3333) + 4\,(.1491)]/10 = .2596$

$\alpha_C = [5\,(.2596)]/[1 + (5 - 1)\,.2596] = 1.2981/2.0385 = .64$

The value of $\alpha_C$ reported by SPSS for these data is .63, which is within rounding error of the result just calculated by hand.
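The hand calculation can be retraced from the interitem correlations. The sketch below assumes the same formula used above, which is based on the mean interitem correlation; the function name is my own.

```python
def alpha_from_mean_r(mean_r, n_items):
    """Internal consistency estimated from the mean interitem correlation."""
    return (n_items * mean_r) / (1 + (n_items - 1) * mean_r)


# Lower-triangle interitem correlations for items I1-I5, as listed above.
lower_triangle = [
    .3333,                        # I2 with I1
    .1491, .1491,                 # I3 with I1, I2
    .3333, .3333, .1491,          # I4 with I1, I2, I3
    .3333, .3333, .1491, .3333,   # I5 with I1, I2, I3, I4
]

mean_r = sum(lower_triangle) / len(lower_triangle)
alpha = alpha_from_mean_r(mean_r, n_items=5)

print(f"mean interitem r = {mean_r:.4f}")
print(f"alpha = {alpha:.2f}")
```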

CHAPTER 5

1. Part of the association between $Y_1$ and $Y_2$ in Figure 5.3(a) is presumed to be causal, specifically, $Y_1$ has a direct effect on $Y_2$. However, there also are noncausal aspects to their relation, specifically, spurious associations due to common causes. For example, $X_1$ and $X_2$ are each represented in the model as common causes of $Y_1$ and $Y_2$. These common causes covary, so this unanalyzed association between common causes is another type of spurious association concerning $Y_1$ and $Y_2$. The relevant paths for all causal and noncausal aspects of the correlation between $Y_1$ and $Y_2$ are listed next:

Causal: $Y_1 \rightarrow Y_2$

Noncausal: $Y_1 \leftarrow X_1 \rightarrow Y_2$

$Y_1 \leftarrow X_2 \rightarrow Y_2$

$Y_1 \leftarrow X_1 \leftrightarrow X_2 \rightarrow Y_2$

2. Yes. It is assumed in all CFA models that the substantive latent variables are causal (along

with the measurement errors) and that the indicators are the affected (outcome) variables.

These assumptions concern effect priority.

3. Free parameter counts for Figures 5.3(b)–5.3(d) are as follows:

                                                            Exogenous variables

Model           Direct effects on endogenous variables      Variances         Covariances         Total

Figure 5.3(b)   X1 → Y1, X2 → Y2, Y1 → Y2, Y2 → Y1          X1, X2, D1, D2    X1 ↔ X2, D1 ↔ D2    10

Figure 5.3(c)   X1 → Y1, X2 → Y1, X1 → Y2, X2 → Y2          X1, X2, D1, D2    X1 ↔ X2, D1 ↔ D2    10

Figure 5.3(d)   X1 → Y1, X2 → Y2, Y1 → Y2                   X1, X2, D1, D2    X1 ↔ X2, D1 ↔ D2    9

4a. With six observed variables there are p = 6(7)/2 = 21 observations. In Figure 5.5, there are

a total of seven direct effects on endogenous variables that need statistical estimates. These

paths among the exogenous variables School Support and Coercive Control and among the

endogenous variables Teacher Burnout, Teacher–Pupil Interactions (TPI), School Experi-

ence, and Somatic Status are listed next:

Support → Burnout, Coercive → Burnout,

Support → TPI, Coercive → TPI, Burnout → TPI,

TPI → Experience, TPI → Somatic

Variances of exogenous variables include two for the measured exogenous variables School Support and Coercive Control and another four for the unmeasured exogenous variables (disturbances) $D_{TB}$, $D_{TPI}$, $D_{SE}$, and $D_{SS}$, for a total of six variances. There is only one covariance between a pair of exogenous variables, or Support ↔ Coercive. The total number of free parameters is

q = 7 + 6 + 1 = 14

so the model degrees of freedom are calculated as follows:

$df_M = 21 - 14 = 7$

4b. With eight observed variables there are p = 8(9)/2 = 36 observations. Among the eight factor

loadings in Figure 5.7, a total of two are fixed to 1 in order to scale the factors, so there are

only six that require estimation. The variances and covariance of the two factors, Sequential

and Simultaneous, are free parameters plus the variances of each of the eight measurement

errors. The total number of free parameters is thus

q = 6 + 3 + 8 = 17

so the model degrees of freedom are

$df_M = 36 - 17 = 19$

4c. With 12 observed variables there are p = 12(13)/2 = 78 observations. Free parameters for the model of Figure 5.9 are listed next by category:

Direct effects on endogenous variables

Indicators (factor loadings): 2 per factor, or 8

Exogenous factors (path coefficients): 4

Total: 12

Variances and covariances of exogenous variables

Measurement error variances: 12

Factor variances: 1 (Constructive Thinking)

Disturbance variances: 3

Total: 16

There are no covariances between exogenous variables. The total number of free parameters and model degrees of freedom are:

q = 12 + 16 = 28

$df_M = 78 - 28 = 50$
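The counting rule applied in answers 4a–4c is mechanical enough to script. The sketch below assumes only what those answers use: the number of observations is v(v + 1)/2 for v observed variables when means are not analyzed, and the model degrees of freedom are the observations minus the free parameters. The function names are my own.

```python
def n_observations(n_observed_variables):
    """Number of unique variances and covariances among the observed variables."""
    v = n_observed_variables
    return v * (v + 1) // 2


def model_df(n_observed_variables, n_free_parameters):
    """Model degrees of freedom: observations minus free parameters."""
    return n_observations(n_observed_variables) - n_free_parameters


# Free parameter counts taken from answers 4a, 4b, and 4c.
models = {
    "Figure 5.5 (4a)": (6, 7 + 6 + 1),
    "Figure 5.7 (4b)": (8, 6 + 3 + 8),
    "Figure 5.9 (4c)": (12, 12 + 16),
}

for label, (v, q) in models.items():
    print(f"{label}: observations = {n_observations(v)}, q = {q}, df = {model_df(v, q)}")
```

A negative result from `model_df` would signal that the model has more free parameters than observations, so it is a useful first check before any analysis is attempted.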

5. A covariate is a variable that is concomitant with another variable of primary interest and

is measured for the purpose of controlling for the effects of the covariate on the outcome

variable(s). In nonexperimental designs, a covariate is often a potential confounding variable

that, once held constant in the analysis, may reduce the predictive power of another substan-

tive predictor. Potential confounding variables often include demographic or background

characteristics, such as level of education or amount of family income, and substantive pre-

dictors may include psychological variables. In a structural model, a covariate is typically

represented as an exogenous variable with direct effects on the endogenous (outcome) vari-

able that is assumed to covary with a substantive variable, which also has direct effects on

the endogenous variable. Just as in a regression analysis, the direct effect of the substantive

predictor is estimated controlling for the covariate.

6. It is possible that one model with $Y_1 \rightarrow Y_2$ and another model with $Y_2 \rightarrow Y_1$ are equivalent

models with exactly the same fit to the data. Even if these two models are not equivalent,

their fit to the data could be similar, in which case there is no statistical basis for preferring

one model over the other. The matter is not clearer even if the fit of one model is quite bet-

ter than that of the other model: This pattern of results is still affected by sampling error;

that is, the same advantage for one model may not be found in a replication sample. There is

also the possibility of a specification error that concerns the omission of other causes, which

could bias the estimation of path coefficients for both models. Again, if you know the causal

process beforehand, then you can use SEM to estimate the magnitudes of the direct effects,

but SEM will not generally help you to find the model with the correct directionalities.