Brinkmann R. The Art and Science of Digital Compositing

CHAPTER ELEVEN

Quality and Efficiency

The intent of this chapter is to take a step beyond the basic processes involved

in digital compositing and to start discussing how to apply all our tools to produce

the highest-quality images in as efficient a manner as possible. In fact, these two

topics—quality and efficiency—are so co-dependent that it makes sense to discuss

them together, in the same chapter.

As we did in some of the other chapters, we will be looking at a number

of scenarios as if they were being accomplished with the use of a script-based

compositing system. Certain methods may be less applicable for a system that

does not support batch processing, but the basic concepts of efficiency with quality

are still valid.

Even though we are taking the time to devote an entire chapter to the topic,

we hope that you have already noticed a number of quality and efficiency issues

that were raised in earlier chapters of this book. Everything from conserving

disk space by using compressed file formats to using proxy images to speed

intermediate testing will contribute to maximizing your resource usage.

QUALITY

In an ideal world, there would be time and budget enough to produce perfect

composites for every job on which you work. After all, nobody gets into the

business of digital compositing without having some kind of desire to do quality

work. Unfortunately, the people paying for the work may not be as interested in

the quality as they are in some other aspect, such as speed, quantity, or cost.

The term “quality” is certainly subjective. Most compositing work allows for a

great deal of personal aesthetics to be brought into the mix, and ultimately this

book will never be able to fully identify exactly what constitutes a quality image.

But there are certain absolutes of quality that can be identified. In particular, any

technical artifacts or imperfections that are independent of artistic judgments will

need to be addressed.

There are any number of these potential defects—aliasing artifacts, contouring,

misaligned mattes, animation glitches, scene-to-scene discontinuities, mismatched

colors, data clipping, and so on. Some of them (such as color mismatching) might

bridge the categories of artistic and technical problems, whereas others are clearly

a problem by any objective measure. The decision as to what is considered to be

acceptable quality for a given job may ultimately be someone else’s decision, but

don’t rely on others to find technical flaws for you!

EFFICIENCY

There is an old (rather cynical) adage that states that, given the categories of

speed, cost, and quality, you can only have two of the three options. Fortunately,

efficiency is an excellent way to cheat this rule and produce high-quality

composites on time and on budget.

Efficiency is a matter of maximizing your available resources. No matter what

your scenario, whether you are compositing a single frame on the PC in your

basement or you are using dozens of CPUs to put together a 5000-frame composite

for the next Hollywood blockbuster, your resources will always have a limit. The

understanding of this limit, or, more properly, the understanding of the numerous

limits that each piece of your compositing toolkit has, is the first step toward

understanding where efficiencies can be introduced. Very few processes are

inherently efficient, and it is up to the compositing artist to modify his or her

methodologies so that he or she is able to produce work within the necessary time and

budget.

Even though efficient working methods will save time and money, there is no

reason why they should cause any loss of quality. In fact, many times we find

that careful attention to producing an efficient compositing script will actually

result in a higher-quality image than one generated from a hastily created script.

Methodology

In some ways it is impossible to teach someone exactly how to choose the best

method for a given job, since every job is different. However, it is possible to

discuss commonly observed problems and impart some sense of things that should

be avoided. We will concentrate on things that can be done to make a script more

efficient, while being as certain as possible that we are not degrading the image

in any way. The discussion will include not only the actual compositing operators

used, but also issues related to disk, CPU, and memory usage. Many times we

can only give general guidelines, since certain things will depend on exactly what

software and hardware you are using to complete your task.

For most of the discussions about efficiency, we’ll be talking about methods

to increase the productivity of the software and hardware that we are using. But

determining the most efficient method for creating a composite involves not only

weighing the amount of processing time the computer will need to spend on a

particular composite, but also estimating how much of the operator’s time will

need to be spent to set up the process. Remember that the compositing artist has

a certain cost associated with him or her, just as the computer system does. If

you are creating the composite yourself, then you will be constantly making

decisions that trade off the amount of time you will spend on a problem compared

with the amount of time the computer will spend.

Many problems can certainly be solved by brute force. You may find that you

can spend 20 minutes quickly setting up a process that will then take 8 hours to

run on the computer. Or you could spend an hour setting up a much more elegant

and efficient process that will only take the computer 2 hours to process. How

do you decide which path to take? There is no definitive answer, of course. If it’s

the end of the day and you know that nobody will be using your computer

overnight anyway, you’ll probably choose the solution that takes less human time

and more computer time. If, on the other hand, it’s the beginning of the day and

you need to produce imagery by that afternoon, the time spent setting up a faster-

running process will be worth the investment. This may seem like common sense

(and if you’re an experienced compositor it had better be!), but it is always

important to remember that just about any process will have these sorts of

trade-offs.

The ability to consistently make these sorts of decisions (often referred to as

production sense) is something that generally only comes with a fair amount of

experience, but we can certainly discuss a number of the issues involved. As

mentioned earlier, we always want our quest for efficiency to be driven by our

quest for quality. Consequently, let’s start with a look at how even the simplest

compositing process can be susceptible to drastic image-quality problems if one

is not careful.

MINIMIZING DATA LOSS

There is a popular misconception that working with digital images means that

one need not be concerned with decreased quality whenever a multitude of image

manipulations are applied. This is such a pervasive fallacy that it must be refuted

immediately, in a large bold font:

“Digital” does not imply “lossless.”

Certainly, digital compositing is a much more forgiving process than the optical

compositing process, in which every step can cause significant generation loss.

But even in the digital realm, data loss is generally something that can only be

minimized, not eliminated (hence the title of this section). Just about any image

processing or compositing operation loses some data. There are a few exceptions

to this rule, such as channel reordering or geometric transformations that don’t

require resampling, but in general even the most innocuous operation will, at the

very least, suffer from some round-off error. Unfortunately, whether this data loss

will actually affect the quality of your composite is nearly impossible to predict

in advance.

Whenever we begin a composite, we have a fixed amount of data available.

All of the images that will be used are at the best resolution (spatial, chromatic,

and temporal) that we are able to justify, and now the process of integrating them

begins. Note that we talk about the “best resolution we are able to justify.” What

does this mean, and how do we decide such a thing? The bottom line is that we

should try to work with the least amount of data necessary to produce a final

result that is acceptable. Once again we’re walking the fine line between having

enough data to produce the quality we desire without having to process more

pixels than is necessary. Choose too low a spatial resolution and you will have

an image that appears soft and out of focus. Choose too low a chromatic resolution

(i.e., bit depth) and you may start to see quantization artifacts such as banding

and posterization. Even if the original source images appear to be of an appropriate

quality level, once they are processed by multiple compositing operations these

artifacts may show up.

The tempting solution might be to start every composite with very high-

resolution images. But if our final result is intended for playback within a video

game at a resolution of 320 × 240 pixels, we would be foolish to process all the

intermediate steps at 4000 lines of resolution. Instead, we would probably choose

to initially reduce all the elements to a resolution of 320 × 240 and then work

directly with these images instead. As long as we pay attention to the various

steps, we should be fine working with the lower resolution, and we will certainly

have reduced the amount of time and resources necessary for the work. The

trade-off decision about what resolutions are necessary must be made for every

composite, although often it may make sense to standardize to a set of particular

formats in order to reap the benefits of consistency and predictability.

There are no guarantees or formulas that let one definitively determine if a

specific input resolution is high enough for a given composite. But not only will

choosing an excessively high resolution cause a great deal of wasted man- and

CPU-hours, it still will not guarantee artifact-free images. It is actually quite simple

to produce a compositing script that causes data loss even if the input images

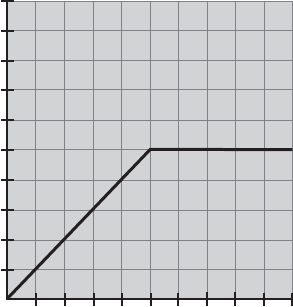

are high resolution and high bit depth. Consider the example shown in Plate 37,

in which a brightness of 2.0 was applied to our original test image from Plate 3,

and then a brightness of 0.5 was applied to the result. As you can see, a significant

amount of data was lost. A graph of the result of this two-step operation is shown

in Figure 11.1. Note that every input value above 0.5 (the brightest half of the

range) has been clipped to a uniform value of 0.5.
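
The clipping described above can be reproduced with a few lines of Python. This is only a minimal sketch: it assumes a Brightness operator is a simple multiply and that the system clips to the range 0 to 1 after every operator. The function names are hypothetical and do not come from any particular compositing package.

```python
def brightness(value, gain):
    """Multiply a normalized pixel value by gain, then clip to the range 0..1."""
    return min(max(value * gain, 0.0), 1.0)


def brighten_then_darken(value):
    """Apply Brightness 2.0 followed by Brightness 0.5, clipping after each step."""
    return brightness(brightness(value, 2.0), 0.5)


inputs = [0.0, 0.2, 0.4, 0.5, 0.6, 0.8, 1.0]
outputs = [brighten_then_darken(v) for v in inputs]

for v, out in zip(inputs, outputs):
    print(f"input {v:.1f} -> output {out:.1f}")
```

Inputs up to 0.5 come back unchanged, while every input above 0.5 comes back as 0.5, which is the flat section of the graph.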

Any time we need to layer multiple operations on top of each other, we must

be aware of what happens to the data in terms of overflow and round-off. In

certain situations, some of the newer software may be smart enough to analyze

multiple color corrections like this and consolidate these expressions into a global,

more precise algorithm. (In the example just given, the two color corrections

would cancel each other out.) This ability to automatically consolidate similar

operators can make a huge difference in image quality and, in many cases, can

even increase the speed of your compositing session because redundant operators

are eliminated. As usual, the best advice is to test the behavior of the system

yourself so that you know exactly what to expect. The ability of software to

automatically deal with this sort of thing is very useful, but as you will see

elsewhere in this chapter, an intelligent compositing artist can usually modify a

script to avoid these problems in the first place and will be able to introduce

efficiencies that an automated system cannot.

Figure 11.1 Graph of the data loss caused by applying a Brightness of 2.0 followed by a Brightness of 0.5. (Axes: Input Pixel Value and Output Pixel Value, each running from 0 to 1.)

Although the example was designed to show data loss when information is

pushed outside the range of a normal image, similar loss can occur within the

normal range if you are not working with enough precision. This possibility raises

the issue of exactly how a given compositing system maintains the data with

which it is working.

Internal Software Accuracy

Up to this point we have made the assumption that a compositing system that

is given a group of 16-bit elements will actually composite those elements at that

bit depth and produce a new 16-bit image. This is not necessarily a safe assumption.

Many of today’s systems still internally represent data at only 8 bits per channel.

Even if they are able to “read” a 16-bit image, they may not be able to actually

use all 16 bits and will immediately truncate the image to 8 bits. Any intermediate

imagery will be produced at this lower bit depth, and the final image will also

only be 8-bit. Thus, it is very important to know the internal accuracy of your

compositing system.

Some software and hardware manufacturers claim that 8 bits per channel is

enough information to accurately represent a color image and that there is no

need to support the ability to process at greater than 8 bits per channel.

(Coincidentally, the systems sold by these manufacturers all seem to be limited to this bit

depth.) In many ways it is true that 8 bits per channel can almost always produce

an image that is visually indistinguishable from a 16-bit image, particularly if the

image contains the noise, grain, and other irregularities that are inherent in a

digitized image from an analog source such as film or video. But rarely will we

simply input an image and then immediately send it to its final destination. Rather,

we will add multiple layers of image processing operations, color corrections,

image combinations, and so on until we come up with our final result. It is during

this multitude of additional processing that greater bit depth becomes useful.

Each step will cause a minute amount of data loss (at least), and eventually this

loss will start to manifest itself visibly if there is not enough color information

available. Limited-bit-depth systems may be perfectly acceptable for simple

composites without a large number of elements or processing steps, but once we need

to push things to the extreme, they can rapidly fall apart.

Better systems will let you choose the accuracy that you wish to use when

computing a solution. There may be times when you wish to work with only 8-

bit accuracy to increase speed, and there may be times when you need the full

16 bits. You should be aware that some systems may make assumptions about

the necessary bit depth. For instance, if your source image is only an 8-bit image,

the system may assume that any further operations can also be done at 8 bits.

The best systems will allow you to override this behavior, as certain things will

appear much cleaner if computed at a higher bit depth. Even something as simple

as a blur can introduce artifacts (particularly banding) if it is computed at only

8 bits per channel. In such a situation, you should be able to specify that the 8-

bit image be temporarily converted to 16 bits for the purpose of the calculation,

even if it will eventually be stored back to an 8-bit image. As usual, this sort of

decision is something that you will need to deal with on a case-by-case basis.
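
To see why a temporary conversion to 16 bits can help, consider the following sketch. To keep it short it uses a pair of gain operations (0.5 followed by 2.0) rather than a blur, and it assumes round-to-nearest integer storage after every step and an 8-to-16-bit conversion that multiplies by 257. These are illustrative assumptions, not the behavior of any specific system, and the names are hypothetical.

```python
def apply_gain(values, gain, max_code):
    """Scale integer code values by gain, round to nearest, clip to 0..max_code."""
    return [min(max(int(v * gain + 0.5), 0), max_code) for v in values]


# An 8-bit ramp containing every possible code value.
ramp_8bit = list(range(256))

# Pipeline that stores every intermediate result at 8 bits.
halved_8bit = apply_gain(ramp_8bit, 0.5, 255)
restored_8bit = apply_gain(halved_8bit, 2.0, 255)

# Same operations, but promoted to 16 bits for the calculation
# (255 * 257 == 65535) and stored back to 8 bits only at the end.
ramp_16bit = [v * 257 for v in ramp_8bit]
halved_16bit = apply_gain(ramp_16bit, 0.5, 65535)
restored_16bit = apply_gain(halved_16bit, 2.0, 65535)
restored_via_16bit = [int(v / 257 + 0.5) for v in restored_16bit]

print("distinct levels, 8-bit intermediates: ", len(set(restored_8bit)))
print("distinct levels, 16-bit intermediates:", len(set(restored_via_16bit)))
```

With 8-bit intermediates the ramp collapses from 256 distinct levels to 129, which is the kind of loss that shows up as banding. With 16-bit intermediates all 256 levels survive the round trip.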

Compositing systems are not necessarily limited to working at only 16 bits of

accuracy or less. Some newer systems can actually go beyond this, representing

the data that is being processed with up to 32 bits per channel. Unlike the jump

between 8 and 16 bits, using 32 bits per channel is not done simply to increase

the number of colors that can be represented. Rather, 32-bit systems are designed

so that they also no longer need to clip image data that moves outside the range

of 0 to 1. These 32-bit systems are usually referred to as “floating-point systems.”

At first, the ability to represent data outside the range of 0 to 1 may not seem to

be terribly worthwhile. But the implications are huge, in that it allows images to

behave more like they would in the real world, where there is no upper limit on

the brightness a scene can have. Even though we will probably still want to

eventually produce an image that is normalized between 0 and 1, we can work

with intermediate images with far less danger of extreme data loss. For instance,

we can now double the brightness of an image and not lose all the data that was

in the upper half of the image. A pixel that started with a value of 0.8 would

simply be represented with a value of 1.6. For purposes of viewing such an image,

we will still usually represent anything above 1.0 as pure white, but there will

actually be data that is stored above that threshold. At a later time, we could

apply another operator that decreases the overall brightness of the image and

brings these superwhite pixels back into the “visible” range.
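
The difference between a clipping system and a floating-point system can be sketched as follows. The helper names are hypothetical, and the model assumes that brightness is a plain multiply and that only the viewing step clamps values to the range 0 to 1.

```python
def gain_clipped(value, gain):
    """Fixed-range system: the result is clipped to 0..1 after every operator."""
    return min(max(value * gain, 0.0), 1.0)


def gain_float(value, gain):
    """Floating-point system: values outside 0..1 are kept as they are."""
    return value * gain


def to_display(value):
    """Clamp for viewing only; the stored value is not modified."""
    return min(max(value, 0.0), 1.0)


pixel = 0.8

# Fixed-range system: the doubled value is clipped to 1.0 before it is halved.
clipped_result = gain_clipped(gain_clipped(pixel, 2.0), 0.5)

# Floating-point system: the superwhite value 1.6 is kept, then brought back.
superwhite = gain_float(pixel, 2.0)
float_result = gain_float(superwhite, 0.5)

print("clipped system:", clipped_result)
print("stored superwhite:", superwhite, "displayed as:", to_display(superwhite))
print("floating-point system:", float_result)
```

The clipping system ends at 0.5, while the floating-point system stores 1.6, shows it as pure white, and returns to the original 0.8 once the brightness is reduced again.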

The ability to calculate in a floating-point mode is usually an optional setting,

which should be used wisely. Running in this mode will double or quadruple

the amount of data that is used to represent each frame and will increase the

amount of processing power necessary by an equivalent amount.
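
The storage cost is easy to estimate. The sketch below assumes an uncompressed three-channel frame, and the 2048 by 1556 frame size is just an illustrative choice rather than a figure taken from this chapter.

```python
def frame_bytes(width, height, channels, bits_per_channel):
    """Uncompressed size of one frame in bytes."""
    return width * height * channels * bits_per_channel // 8


# Hypothetical frame dimensions, chosen only for illustration.
width, height, channels = 2048, 1556, 3

sizes = {bits: frame_bytes(width, height, channels, bits) for bits in (8, 16, 32)}

for bits, size in sizes.items():
    print(f"{bits:2d} bits per channel: {size / 2**20:.1f} MiB per frame")
```

Moving from 16 to 32 bits per channel doubles the data for each frame, and moving from 8 to 32 bits quadruples it.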

There are almost always ways to produce images of identical quality without

resorting to the use of floating-point calculations. Instead, a system’s floating-

point capabilities should only be used whenever other methods are not practical.

There may be an effect that can only be achieved in floating-point mode, or you

may simply be confronted with a script that is so complex that you would spend

more time trying to find a data-clipping problem than the computer would spend

computing the results in floating-point mode.

Although compositing systems that support floating-point calculations are still

fairly rare, they will almost certainly become the standard in a few more years

as memory and CPUs become faster and cheaper. Eventually we may even have

a scenario in which all compositing is done without bothering to normalize values

between 0 and 1, resulting in a much simpler model and, as a side benefit, making

large sections of this book obsolete.

CONSOLIDATING OPERATIONS

The purpose of this section is to discuss situations in which certain compositing

scripts can be modified in order to eliminate unnecessary operators. Whether a

given operator is necessary will not always be an objective question, but initially

we will look at scenarios in which two operators can be replaced by a single

operator without any change to the resultant image whatsoever.

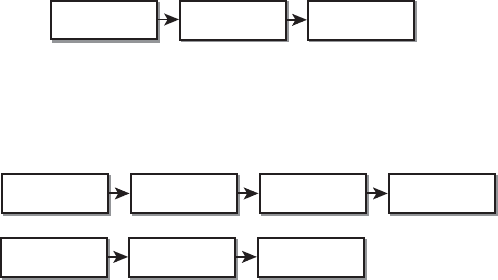

For instance, let’s take the extremely simple case of an image with a brightness

effect added to it according to the script in Figure 11.2. In this example, we have

applied a brightness of 0.5 to the original source image. Let’s say that we look at

the resultant image produced by this script and decide that we need the brightness

decreased by another 25%. There are two paths we could take: Either we add a

second brightness operator with a value of 0.75 (Figure 11.3a), or we modify our

existing brightness tool and decrease it by an additional 25%. Doing the math,

we take 0.5 × 0.75 = 0.375. This route is shown in Figure 11.3b.

Perhaps it is obvious that the preferred method would be to recalculate the

proper numbers and modify the existing brightness effect. This will leave us with

a simpler script, and the process should run quite a bit faster since it only needs

to process one operation instead of two. This is a very simple example—once

your script begins to have more than just a few layers, these sorts of decisions can

become much less obvious.
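
The equivalence of the two routes can be checked directly. This is a minimal sketch that assumes the Brightness operator is a plain per-pixel multiply working on unclipped floating-point values. The function name and the sample pixel values are hypothetical.

```python
def brightness(pixels, gain):
    """Multiply every pixel value by gain."""
    return [p * gain for p in pixels]


source = [0.0, 0.25, 0.5, 1.0]

# Route (a): two Brightness operators in sequence.
two_ops = brightness(brightness(source, 0.5), 0.75)

# Route (b): one Brightness operator with the combined value.
combined_gain = 0.5 * 0.75
one_op = brightness(source, combined_gain)

print("combined gain:", combined_gain)
print("two operators:", two_ops)
print("one operator: ", one_op)
```

Both routes give the same pixel values, but the second touches every pixel only once.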

Figure 11.2 A simple script for applying brightness to an image. (Script: Background → Brightness 0.5 → Output.)

Figure 11.3 Two paths for modifying the image that results from the script in Figure 11.2.

(a) Adding a second Brightness operator: Background → Brightness 0.5 → Brightness 0.75 → Output.

(b) Modifying the original Brightness operator: Background → Brightness 0.375 → Output.

Whenever one is creating a multiple-level compositing script, it is dangerously

easy to add a new operator every time a modification is necessary. Before you

add another layer to a script, stop to ask yourself if the layer is truly necessary,

or if the same (or a visually similar) effect can be accomplished by modifying

one of the existing layers. Compositing scripts have an uncanny ability to grow

excessively, and unless great care is taken to simplify whenever possible, you will

end up with a script that is not only incomprehensible, but also full of image-

degrading problems.

Our ability to simplify the previous script relied on the fact that we knew that

two Brightness operators could be “folded” together. Since the Brightness

operator is merely a multiplier, this consolidation is straightforward. One cannot

always assume that this is the case. Certain operators absolutely cannot be folded

together to get the same result. For instance, two Biased-Contrast operators with

different biases cannot be consolidated into a single Contrast operator that gives

an identical result.

Many compositing systems are able to analyze scripts and automatically

simplify the math before they run the process. On such a system, you might in fact

not have any significant speed difference between these two examples. Such

automatic optimization can only go so far, however, and to rely on it will inevitably

produce code that is less efficient. Even the best optimization routines will

probably be limited to producing images that are exactly what would be produced by

the unoptimized script. A good artist, however, can look at a script and realize

that two layers can be consolidated to produce an extremely similar, but not

identical, image. The decision can be made to accept the slightly different image in

the interest of a significant speed increase. But please, be careful not to compromise

image quality in blind pursuit of the most efficient script possible!
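
As a rough idea of what such an automatic simplification pass might look like, the sketch below folds adjacent Brightness steps in a script. It assumes a script can be modeled as a flat list of (operator, parameter) pairs, which is a simplification made only for this example, and it is not taken from any real compositing system. The fold is valid only when nothing is clipped between the two steps.

```python
def fold_brightness(script):
    """Merge runs of adjacent ("Brightness", gain) steps into a single step."""
    folded = []
    for name, param in script:
        if name == "Brightness" and folded and folded[-1][0] == "Brightness":
            # Two multiplies in a row are equivalent to one multiply
            # by the product of the two gains.
            folded[-1] = ("Brightness", folded[-1][1] * param)
        else:
            folded.append((name, param))
    return folded


script = [("Brightness", 0.5), ("Brightness", 0.75), ("Blur", 2.0)]
optimized = fold_brightness(script)

print(optimized)
```

A pass like this only recognizes exact equivalences. Accepting a slightly different image in exchange for a faster script remains a judgment call for the artist.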

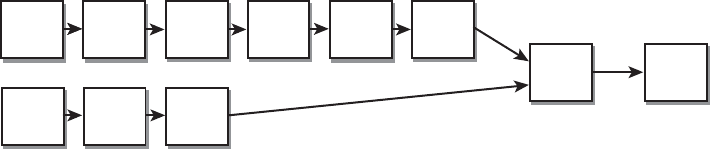

Let’s look at a slightly more complex example to discuss other areas that can

be simplified. Consider the compositing script shown in Figure 11.4. We have a

foreground element with several effects layered on it, and a background element

with a few different effects on it. The first branch is then placed over the second.

Figure 11.4 A more complex script involving two source elements. (Node labels as extracted from the diagram: Foreground; Pan 200, 100; Bright 2.0; Pan 50, -20; RGB Mult 1, .25, 2; Blur 2.0; Background; RGB Mult 2, 2, 1; Blur 2.0; Over; Output.)