Wilkinson D.J. Stochastic Modelling for Systems Biology

Подождите немного. Документ загружается.

184

BEYOND THE GILLESPIE ALGORITHM

on

it

being

greater

than

t).

Again,

a

combination

of

the

memory

less

property

and

the

rescaling property (Proposition 3.20) ensures that this is okay.

The next thing worth noting is that it is assumed that the algorithm

"knows" which

hazards are affected by each reaction. Gibson

& Bruck (2000) suggest that this is

done by creating a

"dependency graph" for the system. The dependency graph has

nodes corresponding to each reaction in the system. A directed arc joins node

i to

node

j

if

a reaction event

of

type i induces a change

of

state that affects the hazard

for the reaction

of

type

j.

These can be determined (automatically) from the forms

of

the associated reactions. Using this graph,

if

a reaction

of

type i occurs, the set

of

all children

of

node i in the graph gives the set

of

hazards that needs to be updated.

An interesting alternative to the dependency graph is to work directly on the Petri

net representation

of

the system. Then, for a given reaction node, the set-of all "neigh-

bours"

(species nodes connected to that reaction node), X is the set

of

all species that

can

be

altered. Then the set

of

all reaction nodes that are "children"

of

a node in X

is the set

of

all reaction nodes whose hazards may need updating. This approach is

slightly conservative in that the resulting set

of

reaction nodes is a superset

of

the set

which absolutely must be updated, but nevertheless represents a satisfactory alterna-

tive.

This algorithm is now

"local" in the sense that all computations (bar one) involve

only adjacent nodes on the associated Petri net representation

of

the problem. The

only remaining

"global" computation is the location

of

the index

of

the smallest re-

action time. Gibson and Bruck's clever solution to this problem is to keep all reaction

times (and their associated indices) in an

"indexed priority queue." This is another

graph, allowing searches and changes to be made using only fast and local operations;

see the original paper for further details

of

exactly how this is done. The advantage

of

having local operations on the associated Petri net is that the algorithm becomes

straightforward to implement in an event-driven object-oriented programming style,

consistent with the ethos behind the Petri net approach. Further, such

an

implementa-

tion could be multi-threaded on an

SMP or hyper-threading machine, and would also

lend itself to a full message-passing implementation on a parallel computing cluster.

For further information on parallel stochastic simulation, see Wilkinson

(2005).

This algorithm is more efficient than Gillespie's direct method in the sense that

only one new random number needs to be simulated for each reaction event which

takes place, as opposed to the two that are required for the Gillespie algorithm. Note

however, that selective recalculation

of

the hazards, hi(X, Ci) (and the cumulative

hazard

ho(x,

c)), is also possible (and highly desirable) for the Gillespie algorithm,

and could speed up that algorithm enormously for large systems. Given that the Gille-

spie algorithm otherwise requires fewer operations than the next reaction method,

and does not rely on the ability to efficiently store putative reaction times in an in-

dexed priority queue, the relative efficiency

of

a cleverly implemented direct method

and the next reaction method is likely to depend on the precise structure

of

the model

and the speed

of

the random number generator used.

EXACT SIMULATION METHODS

185

8.2.3

Time-varying

volume

A problem that has so far been overlooked is that

of

reaction hazards that vary contin-

uously over time. The most common context for this to arise in a practical modelling

situation is when a growing cell (or cellular compartment) has its volume modelled

as

a continuous deterministic function

of

time. For example, let us suppose that the

container volume at

timet,

V(t),

is modelled as

V(t)

= v

0

+at,

t

2:

0,

(8.1)

for some constant a >

0.

If

the model contains any second-order reactions, the

hazards

of

these should be inversely proportional to

V(t)

(Section 6.2). The haz-

ards

of

first-order reactions are independent

of

volume and hence unaffected. What

to do about any zero-order reactions is somewhat unclear. As zero-order reactions

are typically used to model

"production"

or

"influx" in a simple-minded way, it is

conceivable that at least some zero-order reactions should have volume dependence.

Certain production rates might reasonably be considered to

be

directly proportional

to

V(t),

while influx equations might have hazards proportional to

V(t)

2

1

3

(as sur-

face area increases more slowly than volume). In general, zero-order reactions should

be

considered on a case-by-case basis.

In order to keep the presentation as straightforward as possible, we will just con-

sider modifying the (inefficient) first reaction method to take account

of

time-varying

reaction hazards.*

It

should

be

reasonably clear that since the only steps involving the

hazards are steps 2 and 3 that only steps 2 and 3 need modification. Since the hazard

is time varying, we should now write it

hi(x, ci, t), i = 1, 2,

...

, u. Now we could

simply run the algorithm using these time-depended hazards but otherwise unmodi-

fied. Unfortunately this will lead to an algorithm that is only approximately correct,

as

we would

be

essentially assuming that the hazards remain constant between each

reaction event, which

is

not actually true. At this point it is helpful to recall the in-

homogeneous

Poisson process (Section 5.4.4), as this is exactly what is needed for

dealing with non-constant hazards. Proposition 5.4 and the subsequent discussion tell

us

exactly how to simulate the time

of

the next event

of

an inhomogeneous Poisson

process, but note that the lower limit

of

the integral defining the cumulative hazard

must

be

the current simulation time, and not zero.

For concreteness consider a reaction with hazard h(t) =

a/V(t),

where a will

be

a function

of

x and

Ci,

but constant with respect

tot.

Suppose further that

V(t)

is

given by (8.1), the current simulation time is

t

0

,

and we wish to simulate the

timet'

of

the next reaction event.

We

begin by computing the cumulative hazard

H(t)

=it

h(t)dt

=it___!!:!!!:___

=!:log

(

vo

+at

) , t

2:

t

0

,

to

to

Vo +

at

a Vo + ato

and then compute the cumulative distribution function (CDF)

of

the time

of

the next

* The next reaction method (Gibson-Bruck algorithm) is also quite straightforward to modify. The direct

method (Gillespie algorithm) is actually a bit awkward to modify, and so the desire to work with time-

varying hazards is one reason why some people prefer to use an algorithm

in

the Gibson and Bruck

style. These issues are discussed in some detail in Gibson & Bruck (2000).

186

BEYOND

THE GILLESPIE

ALGORITHM

event as

F(t) =

1-

exp{-H(t)}

=

1-

(

Vo

+at

)-a/a,

vo

+ ato

t

~to.

Once

we

have the CDF we can simulate

u"'

U(O,

1)

and solve u = F(t')

fort',

the

time

of

the next event, to obtain

t'

=

~

[(vo

+

ato)u-afa-

vo].

Returning to the problem

of

modifying the first reaction method, one simply sim-

ulates putative times to the next event using the above strategy for any reactions with

time varying rates, and the rest

of

the algorithm remains untouched.

This provides an example

of

coupling a discrete stochastic process with a variable .

that changes continuously in time. Essentially the same strategy is used in several

of

the hybrid algorithms to

be

considered in Section 8.4, where some variables are

treated as discrete and others as varying continuously in time. A good understanding

of

the above technique is a necessary pre-requisite for understanding hybrid simula-

tion algorithms.

8.3 Approximate simulation strategies

8.3.1

TJme

discretisation

Gibson and Bruck's next reaction method is regarded by many to

be

the best avail-

able method for exact simulation

of

a stochastic kinetic model. However,

if

one is

prepared to sacrifice the exactness

of

the simulation procedure, there is a potential

for huge speed-up at the expense

of

a little accuracy. These fast approximate methods

are all based on a time discretisation

of

the Markov process.

The essential idea is that the time axis is divided into (small) discrete chunks, and

the underlying kinetics are approximated so that advancement

of

the state from the

start

of

one chunk to another can

be

made in one go. Most

of

the methods work on

the assumption that the time intervals have been chosen to be sufficiently small that

the reaction hazards can

be

assumed constant over the interval.

We

know that a point

process with constant hazard is a (homogeneous) Poisson process (Section 3.6.5).

Based on the definition

of

the Poisson process, we assume that the number

of

reac-

tions (of a given

type) occurring in a short time interval has a Poisson distribution

(independently

of

other reaction types).

We

can then simulate Poisson numbers

of

reaction events and update the system accordingly.

For a fixed (small) time step

D.t,

we can present an approximate simulation algo-

rithm as follows (we use the matrix notation from Section 2.3.2):

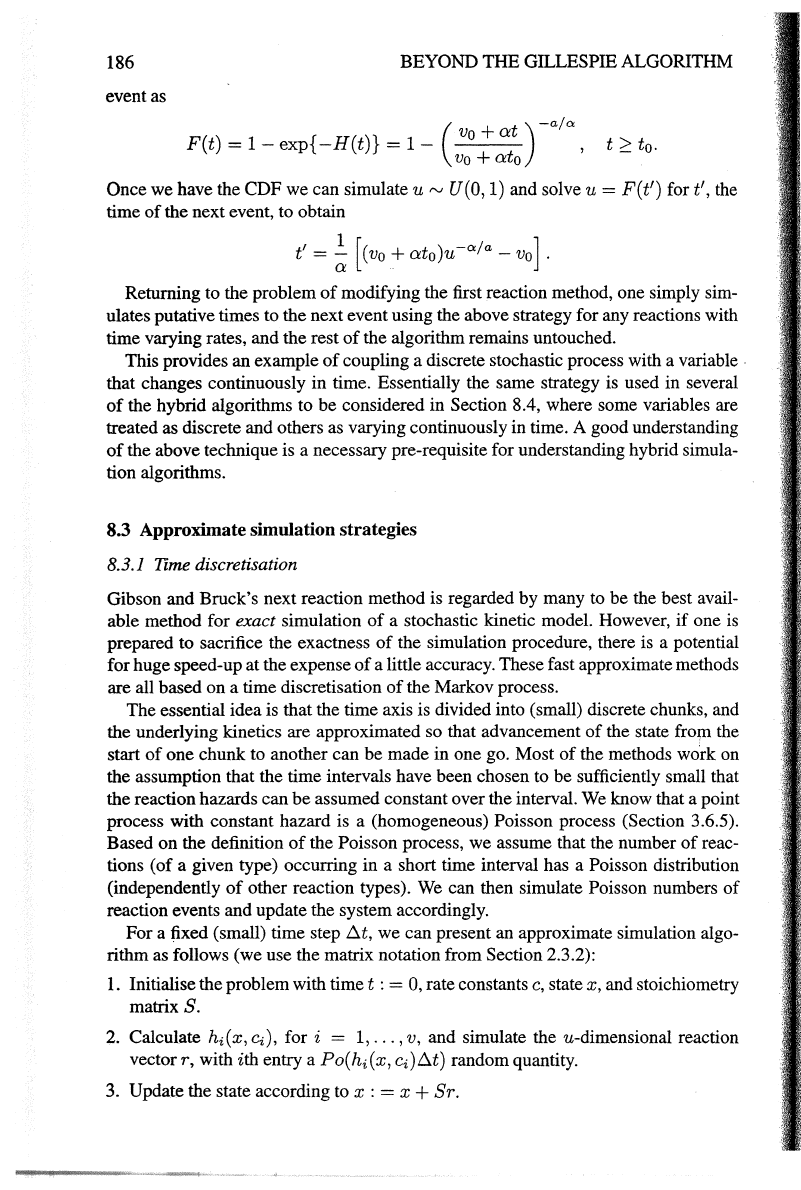

1. Initialise the problem with

timet

: = 0, rate constants

c,

state x, and stoichiometry

matrix

S.

2. Calculate /4(x, ct),

fori

= 1,

...

, v, and simulate the u-dimensional reaction

vectorr,

with ith entry a Po(hi(x,

ct)D.t)

random quantity.

3.

Update the state according to

x:

= x

+Sr.

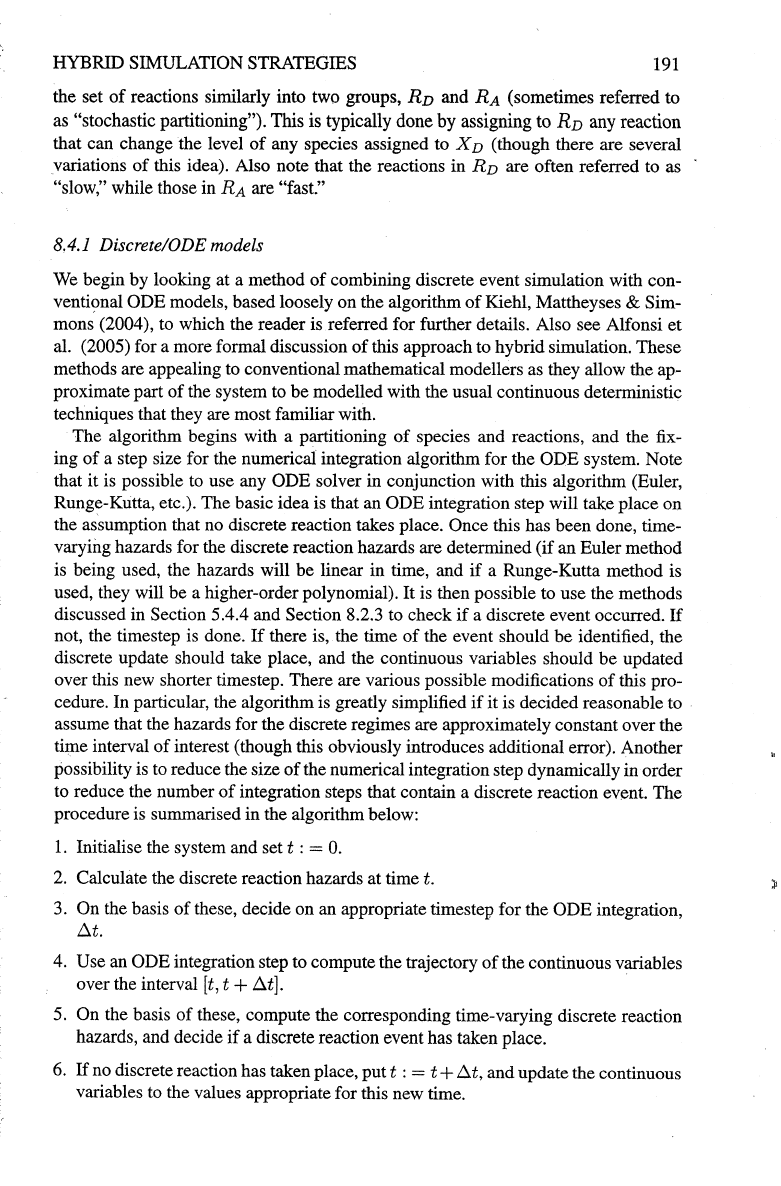

APPROXIMATE SIMULATION STRATEGIES

pts

<-

function(N,

T=lOO,

dt=l,

...

)

{

tt=O

n=T%/%dt

X=N$M

S=t(N$Post

-

N$Pre)

u=nrow(S)

v=ncol(S)

xmat=matrix(O,

ncol=u,

nrow=n)

for

(i

in

l:n)

{

h=N$h(x,

...

)

r=rpois(v,

h*dt)

X = X +

(8

%*%

r)

xmat[i,)=x

ts(xmat,start=O,deltat=dt)

187

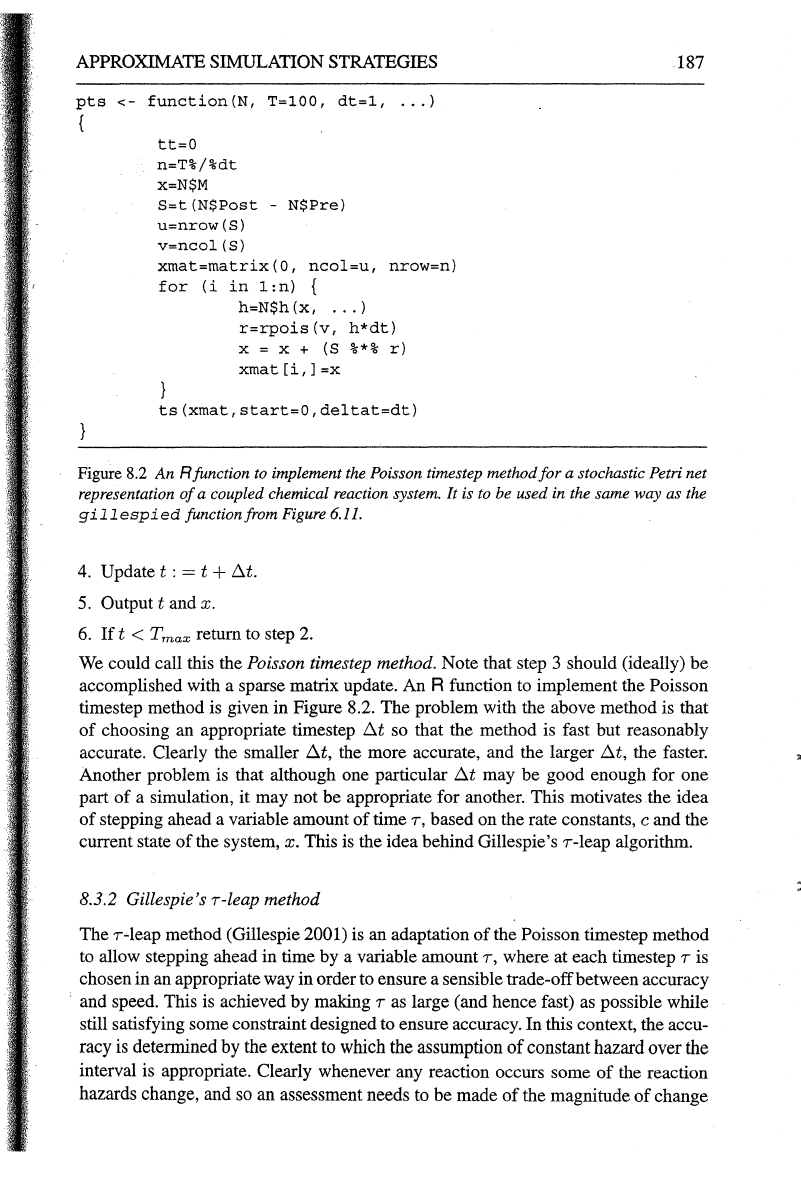

Figure 8.2

An

Rfunction

to

implement the Poisson timestep method for a stochastic Petri net

representation

of

a coupled chemical reaction system. It is to be used in the same way as the

gillespied

function from Figure 6.11.

4.

Update t : = t +

!:::.t.

5.

Output t and x.

6.

If

t < T

max

return to step

2.

We

could call this the Poisson timestep method. Note that step 3 should (ideally) be

accomplished with a sparse matrix update. An R function to implement the Poisson

timestep method is given in Figure 8.2. The problem with the above method is that

of

choosing an appropriate timestep

!:::.t

so that the method is fast but reasonably

accurate. Clearly the smaller

!:::.t,

the more accurate, and the larger

!:::.t,

the faster.

Another problem is that although one particular

!:::.t

may be good enough for one

part

of

a simulation, it may not be appropriate for another. This motivates .the idea

of

stepping ahead a variable amount

of

timeT, based on the rate constants, c and the

current state

of

the system, x. This is the idea behind Gillespie's T-leap algorithm.

8.3.2 Gillespie's T-leap method

The T-leap method (Gillespie

2001) is an adaptation

of

the Poisson timestep method

to allow stepping ahead in time by a variable amount

T,

where at each timestep

Tis

chosen in an appropriate way in order to ensure a sensible trade-off between accuracy

and speed. This

is

achieved by making T as large (and hence fast) as possible while

still satisfying some constraint designed to ensure accuracy. In this context, the accu-

racy

is

determined by the extent to which the assumption

of

constant hazard over the

interval is appropriate. Clearly whenever any reaction occurs some

of

the reaction

hazards change, and

so

an assessment needs

to

be made

of

the magnitude

of

change

188

BEYOND THE GILLESPIE ALGORITHM

of

the

hazards

hi(x,

Ci)·

Esserttially,

the

idea

is

to

chooser

so

that

the

(proportional)

change in all

of

the

hi(x,

ci) is small.

The simplest way to check that

a chosen r is satisfactory is to apply a post-leap

check. That is, after a leap

of

r,

check that lhi(x',

Ci)-

hi(x,

Ci)

I is sufficiently small

for each i (where

x and

x'

represent the state

of

the system before and after the leap).

If

any

of

the differences are too large, try again with a smaller value

of

r.

One

of

the

problems with this method is that it biases the system away from large yet legitimate

state changes.

A

pre-leap check seems more promising. Here we can calculate the expected new

state as

E (x') = x + E (r)

A,

where the

ith

element

of

E (r) is

just

hi(x,

ci)r.

We can then calculate the change in hazard at this "expected" new state and see

if

this is acceptably small (it should

be

noted that this is not necessarily the expected

change in hazard, due to the potential non-linearity

of

hi(x,

ci)). It is suggested that

the magnitude

of

acceptable change should be a fraction

of

the cumulative hazard

ho(x,

c),

i.e.,

lhi(x',ci)-

hi(x,ci)l:::;

cho(x,c),

Vi.

Gillespie provides an approximate method for calculating the largest r satisfying this

property (Gillespie

2001). Note that

if

the resulting r is as small (or almost as small)

as the expected time leap associated with an exact single reaction update, then it is

preferable to do

just

that. Since the time to the first event is

Exp(h

0

(x, c)); which has

expectation

1/ho(x,

c), one should always prefer an exact update

if

the suggested r

is less than (say)

2/ho(x,c).

Gillespie & Petzold (2003) consider refinements

of

this basic r selection algorithm

that improve its behaviour somewhat. However, in my opinion, the

"pure"

r-leap

method is always likely to

be

somewhat unsatisfactory in the context

of

biochemical

networks with very small numbers

of

molecules

of

some species (say, zero or one

copy

of

an activated gene).

On

the other hand, a hybrid algorithm known as the

max-

imal timestep method uses the

r-leap

method for some variables and not others. This

algorithm, which seems quite promising, will

be

briefly described in Section 8.4.2.

8.3.3 Diffusion approximation (chemical Langevin equation)

Another way

of

speeding up simulation is to simulate from the'diffusion approxi-

mation to the true process (Section 5.5). A formal discussion

of

this procedure is

beyond the scope

of

this book. However, we shall here content ourselves with deriv-

ing the form

of

the diffusion approximation using an intuitive procedure (which can

be

formalised with a little effort; see, for example Gillespie (2000)).

It

is clear from the discussion

of

the Poisson timestep method that the change

in state

of

the process, ·dXt in an infinitesimally small time interval dt is S dRt,

where

dRt

is

au-vector

whose

ith

element is a

Po(hi(Xt,

ci)dt) random quantity.

Matching the mean and variance, we put

dRt

~

h(x, c)dt +

diag

{ y'h(x, c)}

dWt,

where

h(x,

c) = (h1 (x, c1),

...

,

hv(x,

cv))', dWt is the increment

of

a v-d Wiener

process, and for a

p-d vector v,

diag

{ v} denotes the p x p matrix with the elements

APPROXIMATE

SIMULATION

STRATEGIES

189

of

v along the leading diagonal and zeros elsewhere.

We

now have the diffusion

approximation

dXt

=

SdRt

= S (

h(Xt,

c)dt +

diag

{

yfh(Xt,

c)}

dWt)

=>

dXt

=

Sh(Xt,

c)dt

+ S

diag

{

yfh(Xt,

c)}

dWt.

(8.2)

Equation (8.2) is one way

of

writing the chemical Langevin equation (CLE) for a

stochastic kinetic model.

t One slightly inconvenient feature

of

this form

of

the equa-

tion is that the dimension

of

Xt

(u)

is different from the dimension

of

the driving

process

Wt

(v). Since we will typically have v >

u,

there will

be

unnecessary

redundancy in the formulation associated with this representation. However, using

some straightforward multi-variate statistics (not covered

in

Chapter 3), it is easy

to see that the variance-covariance matrix for

dXt

is S

diag

{h(Xt,

c)}

S'

dt

(or

A'

diag

{h(Xt,

c)}

Adt).

So, (8.2) can

be

rewritten

dXt

=

Sh(Xt,

c)dt +

yfSdiag{h(Xt,

c)}

S'dWt,

(8.3)

where dWt now denotes the increment

of

a

u-d

Wiener process, and we use a com-

mon convention in statistics for the square root

of

a matrix.+

In

some ways (8.3)

represents a more efficient description

of

the CLE. However, there can

be

compu-

tational issues associated with calculating the square root

of

the diffusion matrix,

particularly when

S is rank degenerate (as is typically the case, due to conservation

laws

in

the system}, so both (8.2) and (8.3) turn

out

to

be

useful representations

of

the CLE, depending on the precise context

of

the problem, and both provide the basis

for approximate simulation algorithms.

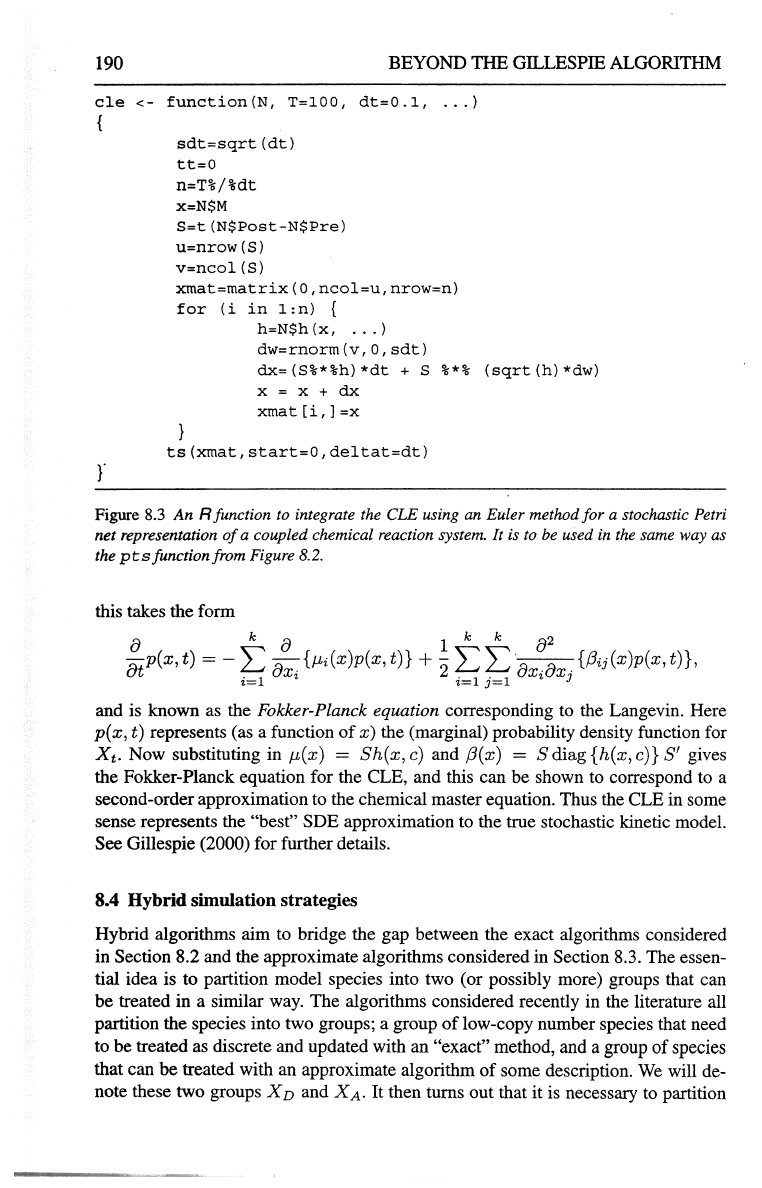

An R function

to

integrate the

CLE

using the

Euler method is given in Figure 8.3. Again, this method can work extremely well

if

there are more than (say) ten molecules

of

each reacting species throughout the

course

of

the simulation. However,

if

the model contains species with a very small

number

of

molecules, simulation based

on

a pure Langevin approximation is likely

to

be

unsatisfactory. Again, however, a hybrid algorithm can

be

constructed that uses

discrete updating for the low copy-number species and a Langevin approximation for

the rest; such an algorithm will

be

discussed in Section 8.4.3.

Having used a relatively informal method to derive the CLE, it is worth taking

time to understand more precisely its relationship with the true discrete stochastic

kinetic model (represented by a Markov

jump

process).

In

Section 6. 7 we derived the

chemical Master equation as Kolmogorov's forward equation for the Markov

jump

process.

It

is possible to develop a corresponding forward-equation for the chemical

Langevin equation (8.3).

For

a general k-dimensional Langevin equation

dXt

= J.L(Xt)dt +

j3

1

1

2

(Xt)dWt.

t

Note

that

setting

the

tenn

in

dWi

to

zero

gives

the

ODE

system

corresponding

to the

continuous

detenninistic

approximation.

+

Here,

for

a p x p

matrix

M

(typically

symmetric),

.,fM

(or

M

1

1

2

)

denotes

any p x p

matrix

N

satisfying

N

N'

=

M.

Common

choices

for

N include

the

symmetric

square

root

matrix

and

the

Cholesky

factor;

see

Golub

&

Van

Loan

(1996)

for

computational

details.

)

190

BEYOND

THE GILLESPIE

ALGORITHM

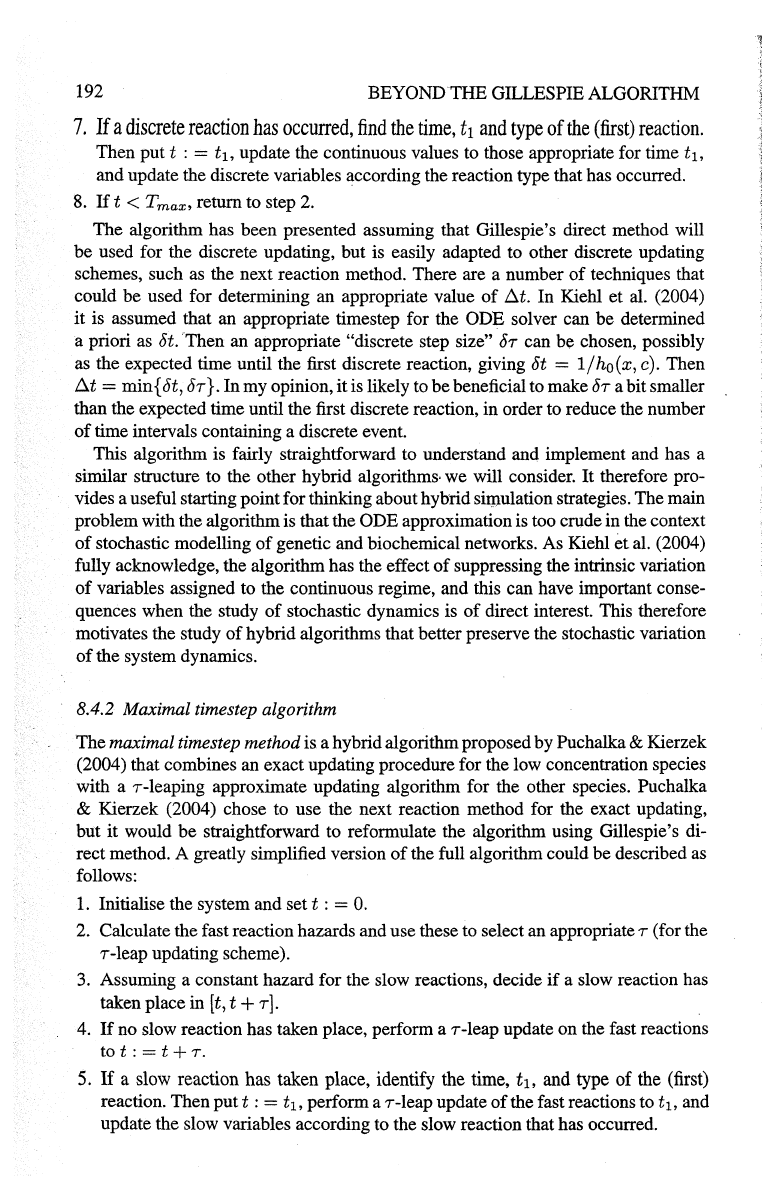

cle

<-

function(N,

T=lOO,

dt=O.l,

...

)

r

sdt=sqrt(dt)

tt=O

n=T%/%dt

X=N$M

S=t(N$Post-N$Pre)

u=nrow(S)

v=ncol(S)

xmat=matrix(O,ncol=u,nrow=nl

for

(i

in

l:n)

{

h=N$h(x,

...

)

dw=rnorm(v,O,sdt)

dx=(S%*%h)*dt

+ S

%*%

(sqrt(h)*dw)

X=X+dx

xmat[i,]=x

ts(xmat,start=O,deltat=dt)

Figure 8.3

An

R function to integrate

the

Cl.E using an Euler method for a stochastic Petri

net representation

of

a coupled chemical reaction system. It is

to

be

used

in

the

same way as

the

ptsfunctionfrom

Figure 8.2.

this takes the form

f)

kf}

lkk

fj2

fJtp(x, t)

=-

L

~{P,i(x)p(x,

t)} +

2

L L

~{(Jij(x)p(x,

t)},

i=l

X,

i=l

j=l

X,

XJ

and is known as the Fokker-Planck equation corresponding to the Langevin. Here

p(x,

t)

represents (as a function

of

x)

the (marginal) probability density function for

Xt.

Now substituting in p,(x) =

Sh(x,c)

and (J(x) =

Sdiag{h(x,c)}S'

gives

the Fokker-Planck equation for the CLE, and this can be shown to correspond to a

second-order approximation to the chemical master equation. Thus the CLE in some

sense represents the

"best" SDE approximation to the true stochastic kinetic model.

See Gillespie (2000) for further details.

8.4 Hybrid simulation strategies

Hybrid algorithms aim to bridge the gap between the exact algorithms considered

in Section 8.2 and the approximate algorithms considered in Section 8.3. The essen-

tial idea is to partition model species into two (or possibly more) groups that can

be

treated in a similar way. The algorithms considered recently in the literature all

partition the species into two groups; a group

of

low-copy number species that need

to

be

treated as discrete and updated with an "exact" method, and a group of species

that can

be

treated with an approximate algorithm

of

some description.

We

will de-

note these two groups

Xv

and XA. It then turns out that it is necessary

to

partition

192

BEYOND

THE

GILLESPIE ALGORITHM

7.

If

a

discrete

reaction

has

occurred,

find

the

time,

t

1

and

type

of

the

(first)

reaction.

Then

putt

: = t

1

,

update the continuous values to those appropriate for time t

1

,

and update the discrete variables according the reaction type that has occurred.

8.

H t <

Tmax•

return to step 2.

The algorithm has been presented assuming that Gillespie's direct method will

be used for the discrete updating, but is easily adapted to other discrete updating

schemes, such as the next reaction method. There are a number

of

techniques that

could be used for determining an appropriate value

of

.D.t.

In Kiehl

et

al. (2004)

it is assumed that an appropriate timestep for the ODE solver can be determined

a priori as

Jt. Then an appropriate "discrete step size" or can be chosen, possibly

as the expected time until the first discrete reaction, giving

Jt

=

1/ho(x,

c). Then

.D.t

=min{

ot, or}. In my opinion, it is likely to

be

beneficial to make or a bit smaller

than the expected time until the first discrete reaction, in order to reduce the number

of

time intervals containing a discrete event.

This algorithm is fairly straightforward to understand and implement and has a

similar structure to the other hybrid

algorithms· we will consider.

It

therefore pro-

vides a useful starting point for thinking about hybrid

sin:mlation strategies. The main

problem with the algorithm is that the

ODE approximation is too crude in the context

of

stochastic modelling

of

genetic and biochemical networks. As Kiehl

et

al. (2004)

fully acknowledge, the algorithm has the effect

of

suppressing the intrinsic variation

of

variables assigned to the continuous regime, and this can have important conse-

quences when the study

of

stochastic dynamics is

of

direct interest. This therefore

motivates the study

of

hybrid algorithms that better preserve the stochastic variation

of

the system dynamics.

8.4.2 Maximal timestep algorithm

The maximal timestep method is a hybrid algorithm proposed by Puchalka & Kierzek

(2004) that combines an exact updating procedure for the low concentration species

with a r-leaping approximate updating algorithm for the other species.

Puchalka

& Kierzek (2004) chose to use the next reaction method for the exact updating,

but it would

be

straightforward to reformulate the algorithm using Gillespie's di-

rect method. A greatly simplified version

of

the full algorithm could be described as

follows:

1.

Initialise the system and set t : =

0.

2. Calculate the fast reaction hazards and use tilese to select an appropriate r (for tile

r-leap

updating scheme).

3.

Assuming a constant hazard for the slow reactions, decide

if

a slow reaction has

taken place in

[t,

t +

r].

4.

If

no slow reaction has taken place, perform a

r-leap

update on the fast reactions

tot:=

t +

r.

5.

If

a slow reaction has taken place, identify tile time, t

1

,

and type of tile (first)

reaction. Then

putt:=

t

1

,

perform a

r-leap

update

of

the fast reactions to t

1

,

and

update the slow variables according to the slow reaction that has occurred.