Wilamowski B.M., Irwin J.D. The Industrial Electronics Handbook. Second Edition: Industrial Communication Systems

64 Running Software over Internet

Nam Pham, Auburn University

Bogdan M. Wilamowski, Auburn University

Aleksander Malinowski, Bradley University

64.1 Introduction

64.2 Most Commonly Used Network Programming Tools

Hypertext Markup Language • JavaScript • Java • ActiveX • CORBA and DCOM • Common Gateway Interface • PERL • PHP

64.3 Examples

Neural Network Trainer through Computer Networks • Web-Based C++ Compiler • SPICE-Based Circuit Analysis Using Web Pages

64.4 Summary and Conclusion

References

© 2011 by Taylor and Francis Group, LLC

64.1 Introduction

A static Web site, which is generally written in HTML, cannot dynamically extract information or store it in a database [WT08]. Dynamic Web sites interact with clients by responding to their requests. When a server receives a request from a client, it queries a database for the required information, formats that information, and sends it back to the client. HTML alone does not provide the functionality needed for a dynamic, interactive environment. Additional technologies implement the dynamic behavior and render web pages dynamically, both on the client side (inside a Web browser) and on the server side. On the client side, the most common network programming tools for developing dynamic Web sites are JavaScript, Java applets, and ActiveX components. On the server side, the most common tools are PHP, Sun Microsystems' Java Server Pages, Java Servlets, Microsoft Active Server Pages (ASP), and Common Gateway Interface (CGI) scripts. CGI scripts may be written in languages such as PERL, JavaScript, ActiveX, and Python, or may be precompiled binary programs.

Communication through computer networks has become a popular and efficient means of computing and simulation. Most companies and research institutions use networks to some extent on a regular basis [W10]. Computer networks give access to all kinds of information made available from around the world, and intranets provide connectivity within a smaller, more isolated domain such as a company or a school. Users can run software through computer networks by interacting with a user interface. Simulation through computer networks has several benefits:

• Universal user interface on every system: Every system can run a software simulation through a user interface in a web browser.

• Portability: Software located only on the central machine can be accessed at any time and from anywhere.

• Software protection: Users can run software through computer networks but cannot own it.

• Limitation: Software can interact with any platform, independent of the operating system. Users do not have to set up or configure the software unless configuration is implemented on the server as a stored user profile.

• Legacy software: Old software can run in a dedicated environment on the server while its new user interface runs through a web browser on new systems where the application itself is not available.

• Remote control: Computer networks are used to control objects remotely.

Internet bandwidth is already adequate for many software applications if their data flow is carefully designed. Bandwidth limitations will also ease significantly over time. The key issue is to solve the problems that come with this new way of developing software, so that software can be used through the Internet and intranets. It is therefore important to develop methods that take advantage of networks and platform-independent browsers. This requires solving several issues, such as:

• Minimization of the amount of data that must be sent through the network

• Task partitioning between the server and the client

• Selection of programming tools for various tasks

• Development of special user interfaces

• Use of multiple servers distributed around the world, and job sharing among them

• Security and account handling

• Portability of software used on servers and clients

• Distribution and installation of network packages on several servers

• Others
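The request-response cycle of a dynamic Web site described at the start of this introduction can be sketched in a few lines: take the client's request, look up the data, format it, and send it back. This minimal Python sketch uses a dictionary in place of the database. `CATALOG` and `handle_request` are hypothetical names.

```python
import html
from urllib.parse import parse_qs

# Hypothetical stand-in for the server-side database.
CATALOG = {
    "resistor": "Passive two-terminal component",
    "op-amp": "High-gain differential amplifier",
}

def handle_request(query_string: str) -> str:
    """Build an HTML page on demand from the request parameters."""
    params = parse_qs(query_string)
    # parse_qs maps each key to a list of values; keep the first one.
    item = params.get("item", [""])[0]
    description = CATALOG.get(item)
    if description is None:
        body = f"<p>No entry for {html.escape(item)}.</p>"
    else:
        # Escape everything that comes from the request or the data store.
        body = f"<h1>{html.escape(item)}</h1><p>{html.escape(description)}</p>"
    return f"<html><body>{body}</body></html>"

print(handle_request("item=op-amp"))
```

A static site would have to store one file per item. Here the page is assembled only when a request arrives.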

64.2 Most Commonly Used Network Programming Tools

Implementation requires several different languages to be used simultaneously. The following sections review these languages and the scope of their application [W10].

64.2.1 Hypertext Markup Language

Hypertext Markup Language (HTML) was originally designed to describe a document layout regardless of the display device, its size, and other properties. In the front end of a networked application, HTML can create form-based dialog boxes, define the layout of an interface, or act as a wrapper for Java applets or ActiveX components. In a way, HTML can be classified as a programming language, because the document is displayed as a result of executing its code. A scripting language can also define simple interactions between a user and HTML components. Several improvements to the standard language are available. Cascading style sheets (CSS) allow a very precise description of the graphical view of the user interface. Compressed HTML conserves bandwidth but can only be used by Microsoft Internet Explorer. HTML is also used directly, as originally intended, as a publishing tool for the instruction and help files bundled with the software [W10].

64.2.2 JavaScript

HTML itself lacks even basic programming constructs such as conditional statements or loops. Several interpretive scripting languages were developed to allow programming in HTML. They can be classified as extensions of HTML and are used to manipulate or dynamically create portions of HTML code. One of the most popular is JavaScript. Its main drawback is that, although JavaScript is already well developed, implementations are still not uniform: different web browsers may vary slightly in the functions they make available. JavaScript is an interpretive language, and scripts run as the web page is downloaded and displayed. There is no strong data typing or function prototyping. Yet the language supports object-oriented programming with dynamically changing member functions.

JavaScript programs can also communicate with Java applets embedded in an HTML page. JavaScript is part of the HTML code and can be placed in both the header and the body of a web page. A script starts with the `<script language="JavaScript">` line. One of the most useful applications of JavaScript is checking a filled-in form before it is submitted online. This gives immediate feedback, saves Internet bandwidth, and lowers the web server load [W10].
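The form check described above runs in the browser as JavaScript, attached to the form's submit event. The sketch below expresses the same validation logic in Python. The field names (`email`, `age`) and the rules are hypothetical. A client-side check can be bypassed, so a server would typically repeat it before processing the data.

```python
import re

def validate_form(fields: dict) -> list:
    """Return error messages; an empty list means the form may be submitted."""
    errors = []
    email = fields.get("email", "").strip()
    # Deliberately loose check: something@something.something
    if not re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", email):
        errors.append("Please enter a valid e-mail address.")
    age = fields.get("age", "").strip()
    # isdecimal() guarantees int() will succeed; short-circuiting skips int() otherwise.
    if not age.isdecimal() or not 0 < int(age) < 130:
        errors.append("Age must be a whole number from 1 to 129.")
    return errors

print(validate_form({"email": "user@example.com", "age": "42"}))  # []
print(validate_form({"email": "not-an-email", "age": "abc"}))
```

Only when the list is empty would the form actually be sent. Otherwise the messages are shown to the user without a round trip to the server.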

JavaScript has certain limitations that come from the security model of its implementation in a Web browser. One of them used to be the inability to retrieve data from the server dynamically, on demand. Browsers removed this limitation by adding a request object (XMLHttpRequest), which underlies what is typically called Ajax technology. Ajax lets a JavaScript program embedded in a web page retrieve additional documents or web pages from the server, store them as local variables, and parse them. The extracted data can then be used to alter the web page that contains the JavaScript. The additional data is typically generated on the server by ASP or CGI programs that pass the Ajax query to the database on the server. The data is then sent back, typically as an XML-formatted web page. A typical everyday application is an auto-complete suggestion list in a web page form, such as the suggestions a search engine page shows before the user clicks the search button.
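On the server side, an Ajax request for auto-complete suggestions is answered by a small program that looks up matching entries and returns them as XML. This Python sketch assumes a fixed word list in place of the database and a hypothetical `<suggestions>` document layout. A real deployment would run it behind a web server, for example as a CGI script.

```python
import xml.etree.ElementTree as ET

# Hypothetical word list standing in for a database table.
TERMS = ["network", "neural network", "netlist", "node", "noise margin"]

def suggest(prefix: str, limit: int = 3) -> str:
    """Return an XML document listing up to `limit` terms starting with `prefix`."""
    root = ET.Element("suggestions", {"prefix": prefix})
    matches = [t for t in TERMS if t.startswith(prefix.lower())]
    for term in matches[:limit]:
        ET.SubElement(root, "item").text = term
    return ET.tostring(root, encoding="unicode")

print(suggest("ne"))
```

The JavaScript on the page would parse the returned `<item>` elements and show them under the search box. Keeping the reply short is what makes a per-keystroke round trip practical.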

64.2.3 Java

Java is an object-oriented programming language compiled in two stages. The first stage, compilation to so-called byte-code, is performed during code development. Byte-code can be compared to the machine code instructions of a microprocessor. Because ordinary processors cannot execute byte-code instructions directly, interpreters called Java Virtual Machines (JVMs) were developed for various microprocessors and operating systems. JVMs were later improved so that, instead of interpreting the code, they perform the second stage of compilation, directly to machine language. However, to cut down the start-up time of a program, compilation is done only as needed (just in time, JIT), which leaves no time for extensive code optimization. With the current state of JIT technology, programs written in Java run about two to five times slower than their C++ counterparts. Adding a JVM to web browsers made it possible to embed software components that run on different platforms [W10].
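The two-stage model is not unique to Java. CPython also compiles source code to bytecode and then executes that bytecode on a virtual machine; the standard interpreter does this without a JIT stage. The sketch below makes the two stages visible using only the standard `compile`, `dis`, and `exec` functions. It illustrates the general idea, not the JVM itself.

```python
import dis

source = "result = sum(i * i for i in range(5))"

# Stage 1: translate source text into a bytecode object (nothing runs yet).
code = compile(source, "<example>", "exec")

# The bytecode is a machine-independent instruction stream for the virtual machine.
opnames = [instr.opname for instr in dis.get_instructions(code)]
print(opnames[:4])

# Stage 2: the virtual machine executes the bytecode.
namespace = {}
exec(code, namespace)
print(namespace["result"])  # 30
```

The exact instruction names differ between Python versions, just as JVM byte-code is tied to a JVM specification rather than to any physical processor.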

64.2.4 ActiveX

ActiveX, developed by Microsoft, is another technology for the automatic transfer of software over a network. At present, however, ActiveX can be executed only on a PC running a Windows operating system, which makes applications platform dependent. Although this technology is already very popular, it does not allow the development of applications that run on multiple platforms. ActiveX components can be developed in Microsoft Visual Basic or Microsoft Visual C++. ActiveX is the only choice when Java is too slow, or when access is needed to operating system functions or devices supported only by the Windows OS. Because an ActiveX component has easy access to the operating system, it is impossible to add security by limiting the features or resources available to the component [W10].

64.2.5 CORBA and DCOM

Common Object Request Broker Architecture (CORBA) is a technology developed in the early 1990s for network-distributed applications. It is a protocol for handling distributed data that has to be exchanged among multiple platforms. One or more CORBA servers must be installed to access the distributed data. In a way, CORBA can be considered a very high-level application programming interface (API). It allows data to be sent over the network, and it lets multiple programs share local data registered with the CORBA server. Microsoft developed its own proprietary API that works only in the Windows operating system. It is called DCOM (Distributed Component Object Model) and can be used only with ActiveX technology [W10].

64.2.6 Common Gateway Interface

Common Gateway Interface (CGI) can be used to create web pages dynamically. Such dynamically created pages are an excellent interface between a user and an application running on the server. A CGI program is executed when a form embedded in HTML is submitted, or when a program is referenced directly through a link on a web page. The web server that receives a request can tell whether it should return a web page already stored on its hard drive or run a program that creates one. Any such program can be called a CGI script. CGI covers a variety of programming tools and strategies. All data processing can be done by one program, or the CGI script can call one or more other programs. The name "CGI script" does not mean that a scripting language must be used. In practice, however, developers prefer scripting languages, and PERL is the most popular one [W10].
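Under the CGI convention, the web server passes request data to the program through environment variables such as `REQUEST_METHOD` and `QUERY_STRING`. The program writes a header block, a blank line, and the document to standard output. The sketch below is a minimal Python CGI program along those lines. It handles only data in the query string (a GET request); POST data would instead be read from standard input. The `name` parameter is a hypothetical form field. The environment is passed in as a dictionary so the function can be tried without a web server.

```python
import html
import os
import sys
from urllib.parse import parse_qs

def cgi_response(environ: dict) -> str:
    """Produce a complete CGI response: headers, blank line, HTML body."""
    method = environ.get("REQUEST_METHOD", "GET")
    query = parse_qs(environ.get("QUERY_STRING", ""))
    name = query.get("name", ["guest"])[0]
    body = (
        "<html><body>"
        f"<p>Hello, {html.escape(name)}! (method: {html.escape(method)})</p>"
        "</body></html>"
    )
    # CGI output: header lines, then an empty line, then the document.
    return "Content-Type: text/html\n\n" + body

if __name__ == "__main__":
    # When launched by a web server, the request arrives in the process environment.
    sys.stdout.write(cgi_response(dict(os.environ)))
```

Whether this file is written in a scripting language or compiled from C makes no difference to the server. Only the environment-in, standard-output-out contract matters.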

The protocol that transfers web pages and executes CGI scripts poses a unique challenge for software developers. Users of CGI-based programs have the same expectations as users of a local interface, yet the interface must be designed internally in an entirely different way. Web transfer is a stateless process: the protocol itself carries no information from the browser to the server that identifies each user. Each time a new user interface is sent as a web page, it must therefore contain all information about the current state of the program. This state is recreated every time a CGI script is executed. The result is more network traffic, plus extra latency from the limited bandwidth and from processing the data once again. The server-side software must also be prepared for inconsistent data streams. For example, a user can go back through one or more web pages, give a different response to a particular dialog box, and execute the same CGI script again. By the time the script runs the second time, the data sent with the request may already be out of sync with the data kept on the server. Additional validation mechanisms must therefore be built into the software, which a single local program would not need.
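One common way to carry program state through a stateless exchange is to embed it in a hidden form field together with a step counter, and to sign it so that the client cannot alter it. The counter then exposes the out-of-date resubmissions described above. This is one possible validation mechanism, not the one used by the authors. The Python sketch below assumes a server-side secret key. It also assumes the server knows which step it expects next, for example from a session record.

```python
import base64
import hashlib
import hmac
import json

SECRET = b"server-side-secret"  # assumption: known only to the server

def pack_state(state: dict) -> str:
    """Serialize and sign the state for a hidden <input> field."""
    payload = base64.urlsafe_b64encode(json.dumps(state, sort_keys=True).encode())
    tag = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + tag

def unpack_state(field: str, expected_step: int) -> dict:
    """Verify the signature and reject stale submissions (e.g., after Back)."""
    payload, _, tag = field.rpartition(".")
    good = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag, good):
        raise ValueError("state was modified")
    state = json.loads(base64.urlsafe_b64decode(payload))
    if state["step"] != expected_step:
        raise ValueError("stale page: state is out of sync with the server")
    return state

hidden = pack_state({"step": 2, "epochs": 100})
print(unpack_state(hidden, expected_step=2))
```

The signature stops the client from editing the state. The step check catches the case where a user goes back and submits an older page.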

64.2.7 PERL

Practical Extraction and Report Language (PERL) is an interpretive language dedicated to text processing. It is used mainly as a very advanced scripting language for batch programming and text data processing. PERL interpreters have been developed for most existing computer platforms and operating systems. Modern PERL interpreters are in fact compilers: they precompile the whole script before running it. PERL was originally developed for Unix as a scripting language for automating administrative tasks. It has many very efficient functions for processing strings, data streams, and files. These functions make it especially attractive for CGI processing, which involves reading data from networked streams, executing external programs, and organizing data. At the end, the CGI program sends feedback to the user as a text-based HTML document that updates the user interface. PERL supports almost every computing platform and OS and has many program libraries, which makes it a platform-independent tool [W10].

64.2.8 PHP

PHP, a server-side scripting language, is one of the most popular technologies for developing dynamic, interactive Web sites. PHP originally stood for "Personal Home Page." It is open-source software, first created to replace a small set of PERL scripts used on Web sites. PHP gradually became a general-purpose scripting language used especially for web development, and its name now stands for "Hypertext Preprocessor." PHP code is embedded in HTML and interpreted on a web server configured to run PHP scripts. PHP is similar to other server-side scripting languages such as Microsoft's Active Server Pages and Sun Microsystems' Java Server Pages or Java Servlets. Its syntax resembles that of C and PERL. The main difference between PHP and PERL lies in PHP's set of standard built-in libraries, which support generating HTML code, processing data sent from and to the web server, and handling cookies [W10].
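Handling cookies amounts to reading the `Cookie` header sent by the browser and emitting `Set-Cookie` headers in the response. PHP exposes this through built-in functions. The equivalent steps can be sketched with Python's standard `http.cookies` module. The cookie names and values are hypothetical.

```python
from http.cookies import SimpleCookie

# Incoming: parse the Cookie header sent by the browser.
incoming = SimpleCookie()
incoming.load("session=abc123; theme=dark")
theme = incoming["theme"].value if "theme" in incoming else "light"

# Outgoing: build a Set-Cookie header for the response.
outgoing = SimpleCookie()
outgoing["session"] = "abc123"
outgoing["session"]["path"] = "/"
outgoing["session"]["httponly"] = True  # hide the cookie from page scripts

print(theme)  # dark
print(outgoing.output())
```

A cookie like `session` is the usual way a server recognizes a returning user despite the stateless protocol discussed in Section 64.2.6.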

PHP is used with database systems such as PostgreSQL, Oracle, and MySQL to create powerful, dynamic server-side applications. A database becomes necessary when a Web site must store a large amount of information and retrieve it on demand. The database can hold information collected from users or from the administrator.

MySQL (written in C and C++) is an open-source relational database management system (RDBMS). It uses the Structured Query Language (SQL) to process data and manages multi-user access to a number of databases. A relational database stores data in tables. The columns define what kind of information the table stores, and each row contains the actual values for those columns. MySQL works on many different system platforms, including Linux, Mac OS X, Microsoft Windows, OpenSolaris, and many others. Together, PHP (Hypertext Preprocessor) and MySQL (a portable SQL server) have made building and accessing relational databases much easier [WL04,U07].
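A few SQL statements make the table, column, and row terminology above concrete. MySQL requires a running server, so this sketch uses Python's built-in `sqlite3` module, an embedded SQL engine, as a stand-in. The `users` table and its columns are hypothetical. The `CREATE TABLE`, `INSERT`, and `SELECT` statements are standard SQL. Placeholder syntax depends on the driver: `sqlite3` uses `?`, while common Python MySQL drivers use `%s`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory stand-in for a MySQL database
cur = conn.cursor()

# Columns define what kind of information the table stores.
cur.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")

# Each row holds the actual values for those columns; placeholders keep
# user-supplied data out of the SQL text itself.
rows = [(1, "Alice", "alice@example.com"), (2, "Bob", "bob@example.com")]
cur.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
conn.commit()

cur.execute("SELECT name, email FROM users WHERE id = ?", (2,))
columns = [d[0] for d in cur.description]
result = cur.fetchone()
print(columns, result)  # ['name', 'email'] ('Bob', 'bob@example.com')
conn.close()
```

In a PHP application, the same query would run inside the page script, and the returned row would be formatted into HTML before being sent to the browser.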

64.3 Examples

As Internet bandwidth increases, the World Wide Web (WWW) could revolutionize design processes by ushering in an era of pay-per-use tools. This approach would make very sophisticated tools accessible to engineers in large and small businesses and to educators and researchers in academia. Currently, such sophisticated systems are available only to specialized companies with large financial resources.

64.3.1 Neural Network Trainer through Computer Networks

Several neural network trainer tools are available on the market. One freely available tool is the "Stuttgart Neural Network Simulator," which is written in the widely used C language and distributed in both executable and source code versions. However, installing this tool requires some knowledge of compiling and setting up the application. It is also based on XGUI, which is not freeware, and it is still limited to a single type of architecture, Unix [MWM02].

During software development, it is important to decide which part of the software should run on the client machine and which part should run on the server. Writing CGI programs is quite different from writing Java applets. Applets are transferred through the network on request and are executed entirely on the client machine that made the request. With CGI, much less information has to be passed to the server. The server executes instructions based on that information and sends the results back to the local machine that made the request. For a neural network trainer, it only makes sense to use CGI for the training process. Sending the trainer software through the network on every request would be a poor choice, because it would slow down training and leave the software unprotected. It is therefore important to develop methods that take advantage of networks.

The training tool currently incorporates CGI, PHP, HTML, and JavaScript. A CGI program is executed on the server when the server receives a request from a web browser to process information. The server first decides whether the request should be granted. If authorization succeeds, the server executes a CGI program and sends the results back to the requesting web browser. The trainer, NBN 2.0, was developed in C++ with Visual Studio 6.0. It is hosted on a server and interacts with clients through PHP scripts. Its main interface is shown in Figure 64.1.


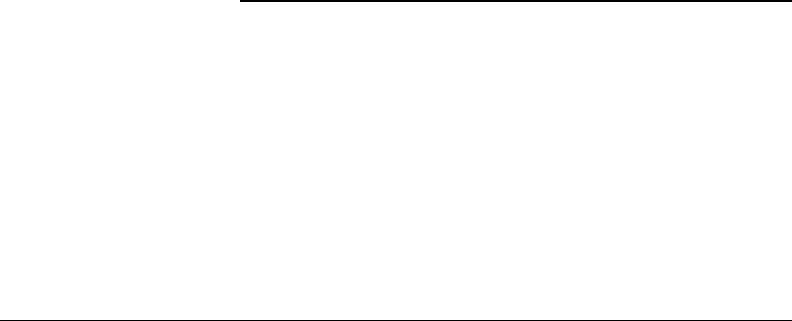

e.neural.network.interface.will.receive.requests.from.users.with.uploading.les.and.input.param-

eters

.to.generate.data.les.and.then.send.a.command.to.the.training.soware.on.the.server.machine..

When.all.data.requirements.are.set.up.properly,.the.training.process.will.start..Otherwise,.the.training.

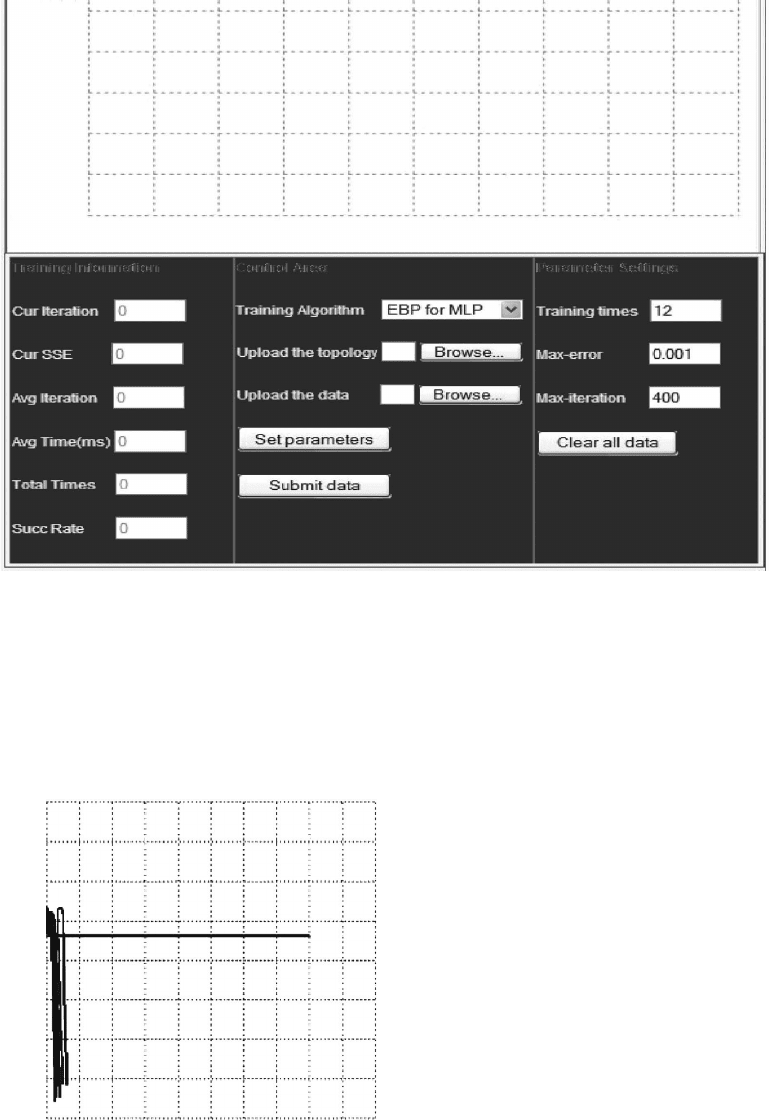

tool.will.send.error.warnings.back.to.clients..If.the.training.process.is.successful,.the.training.result.will.

be.generated..e.training.result.le.is.used.to.store.training.information.and.results.such.as.training.

algorithm,.training.pattern.le,.topology,.parameters,.initial.weights,.and.resultant.weights.(Figure.64.2).

FIGURE 64.1 User interface of NBN 2.0.

FIGURE 64.2 Training results (error versus iteration [×50], with the training parameters, network topology, and initial and resulting weights).


The neural network trainer can be used remotely through any network connection and from any operating system, which makes the application operating-system independent. Installation and configuration also take much less time, because the training tool resides only on a central machine. Many users can access it at the same time, and they can train networks and see the training results directly through the network. Most importantly, software developers can protect their intellectual property when network browsers are used as user interfaces.
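The server-side flow described in this section has three steps. The server checks that the request is authorized, validates the uploaded data and parameters, and then either starts the trainer or returns error warnings. That flow can be outlined as follows. This is a hypothetical Python sketch: the parameter names, the set of allowed users, and the `run_trainer` callable are illustrative assumptions. NBN 2.0 itself is a C++ program invoked through PHP scripts, and its actual interface is not described here.

```python
from typing import Callable

AUTHORIZED_USERS = {"alice", "bob"}  # hypothetical account list

def handle_training_request(user: str, params: dict,
                            run_trainer: Callable[[dict], dict]) -> dict:
    """Authorize, validate, and then dispatch a training job."""
    if user not in AUTHORIZED_USERS:
        return {"status": "denied", "errors": ["not authorized"]}

    errors = []
    if not params.get("data_file"):
        errors.append("no training data file uploaded")
    try:
        max_iter = int(params.get("max_iterations", ""))
        if max_iter <= 0:
            errors.append("max_iterations must be positive")
    except ValueError:
        errors.append("max_iterations must be an integer")
    if errors:
        # Error warnings go back to the client instead of starting training.
        return {"status": "rejected", "errors": errors}

    # Only validated requests reach the server-side training software.
    return {"status": "ok", "result": run_trainer(params)}

def echo_trainer(params: dict) -> dict:
    """Dummy trainer for trying the handler: reports the iteration limit."""
    return {"iterations": int(params["max_iterations"])}

print(handle_training_request(
    "alice", {"data_file": "parity4", "max_iterations": "500"}, echo_trainer))
```

Passing the trainer in as a callable keeps the request logic separate from the compute-heavy program, which stays on the server and is never sent to the client.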

64.3.2 Web-Based C++ Compiler

Software development frequently requires more than one compiler package. Some products are known to be very useful for locating errors or debugging. Others perform extremely well when a program or library is in the final stage of development and should be optimized as much as possible. Obscure error messages can also lead to a time-consuming search for the error, and a different message from a second compiler frequently cuts that time dramatically [MW00]. Students should therefore be exposed to different compilers at some point in their software course curriculum. Although all the necessary software is installed in the computer laboratories, most students prefer to work on their own computers at home or in the dormitory and connect to the university network. This creates an unnecessary burden. Network administrators may have to install additional software on many machines with nonstandard configurations. Otherwise, students must buy and install several software packages themselves over the full course of their studies.

To solve this problem, at least partially in the area of programming, a software package was developed that gives web-based access to various compilers. Its web-page front end lets students use the compilers without any restrictions on their own computer systems. This makes the compilers usable on different operating system platforms, and also on older, lower-performance machines.

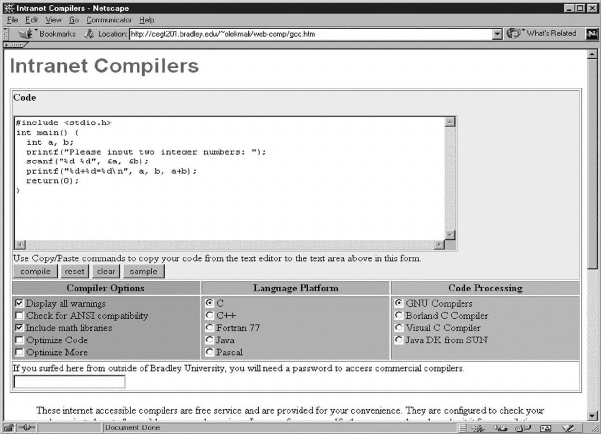

FIGURE 64.3 The common Web-based front end to C++ compilers.

A common front end is used for all compilers and is presented in Figure 64.3. This HTML page lets the user select a vendor and a language and set a few basic compilation options. The user enters the source code into the compiler front end by copy and paste. Three menus are located under the source code area, as shown. The middle menu selects the programming language, and the right menu selects the compiler vendor. The Intranet Compilers package currently supports the C, C++, Ada, Fortran, Modula, Pascal, and Java languages. For C and C++, it uses the MinGW (Minimalist GNU for Windows, ver. 3.4), Borland (ver. 5.0), and Microsoft (VS 2005) compilers. It also uses compilers for Fortran and Pascal, and Sun's JDK (ver. 2.6) for Java. One of the preset compiling configurations can be selected from the left menu. With it, the user decides whether aggressive binary code optimization, or strict error checking and ANSI style violation checking, is needed. The compiler vendor and version can be selected from the right menu. If a user selects one of the commercial compilers while working off campus, he or she must enter a password to verify eligibility to use licensed products.

One of the major advantages of consolidating several compilers is that error messages from different vendors' products can be cross-referenced from the same interface. Compilation is performed in batch mode. After setting the desired options and pasting the source code into the appropriate text box, the user starts the task by pressing the COMPILE button.

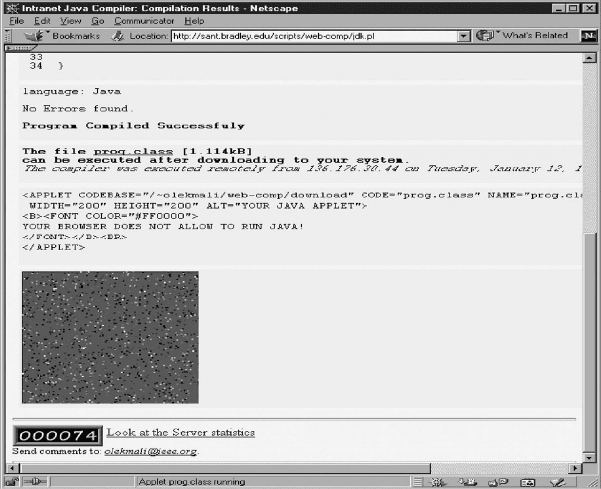

.As.a.res

ult,

.ano

ther

.web.

pag

e

.wit

h

.HTM

L

.wra

pped

.inf

ormation

.is.sen

t

.bac

k

.to.the.use

r.

.e.res

ult

.pag

e

.is.dis

played

.in.ano

ther

.

bro

wser

.win

dow

.so.tha

t

.the.use

r

.can.cor

rect

.the.sou

rce

.cod

e

.in.the.ori

ginal

.win

dow

.and.res

ubmit

.it.if.

nec

essary

.(F

igure

.64

.4).
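The chapter does not show the server-side code, but the batch cycle it describes (save the pasted source, run the selected compiler, return the messages wrapped in HTML) can be sketched as below. The function name, the temporary-file layout, and the 60-second limit are assumptions for illustration.

```python
import html
import os
import subprocess
import tempfile
from typing import List


def compile_to_html(source: str, compiler_cmd: List[str], suffix: str) -> str:
    """Compile pasted source code in batch mode and wrap the messages in HTML.

    compiler_cmd is the command line without the file name, for example
    ["gcc", "-Wall", "-c"]; suffix is the file extension such as ".c".
    """
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "submitted" + suffix)
        with open(path, "w", encoding="utf-8") as f:
            f.write(source)
        # Run the compiler non-interactively; a job still running after 60 s
        # is killed and subprocess.TimeoutExpired is raised.
        result = subprocess.run(
            compiler_cmd + [path],
            cwd=workdir,
            capture_output=True,
            text=True,
            timeout=60,
        )
    status = "OK" if result.returncode == 0 else "FAILED"
    # Escape the messages so characters such as < and & display literally.
    messages = html.escape(result.stdout + result.stderr)
    return (
        "<html><head><title>Compilation result</title></head><body>"
        f"<h2>Status: {status}</h2><pre>{messages}</pre>"
        "</body></html>"
    )
```

On a server with GCC installed, `compile_to_html(code, ["gcc", "-Wall", "-c"], ".c")` would return the result page; opening it in a second window can be left to the HTML form, for example with `target="_blank"`. Because the messages from every vendor land in the same `<pre>` block, they can be compared side by side.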

64.3.3 SPICE-Based Circuit Analysis Using Web Pages

A common problem faced by many electronic engineers in industry is that their design tools are often spread across several different platforms, such as UNIX, DOS, Windows 95, Windows NT, or Macintosh. Another limitation is that the required design software must be installed, and a license purchased, for each computer where the software is used. With a network-based approach, only one user interface, handled by a network browser, would be required. Furthermore, instead of purchasing a software license for each computer, users could access electronic design automation (EDA) tools on a pay-per-use basis [WMR01].

FIGURE 64.4 The result page.

Network programming uses distributed resources. Part of the computation is done on the server and another part on the client machine, and certain information must be sent frequently in both directions between client and server. It would be attractive to follow the Java applet concept and have most of the computation done on the client machine. This approach, however, is not feasible for three major reasons:

• EDA programs are usually very large and thus impractical to send in their entirety over the network as applets.
• Software developers would be giving away their software without the ability to control its usage.
• Java applets used online and on demand are slower than regular software.

e

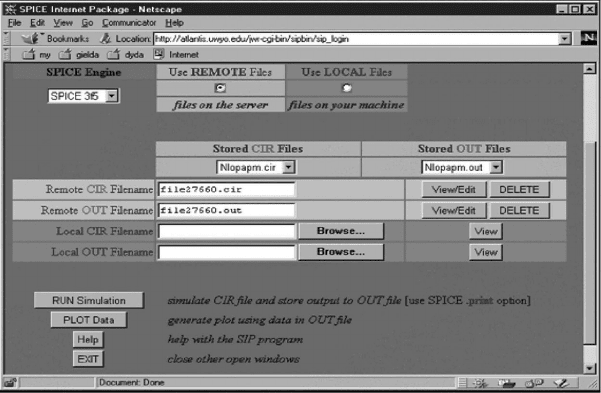

.Spic

e

.imp

lementation

.use

d

.in.thi

s

.pre

sentation

.is.jus

t

.one.exa

mple

.of.a.net

worked

.app

lication.

.

An.app

lication

.cal

led

.the.Spic

e

.Int

ernet

.Pac

kage

.has.bee

n

.dev

eloped

.for.use.thr

ough

.the.Int

ernet

.and.

Int

ranet

.net

works.

.e.SIP.pro

vides

.an.ope

rating

.sys

tem

.ind

ependent

.int

erface,

.whi

ch

.all

ows

.Spic

e

.

simu

lation

.and.ana

lysis

.to.be.per

formed

.fro

m

.any.com

puter

.tha

t

.has.a.web.bro

wser

.on.the.Int

ernet

.

or.Int

ranet.

.e.SIP.has.a.use

r-friendly

.GUI.(Gr

aphical

.Use

r

.Int

erface)

.and.fea

tures

.inc

lude

.pas

sword

.

pro

tection

.and.use

r

.acc

ounts,

.loc

al

.or.rem

ote

.le

s

.for.simu

lation,

.edi

ting

.of.cir

cuit

.le

s

.whi

le

.vie

wing

.

simu

lation

.re

sults,

.an

d

.an

alysis

.of.sim

ulated

.da

ta

.in.th

e

.fo

rm

.of.im

ages

.or.fo

rmatted

.te

xt.
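The chapter lists password protection and user accounts without saying how they are implemented. One common technique, assumed here rather than taken from SIP, is to store a salted PBKDF2 hash per user and compare keys in constant time; the `ACCOUNTS` store and the iteration count are hypothetical choices.

```python
import hashlib
import hmac
import os
from typing import Dict, Optional, Tuple

ITERATIONS = 200_000  # assumed PBKDF2 work factor; tune to the server's speed

# Hypothetical in-memory account store: user name -> (salt, derived key).
ACCOUNTS: Dict[str, Tuple[bytes, bytes]] = {}


def derive_key(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, key); a fresh random salt is generated when none is given."""
    if salt is None:
        salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def create_account(user: str, password: str) -> None:
    """Store only the salt and the derived key, never the password itself."""
    ACCOUNTS[user] = derive_key(password)


def login(user: str, password: str) -> bool:
    """Check a login attempt, comparing keys in constant time."""
    record = ACCOUNTS.get(user)
    if record is None:
        return False
    salt, stored_key = record
    _, key = derive_key(password, salt)
    return hmac.compare_digest(key, stored_key)
```

Storing only salted hashes means a leaked account file does not directly reveal users' passwords.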

In the case of the Spice Internet Package, sending the Spice engine through the network every time it was requested would be impractical, because it would be extremely slow. Java technology could, however, be used for functions such as generating and manipulating graphs and implementing the GUI on the client side. The SIP program currently incorporates CGI, PERL, HTML, and JavaScript. A unique feature of SIP compared with other Spice simulators is that it is operating system independent. Anyone with access to the Internet and a web browser, such as Mozilla Firefox or MS Internet Explorer, can run a Spice simulation and view the results graphically from anywhere in the world using any operating system (Figure 64.5).
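The request-per-activity pattern behind a CGI front end like SIP's can be illustrated with a small dispatcher. SIP itself is written in PERL; this Python version is only a sketch, and the `action` parameter, the handler names, and the page contents are hypothetical. Each request yields a freshly generated HTML page, and user-supplied text is escaped before being echoed back.

```python
import html
from typing import Callable, Dict, List
from urllib.parse import parse_qs

Params = Dict[str, List[str]]


def menu_page(params: Params) -> str:
    """Default page listing the available activities."""
    return ('<h2>SIP main menu</h2>'
            '<a href="?action=edit">Edit circuit</a> '
            '<a href="?action=report">Simulation report</a>')


def edit_page(params: Params) -> str:
    """Text editor page for a circuit file (the file name is user input)."""
    name = html.escape(params.get("file", ["untitled.cir"])[0])
    return (f"<h2>Editing {name}</h2>"
            '<form method="post"><textarea name="netlist" rows="20" cols="80">'
            '</textarea><input type="submit" value="Simulate"></form>')


def report_page(params: Params) -> str:
    """Placeholder for the simulation report produced by the server."""
    return "<h2>Simulation report</h2><pre>(report text)</pre>"


HANDLERS: Dict[str, Callable[[Params], str]] = {
    "menu": menu_page,
    "edit": edit_page,
    "report": report_page,
}


def handle_request(query_string: str) -> str:
    """Build a complete CGI response for one browser request."""
    params = parse_qs(query_string)
    action = params.get("action", ["menu"])[0]
    body = HANDLERS.get(action, menu_page)(params)
    # A CGI response is a header block, a blank line, then the document.
    return "Content-Type: text/html\r\n\r\n<html><body>" + body + "</body></html>"
```

Inside an actual CGI script, the web server supplies the query in the `QUERY_STRING` environment variable, so the response could be produced with `print(handle_request(os.environ.get("QUERY_STRING", "")), end="")`.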

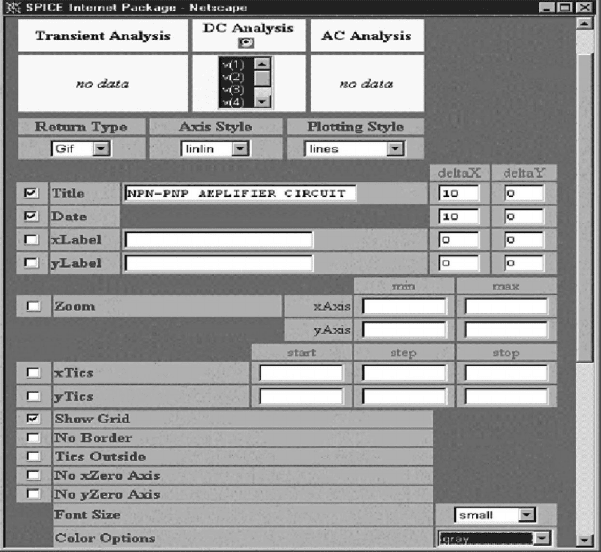

The server is configured to accept requests from web browsers through network connections. It processes each request for Spice simulation or analysis and returns the results to the requesting web browser as an HTML document. The heart of the software is a server-located PERL script. This script is executed first when the user logs in to SIP. Then, each time the user selects an activity, a new dialog box in the form of an on-the-fly generated, JavaScript-enhanced HTML web page is sent back. Such pages may contain a text editor, a simulation report, a graphic postprocessor menu, or a graphic image of plotted results. To complete some tasks, the PERL script may run additional programs installed only on the server, such as the main CAD program (Berkeley Spice); GnuPlot, which generates plots; and some utility programs (netpbm) that convert plotter files into standard image formats recognized by all graphical web browsers.
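The server-side tool chain described above can be sketched as an ordered list of stages. The command names `spice3`, `gnuplot`, and `pnmtopng` belong to Berkeley Spice3, GnuPlot, and netpbm, but the exact arguments, the file naming, and the generated GnuPlot script (`<stem>.gp`) are assumptions; the chapter's PERL implementation is not shown. The runner is passed in as a parameter so that the sequence can be checked without the tools installed.

```python
import subprocess
from typing import Callable, List, Optional

# A runner executes one command, optionally sending stdout to a file,
# and returns the exit status. Injecting it lets the pipeline be tested offline.
Runner = Callable[[List[str], Optional[str]], int]


def default_runner(cmd: List[str], stdout_path: Optional[str]) -> int:
    if stdout_path is None:
        return subprocess.run(cmd, timeout=300).returncode
    with open(stdout_path, "wb") as out:
        return subprocess.run(cmd, stdout=out, timeout=300).returncode


def simulate_and_plot(netlist: str, runner: Runner = default_runner) -> str:
    """Run the server-side stages in order and return the image file name."""
    stem = netlist.rsplit(".", 1)[0]
    stages = [
        # 1. Berkeley Spice in batch mode; the listing (e.g. .print tables) goes to a file.
        (["spice3", "-b", netlist], stem + ".out"),
        # 2. GnuPlot with a hypothetical generated script that reads stem.out and
        #    writes stem.ppm (for example via gnuplot's pbm terminal).
        (["gnuplot", stem + ".gp"], None),
        # 3. netpbm converts the plot to PNG, which graphical browsers display.
        (["pnmtopng", stem + ".ppm"], stem + ".png"),
    ]
    for cmd, stdout_path in stages:
        if runner(cmd, stdout_path) != 0:
            # Stop at the first failure so the error page can name the stage.
            raise RuntimeError("stage failed: " + " ".join(cmd))
    return stem + ".png"
```

Stopping at the first failing stage keeps the server from plotting stale or partial data and lets the returned page tell the user which tool reported the error.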

FIGURE 64.5 Graphical user interface for SIP package.


SIP is a good example for illustrating network traffic considerations. The amount of data produced by a single simulation may vary significantly, from a few hundred bytes to a few hundred kilobytes, depending on the number of simulation steps requested. For large data files, it is better to generate graphical images of the plots on the server and send only the images to the user. However, users frequently inspect the obtained results several times, for example, by changing the range or the variables to display. When many requests for different plots of the same data are expected, it could be better to send the data once, together with a custom Java applet that can display the same information in many different forms without further communication with the server.
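The trade-off above reduces to simple arithmetic: sending a new image for each of n plot requests costs about n times the image size, while sending the data once with an applet costs the data size plus the applet size. The sketch below encodes that comparison; it ignores server CPU time and latency, and all sizes in the example are hypothetical.

```python
def cheaper_delivery(data_bytes: int, image_bytes: int,
                     applet_bytes: int, expected_views: int) -> str:
    """Pick the strategy that sends fewer bytes over the network in total."""
    images_total = expected_views * image_bytes   # a new image for every plot request
    applet_total = data_bytes + applet_bytes      # raw data and applet sent only once
    return "images" if images_total <= applet_total else "data+applet"


def break_even_views(data_bytes: int, image_bytes: int, applet_bytes: int) -> int:
    """Largest number of views for which sending images is still no worse."""
    return (data_bytes + applet_bytes) // image_bytes
```

For example, with 300,000 bytes of simulation data, 15,000-byte plot images, and a 60,000-byte applet, images stay cheaper up to 24 views (24 × 15,000 = 360,000 bytes, equal to 300,000 + 60,000); from the 25th view on, sending the data and applet once transfers fewer bytes.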

Several features make the Spice Internet Package a desirable program for computer-aided engineering and design. Only one copy of the Spice engine needs to be installed and configured. One machine acts as the server, and other machines can access the Spice engine simultaneously through network connections. Remote access to SIP allows users to run Spice simulations from any computer on the network, whether at home or in another office, building, or town. Also, the Spice engine currently used is Spice3f5 from Berkeley, which allows an unlimited number of transistors, unlike the various “student versions” of Spice programs that are available (Figure 64.6).
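The chapter does not describe how the single server schedules many simultaneous users. One plausible arrangement, assumed here, is a bounded worker pool in which each job runs in a private scratch directory so that users' circuit and output files never collide. `MAX_CONCURRENT_RUNS` and the `simulate` callback are hypothetical; in practice the callback would invoke the installed Spice engine.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

MAX_CONCURRENT_RUNS = 4  # assumed cap so simultaneous users cannot overload the server

# A simulator receives a private working directory and a netlist and returns a result.
Simulator = Callable[[str, str], str]


def run_private(user: str, netlist: str, simulate: Simulator) -> str:
    """Run one job in its own scratch directory so users' files never collide."""
    safe_user = "".join(c for c in user if c.isalnum()) or "anon"
    with tempfile.TemporaryDirectory(prefix=f"sip-{safe_user}-") as workdir:
        return simulate(workdir, netlist)


def serve_requests(requests: List[Tuple[str, str]], simulate: Simulator) -> List[str]:
    """Share one installed engine among many users, at most N runs at a time."""
    with ThreadPoolExecutor(max_workers=MAX_CONCURRENT_RUNS) as pool:
        futures = [pool.submit(run_private, user, netlist, simulate)
                   for user, netlist in requests]
        # Results come back in request order, whatever order the jobs finished in.
        return [f.result() for f in futures]
```

Capping the pool size trades some waiting time for predictable server load, which matters when every client shares the same single installation.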

Computer networks are also used in systems for controlling objects such as robots, in database management, etc. [MW01,WM01,PYW10]. A web server is used to provide the client application to the operator. The client uses a custom TCP/IP-based protocol to connect to the server, which provides an interface to the specific robotic manipulators. Sensors and video cameras provide feedback to the client. Many robotic manipulators may be connected to the server at a time through either serial or parallel ports of the computer. In the case of autonomous robots, the server passes on the commands addressed to the robot. In the case of a simple robot, the server runs a separate process that interfaces to the robot. Data monitoring and control through a computer network or some other proprietary network is no longer prohibitively

FIGURE 64.6 Upper part of the plotting configuration screen.
