Stefanica D. A Primer for the Mathematics of Financial Engineering


84 CHAPTER 3. PROBABILITY. BLACK-SCHOLES FORMULA.

(iii) if $A_k \in \mathcal{F}$, $k = 1:\infty$, then $\bigcup_{k=1}^{\infty} A_k \in \mathcal{F}$.

The set $S$ represents the sample space, any element of $S$ is a possible outcome, and a set $A \in \mathcal{F}$ is an event¹, i.e., a collection of possible outcomes.

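A probability function $P : \mathcal{F} \to [0, 1]$ defined on $\mathcal{F}$ is a nonnegative function such that $P(S) = 1$ and

$$P\left(\bigcup_{k=1}^{\infty} A_k\right) = \sum_{k=1}^{\infty} P(A_k), \quad \forall\, A_k \in \mathcal{F},\ k = 1:\infty, \text{ with } A_i \cap A_j = \emptyset,\ \forall\, i \neq j.$$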

A function $X : S \to \mathbb{R}$ is called a random variable if $\{s \in S \text{ such that } X(s) \le t\} \in \mathcal{F}$, $\forall\, t \in \mathbb{R}$.

The cumulative distribution function $F : \mathbb{R} \to [0, 1]$ of the random variable $X$ is defined as

$$F(t) = P(X \le t). \tag{3.5}$$

For any random variable $X$ considered here, we assume² that $X$ has a probability density function $f(x)$, i.e., we assume that there exists a function $f : \mathbb{R} \to \mathbb{R}$ such that

$$P(a \le X \le b) = \int_a^b f(x)\,dx. \tag{3.6}$$

For an integrable function $f(x)$ to be the probability density function of a random variable it is necessary and sufficient that

$$f(x) \ge 0, \ \text{for all } x \in \mathbb{R}, \quad \text{and} \quad \int_{-\infty}^{\infty} f(x)\,dx = 1. \tag{3.7}$$

Lemma 3.2. Let $f(x)$ be the probability density function of the random variable $X$. Then the cumulative distribution function of $X$ can be written as

$$F(t) = \int_{-\infty}^{t} f(x)\,dx. \tag{3.8}$$

Definition 3.2. Let $f(x)$ be the probability density function of the random variable $X$. The expected value $E[X]$ of $X$ (also called the mean of $X$) is defined as

$$E[X] = \int_{-\infty}^{\infty} x f(x)\,dx. \tag{3.9}$$

¹ A family of subsets $\mathcal{F}$ of $S$ satisfying the properties (i)-(iii) is called a sigma-algebra of $S$. We introduce the concepts of continuous probability without formally discussing measure theory topics such as sigma-algebras and measurable functions. For more details on measure theory, we refer the reader to Royden [22] or Rudin [23].

² It can be shown that the random variable $X$ has a probability density function if and only if the cumulative distribution function $F(t)$ of $X$ is an absolutely continuous function.

3.2. CONTINUOUS PROBABILITY CONCEPTS 85

The expected value of random variables is a linear operator:

Lemma 3.3. Let $X$ and $Y$ be two random variables over the same probability space. Then

$$E[X + Y] = E[X] + E[Y]; \tag{3.10}$$

$$E[cX] = c\,E[X], \quad \forall\, c \in \mathbb{R}. \tag{3.11}$$

The following result will be used throughout this chapter, and is presented without proof:

Lemma 3.4. Let $h : \mathbb{R} \to \mathbb{R}$ be a piecewise continuous function, and let $X : S \to \mathbb{R}$ be a random variable with probability density function $f(x)$ such that $\int_{\mathbb{R}} |h(x)| f(x)\,dx < \infty$. Then $h(X) : S \to \mathbb{R}$ is a random variable and the expected value of $h(X)$ is

$$E[h(X)] = \int_{-\infty}^{\infty} h(x) f(x)\,dx. \tag{3.12}$$

3.2.1 Variance, covariance, and correlation

The variance and standard deviation of a random variable offer important information about its distribution.

Definition 3.3. Let $f(x)$ be the probability density function of the random variable $X$, and assume that $\int_{\mathbb{R}} x^2 f(x)\,dx < \infty$. Let $m = E[X]$ denote the expected value of $X$. The variance $\mathrm{var}(X)$ of $X$ is defined as

$$\mathrm{var}(X) = E\left[(X - E[X])^2\right] = \int_{-\infty}^{\infty} (x - m)^2 f(x)\,dx. \tag{3.13}$$

The standard deviation $\sigma(X)$ of $X$ is defined as

$$\sigma(X) = \sqrt{\mathrm{var}(X)}. \tag{3.14}$$

Therefore, $\mathrm{var}(X) = (\sigma(X))^2 = \sigma^2(X)$.

From (3.13) and (3.14), it is easy to see that

$$\mathrm{var}(cX) = c^2\,\mathrm{var}(X), \quad \forall\, c \in \mathbb{R}; \tag{3.15}$$

$$\sigma(cX) = |c|\,\sigma(X), \quad \forall\, c \in \mathbb{R}. \tag{3.16}$$

The next result corresponds to that of Lemma 3.1, and its proof follows along the same lines:


Lemma 3.5. If $X$ is a random variable, then

$$\mathrm{var}(X) = E[X^2] - (E[X])^2.$$
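As a numerical sketch (not part of the original text), definitions (3.9) and (3.13) and Lemma 3.5 can be compared for the uniform random variable on $[0, 1]$, whose density is $f(x) = 1$ on $[0, 1]$ and $0$ elsewhere. The integrals are approximated with a composite trapezoidal rule; the helper names `trapezoid` and `uniform_pdf` are illustrative. Both variance computations should be close to the exact value $1/12$.

```python
def trapezoid(g, lo, hi, n=20000):
    """Composite trapezoidal rule for the integral of g over [lo, hi]."""
    step = (hi - lo) / n
    total = 0.5 * (g(lo) + g(hi))
    for k in range(1, n):
        total += g(lo + k * step)
    return total * step


def uniform_pdf(x):
    """Density of the uniform random variable on [0, 1]."""
    return 1.0 if 0.0 <= x <= 1.0 else 0.0


# The density vanishes outside [0, 1], so integrating over [0, 1]
# gives the same result as integrating over the whole real line.
mean = trapezoid(lambda x: x * uniform_pdf(x), 0.0, 1.0)                        # E[X], (3.9)
second_moment = trapezoid(lambda x: x**2 * uniform_pdf(x), 0.0, 1.0)            # E[X^2], Lemma 3.4
var_definition = trapezoid(lambda x: (x - mean)**2 * uniform_pdf(x), 0.0, 1.0)  # (3.13)
var_lemma = second_moment - mean**2                                             # Lemma 3.5

print(mean, var_definition, var_lemma)  # close to 0.5, 1/12, 1/12
```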

Definition 3.4. Let $X$ and $Y$ be two random variables over the same probability space. The covariance $\mathrm{cov}(X, Y)$ of $X$ and $Y$ is defined as

$$\mathrm{cov}(X, Y) = E\left[(X - E[X])(Y - E[Y])\right]. \tag{3.17}$$

The correlation $\mathrm{corr}(X, Y)$ between $X$ and $Y$ is equal to the covariance of $X$ and $Y$ normalized with respect to the standard deviations of $X$ and $Y$, i.e.,

$$\mathrm{corr}(X, Y) = \frac{\mathrm{cov}(X, Y)}{\sigma(X)\,\sigma(Y)}, \tag{3.18}$$

where $\sigma(X)$ and $\sigma(Y)$ are the standard deviations of $X$ and $Y$, respectively.

Lemma 3.6. Let $X$ and $Y$ be two random variables over the same probability space. Then

$$\mathrm{cov}(X, Y) = E[XY] - E[X]E[Y]. \tag{3.19}$$

Proof. Using (3.10) and (3.11) repeatedly, we find that

$$\begin{aligned} \mathrm{cov}(X, Y) &= E\left[(X - E[X])(Y - E[Y])\right] \\ &= E\left[XY - X E[Y] - Y E[X] + E[X]E[Y]\right] \\ &= E[XY] - E\left[X E[Y]\right] - E\left[Y E[X]\right] + E[X]E[Y]. \end{aligned} \tag{3.20}$$

Since $E[X]$ and $E[Y]$ are constants, we conclude from (3.11) that $E[X E[Y]] = E[Y]E[X]$ and $E[Y E[X]] = E[X]E[Y]$. Therefore, formula (3.20) becomes

$$\mathrm{cov}(X, Y) = E[XY] - 2E[X]E[Y] + E[X]E[Y] = E[XY] - E[X]E[Y]. \qquad \square$$

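Let $X$, $Y$, and $U$ be random variables over the same probability space. The following properties are easy to establish and show that the covariance of two random variables is a symmetric bilinear operator:

$$\mathrm{cov}(X, Y) = \mathrm{cov}(Y, X);$$

$$\mathrm{cov}(X + U, Y) = \mathrm{cov}(X, Y) + \mathrm{cov}(U, Y);$$

$$\mathrm{cov}(X, Y + U) = \mathrm{cov}(X, Y) + \mathrm{cov}(X, U);$$

$$\mathrm{cov}(cX, Y) = \mathrm{cov}(X, cY) = c\,\mathrm{cov}(X, Y), \quad \forall\, c \in \mathbb{R}; \tag{3.21}$$

$$\mathrm{cov}(c_1 X, c_2 Y) = c_1 c_2\,\mathrm{cov}(X, Y), \quad \forall\, c_1, c_2 \in \mathbb{R}. \tag{3.22}$$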
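The identity (3.19) and the bilinearity property (3.22) can be checked on data, under an assumption made only for this illustration: the probability space is a finite sample in which each of the $n$ observed pairs has probability $1/n$. For this empirical distribution both identities hold exactly, up to rounding. The simulated data and the helper names `mean` and `cov` are hypothetical.

```python
import random


def mean(values):
    return sum(values) / len(values)


def cov(xs, ys):
    """Covariance (3.17) for the empirical distribution that assigns
    probability 1/n to each observed pair (xs[i], ys[i])."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])


random.seed(0)
xs = [random.gauss(0.0, 1.0) for _ in range(1000)]
ys = [0.5 * x + random.gauss(0.0, 1.0) for x in xs]   # hypothetical data

# Lemma 3.6: cov(X, Y) = E[XY] - E[X]E[Y]
lhs = cov(xs, ys)
rhs = mean([x * y for x, y in zip(xs, ys)]) - mean(xs) * mean(ys)

# (3.22): cov(c1 X, c2 Y) = c1 c2 cov(X, Y)
c1, c2 = 3.0, -2.0
scaled = cov([c1 * x for x in xs], [c2 * y for y in ys])

print(lhs, rhs)
print(scaled, c1 * c2 * lhs)
```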


Lemma 3.7. Let $X$ and $Y$ be two random variables over the same probability space. Then,

$$\mathrm{var}(X + Y) = \mathrm{var}(X) + 2\,\mathrm{cov}(X, Y) + \mathrm{var}(Y), \tag{3.23}$$

or, equivalently,

$$\mathrm{var}(X + Y) = \sigma^2(X) + 2\sigma(X)\sigma(Y)\,\mathrm{corr}(X, Y) + \sigma^2(Y), \tag{3.24}$$

where $\sigma(X)$ and $\sigma(Y)$ are the standard deviations of $X$ and $Y$, respectively.

Proof. Formula (3.23) is derived from definitions (3.13) and (3.17), by using the additivity of the expected value (3.10), as follows:

$$\begin{aligned} \mathrm{var}(X + Y) &= E\left[\left((X + Y) - E[X + Y]\right)^2\right] = E\left[\left((X - E[X]) + (Y - E[Y])\right)^2\right] \\ &= E\left[(X - E[X])^2\right] + 2E\left[(X - E[X])(Y - E[Y])\right] + E\left[(Y - E[Y])^2\right] \\ &= \mathrm{var}(X) + 2\,\mathrm{cov}(X, Y) + \mathrm{var}(Y). \end{aligned}$$

Formula (3.24) is a direct consequence of (3.18) and (3.23). □

The relevant information contained in the covariance of two random variables is related to whether they are positively or negatively correlated, i.e., whether $\mathrm{cov}(X, Y) > 0$ or $\mathrm{cov}(X, Y) < 0$.

The correlation of the two variables contains the same information as the covariance, in terms of its sign, but its size is also relevant, since it is scaled to adjust for multiplication by constants, i.e.,

$$\mathrm{corr}(c_1 X, c_2 Y) = \mathrm{sgn}(c_1 c_2)\,\mathrm{corr}(X, Y), \quad \forall\, c_1, c_2 \in \mathbb{R},\ c_1 c_2 \neq 0. \tag{3.25}$$

Here, the sign function $\mathrm{sgn}(c)$ is equal to $1$ if $c > 0$, and to $-1$ if $c < 0$.

To see this, recall from (3.16) that $\sigma(c_1 X) = |c_1|\,\sigma(X)$ and $\sigma(c_2 Y) = |c_2|\,\sigma(Y)$. Then, from (3.18) and using (3.22), it follows that

$$\mathrm{corr}(c_1 X, c_2 Y) = \frac{\mathrm{cov}(c_1 X, c_2 Y)}{\sigma(c_1 X)\,\sigma(c_2 Y)} = \frac{c_1 c_2\,\mathrm{cov}(X, Y)}{|c_1|\,|c_2|\,\sigma(X)\,\sigma(Y)} = \mathrm{sgn}(c_1 c_2)\,\mathrm{corr}(X, Y).$$

Lemma 3.8. Let $X$ and $Y$ be two random variables over the same probability space. Then

$$-1 \le \mathrm{corr}(X, Y) \le 1. \tag{3.26}$$


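Proof. Let $a \in \mathbb{R}$ be an arbitrary constant. From (3.23), (3.21), (3.15), and (3.18) we find that

$$\begin{aligned} \mathrm{var}(X + aY) &= \mathrm{var}(X) + 2\,\mathrm{cov}(X, aY) + \mathrm{var}(aY) \\ &= \mathrm{var}(X) + 2a\,\mathrm{cov}(X, Y) + a^2\,\mathrm{var}(Y) \\ &= \sigma^2(X) + 2a\,\sigma(X)\sigma(Y)\,\mathrm{corr}(X, Y) + a^2 \sigma^2(Y). \end{aligned}$$

Since $\mathrm{var}(X + aY) \ge 0$, we obtain that

$$a^2 \sigma^2(Y) + 2a\,\sigma(X)\sigma(Y)\,\mathrm{corr}(X, Y) + \sigma^2(X) \ge 0, \quad \forall\, a \in \mathbb{R}. \tag{3.27}$$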

Note that the left-hand side of (3.27) is a quadratic polynomial in $a$. The inequality (3.27) holds true for any real number $a$ if and only if this polynomial has either no real roots or one real double root, i.e., if its discriminant is nonpositive:

$$\left(2\sigma(X)\sigma(Y)\,\mathrm{corr}(X, Y)\right)^2 - 4\sigma^2(X)\sigma^2(Y) \le 0.$$

This is equivalent to

$$4\sigma^2(X)\sigma^2(Y)\left(\mathrm{corr}(X, Y)\right)^2 \le 4\sigma^2(X)\sigma^2(Y),$$

which happens if and only if $|\mathrm{corr}(X, Y)| \le 1$, which is equivalent to (3.26). □
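The scaling rule (3.25) and the bound (3.26) can also be observed on data. The sketch below is illustrative only: it assumes, as a convenience, the empirical distribution of a simulated (hypothetical) sample, with weight $1/n$ on each pair, and the helper names `cov` and `corr` are not from the text. Multiplying the two variables by constants with $c_1 c_2 < 0$ flips the sign of the correlation without changing its size.

```python
import math
import random


def mean(values):
    return sum(values) / len(values)


def cov(xs, ys):
    """Covariance (3.17) for the empirical distribution (weight 1/n per pair)."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])


def corr(xs, ys):
    """Correlation (3.18): covariance divided by both standard deviations."""
    return cov(xs, ys) / math.sqrt(cov(xs, xs) * cov(ys, ys))


random.seed(1)
xs = [random.uniform(-1.0, 1.0) for _ in range(500)]
ys = [x**3 + 0.1 * random.uniform(-1.0, 1.0) for x in xs]   # hypothetical data

rho = corr(xs, ys)
rho_scaled = corr([-4.0 * x for x in xs], [2.5 * y for y in ys])   # c1 c2 < 0
print(rho, rho_scaled)   # rho lies in [-1, 1] and rho_scaled equals -rho up to rounding
```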

The elegant technique used in the proof of Lemma 3.8 can also be applied, e.g., for showing that the inner product of two vectors is bounded from above by the product of the norms of the vectors³:

$$\left(\sum_{i=1}^{n} x_i y_i\right)^2 \le \left(\sum_{i=1}^{n} x_i^2\right)\left(\sum_{i=1}^{n} y_i^2\right), \quad \forall\, x_i, y_i \in \mathbb{R},\ i = 1:n. \tag{3.28}$$

The expected value and variance of a linear combination of $n$ random variables can be obtained by induction from the corresponding formulas (3.10-3.11) and (3.23-3.24) for two random variables.

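Lemma 3.9. Let $X_i$, $i = 1:n$, be random variables over the same probability space, and let $c_i \in \mathbb{R}$ be real numbers. Then,

$$E\left[\sum_{i=1}^{n} c_i X_i\right] = \sum_{i=1}^{n} c_i E[X_i]; \tag{3.29}$$

$$\mathrm{var}\left(\sum_{i=1}^{n} c_i X_i\right) = \sum_{i=1}^{n} c_i^2\,\mathrm{var}(X_i) + 2\sum_{1 \le i < j \le n} c_i c_j\,\mathrm{cov}(X_i, X_j). \tag{3.30}$$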

³ A proof for (3.28), also called the Cauchy-Schwarz inequality, can be given using the fact that $\sum_{i=1}^{n} (x_i + a y_i)^2 \ge 0$, $\forall\, a \in \mathbb{R}$.

3.3. THE STANDARD NORMAL VARIABLE 89

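If $\rho_{i,j} = \mathrm{corr}(X_i, X_j)$, $1 \le i < j \le n$, is the correlation between $X_i$ and $X_j$, and if $\sigma_i^2 = \mathrm{var}(X_i)$, $i = 1:n$, then (3.30) can also be written as

$$\mathrm{var}\left(\sum_{i=1}^{n} c_i X_i\right) = \sum_{i=1}^{n} c_i^2 \sigma_i^2 + 2\sum_{1 \le i < j \le n} c_i c_j \sigma_i \sigma_j\,\mathrm{corr}(X_i, X_j). \tag{3.31}$$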
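Formula (3.31) can be read as the variance of a portfolio with weights $c_i$ in assets whose returns are $X_i$. In the sketch below, `var_linear_combination` (a hypothetical helper) evaluates the right-hand side of (3.31). Its result is compared with the variance of the combined samples computed directly from (3.13). As before, the empirical distribution of simulated, hypothetical data is assumed, so the two numbers agree up to rounding.

```python
import itertools
import math
import random


def mean(values):
    return sum(values) / len(values)


def cov(xs, ys):
    """Covariance (3.17) for the empirical distribution (weight 1/n per observation)."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])


def var_linear_combination(c, samples):
    """Right-hand side of (3.31) for weights c and samples of X_1, ..., X_n."""
    n = len(c)
    sigma = [math.sqrt(cov(s, s)) for s in samples]
    total = sum(c[i]**2 * sigma[i]**2 for i in range(n))
    for i, j in itertools.combinations(range(n), 2):   # all pairs with i < j
        rho_ij = cov(samples[i], samples[j]) / (sigma[i] * sigma[j])
        total += 2 * c[i] * c[j] * sigma[i] * sigma[j] * rho_ij
    return total


random.seed(2)
common = [random.gauss(0.0, 1.0) for _ in range(2000)]
# three hypothetical, positively correlated variables
samples = [[f + random.gauss(0.0, s) for f in common] for s in (0.5, 1.0, 2.0)]
c = [0.2, 0.5, 0.3]

combo = [sum(ci * s[k] for ci, s in zip(c, samples)) for k in range(len(common))]
print(var_linear_combination(c, samples), cov(combo, combo))
```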

3.3 The standard normal variable

The standard normal variable, denoted by $Z$, is the random variable with probability density function

$$f(x) = \frac{1}{\sqrt{2\pi}}\, e^{-\frac{x^2}{2}}. \tag{3.32}$$

It is easy to see that $f(x) > 0$, $\forall\, x \in \mathbb{R}$. To conclude that $f(x)$ is indeed a density function, we would have to show that

$$\int_{-\infty}^{\infty} f(x)\,dx = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-\frac{x^2}{2}}\,dx = 1. \tag{3.33}$$

We postpone the proof of this fact until section 7.5 and assume for now that (3.33) holds true.

The following result will be needed in the proof of Lemma 3.10:

$$\frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} x^2 e^{-\frac{x^2}{2}}\,dx = 1. \tag{3.34}$$

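Note that $x^2 e^{-\frac{x^2}{2}}$ is an even function. From Lemma 0.1, it follows that

$$\int_{-\infty}^{\infty} x^2 e^{-\frac{x^2}{2}}\,dx = 2\int_{0}^{\infty} x^2 e^{-\frac{x^2}{2}}\,dx. \tag{3.35}$$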

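Note that $\left(-e^{-\frac{x^2}{2}}\right)' = x e^{-\frac{x^2}{2}}$. By integration by parts, we find that

$$\int_0^t x^2 e^{-\frac{x^2}{2}}\,dx = \int_0^t x \left(-e^{-\frac{x^2}{2}}\right)' dx = -t e^{-\frac{t^2}{2}} + \int_0^t e^{-\frac{x^2}{2}}\,dx. \tag{3.36}$$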


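From (3.35) and (3.36) it follows that

$$\int_{-\infty}^{\infty} x^2 e^{-\frac{x^2}{2}}\,dx = 2\lim_{t \to \infty} \left(-t e^{-\frac{t^2}{2}} + \int_0^t e^{-\frac{x^2}{2}}\,dx\right) = 2\lim_{t \to \infty} \int_0^t e^{-\frac{x^2}{2}}\,dx = 2\int_0^{\infty} e^{-\frac{x^2}{2}}\,dx, \tag{3.37}$$

since $\lim_{t \to \infty} t e^{-\frac{t^2}{2}} = 0$.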

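Recall from (3.33) that $\int_{-\infty}^{\infty} e^{-\frac{x^2}{2}}\,dx = \sqrt{2\pi}$. Since $e^{-\frac{x^2}{2}}$ is an even function, we obtain from Lemma 0.1 that

$$\int_0^{\infty} e^{-\frac{x^2}{2}}\,dx = \frac{1}{2}\int_{-\infty}^{\infty} e^{-\frac{x^2}{2}}\,dx = \frac{\sqrt{2\pi}}{2} = \sqrt{\frac{\pi}{2}}. \tag{3.38}$$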

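From (3.37) and (3.38), it follows that

$$\frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} x^2 e^{-\frac{x^2}{2}}\,dx = \frac{2}{\sqrt{2\pi}} \int_0^{\infty} e^{-\frac{x^2}{2}}\,dx = \frac{2}{\sqrt{2\pi}} \sqrt{\frac{\pi}{2}} = 1,$$

and therefore (3.34) holds true.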

Lemma 3.10. The standard normal variable has mean 0 and variance 1, i.e.,

$$E[Z] = 0; \tag{3.39}$$

$$\mathrm{var}(Z) = 1. \tag{3.40}$$

Proof. By definition (3.9), and using the fact that $x e^{-\frac{x^2}{2}}$ is an odd function and Lemma 0.2, we obtain that

$$E[Z] = \int_{-\infty}^{\infty} x f(x)\,dx = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} x e^{-\frac{x^2}{2}}\,dx = 0.$$

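To compute the variance of $Z$, note that, by (3.13) and since $E[Z] = 0$,

$$\mathrm{var}(Z) = \int_{-\infty}^{\infty} (x - 0)^2 f(x)\,dx = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} x^2 e^{-\frac{x^2}{2}}\,dx = 1,$$

where the last equality follows from (3.34). □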
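Lemma 3.10 and formulas (3.33)-(3.34) can be checked numerically. This is only a sanity check and not a substitute for the proof of (3.33) postponed to section 7.5. The sketch integrates $f(x)$, $x f(x)$, and $x^2 f(x)$ over $[-12, 12]$ with the trapezoidal rule. Truncating the real line there is an assumption of the sketch, justified by the tails of $e^{-x^2/2}$ being of order $e^{-72}$.

```python
import math


def std_normal_pdf(x):
    """Density (3.32) of the standard normal variable Z."""
    return math.exp(-x * x / 2.0) / math.sqrt(2.0 * math.pi)


def trapezoid(g, lo, hi, n=40000):
    """Composite trapezoidal rule for the integral of g over [lo, hi]."""
    step = (hi - lo) / n
    total = 0.5 * (g(lo) + g(hi))
    for k in range(1, n):
        total += g(lo + k * step)
    return total * step


# Tails beyond |x| = 12 are negligible and are ignored.
L = 12.0
total_mass = trapezoid(std_normal_pdf, -L, L)                       # (3.33)
mean_z = trapezoid(lambda x: x * std_normal_pdf(x), -L, L)          # (3.39)
var_z = trapezoid(lambda x: x * x * std_normal_pdf(x), -L, L)       # (3.34) and (3.40)
print(total_mass, mean_z, var_z)   # close to 1, 0, 1
```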

Lemma 3.11. If $Z$ is the standard normal variable, then $E[Z^2] = 1$.

Proof. From Lemma 3.5, it follows that $\mathrm{var}(Z) = E[Z^2] - (E[Z])^2$. Since $\mathrm{var}(Z) = 1$ and $E[Z] = 0$, see (3.39-3.40), we conclude that $E[Z^2] = 1$. □

Definition 3.5. Denote by $N(t)$ the cumulative distribution of the standard normal variable $Z$. Then,

$$N(t) = P(Z \le t) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{t} e^{-\frac{x^2}{2}}\,dx. \tag{3.41}$$

3.4. NORMAL RANDOM VARIABLES 91

Lemma 3.12. Let $Z$ be the standard normal variable. Then,

$$P(Z \ge a) = P(Z \le -a), \quad \forall\, a \in \mathbb{R}. \tag{3.42}$$

In other words, if $N(t)$ is the cumulative distribution of $Z$, then

$$1 - N(a) = N(-a), \quad \forall\, a \in \mathbb{R}. \tag{3.43}$$

Proof. By definition (3.6), and using (3.32), we find that

$$P(Z \ge a) = \frac{1}{\sqrt{2\pi}} \int_a^{\infty} e^{-\frac{x^2}{2}}\,dx.$$

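We use the substitution $x = -y$. Then $dx = -dy$ and the limits of integration change from $x = a$ to $y = -a$ and from $x = \infty$ to $y = -\infty$. Thus,

$$\begin{aligned} P(Z \ge a) &= \frac{1}{\sqrt{2\pi}} \int_a^{\infty} e^{-\frac{x^2}{2}}\,dx = \frac{1}{\sqrt{2\pi}} \int_{-a}^{-\infty} e^{-\frac{(-y)^2}{2}}\,(-dy) \\ &= -\frac{1}{\sqrt{2\pi}} \int_{-a}^{-\infty} e^{-\frac{y^2}{2}}\,dy = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{-a} e^{-\frac{y^2}{2}}\,dy = P(Z \le -a), \end{aligned}$$

and (3.42) is proven.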

To obtain (3.43) from (3.42), note that, by definition (3.41), $P(Z \ge a) = 1 - P(Z \le a) = 1 - N(a)$ (since $P(Z = a) = 0$) and $P(Z \le -a) = N(-a)$. □
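In code, $N(t)$ is usually evaluated through the error function, using the standard identity $N(t) = \frac{1}{2}\left(1 + \mathrm{erf}(t/\sqrt{2})\right)$. Python's `math.erf` provides erf; the helper name `norm_cdf` is illustrative. The sketch checks the symmetry (3.43) at a few points.

```python
import math


def norm_cdf(t):
    """N(t) from (3.41), written with the error function:
    N(t) = (1 + erf(t / sqrt(2))) / 2."""
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


for a in (-2.0, -0.5, 0.0, 0.7, 1.96):
    # (3.43): the last two columns should agree
    print(a, norm_cdf(a), 1.0 - norm_cdf(a), norm_cdf(-a))
```

For large negative $t$, $N(t)$ is tiny and the expression above loses relative accuracy. The equivalent form $N(t) = \frac{1}{2}\,\mathrm{erfc}(-t/\sqrt{2})$, available as `math.erfc`, avoids that cancellation.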

3.4 Normal random variables

Definition 3.6. The random variable $X$ is a normal variable if and only if $X = \mu + \sigma Z$, where $Z$ is the standard normal variable and $\mu, \sigma \in \mathbb{R}$.

Lemma 3.13. Let $X = \mu + \sigma Z$ be a normal variable. Then

$$E[X] = \mu; \tag{3.44}$$

$$\mathrm{var}(X) = \sigma^2; \tag{3.45}$$

$$\sigma(X) = |\sigma|. \tag{3.46}$$


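Proof. Using Lemma 3.3 and the fact that $E[Z] = 0$, it follows that

$$E[X] = E[\mu + \sigma Z] = \mu + \sigma E[Z] = \mu.$$

From (3.13), and using Lemma 3.3 and the fact that $E[Z^2] = 1$, cf. Lemma 3.11, we find that

$$\mathrm{var}(X) = E\left[(X - E[X])^2\right] = E\left[(\mu + \sigma Z - \mu)^2\right] = E[\sigma^2 Z^2] = \sigma^2 E[Z^2] = \sigma^2.$$

Then (3.46) follows from (3.14), since $\sqrt{\sigma^2} = |\sigma|$. □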

Note that the constant random variable $X = \mu$ is a degenerate normal variable with mean $\mu$ and standard deviation 0.

Lemma 3.14. Let $X = \mu + \sigma Z$ be a normal variable, with $\sigma \neq 0$, and denote by $h(x)$ the probability density function of $X$. Then,

$$h(x) = \frac{1}{|\sigma|\sqrt{2\pi}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right). \tag{3.48}$$

Proof. From definition (3.6), we know that

$$P(a \le X \le b) = \int_a^b h(y)\,dy, \quad \forall\, a < b. \tag{3.49}$$

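Assume that $\sigma > 0$. Since $X = \mu + \sigma Z$, we find that

$$P(a \le X \le b) = P(a \le \mu + \sigma Z \le b) = P\left(\frac{a - \mu}{\sigma} \le Z \le \frac{b - \mu}{\sigma}\right) = \frac{1}{\sqrt{2\pi}} \int_{(a-\mu)/\sigma}^{(b-\mu)/\sigma} \exp\left(-\frac{x^2}{2}\right) dx, \tag{3.50}$$

since $\frac{1}{\sqrt{2\pi}} e^{-\frac{x^2}{2}} = \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{x^2}{2}\right)$ is the density function of $Z$.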

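We make the substitution $x = \frac{y - \mu}{\sigma}$ in (3.50). The integration limits change from $x = \frac{a - \mu}{\sigma}$ to $y = a$ and from $x = \frac{b - \mu}{\sigma}$ to $y = b$. Also, $dx = \frac{dy}{\sigma}$. Therefore, we find from (3.50) that

$$P(a \le X \le b) = \frac{1}{\sigma\sqrt{2\pi}} \int_a^b \exp\left(-\frac{(y - \mu)^2}{2\sigma^2}\right) dy, \quad \forall\, a < b. \tag{3.51}$$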

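From (3.49) and (3.51), it follows that the density function $h(x)$ of $X$ is

$$h(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right) = \frac{1}{|\sigma|\sqrt{2\pi}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right),$$

since $\sigma > 0$.

If $\sigma < 0$, then (3.50) becomes

$$P(a \le X \le b) = P\left(\frac{b - \mu}{\sigma} \le Z \le \frac{a - \mu}{\sigma}\right) = \frac{1}{\sqrt{2\pi}} \int_{(b-\mu)/\sigma}^{(a-\mu)/\sigma} \exp\left(-\frac{x^2}{2}\right) dx.$$

The substitution $x = \frac{y - \mu}{\sigma}$ yields

$$P(a \le X \le b) = \frac{1}{\sigma\sqrt{2\pi}} \int_b^a \exp\left(-\frac{(y - \mu)^2}{2\sigma^2}\right) dy = -\frac{1}{\sigma\sqrt{2\pi}} \int_a^b \exp\left(-\frac{(y - \mu)^2}{2\sigma^2}\right) dy,$$

and therefore

$$h(x) = \frac{1}{|\sigma|\sqrt{2\pi}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right),$$

since $|\sigma| = -\sigma$ if $\sigma < 0$. □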

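Example: Let $X = \mu + \sigma Z$ be a normal variable, and let $Y = 2\mu - X$. Show that $X$ and $Y$ have the same probability densities.

Answer: Note that $Y = 2\mu - X = \mu - \sigma Z$ is a normal variable. Let $h_X(x)$ and $h_Y(x)$ be the probability density functions of $X$ and $Y$, respectively. From (3.48), it follows that

$$h_Y(x) = \frac{1}{|-\sigma|\sqrt{2\pi}} \exp\left(-\frac{(x - \mu)^2}{2(-\sigma)^2}\right) = \frac{1}{|\sigma|\sqrt{2\pi}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right) = h_X(x). \qquad \square$$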
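A short sketch of Lemma 3.14 and of the example above follows. It integrates the density (3.48) over $[a, b]$ and compares the result with $N\left(\frac{b - \mu}{\sigma}\right) - N\left(\frac{a - \mu}{\sigma}\right)$, which is what (3.50) gives for $\sigma > 0$. It also confirms that $\sigma$ and $-\sigma$ produce the same density. The parameter values and helper names are hypothetical.

```python
import math


def normal_pdf(x, mu, sigma):
    """Density (3.48) of X = mu + sigma Z, for any sigma != 0."""
    return math.exp(-(x - mu)**2 / (2.0 * sigma**2)) / (abs(sigma) * math.sqrt(2.0 * math.pi))


def norm_cdf(t):
    """N(t) for the standard normal variable, via math.erf."""
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def prob_between(a, b, mu, sigma, n=20000):
    """P(a <= X <= b) as in (3.49), integrating the density with the trapezoidal rule."""
    step = (b - a) / n
    total = 0.5 * (normal_pdf(a, mu, sigma) + normal_pdf(b, mu, sigma))
    for k in range(1, n):
        total += normal_pdf(a + k * step, mu, sigma)
    return total * step


mu, sigma = 1.0, 2.0     # hypothetical parameters
a, b = -0.5, 3.0
# (3.50), sigma > 0: P(a <= X <= b) = N((b - mu)/sigma) - N((a - mu)/sigma)
print(prob_between(a, b, mu, sigma), norm_cdf((b - mu) / sigma) - norm_cdf((a - mu) / sigma))
# Example: X = mu + sigma Z and Y = 2 mu - X = mu - sigma Z share one density
print(normal_pdf(0.3, mu, sigma), normal_pdf(0.3, mu, -sigma))
```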

We note that the sum of two independent normal variables (and, in general, the sum of any finite number of independent normal variables) is also a normal variable. This is not necessarily the case if the normal variables are not independent; see section 4.3 for more details.

FINANCIAL APPLICATIONS

The Black-Scholes formula.


The Greeks of plain vanilla European call and put options.

Implied volatility.

The concept of hedging.

Δ-hedging and Γ-hedging for options.

Implementation of the Black-Scholes formula.

3.5 The Black-Scholes formula

The Black-Scholes formulas give the price of plain vanilla European call and put options, under the assumption that the price of the underlying asset⁴ has lognormal distribution. A detailed discussion of the lognormal assumption can be found in section 4.6.

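To introduce the Black-Scholes formula, it is enough to assume that, for any values of $t_1$ and $t_2$ with $t_1 < t_2$, the random variable $\frac{S(t_2)}{S(t_1)}$ is lognormal with parameters $\left(\mu - q - \frac{\sigma^2}{2}\right)(t_2 - t_1)$ and $\sigma^2 (t_2 - t_1)$, i.e.,

$$\ln\left(\frac{S(t_2)}{S(t_1)}\right) = \left(\mu - q - \frac{\sigma^2}{2}\right)(t_2 - t_1) + \sigma\sqrt{t_2 - t_1}\,Z, \tag{3.52}$$

where $Z$ is the standard normal variable.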

The constants $\mu$ and $\sigma$ are called the drift and the volatility of the price $S(t)$ of the underlying asset and represent the expected value and the standard deviation of the returns of the asset; $q$ is the continuous rate at which the asset pays dividends; see section 4.6 for more details.

In the Black-Scholes formulas, the price of a plain vanilla European option depends on the following parameters:

• $S$, the spot price of the underlying asset at time $t$;
• $T$, the maturity of the option; note that the time to maturity is $T - t$;
• $K$, the strike price of the option;
• $r$, the risk-free interest rate, assumed to be constant over the life of the option, i.e., between $t$ and $T$;
• $\sigma$, the volatility of the underlying asset, i.e., the standard deviation of the returns of the asset;
• $q$, the dividend rate of the underlying asset; the asset is assumed to pay dividends at a continuous rate.

⁴ Examples of underlying assets are stocks, futures, indices, etc.

3.5. THE BLACK-SCHOLES FORMULA 95

The Black-Scholes Formulas for European Call and Put Options: Assume that the price of the underlying asset has lognormal distribution and volatility $\sigma$, that the asset pays dividends continuously at the rate $q$, and that the risk-free interest rate is constant and equal to $r$. Let $C(S, t)$ be the value at time $t$ of a call option with strike $K$ and maturity $T$, and let $P(S, t)$ be the value at time $t$ of a put option with strike $K$ and maturity $T$. Then,

$$C(S, t) = S e^{-q(T-t)} N(d_1) - K e^{-r(T-t)} N(d_2); \tag{3.53}$$

$$P(S, t) = K e^{-r(T-t)} N(-d_2) - S e^{-q(T-t)} N(-d_1), \tag{3.54}$$

where

$$d_1 = \frac{\ln\left(\frac{S}{K}\right) + \left(r - q + \frac{\sigma^2}{2}\right)(T - t)}{\sigma\sqrt{T - t}}; \tag{3.55}$$

$$d_2 = d_1 - \sigma\sqrt{T - t} = \frac{\ln\left(\frac{S}{K}\right) + \left(r - q - \frac{\sigma^2}{2}\right)(T - t)}{\sigma\sqrt{T - t}}. \tag{3.56}$$

Here, $N(z)$ is the cumulative distribution of the standard normal variable:

$$N(z) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{z} e^{-\frac{x^2}{2}}\,dx.$$
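A minimal Python sketch of (3.53)-(3.56) follows. It assumes $T - t > 0$ and $\sigma > 0$, so that $d_1$ and $d_2$ are defined. $N(z)$ is evaluated through the identity $N(z) = \frac{1}{2}\left(1 + \mathrm{erf}(z/\sqrt{2})\right)$ with `math.erf`. The function names and the sample inputs are hypothetical, and `tau` stands for the time to maturity $T - t$. The last line checks the Put-Call parity relation (1.47).

```python
import math


def norm_cdf(z):
    """N(z), cumulative distribution of the standard normal variable."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def d1_d2(S, K, tau, sigma, r, q):
    """d1 and d2 from (3.55)-(3.56), with tau = T - t > 0."""
    d1 = (math.log(S / K) + (r - q + sigma**2 / 2.0) * tau) / (sigma * math.sqrt(tau))
    return d1, d1 - sigma * math.sqrt(tau)


def bs_call(S, K, tau, sigma, r, q=0.0):
    """Black-Scholes value (3.53) of a European call."""
    d1, d2 = d1_d2(S, K, tau, sigma, r, q)
    return S * math.exp(-q * tau) * norm_cdf(d1) - K * math.exp(-r * tau) * norm_cdf(d2)


def bs_put(S, K, tau, sigma, r, q=0.0):
    """Black-Scholes value (3.54) of a European put."""
    d1, d2 = d1_d2(S, K, tau, sigma, r, q)
    return K * math.exp(-r * tau) * norm_cdf(-d2) - S * math.exp(-q * tau) * norm_cdf(-d1)


# Hypothetical inputs: six months to maturity, 30% volatility,
# 5% risk-free rate, 3% continuous dividend yield.
S, K, tau, sigma, r, q = 42.0, 40.0, 0.5, 0.30, 0.05, 0.03
C = bs_call(S, K, tau, sigma, r, q)
P = bs_put(S, K, tau, sigma, r, q)
# Put-Call parity: P = C - S e^{-q tau} + K e^{-r tau}
print(C, P, C - S * math.exp(-q * tau) + K * math.exp(-r * tau))
```

With $q = 0$ the same functions reduce to (3.57)-(3.60), which is why `q` defaults to zero.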

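For a non-dividend-paying asset, i.e., for $q = 0$, the Black-Scholes formulas are

$$C(S, t) = S N(d_1) - K e^{-r(T-t)} N(d_2); \tag{3.57}$$

$$P(S, t) = K e^{-r(T-t)} N(-d_2) - S N(-d_1), \tag{3.58}$$

where

$$d_1 = \frac{\ln\left(\frac{S}{K}\right) + \left(r + \frac{\sigma^2}{2}\right)(T - t)}{\sigma\sqrt{T - t}}; \tag{3.59}$$

$$d_2 = d_1 - \sigma\sqrt{T - t} = \frac{\ln\left(\frac{S}{K}\right) + \left(r - \frac{\sigma^2}{2}\right)(T - t)}{\sigma\sqrt{T - t}}. \tag{3.60}$$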

Note that the general rule of thumb that, for a continuous dividend paying asset with dividend rate $q$, the Black-Scholes formulas (3.53) and (3.54) can be obtained from the formulas (3.57) and (3.58) for a non-dividend paying asset by substituting $r - q$ for $r$, is wrong. The reason for this is somewhat subtle. While $r$ represents the risk-neutral drift of the underlying asset in (3.59) and (3.60), and therefore (3.55) and (3.56) can be obtained from (3.59) and (3.60) by substituting $r - q$ for $r$, the term $e^{-r(T-t)}$ from (3.57) and (3.58) is the discount factor from time $T$ to time $t$. This discount factor does not change in the formulas (3.53) and (3.54) corresponding to the dividend-paying asset.
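The sketch below uses hypothetical inputs and the same `math.erf`-based $N(z)$ to make the preceding remark concrete. Plugging $r - q$ into the non-dividend formulas (3.57)-(3.60) gives the correct $d_1$ and $d_2$. However, it discounts the strike with $e^{-(r-q)(T-t)}$ and leaves $S$ undiscounted. Comparing with (3.53) shows that the naive value equals $e^{q(T-t)}$ times the correct one, so it overprices the call whenever $q > 0$.

```python
import math


def norm_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def call_no_dividends(S, K, tau, sigma, r):
    """Non-dividend Black-Scholes call (3.57), with d1 and d2 from (3.59)-(3.60)."""
    d1 = (math.log(S / K) + (r + sigma**2 / 2.0) * tau) / (sigma * math.sqrt(tau))
    d2 = d1 - sigma * math.sqrt(tau)
    return S * norm_cdf(d1) - K * math.exp(-r * tau) * norm_cdf(d2)


def call_with_dividends(S, K, tau, sigma, r, q):
    """Black-Scholes call (3.53) for a continuous dividend rate q."""
    d1 = (math.log(S / K) + (r - q + sigma**2 / 2.0) * tau) / (sigma * math.sqrt(tau))
    d2 = d1 - sigma * math.sqrt(tau)
    return S * math.exp(-q * tau) * norm_cdf(d1) - K * math.exp(-r * tau) * norm_cdf(d2)


S, K, tau, sigma, r, q = 50.0, 50.0, 1.0, 0.25, 0.04, 0.02   # hypothetical inputs
correct = call_with_dividends(S, K, tau, sigma, r, q)
naive = call_no_dividends(S, K, tau, sigma, r - q)   # the rule of thumb r -> r - q
print(correct, naive, naive * math.exp(-q * tau))    # the last value equals `correct`
```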

In general, after a result is obtained for a European call option, the corresponding result for a European put option can be derived by using the Put-Call parity. For example, the Black-Scholes formula (3.54) for the European put can be obtained from the Black-Scholes formula (3.53) for the European call as follows:

From (3.53), we know that

$$C(S, t) = S e^{-q(T-t)} N(d_1) - K e^{-r(T-t)} N(d_2).$$

Then, from the Put-Call parity formula (1.47), we find that

$$\begin{aligned} P(S, t) &= K e^{-r(T-t)} - S e^{-q(T-t)} + C(S, t) \\ &= K e^{-r(T-t)} - S e^{-q(T-t)} + \left(S e^{-q(T-t)} N(d_1) - K e^{-r(T-t)} N(d_2)\right) \\ &= K e^{-r(T-t)} \left(1 - N(d_2)\right) - S e^{-q(T-t)} \left(1 - N(d_1)\right) \\ &= K e^{-r(T-t)} N(-d_2) - S e^{-q(T-t)} N(-d_1), \end{aligned}$$

since $1 - N(a) = N(-a)$, for any $a \in \mathbb{R}$; cf. Lemma 3.12.

If $V(S(t), K)$ denotes the value at time $t$ of a plain vanilla option with strike $K$ and spot price $S(t)$ of the underlying asset, it is easy to see, from (3.53-3.56), that

$$V(aS(t), aK) = a V(S(t), K)$$

for any $a > 0$.

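Therefore, choosing $a = \frac{1}{K}$,

$$V(S(t), K) = K\, V\left(\frac{S(t)}{K}, 1\right).$$

Thus, the relevant factor when pricing an option with strike $K$ is $\frac{S(t)}{K}$, or, equivalently,

$$\frac{S(t) - K}{K},$$

which represents the percentage by which an option is in-the-money or out-of-the-money at time $t$.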

Example: A call option with strike $K = 60$ is 15% out of the money. Find the spot price of the underlying asset.

Answer: For an out-of-the-money call, the spot price is smaller than the strike price, i.e., $S(t) < K$. The call is 15% out of the money if $K - S(t) = 0.15K$, i.e., if $S(t) = 0.85K$. For $K = 60$, we find that $S(t) = 51$. □
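The scaling property $V(aS(t), aK) = aV(S(t), K)$ and its consequence $V(S(t), K) = K\,V(S(t)/K, 1)$ can be checked numerically with the call formula (3.53). The parameters below are hypothetical, except that the spot is chosen as $S(t) = 0.85K = 51$, as in the example.

```python
import math


def norm_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def bs_call(S, K, tau, sigma, r, q):
    """Black-Scholes call value (3.53), with tau = T - t."""
    d1 = (math.log(S / K) + (r - q + sigma**2 / 2.0) * tau) / (sigma * math.sqrt(tau))
    d2 = d1 - sigma * math.sqrt(tau)
    return S * math.exp(-q * tau) * norm_cdf(d1) - K * math.exp(-r * tau) * norm_cdf(d2)


K = 60.0
S = (1 - 0.15) * K               # 15% out of the money: K - S = 0.15 K
tau, sigma, r, q = 0.75, 0.35, 0.05, 0.01   # hypothetical values
a = 2.5

scaled = bs_call(a * S, a * K, tau, sigma, r, q)
print(S, scaled, a * bs_call(S, K, tau, sigma, r, q))
# Only the ratio S/K matters once the value is expressed per unit of strike.
print(K * bs_call(S / K, 1.0, tau, sigma, r, q))
```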

3.6. THE GREEKS OF EUROPEAN OPTIONS 97

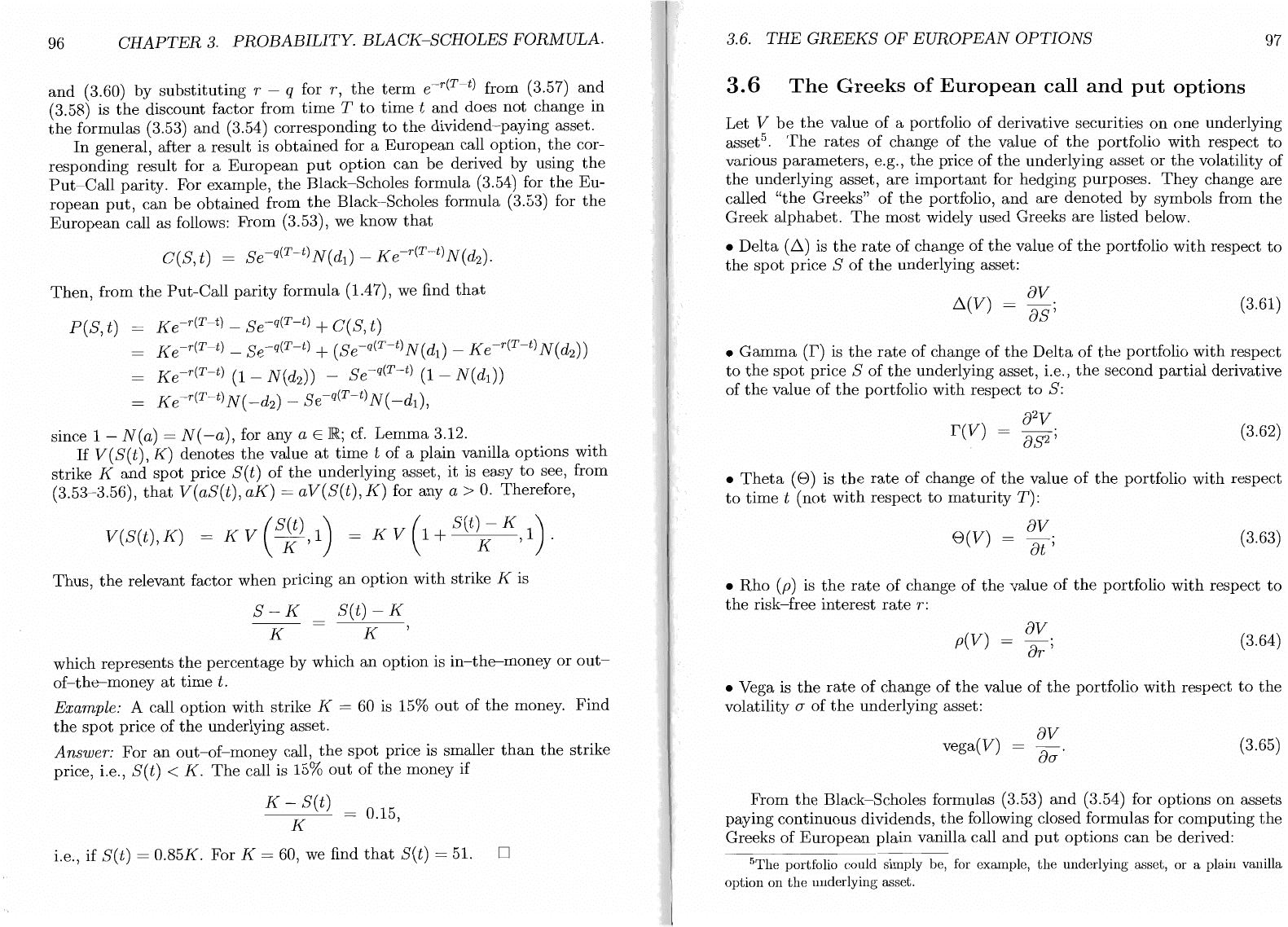

3.6 The Greeks of European call and put options

Let $V$ be the value of a portfolio of derivative securities on one underlying asset⁵. The rates of change of the value of the portfolio with respect to various parameters, e.g., the price of the underlying asset or the volatility of the underlying asset, are important for hedging purposes. They are called "the Greeks" of the portfolio, and are denoted by symbols from the Greek alphabet. The most widely used Greeks are listed below.

• Delta ($\Delta$) is the rate of change of the value of the portfolio with respect to the spot price $S$ of the underlying asset:

$$\Delta(V) = \frac{\partial V}{\partial S}; \tag{3.61}$$

• Gamma ($\Gamma$) is the rate of change of the Delta of the portfolio with respect to the spot price $S$ of the underlying asset, i.e., the second partial derivative of the value of the portfolio with respect to $S$:

$$\Gamma(V) = \frac{\partial^2 V}{\partial S^2}; \tag{3.62}$$

• Theta ($\Theta$) is the rate of change of the value of the portfolio with respect to time $t$ (not with respect to maturity $T$):

$$\Theta(V) = \frac{\partial V}{\partial t}; \tag{3.63}$$

• Rho ($\rho$) is the rate of change of the value of the portfolio with respect to the risk-free interest rate $r$:

$$\rho(V) = \frac{\partial V}{\partial r}; \tag{3.64}$$

• Vega is the rate of change of the value of the portfolio with respect to the volatility $\sigma$ of the underlying asset:

$$\mathrm{vega}(V) = \frac{\partial V}{\partial \sigma}. \tag{3.65}$$

From the Black-Scholes formulas (3.53) and (3.54) for options on assets paying continuous dividends, the following closed formulas for computing the Greeks of European plain vanilla call and put options can be derived:

⁵ The portfolio could simply be, for example, the underlying asset, or a plain vanilla option on the underlying asset.


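$$\Delta(C) = e^{-q(T-t)} N(d_1); \tag{3.66}$$

$$\Delta(P) = -e^{-q(T-t)} N(-d_1); \tag{3.67}$$

$$\Gamma(C) = \frac{e^{-q(T-t)}}{S\sigma\sqrt{T - t}} \frac{1}{\sqrt{2\pi}} e^{-\frac{d_1^2}{2}}; \tag{3.68}$$

$$\Gamma(P) = \Gamma(C); \tag{3.69}$$

$$\mathrm{vega}(C) = \frac{1}{\sqrt{2\pi}} S e^{-q(T-t)} \sqrt{T - t}\, e^{-\frac{d_1^2}{2}}; \tag{3.70}$$

$$\mathrm{vega}(P) = \mathrm{vega}(C); \tag{3.71}$$

$$\Theta(C) = -\frac{S\sigma e^{-q(T-t)}}{2\sqrt{2\pi(T - t)}} e^{-\frac{d_1^2}{2}} + qS e^{-q(T-t)} N(d_1) - rK e^{-r(T-t)} N(d_2); \tag{3.72}$$

$$\Theta(P) = -\frac{S\sigma e^{-q(T-t)}}{2\sqrt{2\pi(T - t)}} e^{-\frac{d_1^2}{2}} - qS e^{-q(T-t)} N(-d_1) + rK e^{-r(T-t)} N(-d_2); \tag{3.73}$$

$$\rho(C) = K(T - t) e^{-r(T-t)} N(d_2); \tag{3.74}$$

$$\rho(P) = -K(T - t) e^{-r(T-t)} N(-d_2). \tag{3.75}$$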

The computations for deriving these formulas are based on differentiating improper integrals with respect to the integration limits; cf. section 2.3.

Note that, as soon as the formulas for the Greeks corresponding to a call option are derived, the Put-Call parity can be used to obtain the corresponding Greeks for the put option. For example, to compute the Delta of a put option, we write the Put-Call parity formula (1.47) as

$$P = C - S e^{-q(T-t)} + K e^{-r(T-t)}. \tag{3.76}$$

By differentiating (3.76) with respect to $S$, we find that $\Delta(P) = \Delta(C) - e^{-q(T-t)}$. Using formula (3.66) for $\Delta(C)$ and Lemma 3.12, we obtain the formula (3.67) for $\Delta(P)$, i.e.,

$$\Delta(P) = e^{-q(T-t)} N(d_1) - e^{-q(T-t)} = -e^{-q(T-t)} \left(1 - N(d_1)\right) = -e^{-q(T-t)} N(-d_1).$$
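The closed formulas (3.66)-(3.75) can be cross-checked against central finite differences of the pricing formulas (3.53)-(3.54). The sketch below does this, with hypothetical function names and inputs and with `tau` standing for $T - t$. Theta is a derivative with respect to $t$, so the finite difference in `tau` carries a minus sign. The last assertion of the accompanying test mirrors the relation $\Delta(P) = \Delta(C) - e^{-q(T-t)}$ obtained above from (3.76).

```python
import math


def norm_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def norm_pdf(z):
    return math.exp(-z * z / 2.0) / math.sqrt(2.0 * math.pi)


def d1_d2(S, K, tau, sigma, r, q):
    """d1 and d2 from (3.55)-(3.56); tau = T - t."""
    d1 = (math.log(S / K) + (r - q + sigma**2 / 2.0) * tau) / (sigma * math.sqrt(tau))
    return d1, d1 - sigma * math.sqrt(tau)


def bs_price(kind, S, K, tau, sigma, r, q):
    """Black-Scholes value of a call (3.53) or a put (3.54)."""
    d1, d2 = d1_d2(S, K, tau, sigma, r, q)
    if kind == "call":
        return S * math.exp(-q * tau) * norm_cdf(d1) - K * math.exp(-r * tau) * norm_cdf(d2)
    return K * math.exp(-r * tau) * norm_cdf(-d2) - S * math.exp(-q * tau) * norm_cdf(-d1)


def bs_greeks(kind, S, K, tau, sigma, r, q):
    """Closed formulas (3.66)-(3.75); theta is the derivative with respect to t."""
    d1, d2 = d1_d2(S, K, tau, sigma, r, q)
    disc_q, disc_r = math.exp(-q * tau), math.exp(-r * tau)
    gamma = disc_q * norm_pdf(d1) / (S * sigma * math.sqrt(tau))    # (3.68)-(3.69)
    vega = S * disc_q * math.sqrt(tau) * norm_pdf(d1)                # (3.70)-(3.71)
    decay = -S * sigma * disc_q * norm_pdf(d1) / (2.0 * math.sqrt(tau))
    if kind == "call":
        return {"delta": disc_q * norm_cdf(d1), "gamma": gamma, "vega": vega,
                "theta": decay + q * S * disc_q * norm_cdf(d1) - r * K * disc_r * norm_cdf(d2),
                "rho": K * tau * disc_r * norm_cdf(d2)}
    return {"delta": -disc_q * norm_cdf(-d1), "gamma": gamma, "vega": vega,
            "theta": decay - q * S * disc_q * norm_cdf(-d1) + r * K * disc_r * norm_cdf(-d2),
            "rho": -K * tau * disc_r * norm_cdf(-d2)}


def fd_greeks(kind, S, K, tau, sigma, r, q, h=1e-4):
    """Central finite differences of bs_price, used only as a cross-check."""
    base = dict(S=S, K=K, tau=tau, sigma=sigma, r=r, q=q)

    def value(**shift):
        args = dict(base)
        for name, dx in shift.items():
            args[name] += dx
        return bs_price(kind, **args)

    return {"delta": (value(S=h) - value(S=-h)) / (2 * h),
            "gamma": (value(S=h) - 2 * value() + value(S=-h)) / h**2,
            "vega": (value(sigma=h) - value(sigma=-h)) / (2 * h),
            # t increases when tau = T - t decreases, hence the minus sign
            "theta": -(value(tau=h) - value(tau=-h)) / (2 * h),
            "rho": (value(r=h) - value(r=-h)) / (2 * h)}


params = (42.0, 40.0, 0.5, 0.30, 0.05, 0.02)   # hypothetical S, K, tau, sigma, r, q
for kind in ("call", "put"):
    exact, approx = bs_greeks(kind, *params), fd_greeks(kind, *params)
    for name in exact:
        print(kind, name, round(exact[name], 6), round(approx[name], 6))
```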


While definitions (3.61-3.65) are valid for the Greeks of any option on one underlying asset, including, e.g., American and exotic options, closed formulas for the Greeks can only be obtained if closed formulas for pricing the options exist. This happens very rarely for options that are not plain vanilla European, a notable exception being European barrier options; see section 7.8.

3.6.1 Explaining the magic of Greeks computations

It is interesting to note that the formulas (3.66-3.75) for the Greeks are simpler than expected. For example, the Delta of a call option is defined as $\Delta(C) = \frac{\partial C}{\partial S}$.

Differentiating the Black-Scholes formula (3.53) with respect to $S$, we obtain

$$\Delta(C) = e^{-q(T-t)} N(d_1) + S e^{-q(T-t)} \frac{\partial}{\partial S}\left(N(d_1)\right) - K e^{-r(T-t)} \frac{\partial}{\partial S}\left(N(d_2)\right), \tag{3.77}$$

since both $d_1$ and $d_2$ are functions of $S$; cf. (3.55) and (3.56).

However, we know from (3.66) that

$$\Delta(C) = e^{-q(T-t)} N(d_1). \tag{3.78}$$

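To understand how (3.77) reduces to (3.78), we apply the chain rule and obtain that

$$\frac{\partial}{\partial S}\left(N(d_1)\right) = N'(d_1) \frac{\partial d_1}{\partial S}; \tag{3.79}$$

$$\frac{\partial}{\partial S}\left(N(d_2)\right) = N'(d_2) \frac{\partial d_2}{\partial S}. \tag{3.80}$$

Then, using (3.79) and (3.80), we can write (3.77) as

$$\Delta(C) = e^{-q(T-t)} N(d_1) + S e^{-q(T-t)} N'(d_1) \frac{\partial d_1}{\partial S} - K e^{-r(T-t)} N'(d_2) \frac{\partial d_2}{\partial S}. \tag{3.81}$$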

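Note that formulas (3.55) and (3.56) for $d_1$ and $d_2$ can be written as

$$d_1 = \frac{\ln\left(\frac{S}{K}\right) + (r - q)(T - t)}{\sigma\sqrt{T - t}} + \frac{\sigma\sqrt{T - t}}{2}; \tag{3.82}$$

$$d_2 = d_1 - \sigma\sqrt{T - t} = \frac{\ln\left(\frac{S}{K}\right) + (r - q)(T - t)}{\sigma\sqrt{T - t}} - \frac{\sigma\sqrt{T - t}}{2}. \tag{3.83}$$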

The following result explains why (3.81) reduces to (3.78):


Lemma 3.15. Let $d_1$ and $d_2$ be given by (3.82) and (3.83). Then

$$S e^{-q(T-t)} N'(d_1) = K e^{-r(T-t)} N'(d_2). \tag{3.84}$$

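Proof. Recall that $N(z)$ is the cumulative distribution of the standard normal variable, i.e.,

$$N(z) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{z} e^{-\frac{x^2}{2}}\,dx.$$

From Lemma 2.3, we find that $N'(z) = \frac{1}{\sqrt{2\pi}} e^{-\frac{z^2}{2}}$. Then,

$$N'(d_1) = \frac{1}{\sqrt{2\pi}} e^{-\frac{d_1^2}{2}}; \tag{3.85}$$

$$N'(d_2) = \frac{1}{\sqrt{2\pi}} e^{-\frac{d_2^2}{2}}. \tag{3.86}$$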

Therefore, in order to prove (3.84), it is enough to show that the following formula holds true:

$$S e^{-q(T-t)} e^{-\frac{d_1^2}{2}} = K e^{-r(T-t)} e^{-\frac{d_2^2}{2}}. \tag{3.87}$$

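Recall the notation $\exp(x) = e^x$. Since $\exp(\ln(x)) = x$, we find that

$$S e^{-q(T-t)} = K e^{-r(T-t)} \frac{S}{K} e^{(r-q)(T-t)} = K e^{-r(T-t)} \exp\left(\ln\left(\frac{S}{K}\right) + (r - q)(T - t)\right).$$

From (3.82), it is easy to see that

$$\ln\left(\frac{S}{K}\right) + (r - q)(T - t) = d_1 \sigma\sqrt{T - t} - \frac{\sigma^2 (T - t)}{2},$$

and therefore

$$S e^{-q(T-t)} = K e^{-r(T-t)} \exp\left(d_1 \sigma\sqrt{T - t} - \frac{\sigma^2 (T - t)}{2}\right).$$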

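Using the fact that $d_2 = d_1 - \sigma\sqrt{T - t}$, we obtain (3.87) as follows:

$$\begin{aligned} S e^{-q(T-t)} e^{-\frac{d_1^2}{2}} &= K e^{-r(T-t)} \exp\left(-\frac{d_1^2}{2} + d_1 \sigma\sqrt{T - t} - \frac{\sigma^2 (T - t)}{2}\right) \\ &= K e^{-r(T-t)} \exp\left(-\frac{\left(d_1 - \sigma\sqrt{T - t}\right)^2}{2}\right) = K e^{-r(T-t)} e^{-\frac{d_2^2}{2}}. \qquad \square \end{aligned}$$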
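Lemma 3.15 is the identity that makes the extra chain-rule terms cancel, so a quick numerical confirmation may be reassuring. The sketch evaluates both sides of (3.84), with $d_1$ and $d_2$ computed from (3.82)-(3.83), for two hypothetical parameter sets. The two numbers agree up to rounding.

```python
import math


def norm_pdf(z):
    """N'(z) = exp(-z^2 / 2) / sqrt(2 pi), cf. (3.85)-(3.86)."""
    return math.exp(-z * z / 2.0) / math.sqrt(2.0 * math.pi)


def lemma_3_15_sides(S, K, tau, sigma, r, q):
    """Return both sides of (3.84), with d1 and d2 computed from (3.82)-(3.83)."""
    vol = sigma * math.sqrt(tau)
    d1 = (math.log(S / K) + (r - q) * tau) / vol + vol / 2.0
    d2 = d1 - vol
    return S * math.exp(-q * tau) * norm_pdf(d1), K * math.exp(-r * tau) * norm_pdf(d2)


# hypothetical (S, K, tau, sigma, r, q) values
cases = [(42.0, 40.0, 0.5, 0.2, 0.1, 0.0), (30.0, 45.0, 2.0, 0.6, 0.03, 0.05)]
for case in cases:
    print(lemma_3_15_sides(*case))
```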


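We return our attention to proving formula (3.78) for $\Delta(C)$. From (3.82) and (3.83), we find that

$$\frac{\partial d_1}{\partial S} = \frac{\partial d_2}{\partial S} = \frac{1}{S\sigma\sqrt{T - t}}. \tag{3.88}$$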

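Using (3.88) and Lemma 3.15, we conclude that formula (3.81) becomes

$$\begin{aligned} \Delta(C) &= e^{-q(T-t)} N(d_1) + S e^{-q(T-t)} N'(d_1) \frac{\partial d_1}{\partial S} - K e^{-r(T-t)} N'(d_2) \frac{\partial d_2}{\partial S} \\ &= e^{-q(T-t)} N(d_1) + S e^{-q(T-t)} N'(d_1) \left(\frac{\partial d_1}{\partial S} - \frac{\partial d_2}{\partial S}\right) \\ &= e^{-q(T-t)} N(d_1). \end{aligned}$$

Formula (3.78) is therefore proven.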

The simplified formulas (3.70), (3.72), and (3.74) for the vega, $\Theta$, and $\rho$ of a European call option⁶ are obtained similarly using Lemma 3.15.

The formula for vega(C): We differentiate the Black-Scholes formula (3.53) with respect to $\sigma$. Following the same steps as in the computation for the Delta of the call option, i.e., using the chain rule and Lemma 2.3, we obtain that

$$\mathrm{vega}(C) = \frac{\partial C}{\partial \sigma} = S e^{-q(T-t)} N'(d_1) \frac{\partial d_1}{\partial \sigma} - K e^{-r(T-t)} N'(d_2) \frac{\partial d_2}{\partial \sigma}.$$

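Using the result of Lemma 3.15, we conclude that

$$\mathrm{vega}(C) = S e^{-q(T-t)} N'(d_1) \left(\frac{\partial d_1}{\partial \sigma} - \frac{\partial d_2}{\partial \sigma}\right).$$

Since $d_2 = d_1 - \sigma\sqrt{T - t}$, we find that $d_1 - d_2 = \sigma\sqrt{T - t}$ and thus

$$\frac{\partial d_1}{\partial \sigma} - \frac{\partial d_2}{\partial \sigma} = \sqrt{T - t}.$$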

Then, using the fact that $N'(d_1) = \frac{1}{\sqrt{2\pi}} e^{-\frac{d_1^2}{2}}$, see (3.85), we conclude that

$$\mathrm{vega}(C) = \frac{1}{\sqrt{2\pi}} S e^{-q(T-t)} e^{-\frac{d_1^2}{2}} \sqrt{T - t},$$

which is the same as formula (3.70).

⁶ Note that the formulas (3.71), (3.73), and (3.75) for the vega, $\Theta$, and $\rho$ of a European put option can be obtained from (3.70), (3.72), and (3.74) by using the Put-Call parity.

The formula for $\Theta(C)$: We differentiate the Black-Scholes formula (3.53) with respect to $t$. Using the chain rule and Lemma 2.3 we obtain that

$$\Theta(C) = S e^{-q(T-t)} N'(d_1) \frac{\partial d_1}{\partial t} + qS e^{-q(T-t)} N(d_1) - K e^{-r(T-t)} N'(d_2) \frac{\partial d_2}{\partial t} - rK e^{-r(T-t)} N(d_2).$$

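Using the result of Lemma 3.15, we conclude that

$$\Theta(C) = S e^{-q(T-t)} N'(d_1) \left(\frac{\partial d_1}{\partial t} - \frac{\partial d_2}{\partial t}\right) + qS e^{-q(T-t)} N(d_1) - rK e^{-r(T-t)} N(d_2).$$

Since $d_1 - d_2 = \sigma\sqrt{T - t}$, we find that

$$\frac{\partial d_1}{\partial t} - \frac{\partial d_2}{\partial t} = -\frac{\sigma}{2\sqrt{T - t}}.$$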

Since $N'(d_1) = \frac{1}{\sqrt{2\pi}} e^{-\frac{d_1^2}{2}}$, cf. (3.85), we conclude that

$$\Theta(C) = -\frac{1}{\sqrt{2\pi}} S e^{-q(T-t)} e^{-\frac{d_1^2}{2}} \frac{\sigma}{2\sqrt{T - t}} + qS e^{-q(T-t)} N(d_1) - rK e^{-r(T-t)} N(d_2),$$

which is the same as formula (3.72).

The formula for $\rho(C)$: We differentiate the Black-Scholes formula (3.53) with respect to $r$. Using the chain rule and Lemma 2.3 we obtain that

$$\rho(C) = S e^{-q(T-t)} N'(d_1) \frac{\partial d_1}{\partial r} - K e^{-r(T-t)} N'(d_2) \frac{\partial d_2}{\partial r} + K(T - t) e^{-r(T-t)} N(d_2).$$

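Using Lemma 3.15, we find that

$$\rho(C) = S e^{-q(T-t)} N'(d_1) \left(\frac{\partial d_1}{\partial r} - \frac{\partial d_2}{\partial r}\right) + K(T - t) e^{-r(T-t)} N(d_2).$$

Since $d_1 - d_2 = \sigma\sqrt{T - t}$, we find that

$$\frac{\partial d_1}{\partial r} = \frac{\partial d_2}{\partial r},$$

and therefore,

$$\rho(C) = K(T - t) e^{-r(T-t)} N(d_2),$$

which is the same as formula (3.74).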


3.6.2 Implied volatility

The only parameter needed in the Black-Scholes formulas (3.53-3.56) that is not directly observable in the markets is the volatility $\sigma$ of the underlying asset. The risk-free rate $r$ and the continuous dividend yield $q$ of the asset can be estimated from market data. The maturity date $T$ and the strike $K$ of the option, as well as the spot price $S$ of the underlying asset, are known when a price for the option is quoted.

The implied volatility $\sigma_{imp}$ is the value of the volatility parameter $\sigma$ that makes the Black-Scholes value of the option equal to the traded price of the option. To formalize this, denote by $C_{BS}(S, K, T, \sigma, r, q)$ the Black-Scholes value of a call option with strike $K$ and maturity $T$ on an underlying asset with spot price $S$ paying dividends continuously at the rate $q$, if interest rates are constant and equal to $r$. If $C$ is the quoted value of a call with parameters $S$, $K$, $T$, $r$, and $q$, the implied volatility $\sigma_{imp}$ corresponding to the price $C$ is, by definition, the solution to

$$C_{BS}(S, K, T, \sigma_{imp}, r, q) = C. \tag{3.89}$$

The implied volatility can also be derived from the given price $P$ of a put option, by solving

$$P_{BS}(S, K, T, \sigma_{imp}, r, q) = P, \tag{3.90}$$

where $P_{BS}(S, K, T, \sigma, r, q)$ is the Black-Scholes value of a put option.

Note that, as functions of volatility, the Black-Scholes values of both call and put options are strictly increasing since

$$\frac{\partial C_{BS}}{\partial \sigma} = \frac{\partial P_{BS}}{\partial \sigma} = \frac{1}{\sqrt{2\pi}} S e^{-qT} \sqrt{T}\, e^{-\frac{d_1^2}{2}} > 0, \tag{3.91}$$

cf. (3.70) and (3.71). (Throughout this section, to keep notation simple, we assume that the present time is $t = 0$.)

Therefore, if a solution $\sigma_{imp}$ for (3.89) exists, it will be unique, and the implied volatility will be well defined. Similarly, equation (3.90) has at most one solution.

For the implied volatility to exist and be nonnegative, the given value $C$ of the call option must be arbitrage-free, i.e.,

$$\max\left(S e^{-qT} - K e^{-rT},\ 0\right) \le C < S e^{-qT}. \tag{3.92}$$

The bounds for the call option price from (3.92) can be obtained by using the Law of One Price; cf. Theorem 1.10.
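By (3.91), $C_{BS}$ is strictly increasing in $\sigma$, so a bracketing method such as bisection converges to the unique solution of (3.89) once a bracket is found. The sketch below is one simple choice, not the book's implementation; Newton's method, which uses the vega (3.70), is a common faster alternative. It assumes $t = 0$ as in this section and rejects quotes outside a strict version of the no-arbitrage bounds. The bracket $[10^{-6}, 5]$, the tolerance, and the function names are hypothetical defaults.

```python
import math


def norm_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def bs_call(S, K, T, sigma, r, q):
    """Black-Scholes call value (3.53) at time t = 0."""
    d1 = (math.log(S / K) + (r - q + sigma**2 / 2.0) * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    return S * math.exp(-q * T) * norm_cdf(d1) - K * math.exp(-r * T) * norm_cdf(d2)


def implied_vol_call(C, S, K, T, r, q, lo=1e-6, hi=5.0, tol=1e-10, max_iter=200):
    """Solve (3.89), C_BS(S, K, T, sigma, r, q) = C, for sigma by bisection.

    Bisection works because C_BS is strictly increasing in sigma, cf. (3.91).
    The bracket [lo, hi] and the tolerance are illustrative defaults.
    """
    lower = max(S * math.exp(-q * T) - K * math.exp(-r * T), 0.0)
    upper = S * math.exp(-q * T)
    if not lower < C < upper:
        raise ValueError("quoted price is outside the arbitrage-free range")

    def excess(sigma):
        return bs_call(S, K, T, sigma, r, q) - C

    if excess(lo) > 0.0 or excess(hi) < 0.0:
        raise ValueError("implied volatility is not inside [lo, hi]")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if excess(mid) < 0.0:
            lo = mid      # model price too low: the volatility must be larger
        else:
            hi = mid      # model price too high (or exact): the volatility is smaller
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


# Hypothetical quote generated from sigma = 0.3, then inverted.
S, K, T, r, q = 50.0, 55.0, 1.0, 0.04, 0.01
quote = bs_call(S, K, T, 0.30, r, q)
print(quote, implied_vol_call(quote, S, K, T, r, q))
```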