Nof S.Y. Springer Handbook of Automation


24. Automation Under Service-Oriented Grids

Jackson He, Enrique Castro-Leon

For some companies, information technology (IT) services constitute a fundamental function without which the company could not exist. Think of UPS without the ability to electronically track every package in its system, or of any large bank managing millions of customer accounts without computers. IT can be capital and labor intensive, representing anywhere between 1% and 5% of a company's gross expenditures, and keeping costs commensurate with the size of the organization is a constant concern for the chief information officers (CIOs) in charge of IT.

A common strategy to keep labor costs in check today is a deliberate sourcing or service procurement strategy. Such a strategy may include insourcing (using in-house resources) or outsourcing, which involves delegating certain standardized business processes, such as payroll, to service companies such as ADP.

Another way of keeping labor costs in check, one with a long tradition, is automation: the integrated use of technology, machines, computers, and processes to reduce the cost of labor.

The convergence of three technology domains, namely virtualization, service orientation, and grid computing, promises to bring the automation of the provisioning and delivery of IT services to levels never seen before. An IT environment where these three technology domains coexist is said to be a virtual service-oriented grid (VSG) environment. Cost savings accrue through the systemic reuse of resources and the ability to quickly integrate resources not just within one department, but across the whole company and beyond.

In this chapter we review each of the constituent technologies of a virtual service-oriented grid and examine how each contributes to the automation of the delivery of IT services.

The increasing adoption of service-oriented architectures (SOAs) reflects the growing recognition by IT organizations of the need for business and technology alignment. In fact, under SOA there is no difference between the two. The unit of delivery for SOA is a service, which is usually defined in business terms.

In other words, SOA represents the up-leveling of IT, empowering IT organizations to meet the business needs of the community they serve. This up-leveling creates a gap, because IT must eventually translate business requirements into technology-based solutions.

Our research indicates that this gap is being filled by the resurgence of two very old technologies, namely virtualization and grid computing.

To begin with, SOA allowed the decoupling of data from applications through the magic of extensible markup language (XML).

A lot of work that used to be done by application developers and integrators now gets done by computers. When most data centers run at 5–10% utilization, growing and deploying more data centers is not a good solution. Virtualization technology came in very handy to address this situation, allowing the decoupling of applications from the platforms on which they run. It acts as the gearbox in a car, ensuring efficient transmission of power from the engine to the wheels.

The net effect of virtualization is that it allows utilization factors to increase to 60–70%. The technique has been applied to mainframes for decades. Deploying virtualization across tens of thousands of servers, however, has not been easy.

Finally, grid technology has allowed very fast, on-the-fly resource management, where resources are allocated not when a physical server is provisioned, but each time a program is run.

Part C 24

406 Part C Automation Design: Theory, Elements, and Methods

24.1 Emergence of Virtual Service-Oriented Grids

24.2 Virtualization

24.2.1 Virtualization Usage Models

24.3 Service Orientation

24.3.1 Service-Oriented Architectural Tenets

24.3.2 Services Needed for Virtual Service-Oriented Grids

24.4 Grid Computing

24.5 Summary and Emerging Challenges

24.6 Further Reading

References

24.1 Emergence of Virtual Service-Oriented Grids

Legacy systems in many cases represent a substantial investment and the fruits of many years of refinement. To the extent that legacy applications bring business value with relatively little cost in operations and maintenance, there is no reason to replace them. The adoption of virtual service-oriented grids does not imply wholesale replacement of legacy systems by any means. Newer virtual service-oriented applications will coexist with legacy systems for the foreseeable future. If anything, the adoption of a virtual service-oriented environment will create opportunities for legacy integration with the new environment through the use of web-service-based exportable interfaces. Additional value will be created for legacy systems through extended life cycles and through new revenue streams from repurposing older applications.

These goals are attained through the increasing use of machine-to-machine communications during setup and operation.

To understand how the different components of virtual service-oriented grids came to be, we have to look at the evolution of their three constituent technologies: virtualization, service orientation, and grids. We also need to examine how these technologies become integrated and interact with each other to form the core of a virtual service-oriented grid environment. This section also addresses the tools and overall architecture components that keep virtual service-oriented functions operating as integral business services that deliver ultimate value to businesses. Figure 24.1 depicts the abstract relationship between the three constituent technologies. Each item represents a complex technical domain of its own.

Fig. 24.1 Virtual service-oriented grids represent the confluence of three key technology domains (diagram: grid computing, service orientation, and virtualization converging on virtual service-oriented grids)

In the following sections, we describe how key technology components in these domains define the foundation for a virtual service-oriented grid environment. These components include billing and metering tools, service-level agreement (SLA) management, security, and data integrity. Later, we discuss architecture considerations for putting all these components together to deliver tangible business solutions.

24.2 Virtualization

Alan M. Turing, in his seminal 1950 article for the British psychology journal Mind, proposed a test of whether machines were capable of thinking. In the test, a machine and a human behind a curtain type text messages to a human judge [24.1]. A thinking machine would pass the test if the judge could not reliably determine whether the answers to the judge's questions came from the human or from the machine.

The emergence of virtualization technology poses a similar test, though perhaps not as momentous as attempting to distinguish a human from a machine. Every computation result, such as a web page retrieval, a weather report, a record pulled from a database, or a spreadsheet result, can ultimately be traced to a series of state changes. These state changes can be produced by monkeys typing at random at a keyboard, by humans scribbling numbers on a notepad, or by a computer running a program. One of the drawbacks of the monkey method is the time it takes to arrive at a solution [24.2]. The human or manual method is also too slow for most problems of practical size today, which involve millions or billions of records.

Only machines are capable of addressing the scale of complexity of most enterprise computational problems today. In fact, their performance has progressed to such an extent that, even with large computational tasks, they are idle most of the time. This is one of the reasons for the low utilization rates of servers in data centers today. Meanwhile, this infrastructure represents a sunk cost, whether fully utilized or not.

Modern microprocessor-based computers have so much reserve capacity that they can be used to simulate other computers. This is the essence of virtualization: the use of computers to run programs that simulate computers of the same or even different architectures. In fact, machines today can be used to simulate many computers, anywhere between 1 and 30 in practical situations. If a certain machine shows a load factor of 5% when running a certain application, that machine can easily run ten virtualized instances of the same application.
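Here is a rough check of the arithmetic in this example. It assumes (our simplification, not the chapter's) that per-instance loads add linearly and that virtualization overhead is negligible. For n instances, each with load factor u_app:

```latex
\[
U_{\text{host}} \approx n\,u_{\text{app}} = 10 \times 5\% = 50\%,
\qquad
n_{\max} = \left\lfloor \frac{U_{\text{target}}}{u_{\text{app}}} \right\rfloor
         = \left\lfloor \frac{70\%}{5\%} \right\rfloor = 14 .
\]
```

Ten instances therefore leave about half of the machine as headroom. A utilization target of 70%, the upper end of the range quoted earlier for virtualized servers, would allow up to 14 such instances under the same idealized assumptions.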

Likewise, the three machines of a three-tier e-commerce application can be run in a single physical machine, including a simulation of the network linking the three machines.

Virtual computers, when compared with real physical computers, can pass the Turing test much more easily than machines can when compared with humans. There is essentially no difference between the results of a computation in a physical machine and the results of the same computation in a virtual machine. It may take a little longer, but the results will be identical to the last bit. Whether running in a physical or a virtualized host, an application program goes through the same state transitions and eventually presents the same results.

As we saw, virtualization is the creation of substitutes for real resources. These substitutes have the same functions and external interfaces as their counterparts, but differ in attributes such as size, performance, and cost. These substitutes are called virtual resources. Because the computational results are identical, users are typically unaware of the substitution. As mentioned, with virtualization we can make one physical resource look like multiple virtual resources. We can also make multiple physical resources into shared pools of virtual resources, which provides a convenient way of divvying up a physical resource into multiple logical resources.

In fact, the concept of virtualization has been around for a long time. Back in the mainframe days, we used to have virtual processes, virtual devices, and virtual memory [24.3–5]. We use virtual memory in most operating systems today. With virtual memory, computer software gains access to more memory than is physically installed, via the background swapping of data to disk storage. Similarly, virtualization concepts can be applied to other IT infrastructure layers, including networks, storage, laptop or server hardware, operating systems, and applications. Even the notion of a process is essentially an abstraction for a virtual central processing unit (CPU) running a single application.
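The toy model below makes the idea of background swapping concrete. It is an illustration only; real operating systems implement paging with hardware support and far more elaborate policies. A few physical frames back a larger set of virtual pages, and the least-recently-used (LRU) page is evicted to a simulated disk. LRU is an eviction policy we chose for simplicity, and the class name is hypothetical:

```python
from collections import OrderedDict

class ToyVirtualMemory:
    """Toy model: num_frames physical frames back an unbounded virtual page
    space; on a miss, the least-recently-used page is swapped out to 'disk'."""

    def __init__(self, num_frames: int):
        self.num_frames = num_frames
        self.frames = OrderedDict()  # page -> contents, oldest access first
        self.disk = {}               # swapped-out pages
        self.page_faults = 0

    def access(self, page: int) -> bytes:
        if page in self.frames:
            self.frames.move_to_end(page)  # mark as most recently used
            return self.frames[page]
        self.page_faults += 1
        if len(self.frames) >= self.num_frames:
            victim, data = self.frames.popitem(last=False)  # evict the LRU page
            self.disk[victim] = data                         # swap it out
        data = self.disk.pop(page, bytes(4096))  # swap in, or zero-fill a new page
        self.frames[page] = data
        return data
```

For example, with two frames, the access sequence 0, 1, 0, 2, 1 causes four page faults, and page 0 ends up swapped out to disk. The program using the pages never sees the difference, only the extra time.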

Virtualization on x86 microprocessor-based systems is a more recent development in the long history of virtualization. This entire sector owes its existence to a single company, VMware, and in particular to its cofounder Mendel Rosenblum [24.6], a professor of operating systems at Stanford University. Rosenblum devised an intricate series of software workarounds to overcome certain intrinsic limitations of the x86 instruction set architecture in supporting virtual machines. These workarounds became the basis for VMware's early products. More recently, hardware support for hypervisors and virtual machines has been built into processors to improve the performance and stability of virtualization. An example is Intel Virtualization Technology (VT-x) [24.7].

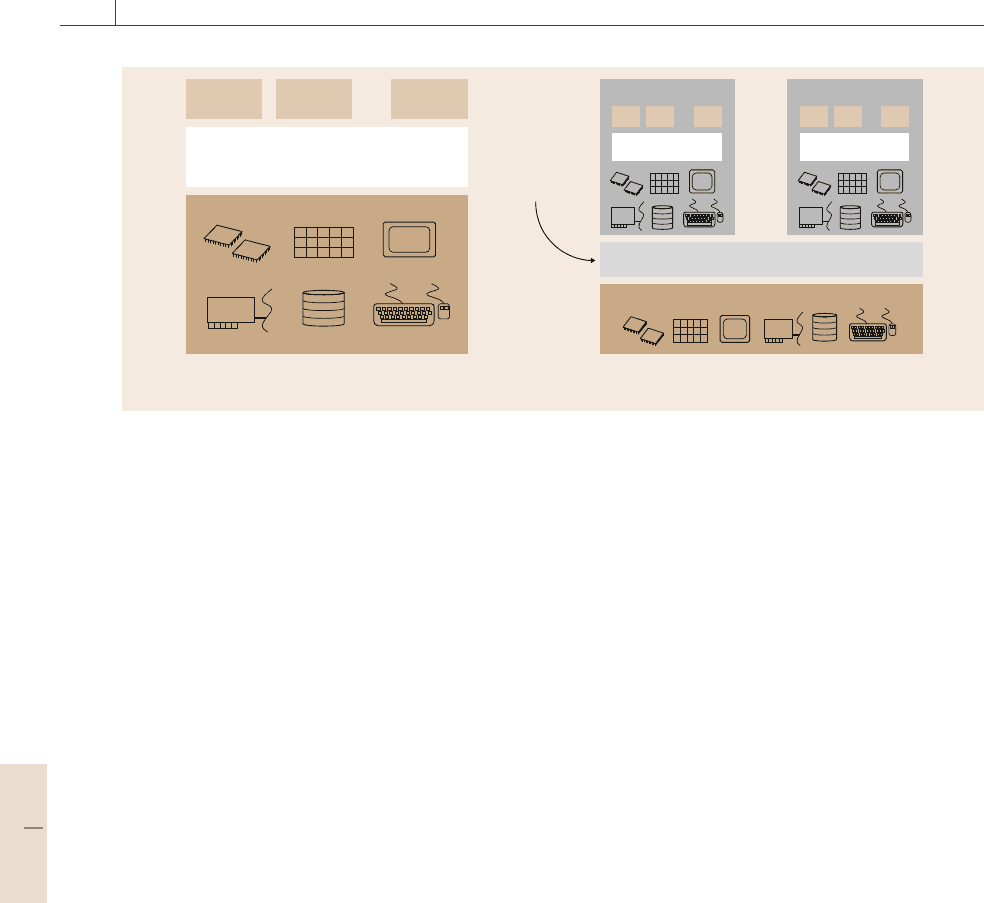

To look further into the impact of virtualization on a particular platform, Fig. 24.2 illustrates two configurations: a typical single operating system (OS) platform without virtual machines (VMs), and a platform running multiple virtual machines with virtualization. As the right-hand side of the figure shows, a new layer of abstraction, the virtual machine monitor (VMM), is added between physical resources and virtual resources. A VMM presents each VM with its own set of virtual resources and maps virtual machine operations to physical resources. VMMs can be designed to be tightly coupled with operating systems or to be agnostic to operating systems. The latter approach gives customers the capability to implement an OS-neutral management infrastructure.

24.2.1 Virtualization Usage Models

Virtualization is not just about increasing load factors. It brings to hardware a level of operational flexibility and convenience previously associated only with software. Virtualization allows running instances of virtualized machines as if they were applications. Hence, programmers can run multiple VMs with different operating systems and test code across all configurations simultaneously. Once systems administrators started running hypervisors in test laboratories, they found a treasure trove of valuable use cases. Multiple physical servers can be consolidated onto a single, more powerful machine. The big new box draws less energy than the servers it replaces and is easier to manage on a per-machine basis. This server consolidation model provides a good solution to server sprawl, the proliferation of physical servers that results from dedicating each server to a single application.

Fig. 24.2 A platform with and without virtualization. Without VMs, a single OS owns all hardware resources. With VMs, multiple guest OSs (VM 0, VM 1, ...) share the hardware resources (processors, memory, storage, network, graphics, keyboard/mouse) through the virtual machine monitor (VMM), a new layer of abstraction above the physical host hardware.
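In its simplest form, deciding which lightly loaded servers to consolidate onto which hosts is a bin-packing problem. The sketch below uses first-fit decreasing, a standard heuristic we picked for illustration; the chapter does not prescribe an algorithm. Loads are integer percentages of one host's capacity, and `max_util` is an assumed ceiling that keeps some headroom:

```python
from __future__ import annotations

def consolidate(loads: dict[str, int], max_util: int = 70) -> list[dict[str, int]]:
    """First-fit decreasing: place each workload (load in % of one host) on the
    first host that stays at or below max_util, opening a new host if none fits."""
    hosts: list[dict[str, int]] = []
    # Largest workloads first; they are the hardest to fit.
    for name, load in sorted(loads.items(), key=lambda kv: kv[1], reverse=True):
        if load > max_util:
            raise ValueError(f"{name} alone exceeds the {max_util}% ceiling")
        for host in hosts:
            if sum(host.values()) + load <= max_util:
                host[name] = load  # first host with enough room wins
                break
        else:  # no break: no existing host had room, so open a new one
            hosts.append({name: load})
    return hosts

print(consolidate({"web": 30, "app": 25, "db": 40, "mail": 5, "dns": 5}))
# [{'db': 40, 'web': 30}, {'app': 25, 'mail': 5, 'dns': 5}]
```

Five single-application servers end up on two hosts. Real placement must also account for memory, I/O, and affinity constraints, which this sketch ignores.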

Additional helper technologies complement and amplify the benefits brought by virtualization. For example, extended manageability technologies will be needed to automatically track and manage the hundreds or thousands of virtual machines in the environment, especially when many of them are created and terminated dynamically in a data center or even across data centers.

Virtual resources, even though they act in lieu of physical resources, are in actuality software entities that can be scheduled and managed automatically under program control, replacing the very onerous process of physically procuring machines. The capability exists to deploy these resources on the fly instead of in the weeks or months that it would take to go through physical procurement.

Grid computing technologies are needed to transparently and automatically orchestrate disparate types of physical systems into a pool of virtual resources across the global network.

In this environment, standards-based web services become the fabric of communication among heterogeneous systems and applications from different manufacturers, whether insourced or outsourced, legacy or new.

24.3 Service Orientation

Service orientation represents the natural evolution of current development and deployment models. The movement started as a programming paradigm and evolved into an application and system integration methodology with many different standards behind it.

The evolution of service orientation can be traced back to the object-oriented models of the 1980s (and even earlier) and to the component-based development model of the 1990s. As the evolution continues, service orientation retains the benefits of component-based development: self-description, encapsulation, dynamic discovery, and loading.

Service orientation brings a shift in paradigm from remotely invoking methods on objects to passing messages between services. In short, service orientation can be defined as a design paradigm that specifies the creation of automation logic in the form of services based on standard messaging schemas.


Messaging schemas describe not only the structure of messages, but also the behavior and semantics of acceptable message exchange patterns and policies. Service orientation promotes interoperability among heterogeneous systems, and thus becomes the fabric for systems integration, because messages can be sent from one service to another without consideration of how the service handling those messages has been implemented.
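Here is a minimal sketch of this idea using Python's standard `xml.etree.ElementTree`. The `OrderStatusRequest` schema and both service functions are hypothetical, and plain function calls stand in for a real transport such as SOAP over HTTP. The caller builds a message against the schema, and either implementation can handle it without the caller knowing how it works internally:

```python
import xml.etree.ElementTree as ET

def make_request(order_id: str) -> str:
    """Build a message conforming to the (hypothetical) OrderStatusRequest schema."""
    root = ET.Element("OrderStatusRequest")
    ET.SubElement(root, "OrderId").text = order_id
    return ET.tostring(root, encoding="unicode")

def _reply(order_id: str, status: str) -> str:
    root = ET.Element("OrderStatusResponse")
    ET.SubElement(root, "OrderId").text = order_id
    ET.SubElement(root, "Status").text = status
    return ET.tostring(root, encoding="unicode")

# Two independent implementations of the same service contract. Each reads only
# the elements it needs, so unknown elements added by newer clients are ignored.
def legacy_status_service(message: str) -> str:
    order_id = ET.fromstring(message).findtext("OrderId", default="")
    status = {"A-1": "SHIPPED"}.get(order_id, "UNKNOWN")  # stands in for a wrapped legacy lookup
    return _reply(order_id, status)

def rewritten_status_service(message: str) -> str:
    order_id = ET.fromstring(message).findtext("OrderId", default="")
    status = "SHIPPED" if order_id.startswith("A-") else "UNKNOWN"  # different internals
    return _reply(order_id, status)

# The caller depends only on the message schema, not on either implementation.
request = make_request("A-1")
for service in (legacy_status_service, rewritten_status_service):
    print(ET.fromstring(service(request)).findtext("Status"))  # SHIPPED both times
```

Each service reads only the elements it needs. As a result, a newer client that adds, say, a `<Priority>` element does not break either implementation. This is a small illustration of the versioning resilience that service interfaces aim for.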

Service orientation provides an evolutionary approach to building distributed systems that facilitate loosely coupled integration and resilience to change.

With the arrival of web services and WS* standards (WS* denotes the multiple standards that govern web service protocols), service-oriented architectures (SOAs) have made service orientation a feasible paradigm for the development and integration of software and hardware services.

Some advantages yielded by service orientation are described below.

Progressive and Based on Proven Principles

Service orientation is evolutionary (not revolutionary) and grounded in well-known information technology principles, taking into account decades of experience in building real-world distributed applications. Service orientation incorporates concepts such as self-describing applications, explicit encapsulation, and dynamic loading of functionality at runtime. These principles were first introduced in the 1980s and 1990s through object-oriented and component-based development.

Product-Independent and Facilitating Innovation

Service orientation is a set of architectural principles supported by open industry standards independent of any particular product.

Easy to Adopt and Nondisruptive

Service orientation can and should be adopted through an incremental process. Because it follows some of the same information technology principles, service orientation is not disruptive to current IT infrastructures. It can often be achieved through wrappers or adapters to legacy applications without completely redesigning the applications.

Adapts to Changes and Improves Business Agility

Service orientation promotes a loosely coupled architecture. Each of the services that compose a business solution can be developed and evolved independently.

24.3.1 Service-Oriented Architectural Tenets

The fundamental building block of service orientation is a service. A service is a program interacting through well-defined message exchange interfaces. The interfaces are self-describing, relatively stable over time, and versioning resilient, and they shield the service from implementation details. Services are built to last, while service configurations and aggregations are built for change.

Some basic architectural tenets must be followed for service orientation to accomplish a successful service design and minimize the need for human intervention.

Designed for Loose Coupling

Loose coupling is a primary enabler for reuse and automatic integration. It is the key to making service-oriented solutions resilient to change. Service-oriented application architects need to spend extra effort to define clear boundaries of services and ensure that services are autonomous (have few dependencies). This ensures that each component in a business solution is loosely coupled and easy to compose (reuse).

Encapsulation. Functional encapsulation, the process of hiding the internal workings of a service from the outside world, is a fundamental feature of service orientation.

Standard Interfaces. We need to enforce ubiquitous, standard interfaces at the edge of services. Web services provide a collection of standards, such as the simple object access protocol (SOAP), the web services description language (WSDL), and the WS* specifications. These standards can take functionality that is not otherwise encapsulated and turn it into reusable components. At the risk of oversimplification, the browser became the universal graphical user interface (GUI) during the emergence of the First Web in the 1990s. In the same way, web services became the universal machine-to-machine interface in the Second Web of the 2000s, with the potential of automatically integrating self-describing software components without human intervention [24.8,9].

Unified Messaging Model. By definition, service orientation enables systems to be loosely bound, both for the composition of services and for the integration of a set of services into a business solution. Standard messaging and interfaces should be used for both service integration and composition. The use of a unified messaging model blurs the distinction between integration and composition.


Designed for Connected Virtual Environments

With the advances in virtualization discussed in the previous section, service orientation is not limited to a single physical execution environment. It can instead be applied across interconnected virtual machines (VMs). Such a virtual network of machines is usually referred to as a service grid. The original service orientation paradigm does not mandate full resource virtualization. Even so, the combination of service orientation and a grid of virtual resources hints at the enormous potential business benefits of autonomic and on-demand compute models.

Service Registration and Discovery. As services are created, their interfaces and policies are registered and advertised using ubiquitous formats. As more services are created and registered, a shareable service network emerges.
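The following is a toy, in-memory illustration of registration and discovery. All names, interface identifiers, and endpoints in it are hypothetical. Consumers find services by the interface they implement and by advertised policy attributes, instead of hard-coding addresses:

```python
from __future__ import annotations
from dataclasses import dataclass, field

@dataclass
class ServiceRecord:
    name: str
    endpoint: str   # where messages for this service are sent
    interface: str  # identifier of the message contract the service implements
    policies: dict[str, str] = field(default_factory=dict)  # advertised attributes

class ServiceRegistry:
    """Toy registry: services advertise themselves; consumers discover them
    by interface and required policy values."""

    def __init__(self) -> None:
        self._records: list[ServiceRecord] = []

    def register(self, record: ServiceRecord) -> None:
        self._records.append(record)

    def discover(self, interface: str, **required: str) -> list[ServiceRecord]:
        # Match the interface and every requested policy attribute exactly.
        return [r for r in self._records
                if r.interface == interface
                and all(r.policies.get(k) == v for k, v in required.items())]
```

A consumer that needs, for example, a billing service hosted in a particular region would call `discover("Billing/v1", region="us")`. It would then send its messages to whichever endpoint comes back.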

Shared Messaging Service Fabric. In addition to networking the services, a secure and robust messaging service fabric is essential for service sharing and communication among the virtual resources.

Resource Orchestration and Resolution. Once a service is discovered, we need effective ways to allocate sufficient resources for the service to meet the service consumer's needs. This needs to happen dynamically and be resolved at runtime. Special attention must therefore be paid to discovering resources at runtime and to using them in a way that allows them to be automatically released and regained based on resource orchestration policies.
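A minimal sketch of runtime allocation and release against an orchestration policy follows. The pool model, the class name, and the per-consumer cap are assumptions made for illustration, not part of any grid standard:

```python
from __future__ import annotations

class ResourcePool:
    """Toy pool of interchangeable virtual resources (e.g., VM slots).
    Allocation is resolved at runtime; an assumed policy caps each consumer."""

    def __init__(self, capacity: int, max_per_consumer: int):
        self.free = capacity
        self.max_per_consumer = max_per_consumer  # hypothetical orchestration policy
        self.held: dict[str, int] = {}

    def acquire(self, consumer: str, units: int) -> int:
        """Grant as many units as free capacity and policy allow (possibly fewer)."""
        allowed = self.max_per_consumer - self.held.get(consumer, 0)
        granted = max(0, min(units, self.free, allowed))
        self.free -= granted
        self.held[consumer] = self.held.get(consumer, 0) + granted
        return granted

    def release(self, consumer: str, units: int | None = None) -> None:
        """Return units (all of them by default) so other consumers can regain them."""
        held = self.held.get(consumer, 0)
        n = held if units is None else min(units, held)
        self.held[consumer] = held - n
        self.free += n
```

Grants may be partial, and resources released by one consumer immediately become available to others. This is the "released and regained" behavior described above, reduced to its simplest form.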

Designed for Manageability

The solutions and associated services should be built to be managed. They should provide sufficient interfaces to expose information to an independent management system, which could itself be composed of a set of services, so that it can verify that the entire loosely bound system works as designed.

Designed for Scalability

Services are meant to scale. They should be able to serve hundreds or thousands of different service consumers.

Designed for Federated Solutions

Service orientation breaks application silos. It spans traditional enterprise computing boundaries, such as network administrative boundaries, organizational and operational boundaries, and the boundaries of time and space. There are no technical barriers to crossing corporate or transnational boundaries. This means services need a high degree of built-in security, trust, and internal identity, so that they can negotiate and establish federated service relationships with other services. These relationships follow given policies administered by the management system. Obviously, cohesiveness across services based on standards in a network of services is essential for service federation and to facilitate automated interactions.

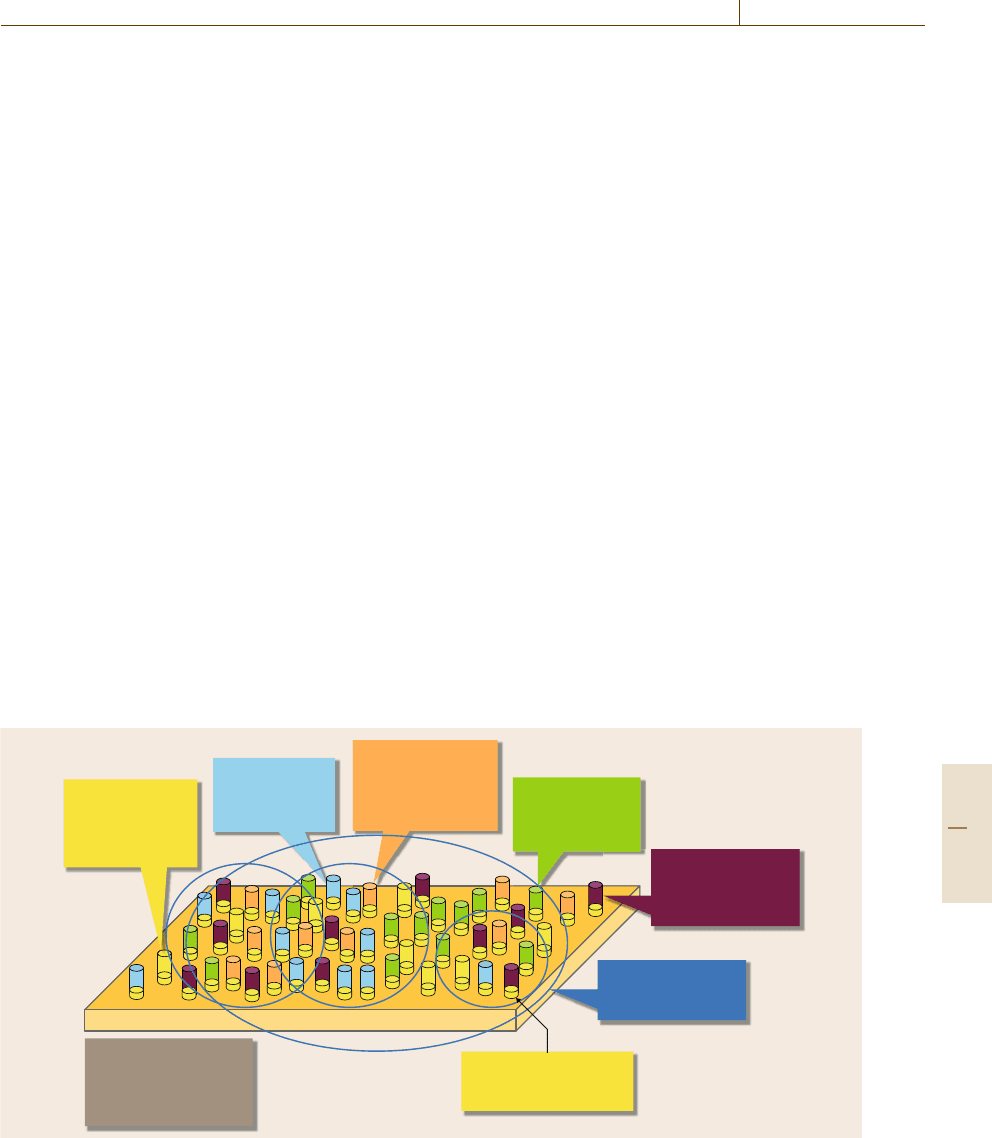

24.3.2 Services Needed for Virtual Service-Oriented Grids

A set of foundation services is essential to make service-oriented grids work. These services are carried out through machine-to-machine communication. They are the building blocks for a solution stack at different layers, providing some of the automated functions supported by VSGs.

Fundamental services can be divided into the following categories, similar to the open grid services architecture (OGSA) approach outlined in Fig. 24.3. Example applications are shown in Fig. 24.4. The items are provided for conceptual clarity, without any attempt to provide an exhaustive list of all the services. We highlight some example services, along with a high-level description.

Resource Management Services

Management of the physical and virtual resources (computing, storage, and networking) in a grid environment.

Asset Discovery and Management

Maintaining an automatic inventory of all connected devices, always accurate and updated on a timely basis.

Provisioning

Enabling bare-metal provisioning; coordinating the configuration between server, network, and storage in a synchronous and automatic manner; making sure software gets loaded on the right physical machines; taking platforms in and out of service as required for testing, maintenance, repair, or capacity expansion; remotely booting a system from another system; and managing the licenses associated with software deployment.

Monitoring and Problem Diagnosis

Verifying that virtual platforms are operational and detecting error conditions and network attacks. Responses include running diagnostics, deprovisioning platforms and reprovisioning affected services, or isolating network segments to prevent the spread of malware.
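One pass of the detect-and-respond cycle described above might be sketched as follows. The health check and the provisioning hook are passed in as hypothetical callbacks because the chapter does not define them. A production monitor would add diagnostics, retries, alerting, and network isolation:

```python
from __future__ import annotations
from typing import Callable

def monitor_once(platforms: dict[str, str],
                 is_healthy: Callable[[str], bool],
                 provision: Callable[[], str]) -> list[tuple[str, str]]:
    """One monitoring pass. `platforms` maps virtual platform id -> hosted service.
    Each unhealthy platform is deprovisioned and its service reprovisioned on a
    fresh platform. Returns (failed_id, replacement_id) pairs for an audit log."""
    actions: list[tuple[str, str]] = []
    for pid in list(platforms):           # iterate over a copy; the dict changes below
        if not is_healthy(pid):
            service = platforms.pop(pid)  # deprovision the failed platform
            new_pid = provision()         # hypothetical provisioning hook
            platforms[new_pid] = service  # reprovision the affected service
            actions.append((pid, new_pid))
    return actions
```

Running such a pass periodically under a scheduler turns monitoring into the automatic recovery the services above describe. A platform created during a pass is first checked on the next pass.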


Infrastructure Services

Services that manage the common infrastructure of a virtual grid environment and offer the foundation on which service orientation operates.

QoS Management

In a shared virtualized environment, making sure that sufficient resources are available and that system utilization is managed at a specific quality-of-service (QoS) level, as outlined in the service-level agreement (SLA).

Load Balancing

Dynamically reassigning physical devices to applications as workloads change, to ensure adherence to specified service (performance) levels and optimized utilization of all resources.
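A simple greedy form of load balancing sends each new workload to the currently least-loaded host. The sketch below uses Python's `heapq` and shows one illustrative policy among many. It tracks load in arbitrary integer units and does not check capacity limits or SLA targets:

```python
from __future__ import annotations
import heapq

def assign_least_loaded(hosts: dict[str, int],
                        workloads: list[tuple[str, int]]) -> dict[str, str]:
    """Greedy least-loaded placement: each workload, in arrival order, goes to
    the host with the lowest current load (ties broken by host name)."""
    heap = [(load, name) for name, load in hosts.items()]
    heapq.heapify(heap)
    placement: dict[str, str] = {}
    for workload, demand in workloads:
        load, name = heapq.heappop(heap)             # least-loaded host right now
        placement[workload] = name
        heapq.heappush(heap, (load + demand, name))  # account for the new demand
    return placement
```

With hosts at loads 50, 10, and 30 and incoming demands of 30, 10, and 5, the first workload goes to the idlest host. Subsequent workloads follow the updated loads rather than the initial ones.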

Capacity Planning

Measuring and tracking the consumption of virtual resources, so as to plan when to reserve resources for certain workloads or when new equipment needs to be brought online.

Utilization Metering

Tracking the use of particular resources as designated by management policy and SLA. The metering service could be used for chargeback and billing by higher-level software.
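Here is a minimal sketch of how metered usage might feed chargeback. The record format, resource names, and rates are hypothetical. Amounts are kept in integer cents to avoid floating-point rounding:

```python
from __future__ import annotations
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class UsageRecord:
    account: str   # department or customer being charged
    resource: str  # e.g. "vcpu_hours" or "gb_stored" (hypothetical units)
    quantity: int

def chargeback(records: list[UsageRecord], rates_cents: dict[str, int]) -> dict[str, int]:
    """Aggregate metered usage per account and price it in integer cents.
    An unpriced resource raises KeyError instead of silently billing zero."""
    totals: dict[str, int] = defaultdict(int)
    for r in records:
        totals[r.account] += r.quantity * rates_cents[r.resource]
    return dict(totals)
```

Keeping metering (the records) separate from pricing (the rates) reflects the split in the text. The metering service only tracks use, and higher-level billing software decides what that use costs.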

Fig. 24.3 OGSA service model from OGSA Spec 1.5, July 2006. The figure groups services into the following blocks:

- Provisioning: configuration, deployment, optimization
- Security: authentication, authorization, policy implementation
- Physical environment: hardware, network, sensors, equipment
- Virtual domains: service groups, virtual organizations
- Data: storage management, transport, replica management
- Resources: virtualization, management, optimization
- Execution management: execution planning, workflow, work managers
- Infrastructure profile: required interfaces supported by all services

Execution Management Services

Execution management services are concerned with the problems of instantiating units of work or applications and managing them to completion.

Business Processes Execution

Setting up generic procedures as building blocks to standardize business processes, and enabling interoperability across heterogeneous system management products.

Workflow Automation

Managing a seamless flow of data as part of the business process as it moves from application to application, tracking the completion of the workflow, and managing exceptions.

Execution Resource Allocation

In a virtualized environment, selecting optimal resources for a particular application or task to execute.

Execution Environment Provisioning

Once an execution environment is selected, dynamically provisioning the environment as required by the application, so that a new instance of the application can be created.

Managing Application Lifecycle

Initiating a particular application, tracking its execution status, administering its end-of-life phase, and releasing its virtual resources back to the resource pool.
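The lifecycle tasks above can be modeled as a small state machine. The states and allowed transitions below are a simplified assumption for illustration, not taken from OGSA. Illegal transitions are rejected, so that, for example, an application cannot run before it is provisioned:

```python
from enum import Enum

class State(Enum):
    REQUESTED = "requested"
    PROVISIONED = "provisioned"
    RUNNING = "running"
    COMPLETED = "completed"
    FAILED = "failed"
    RELEASED = "released"  # virtual resources returned to the pool

# Allowed transitions in this assumed, simplified lifecycle.
TRANSITIONS = {
    State.REQUESTED: {State.PROVISIONED},
    State.PROVISIONED: {State.RUNNING},
    State.RUNNING: {State.COMPLETED, State.FAILED},
    State.COMPLETED: {State.RELEASED},
    State.FAILED: {State.RELEASED},
    State.RELEASED: set(),
}

class ApplicationInstance:
    def __init__(self, name: str):
        self.name = name
        self.state = State.REQUESTED
        self.history = [State.REQUESTED]  # tracked execution status

    def advance(self, new_state: State) -> None:
        """Move to new_state, refusing any transition the lifecycle does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"{self.name}: illegal transition "
                             f"{self.state.name} -> {new_state.name}")
        self.state = new_state
        self.history.append(new_state)
```

Both the normal and the failure path end in `RELEASED`. This makes returning resources to the pool an explicit, tracked step rather than an afterthought.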

Data Services

Moving data as required, such as data replication and updates; managing metadata, queries, and federated data resources.

Remote Access

Accessing remote data resources across the grid environment. The services hide the communication mechanism from the service consumer. They can also hide the exact location of the remote data.

Staging

When jobs are executed on a remote resource, the data services are often used to stage input data to that resource, ready for the job to run, and then to move the result to an appropriate place.

Replication

To improve availability and reduce latency, the same data can be stored in multiple locations across a grid environment.

Federation

Data services can integrate data from multiple data sources that are created and maintained separately.

Derivation

Data services should support the automatic generation of one data resource from another data source.

Metadata

Some data services can be used to store descriptions of data held in other data services. For example, a replicated file system may choose to store descriptions of the files in a central catalogue.

Security Services

Facilitate the enforcement of security-related policy within a grid environment.

Authentication

Authentication is concerned with verifying proof of an asserted identity. This functionality is part of the credential validation and trust services in a grid environment.

Identity Mapping

Provide the capability of transforming an identity that exists in one identity domain into an identity within another identity domain.

Authorization

The authorization service resolves policy-based access-control decisions. For the resource that a service requestor asks for, it determines, based on policy, whether or not the requestor is authorized to access that resource.
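The default-deny sketch below illustrates how identity mapping and authorization fit together. The identity map, roles, rule format, and resource paths are all hypothetical. A real grid would evaluate standardized policy documents rather than a Python list:

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    role: str
    resource_prefix: str
    actions: frozenset[str]

# Hypothetical identity mapping: (external domain, user) -> local role.
IDENTITY_MAP = {("partner.example.com", "alice"): "analyst"}

# Hypothetical policy: which roles may perform which actions on which resources.
POLICY = [
    Rule("analyst", "/data/reports/", frozenset({"read"})),
    Rule("operator", "/vm/", frozenset({"read", "start", "stop"})),
]

def authorize(domain: str, user: str, action: str, resource: str) -> bool:
    """Default deny: map the requestor into the local identity domain, then
    permit only if some rule grants this action on this resource."""
    role = IDENTITY_MAP.get((domain, user))
    if role is None:  # identity cannot be mapped: deny
        return False
    return any(r.role == role
               and resource.startswith(r.resource_prefix)
               and action in r.actions
               for r in POLICY)
```

Denying by default means that an unmapped identity, or a request that no rule covers, is refused. Access never depends on a rule's absence.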

Credential Conversion

Provide credential conversion from one type of credential to another type or form of credential. This may include such tasks as reconciling group membership, privileges, attributes, and assertions associated with entities (service consumers and service providers).

Audit and Secure Logging

The audit service, similarly to the identity mapping and authorization services, is policy driven.

Security Policy Enforcement

Enforcing automatic device and software load authentication; tracing identity, access, and trust mechanisms within and across corporate boundaries to provide secure services across firewalls.

Logical Isolation and Privacy Enforcement

Ensuring that a fault in a virtual platform does not propagate to another platform in the same physical machine, and that there are no data leaks across virtual platforms, which could belong to different accounts.

Self-Management Services

Services that operate autonomously to reduce the cost and complexity of owning and operating a grid environment.

Self-Configuring

A set of services adapts dynamically and autonomously to changes in a grid environment, using policies provided by the grid administrators. Such changes could trigger provisioning requests leading to, for example, the deployment of new components or the removal of existing ones, perhaps due to a significant increase or decrease in the workload.
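A self-configuring policy can be as simple as a pair of utilization thresholds supplied by the administrator. In this sketch the threshold values, instance limits, and names are assumed examples. The gap between the scale-out and scale-in thresholds acts as hysteresis, so that small fluctuations do not trigger constant provisioning and removal:

```python
from dataclasses import dataclass

@dataclass
class ScalingPolicy:
    """Administrator-supplied policy; all default values are assumed examples."""
    scale_out_above: float = 0.75  # average utilization that triggers adding an instance
    scale_in_below: float = 0.25   # average utilization that triggers removing one
    min_instances: int = 1
    max_instances: int = 10

def desired_instances(current: int, avg_utilization: float, policy: ScalingPolicy) -> int:
    """Return the instance count to converge to. The caller turns the difference
    from `current` into provisioning or deprovisioning requests."""
    if avg_utilization > policy.scale_out_above:
        current += 1
    elif avg_utilization < policy.scale_in_below:
        current -= 1
    # Clamp to the limits the administrator allows.
    return max(policy.min_instances, min(policy.max_instances, current))
```

Between the two thresholds the function leaves the deployment unchanged. That quiet band is what keeps a self-configuring grid from thrashing.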

Self-Healing

Detect improper operations of and by the resources and services, and initiate policy-based corrective action without disrupting the grid environment.
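The corrective-action part of self-healing can be sketched as one pass over the monitored services. The escalation policy here (restart in place a limited number of times, then relocate) and the `Service` and `heal` names are assumptions for illustration. Detection is reduced to a `healthy` flag that a real monitor would set.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    healthy: bool      # set by a (hypothetical) health monitor
    restarts: int = 0  # corrective restarts attempted so far


def heal(services: list, max_restarts: int = 3) -> list:
    """One pass of a hypothetical self-healing loop.

    Assumed policy: restart an unhealthy service in place up to max_restarts
    times, then escalate by relocating it to another node. Healthy services are
    left untouched, so the rest of the grid keeps running.
    """
    actions = []
    for svc in services:
        if svc.healthy:
            continue
        if svc.restarts < max_restarts:
            svc.restarts += 1
            actions.append((svc.name, "restart"))
        else:
            actions.append((svc.name, "relocate"))
    return actions


services = [Service("db", True), Service("web", False), Service("queue", False, restarts=3)]
print(heal(services))  # [('web', 'restart'), ('queue', 'relocate')]
```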

Self-Optimizing

Tune different elements in a grid environment for the best efficiency in meeting end-user and business needs. The tuning actions could mean reallocating resources to improve overall utilization, or optimizing to enforce a service-level agreement (SLA).
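Reallocating resources to improve utilization is often framed as server consolidation. The sketch below swaps in first-fit decreasing bin packing as one plausible technique: it places virtual machines on as few hosts as possible while capping each host at a utilization ceiling, which stands in for the headroom an SLA might require. The function name, the load figures, and the 0.8 ceiling are assumptions.

```python
def consolidate(vm_loads: dict, max_utilization: float = 0.8) -> list:
    """Pack VMs onto as few hosts as possible (first-fit decreasing).

    Loads are fractions of one host's capacity. No host is filled beyond
    max_utilization, leaving headroom that an SLA might require.
    """
    hosts = []  # VM names placed on each host
    used = []   # total load on each host
    for vm, load in sorted(vm_loads.items(), key=lambda kv: kv[1], reverse=True):
        if load > max_utilization:
            raise ValueError(f"{vm} alone exceeds the per-host ceiling")
        for i, u in enumerate(used):
            if u + load <= max_utilization:  # first host with room wins
                hosts[i].append(vm)
                used[i] += load
                break
        else:  # no existing host has room: bring another host into use
            hosts.append([vm])
            used.append(load)
    return hosts


loads = {"vm-a": 0.6, "vm-b": 0.45, "vm-c": 0.3, "vm-d": 0.15, "vm-e": 0.1}
print(consolidate(loads))  # [['vm-a', 'vm-d'], ['vm-b', 'vm-c'], ['vm-e']]
```

Here five virtual machines end up on three hosts, none loaded beyond 80% of capacity; raising the ceiling improves utilization further but leaves less room to absorb load spikes.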


a)

b)

c)

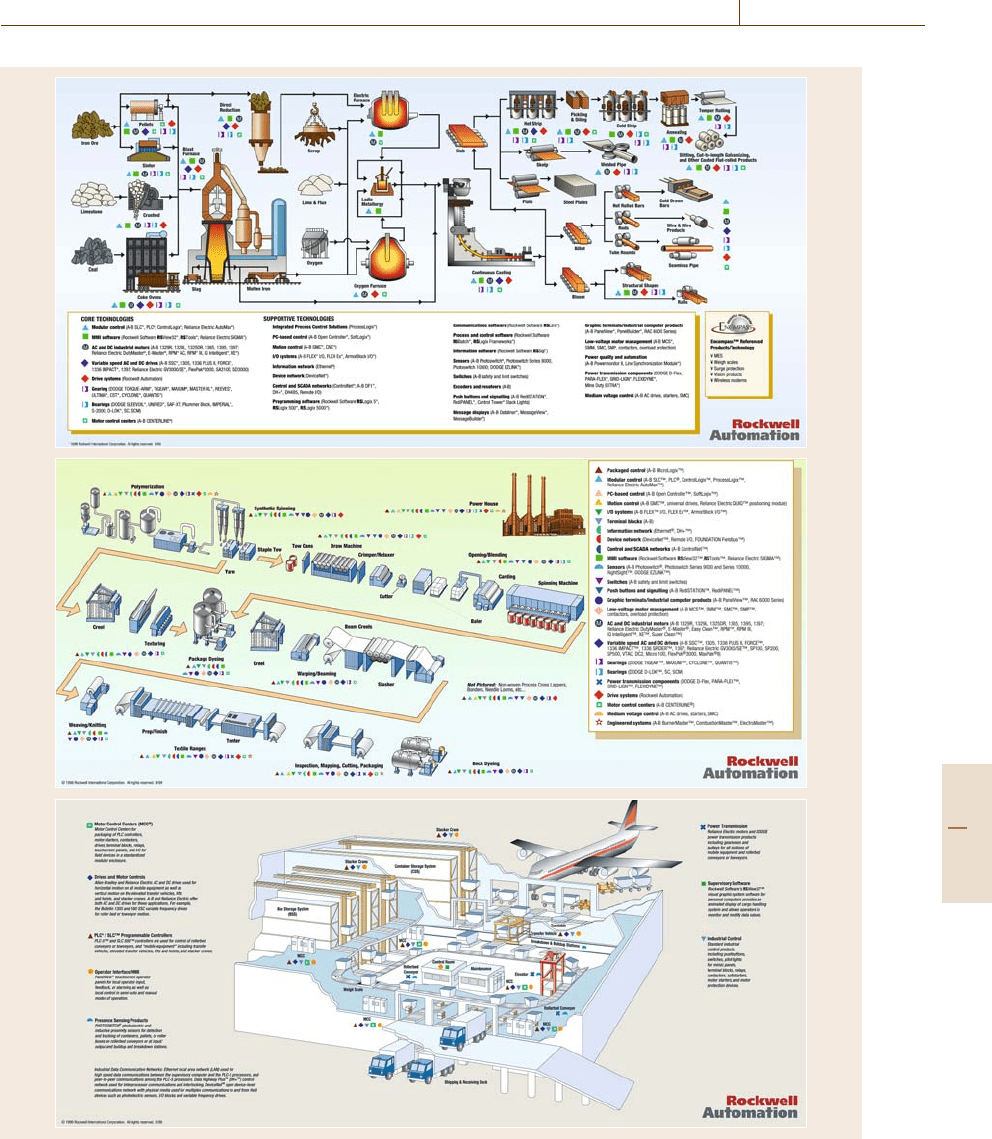

Fig. 24.4a–c Examples of applications that can benefit from virtual services as shown in Fig. 24.3. (a) Steel industry;

(b) textile industry; (c) material handling at a cargo airport (courtesy of Rockwell Automation, Inc.)


24.4 Grid Computing

The most common description of grid computing includes an analogy to a power grid. When you plug an appliance or other object requiring electrical power into a receptacle, you expect that there is power of the correct voltage available, but the actual source of that power is not known. Your local utility company provides the interface into a complex network of generators and power sources and provides you with (in most cases) an acceptable quality of service for your energy demands. Rather than each house or neighborhood having to obtain and maintain its own generator of electricity, the power grid infrastructure provides a virtual generator. The generator is highly reliable and adapts to the power needs of the consumers based on their demand.

The vision of grid computing is similar. Once the proper grid computing infrastructure is in place, a user will have access to a virtual computer that is reliable and adaptable to the user’s needs. This virtual computer will consist of many diverse computing resources, but these individual resources will not be visible to the user, just as the consumer of electric power is unaware of how their electricity is being generated. In a grid environment, computers are used not only to run the applications but also to secure the allocation of the services that will run them. This allocation is done automatically to maintain the abstraction of anonymous resources [24.10].
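The idea of a virtual computer built from anonymous resources can be sketched as a broker that accepts a task, allocates a resource on the user's behalf, and returns only the result. This is a hypothetical, single-process simulation: the `GridBroker` class, the site names, and the "most free slots" selection rule are assumptions, and the task runs locally instead of being shipped to a remote node.

```python
from typing import Callable


class GridBroker:
    """Hypothetical broker presenting several resources as one 'virtual computer'."""

    def __init__(self, resources: dict):
        # resource name -> free slots; the names stay internal to the broker
        self._free = dict(resources)

    def run(self, task: Callable, *args):
        """Allocate a resource automatically, run the task, and return only its result."""
        if not self._free or max(self._free.values()) <= 0:
            raise RuntimeError("no capacity available in the grid")
        node = max(self._free, key=self._free.get)  # the site with the most free slots
        self._free[node] -= 1
        try:
            # A real grid would dispatch the task to `node`; this sketch runs it locally.
            return task(*args)
        finally:
            self._free[node] += 1  # release the slot even if the task fails


broker = GridBroker({"site-a": 2, "site-b": 4})
print(broker.run(pow, 2, 10))  # 1024
```

The caller never learns which site executed the task, which mirrors the power-grid consumer who draws electricity without knowing which generator produced it.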

Because these resources are widely distributed and may belong to different organizations across many countries, there must be standards for grid computing that will allow a secure and robust infrastructure to be built. Standards such as the open grid services architecture (OGSA) and tools such as those provided by the Globus Toolkit provide the necessary framework. Initially, businesses will build their own infrastructures, but over time, these grids will become interconnected. This interconnection will be made possible by standards such as OGSA, and the analogy of grid computing to the power grid will become real.

The ancestry of grids is rooted in high-performance computing (HPC) technologies, where resources are ganged together toward a single task to deliver the necessary power for intensive computing projects such as weather forecasting, oil exploration, nuclear reactor simulation, and so on. In addition to expensive HPC supercomputer centers, mostly government funded, an HPC grid emerged to link together these resources and increase utilization. In a concurrent development, grid technology was used to join not just supercomputers, but literally millions of workstations and personal computers (PCs) across the globe.

24.5 Summary and Emerging Challenges

In essence, a virtual service-oriented environment encourages the automated scheduling of data resources independently of the applications that use the data and of the compute engines that run those applications. SOA decouples data from applications and opens the way to automated mechanisms that align IT with the business through business process management. Finally, grid technologies provide dynamic, on-the-fly resource management.

Most challenges in the transition to a virtualized service-oriented grid environment will likely be of both technical and nontechnical origin. One example is implementing end-to-end trust management: even if it is possible to assemble applications automatically from simpler service components, how do we ensure that these components can be trusted? And even if the applications that these components support function correctly, how do we ensure that they will provide satisfactory performance and function reliably?

A number of service components that can be used to assemble more complex applications are available from well-known providers: Microsoft Live, Amazon.com, Google, eBay, and PayPal. The authors expect that, as technology progresses, smaller players worldwide will enter the market, fulfilling every conceivable IT need. These resources may represent business logic building blocks, storage over the network, or even computing resources in the form of virtualized servers.

The expected adoption of virtual service-oriented environments will increase the level of automation in the provisioning and delivery of IT services. Each of the constituent technologies brings a unique automation capability into the mix. Grid technology enables the automatic harnessing of geographically distributed, anonymous computing resources. Service orientation
