Mei C., Zhou J., Peng X. Simulation and Optimization of Furnaces and Kilns for Nonferrous Metallurgical Engineering


10 Multi-objective Systematic Optimization of FKNME

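For subarea $A_m$:

$$H_m = \frac{1}{A_m}\left(\sum_{i=1}^{n}\overline{g_i s_m}\,\sigma T_{g,i}^4 + \sum_{j=1}^{N}\overline{s_j s_m}\,J_j\right) \quad (\mathrm{kJ\cdot m^{-2}\cdot h^{-1}}) \tag{10.5}$$

where $\overline{g_i s_m}$ and $\overline{s_j s_m}$ are respectively the gas-surface and surface-surface direct exchange areas calculated by the zone method, and $J_j$ is the effective radiation of subsurface $j$:

$$J_j = \varepsilon_j \sigma T_j^4 + (1 - \varepsilon_j) H_j \quad (\mathrm{kJ\cdot m^{-2}\cdot h^{-1}}) \tag{10.6}$$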

$\alpha_i$ is the convective heat transfer coefficient between the gas and the material in the furnace; many empirical correlations for calculating $\alpha_i$ are available in handbooks. The parameter $q_m$ (kJ/t) denotes the heat (absorbed from the furnace) required to process unit load or unit output of product (usually measured in tons).
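Eqs. 10.5 and 10.6 are coupled: the incident flux on a surface zone depends on the effective radiation of every other zone, which in turn depends on its own incident flux. The minimal Python sketch below solves them together by fixed-point iteration. It assumes the effective radiation takes the form $J_j = \varepsilon_j\sigma T_j^4 + (1-\varepsilon_j)H_j$, that the direct exchange areas have already been obtained from the zone method, and SI units (W/m², σ = 5.67×10⁻⁸ W·m⁻²·K⁻⁴) rather than the kJ/(m²·h) used in the text. The function name, argument names and example values are illustrative only.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def solve_zone_radiosity(area, eps, t_surf, t_gas, gs, ss, tol=1e-6, max_iter=10000):
    """Iterate H_m (Eq. 10.5) and J_j (Eq. 10.6) to a consistent solution.

    area[m]:   area of surface zone m, m^2
    eps[m]:    emissivity of surface zone m
    t_surf[m]: temperature of surface zone m, K
    t_gas[i]:  temperature of gas zone i, K
    gs[i][m]:  gas-surface direct exchange area between gas i and surface m, m^2
    ss[j][m]:  surface-surface direct exchange area between surfaces j and m, m^2
    Returns (H, J), both in W/m^2.
    """
    n_s = len(area)
    emit = [eps[m] * SIGMA * t_surf[m] ** 4 for m in range(n_s)]
    # Gas emission arriving at each surface does not depend on J: compute it once.
    gas_in = [sum(gs[i][m] * SIGMA * t_gas[i] ** 4 for i in range(len(t_gas)))
              for m in range(n_s)]

    def incident(J):
        # Eq. 10.5: H_m = (gas term + sum_j ss_jm * J_j) / A_m
        return [(gas_in[m] + sum(ss[j][m] * J[j] for j in range(n_s))) / area[m]
                for m in range(n_s)]

    J = emit[:]  # first guess: emission only, no reflected radiation
    for _ in range(max_iter):
        H = incident(J)
        # Eq. 10.6: emitted part plus reflected part of the incident flux
        J_new = [emit[m] + (1.0 - eps[m]) * H[m] for m in range(n_s)]
        change = max(abs(a - b) for a, b in zip(J_new, J))
        J = J_new
        if change < tol:
            return incident(J), J
    raise RuntimeError("zone radiosity iteration did not converge")


# Hypothetical two-surface, one-gas-zone example.
H, J = solve_zone_radiosity(
    area=[1.0, 1.0], eps=[0.8, 0.8], t_surf=[1000.0, 800.0],
    t_gas=[1400.0], gs=[[0.3, 0.3]], ss=[[0.2, 0.3], [0.3, 0.2]],
)
print([round(h) for h in H], [round(j) for j in J])
```

Fixed-point iteration is used here for brevity; it converges when each pass damps the reflected part by $(1-\varepsilon)$ enough, as with the example values. For large zone models a direct linear solve is usually the more robust choice.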

b) Output rate function defined by the time needed to transform the material. For a continuous process, the average residence time of the loads in the reaction zone is:

$$t = \frac{M_{wl}}{G} \quad (\mathrm{h}) \tag{10.7}$$

where $M_{wl}$ is the capacity of the reaction zone, measured in t or kg; $G$ is the mass flow rate of the loads passing through the reaction zone, t/h.

The processing capability $G$ of the furnace is obtained by rearranging Eq. 10.7:

$$G = \frac{M_{wl}}{t}$$

Here $t$ is the time required to complete all the physical and chemical processes needed to process or transform the loads. If $t^*$ is the complete reaction time, determined either from a reaction kinetics model or by experiment, then:

$$t \ge t^* \tag{10.8}$$

The complete reaction times predicted by simulation for different processes are listed in Table 10.1.

For periodically operated FKNME:

$$G = \frac{M_{wl}}{\sum_{i=1}^{n} t_i} \tag{10.9}$$

where $M_{wl}$ is the mass of the loads per working cycle, t; $t_i$ is the time needed for process $i$. Taking the copper anode reverberatory furnace as an example, the $t_i$ are the times for material loading, melting, oxidizing, reducing and casting. These times are normally determined empirically. To improve the productivity of the furnace, each individual process should be investigated so that its processing time can be minimized and the process intensified.

Xiaoqi Peng, Yanpo Song, Zhuo Chen and Junfeng Yao
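Eqs. 10.7 to 10.9 translate directly into code. In the minimal Python sketch below, the continuous case enforces the constraint that the residence time may not be shorter than the complete reaction time $t^*$, and the batch case divides the charge per working cycle by the summed stage times. The function names are illustrative, and the stage durations in the usage example are hypothetical values chosen only to show the calculation.

```python
def continuous_output(m_wl, t, t_star):
    """Processing capability G = M_wl / t (Eq. 10.7 rearranged).

    m_wl:   capacity of the reaction zone, t
    t:      residence time of the loads, h
    t_star: complete reaction time t*, h; the residence time must satisfy t >= t*
    Returns G in t/h.
    """
    if t < t_star:
        raise ValueError("residence time is shorter than the complete reaction time t*")
    return m_wl / t


def batch_output(m_wl, stage_times):
    """Output rate of a periodically operated furnace, G = M_wl / sum(t_i) (Eq. 10.9).

    m_wl:        mass of loads per working cycle, t
    stage_times: mapping of process stage name to its duration t_i, h
    """
    total = sum(stage_times.values())
    if total <= 0:
        raise ValueError("total cycle time must be positive")
    return m_wl / total


# Hypothetical cycle for a copper anode furnace (durations are illustrative only).
cycle = {"loading": 2.0, "melting": 8.0, "oxidizing": 3.0, "reducing": 3.0, "casting": 4.0}
print(batch_output(200.0, cycle))  # 200 t over a 20 h cycle -> 10.0 t/h
```

Because $G$ falls as any $t_i$ grows, shortening the longest stages gives the largest gain, which is why each individual process is worth investigating.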

c) Output rate function defined by the conveying capability of the furnace. For FKNME that load and unload materials mechanically, the conveying capacity is often a direct indication of the output rate. Examples are the rotary kiln, mechanical roasting shaft kilns, copper refining furnaces, cathode zinc melting furnaces and aluminum melting furnaces. Failure to match the functions of the different parts of the conveying mechanism properly can be an important factor limiting the output rate. The conveying capacity of the rotary kiln is relatively complicated to determine; the influencing factors include the rotation speed, the slope of the kiln, the angle of repose of the materials and the cross-sectional filling ratio of the kiln.

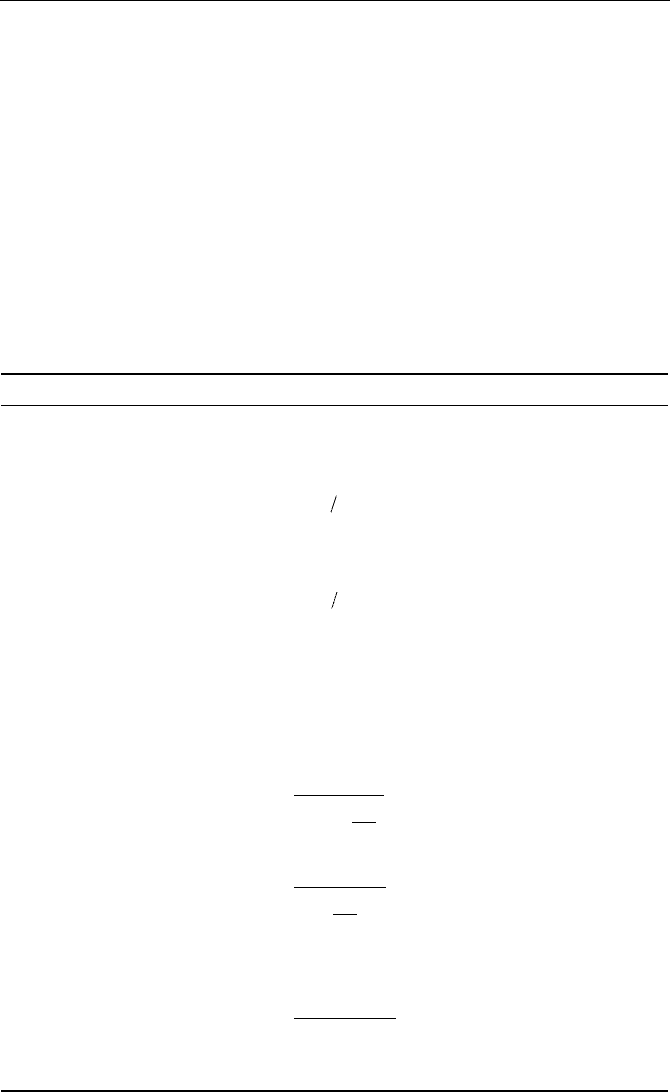

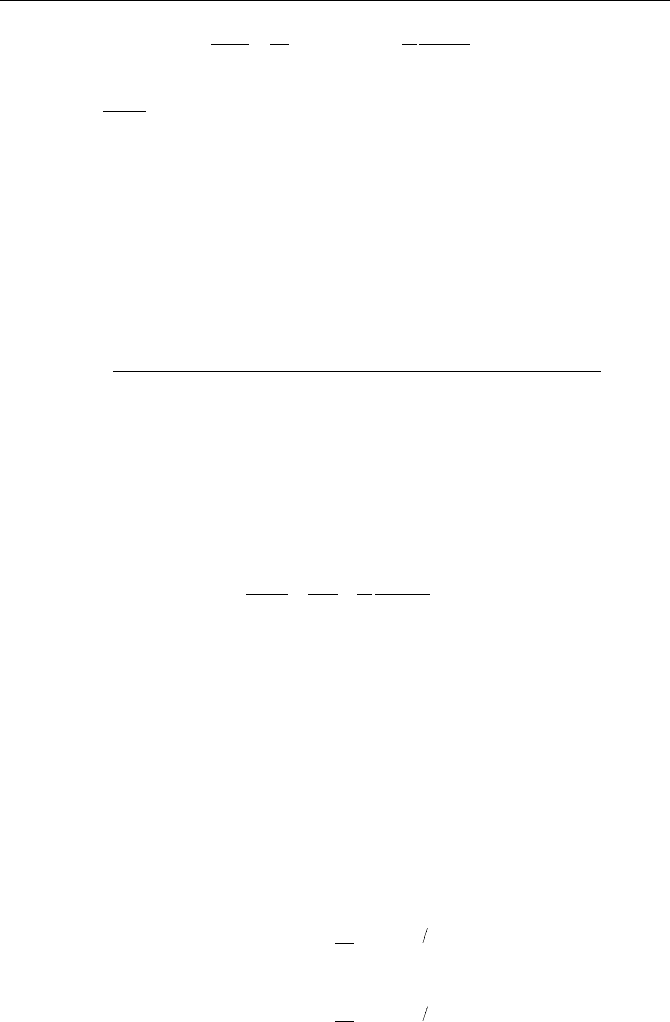

Table 10.1 Estimation of time to complete a process (Xiao and Xie, 1997; Mei, 2000)

| Process | Reaction model | $t^*$ ① | Remark |
|---|---|---|---|
| Transformation of solid particles in gas-solid reactions | Homogeneous reaction | $\dfrac{6.908}{k_v C_{Ag}}$ | Time needed for 99.9% transformation |
| | Gas-film (membrane) diffusion control in the shrinking core model | $\dfrac{\rho_{sm} d_s}{6 b k_f C_{Ag}}$ | $b$ is the number of moles of solid reactant reacting with 1 mol of gas |
| | Chemical reaction control in the shrinking core model | $\dfrac{\rho_{sm} d_s}{2 b k_c C_{Ag}}$ | |
| | Diffusion control through the ash layer in the shrinking core model | $\dfrac{\rho_{sm} d_s^2}{24 b \delta_{fa} C_{Ag}}$ | |
| Loads heated by air flow in shaft furnaces (fixed or moving beds) | a) Reaction heat negligible; b) $W_{qt} > W_{gl}$ | $\dfrac{3(1-\varepsilon)\rho_{gl} c_{p,gl}}{\alpha_v\left(1 - W_{gl}/W_{qt}\right)}$ | Load heated to 95% of the gas exhaust temperature. $W_{gl}$ is the water equivalent of the material flow; $W_{qt}$ is the water equivalent of the gas flow |
| | a) Reaction heat negligible; b) $W_{qt} < W_{gl}$ | $\dfrac{3(1-\varepsilon)\rho_{gl} c_{p,gl}}{\alpha_v\left(1 - W_{qt}/W_{gl}\right)}$ | |
| Thin flat materials melted in furnaces at constant temperature | Internal thermal resistance of the material negligible | $\dfrac{G\,(q_D + c_{p,rt}\,\Delta t_{gr})}{Q_{q-L} - Q_{ss}}$ | $q_D$ is the heat needed to melt unit mass of material, kJ/kg; $c_{p,rt}$ is the specific heat of the melt, kJ/(kg·K); $Q_{ss}$ is the heat loss of the melting bath, kJ/h; $Q_{q-L}$ is the heat transferred between the gas and the surface of the melting bath, kJ/h |

Table 10.1 (continued)

| Process | Reaction model | $t^*$ ① | Remark |
|---|---|---|---|
| Thin flat materials heated in furnaces at constant temperature | Internal thermal resistance of the material neglected | $\dfrac{10^8 \rho c V}{C_\Sigma F T_q^3}\left[\phi\!\left(\dfrac{T''_{wl}}{T_q}\right) - \phi\!\left(\dfrac{T'_{wl}}{T_q}\right)\right]$, where $\phi(x) = \dfrac{1}{2}\left[\dfrac{1}{2}\ln\dfrac{1+x}{1-x} + \arctan x\right]$ | $\rho$, $c$, $V$ and $F$ are respectively the density, specific heat, volume and heated area of the material to be heated; $T_q$ is the gas temperature; $T'_{wl}$ and $T''_{wl}$ are the initial and final temperatures of the material |
| Slag settling in a smelting bath | Melt without disturbing motion in the direction normal to the fluid flow | $\dfrac{18 \rho_z \nu_z h_{zc}}{(\rho_{rt} - \rho_z)\, g\, d_m^2}$ | $h_{zc}$ is the thickness of the slag layer; $\nu_z$ is the kinematic viscosity of the slag melt; $\rho_z$ is the density of the slag melt; $g$ is the gravitational acceleration; $d_m$ is the diameter of the metal or alloy droplets; $\rho_{rt}$ is the density of the metal or alloy melt |

① Nomenclature: $k_v$ and $k_c$ are the reaction rate constants based on particle volume and on particle surface, cm³/(mol·s) and cm/s respectively; $k_f$ is the mass transfer coefficient between the gas and the particles, cm/s; $\delta_{fa}$ is the gas diffusivity in the ash layer, cm²/s; $\rho_{sm}$ is the molar density of the solid reactant, mol/cm³.
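The closed-form times in Table 10.1 are easy to tabulate for a given set of properties. The Python sketch below evaluates several of them, written in the standard forms: $t^* = \ln\frac{1}{1-X}/(k_v C_{Ag})$ for a first-order homogeneous reaction (which gives $6.908/(k_v C_{Ag})$ at $X$ = 99.9%), $\rho_{sm} d_s/(6 b k_f C_{Ag})$ for gas-film control, $\rho_{sm} d_s/(2 b k_c C_{Ag})$ for chemical reaction control, $\rho_{sm} d_s^2/(24 b \delta_{fa} C_{Ag})$ for ash-layer diffusion control, and the Stokes-law settling time $18\rho_z\nu_z h_{zc}/((\rho_{rt}-\rho_z) g d_m^2)$. Any consistent unit set may be used; the function names and example values are hypothetical.

```python
import math


def t_homogeneous(k_v, c_ag, conversion=0.999):
    """First-order homogeneous reaction: t* = ln(1/(1 - X)) / (k_v C_Ag)."""
    return math.log(1.0 / (1.0 - conversion)) / (k_v * c_ag)


def t_film_control(rho_sm, d_s, b, k_f, c_ag):
    """Shrinking core, gas-film diffusion control: t* = rho_sm d_s / (6 b k_f C_Ag)."""
    return rho_sm * d_s / (6.0 * b * k_f * c_ag)


def t_reaction_control(rho_sm, d_s, b, k_c, c_ag):
    """Shrinking core, chemical reaction control: t* = rho_sm d_s / (2 b k_c C_Ag)."""
    return rho_sm * d_s / (2.0 * b * k_c * c_ag)


def t_ash_control(rho_sm, d_s, b, delta_fa, c_ag):
    """Shrinking core, ash-layer diffusion control: t* = rho_sm d_s^2 / (24 b delta_fa C_Ag)."""
    return rho_sm * d_s ** 2 / (24.0 * b * delta_fa * c_ag)


def t_slag_settling(rho_z, nu_z, h_zc, rho_rt, d_m, g=9.81):
    """Stokes settling time of metal droplets of diameter d_m through a slag layer h_zc."""
    return 18.0 * rho_z * nu_z * h_zc / ((rho_rt - rho_z) * g * d_m ** 2)


# Hypothetical slag and copper-alloy values in SI units: a 1 mm droplet through 0.5 m of slag.
print(round(t_slag_settling(3000.0, 1e-4, 0.5, 8000.0, 1e-3)), "s")
```

The settling time scales with $1/d_m^2$, so doubling the droplet size cuts it by a factor of four; this is the main lever when slag separation limits the cycle.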

The output rate function of the rotary kiln is written as:

$$G = u_m A \phi \quad (\mathrm{t/h})$$

where $u_m$ is the mean axial speed of the materials in the kiln, m/h; $A$ is the cross-sectional area of the empty kiln, m²; and $\phi$ is the filling ratio of the materials in the kiln cross section.

For ordinary drying and roasting kilns:

$$u_m \approx 5.78\, D_r n \beta \quad (\mathrm{m/h}) \tag{10.10}$$

where $D_r$ is the diameter of the empty kiln, m; $\beta$ is the inclination angle of the kiln axis, (°); and $n$ is the rotation speed, r/min. In high-temperature sintering kilns, the materials soften, agglomerate and sinter in the high-temperature zone. In this case:

$$u_m \approx 2.32\,\frac{D_r n i \sin^3\theta}{\sin\alpha\,(2\theta - \sin 2\theta)} \quad (\mathrm{m/h}) \tag{10.11}$$

where $i$ is the slope of the kiln, %; $2\theta$ is the central angle of the sector occupied by the materials in the kiln cross section; and $\alpha$ is the angle of repose of the materials in the rotating kiln.
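A short Python sketch of Eq. 10.10 combined with the kiln output function follows. It assumes the empty-kiln cross-section is the circle $A = \pi D_r^2/4$. Because $u_m A \phi$ as written is a volumetric rate (m³/h when $u_m$ is in m/h), the sketch takes a bulk density argument to convert the result to t/h; that density, like the function names and example numbers, is an assumption not given in the text.

```python
import math


def kiln_axial_speed(d_r, n, beta_deg):
    """Eq. 10.10 for drying and roasting kilns: u_m ~ 5.78 D_r n beta, m/h.

    d_r:      diameter of the empty kiln, m
    n:        rotation speed, r/min
    beta_deg: inclination of the kiln axis, degrees
    """
    return 5.78 * d_r * n * beta_deg


def kiln_output(u_m, d_r, fill_ratio, bulk_density):
    """Output rate G = u_m A phi, converted to t/h with an assumed bulk density (t/m^3)."""
    area = math.pi * d_r ** 2 / 4.0       # cross-section of the empty kiln, m^2
    volumetric = u_m * area * fill_ratio  # m^3/h of material moving along the kiln
    return volumetric * bulk_density      # t/h


# Hypothetical kiln: 3 m diameter, 1 r/min, 2 degree inclination, 10 % fill, 1.5 t/m^3.
u = kiln_axial_speed(3.0, 1.0, 2.0)
print(u, kiln_output(u, 3.0, 0.10, 1.5))
```

Eq. 10.11 is not coded here; for sintering kilns the same `kiln_output` function would simply receive $u_m$ from that expression instead.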

For periodically operated FKNME, the material unloading speed (during the casting period) depends on the capability of the casting machine. For continuous casting, the speed is determined by the cross-sectional area of the crystallizer and the forced-cooling capacity available for crystallization. In this case, the output rate function usually has to be determined empirically.

d) Output rate function defined by gas flux (or gas exhaust capability). In many furnaces and kilns, the gas flow rate is strictly restricted, which in turn limits the output capacity of the furnace.

If $u_{sy}$ is the velocity of the high-temperature gases in the furnace, the output rate of the furnace is:

$$G = \frac{3600\, A\, u_{sy}}{V_{dc}} \tag{10.12}$$

where $u_{sy}$ is the economical gas velocity, m/s; $A$ is the cross-sectional area of the furnace chamber, m²; and $V_{dc}$ is the gas volume needed to process unit mass of material or product, m³/t, determined from mass and heat balance calculations. The range of limits for $u_{sy}$ is given in Table 10.2.
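Eq. 10.12 can be paired with a check against the velocity window of Table 10.2. The Python sketch below does both. The 10 to 16 m/s window in the example is the Table 10.2 entry for flame furnaces without powder in the bath, while the chamber area and specific gas volume are hypothetical values, with $V_{dc}$ taken in m³ per tonne so that $G$ comes out in t/h.

```python
def gas_limited_output(u_sy, area, v_dc):
    """Eq. 10.12: G = 3600 u_sy A / V_dc.

    u_sy: gas velocity in the furnace chamber, m/s
    area: cross-sectional area of the chamber, m^2
    v_dc: gas volume needed per tonne of material or product, m^3/t
    Returns G in t/h (the factor 3600 converts m^3/s to m^3/h).
    """
    return 3600.0 * u_sy * area / v_dc


def check_velocity(u_sy, window):
    """Raise if u_sy lies outside the (low, high) window taken from Table 10.2."""
    low, high = window
    if not low <= u_sy <= high:
        raise ValueError(f"gas velocity {u_sy} m/s outside recommended {low}-{high} m/s")
    return u_sy


FLAME_FURNACE_NO_POWDER = (10.0, 16.0)  # m/s, Table 10.2

u = check_velocity(12.0, FLAME_FURNACE_NO_POWDER)
print(gas_limited_output(u, area=4.0, v_dc=20000.0))  # hypothetical A and V_dc
```

The form of Eq. 10.12 shows the two levers for raising output when gas flow is limiting: operating closer to the upper velocity limit, or lowering $V_{dc}$, for example through oxygen enrichment.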

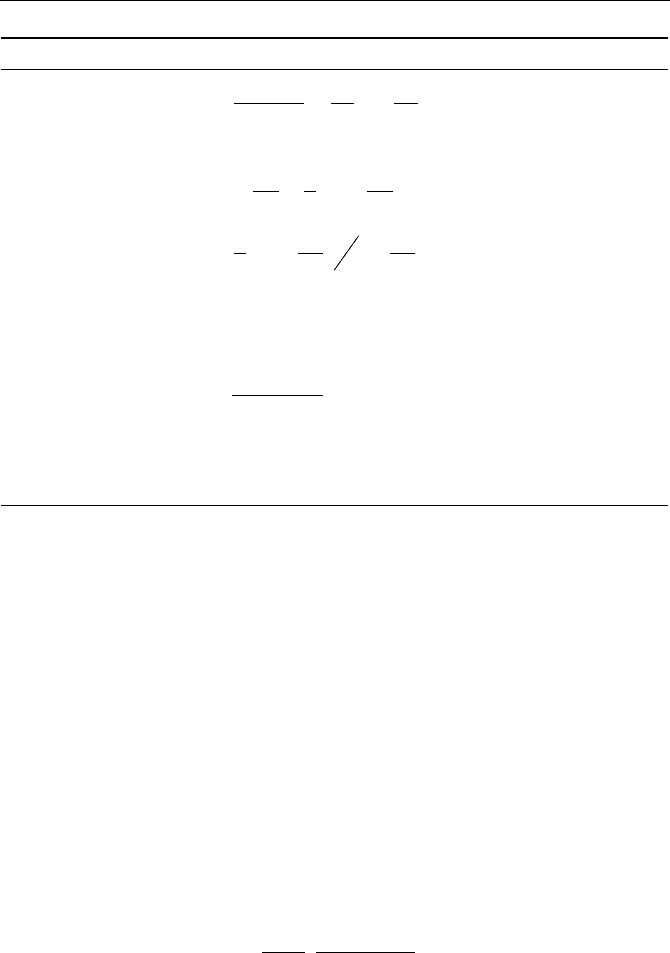

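Table 10.2 Limits of the proper gas speed $u_{sy}$ in the hearth or flue

| FKNME type | $u_{sy}$ / (m·s⁻¹) | Remarks |
|---|---|---|
| Dense phase in fluidized bed | $u_{mf} < u_{sy} < u_t$ | Refer to Section 8.1 |
| Circulating fluidized bed | $u_{cd} < u_{sy} < u_{FD}$ | |
| Gas drying or conveying | $u_{sy} < u_{FD}$ | |
| Shaft smelting furnaces and blast smelting furnaces | $u_{sy} < u_{mf}$ | |
| Flame furnace (without powder in bath) | 10–16 | |
| Flame furnace (with powder in bath) | 6–9 | |
| Rotary kilns (for roasting and sintering) | 4–8 | |
| Rotary kilns (with a large amount of powder material) | 2.5–5.0 | |
| Gas vent or wind pipe, density of conveying medium 1600 kg·m⁻³ | 2.4 | |
| Gas vent or wind pipe, density of conveying medium 800 kg·m⁻³ | 3.0 | |
| Gas vent or wind pipe, density of conveying medium 160 kg·m⁻³ | 4.9 | |
| Gas vent or wind pipe, density of conveying medium 16 kg·m⁻³ | 9.4 | |
| Gas vent or wind pipe, density of conveying medium 0.16 kg·m⁻³ | 18.0 | |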

A common way to intensify the process for higher output is to raise the effective gas flow rate through the furnace or kiln. This can be done by changing either the material properties or the gas composition. In conventional fluidized beds, for example, granulating a powdered load increases the particle size and thus raises the critical (minimum) fluidizing velocity $u_{mf}$ and the terminal velocity $u_t$ or $u_{FD}$. Enriching the air with oxygen also effectively reduces the quantity of inert gases such as nitrogen.


10.2.2

Quality control functions

Good processing quality of the feed in a furnace has two aspects:

a) High reactivity, i.e., a high conversion rate.

b) A high direct recovery rate of the materials in the process (in other words, low burning or volatilization loss).

The control functions for conversion rate and recovery rate vary widely between furnaces/kilns and processes, and there is no universal expression covering all situations.

For gas-solid reactions, many correlations have been reported between the conversion and factors such as the residence time in the reaction zone, the gas-phase composition and the reaction rate constant. Some examples are given in Table 10.3.

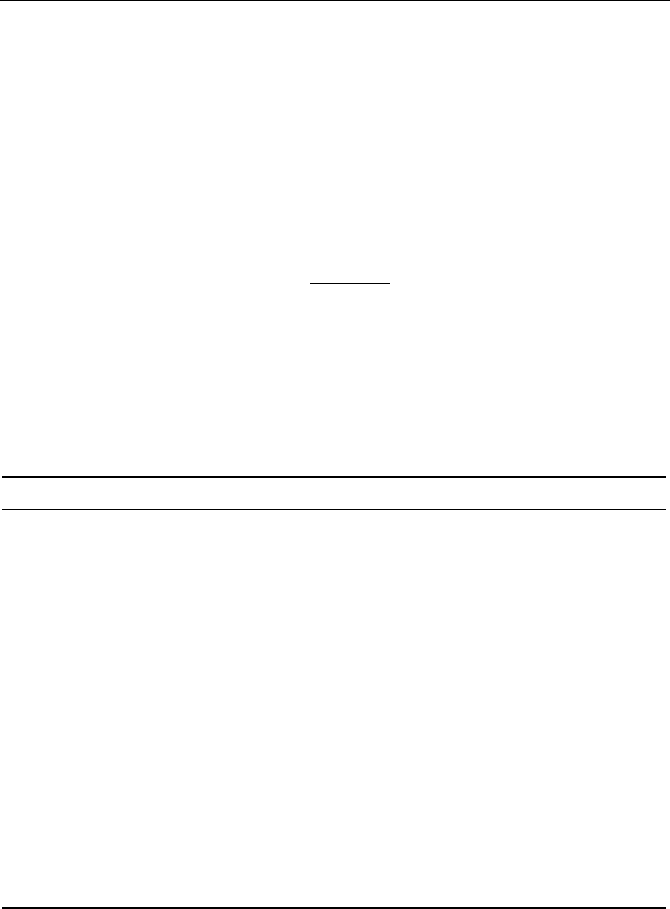

Table 10.3 Conversion in gas-solid reactions (Hetsroni, 1997)

| Kinetic model | Average conversion $\bar{x}_B$ ① | Remark |
|---|---|---|
| **Uniform reaction model** | | |
| Single stage | $1 - \dfrac{1}{1 + k_v C_A \bar{t}}$ | |
| $N$ stages in series | $1 - \left[\dfrac{1}{1 + k_v C_A \bar{t}_i}\right]^N$ | $\bar{t}_i = \bar{t}/N$ |
| **Shrinking core model** | | |
| a) Gas film diffusion control, single stage | $\dfrac{1 - e^{-\beta}}{\beta}$ | $\beta = t^*/\bar{t}$ |
| a) Gas film diffusion control, $N$ stages in series | $\dfrac{1}{N\beta}\left[N - e^{-N\beta}\displaystyle\sum_{m=0}^{N-1}\dfrac{(N-m)(N\beta)^m}{m!}\right]$ | $m$ is a natural number ($m \le N-1$) |
| b) Chemical reaction control, single stage | $\dfrac{3}{\beta} - \dfrac{6}{\beta^2} + \dfrac{6}{\beta^3}\left(1 - e^{-\beta}\right)$; when $\beta \ll 1$: $1 - 0.25\beta + 0.05\beta^2 - 0.0083\beta^3$ | |
| b) Chemical reaction control, $N$ stages in series | $1 - \displaystyle\sum_{k=0}^{3}\binom{3}{k}\dfrac{(-1)^k (N+k-1)!}{(N-1)!\,(N\beta)^k}\left[1 - e^{-N\beta}\sum_{m=0}^{N+k-1}\dfrac{(N\beta)^m}{m!}\right]$ | |
| c) Ash diffusion control, single stage | $1 - 0.2\beta + 0.045\beta^2 - 0.0089\beta^3 + 0.0015\beta^4$ | |

① Symbols in this table are defined as in Table 10.1.
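The shrinking-core entries of Table 10.3 can be evaluated numerically. The Python sketch below implements the gas-film control formulas for one and for $N$ well-mixed stages and the exact chemical-reaction-control formula for one stage, with $\beta = t^*/\bar{t}$ (complete reaction time over mean residence time). The $N$-stage expression used is $\bar{x}_B = \frac{1}{N\beta}\left[N - e^{-N\beta}\sum_{m=0}^{N-1}\frac{(N-m)(N\beta)^m}{m!}\right]$, obtained by averaging the single-particle conversion over the residence-time distribution of $N$ equal stirred stages; it reduces to the single-stage result when $N = 1$. The function names are illustrative, and the code is a sketch rather than a validated design tool.

```python
import math


def xbar_film_single(beta):
    """Gas-film control, one well-mixed stage: (1 - e^-beta) / beta."""
    return (1.0 - math.exp(-beta)) / beta


def xbar_film_series(beta, n):
    """Gas-film control, n equal well-mixed stages in series.

    x = (N - e^{-N beta} * sum_{m<N} (N - m)(N beta)^m / m!) / (N beta)
    Intended for moderate n; a very large n * beta overflows the power term.
    """
    s = n * beta
    total = sum((n - m) * s ** m / math.factorial(m) for m in range(n))
    return (n - math.exp(-s) * total) / s


def xbar_reaction_single(beta):
    """Chemical reaction control, one well-mixed stage (exact form).

    Loses precision for very small beta through cancellation; use the series there.
    """
    return 3.0 / beta - 6.0 / beta ** 2 + 6.0 / beta ** 3 * (1.0 - math.exp(-beta))


def xbar_reaction_small_beta(beta):
    """Series approximation quoted in Table 10.3 for beta << 1."""
    return 1.0 - 0.25 * beta + 0.05 * beta ** 2 - 0.0083 * beta ** 3


for beta in (0.1, 0.5, 1.0):
    print(beta, xbar_film_single(beta), xbar_reaction_single(beta),
          xbar_reaction_small_beta(beta))
```

Comparing `xbar_film_series` for increasing `n` shows how staging a fluidized-bed reactor narrows the residence-time spread and raises the average conversion at the same mean residence time.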


The losses in smelting and melting processes include fly ash, molten slag and discarded slag removed from the furnace, burning loss, etc. To estimate these losses, specific empirical correlations have to be developed for each furnace and process.

With $x_B$ the conversion coefficient and $R$ the recovery rate, the control function of product quality of a furnace is:

$$\phi = x_B \cdot R \tag{10.13}$$

10.2.3

Control function of service lifetime

For most FKNME, the service lifetime under continuous operation is usually determined by the erosion rate of the refractory lining. In general, refractory lining erosion has the following causes:

a) Molten slag permeating through gaps between, or pores in, the refractory bricks (static erosion).

b) Loss of strength or melting of the refractory at high temperature (high-temperature erosion).

c) Scouring and dissolution of the lining surface by molten slag (chemical and mechanical erosion).

According to the research of F. Oeters et al. (Oeters, 1994), the erosion rates can be estimated as follows:

a) Static erosion rate $v_{jt}$:

$$v_{jt} = \frac{ds_{jt}}{dt} = \left(\frac{\xi\, d_0\, \sigma \cos\gamma}{16\,\eta\, t}\right)^{1/2} \tag{10.14}$$

where $d_0$ is the original diameter of a gap or pore; $\sigma$ is the interfacial surface tension; $\gamma$ is the wetting angle between the molten slag and the refractory lining; $\xi$ is the labyrinth (tortuosity) coefficient ($0 < \xi < 1$); $\eta$ is the viscosity of the molten slag; $t$ is the time during which the molten slag contacts the refractory surface; and $s_{jt}$ is the thickness of the eroded refractory lining.

b) High-temperature erosion rate $v_{gw}$: for an electric arc furnace with arc voltage $U_{arc}$ and arc current $I_{arc}$, the high-temperature erosion coefficient of the furnace lining, $RE_w$, can be expressed as (Oeters, 1994):

$$RE_w = \frac{U_{arc}^2\, I_{arc}}{r^2} \tag{10.15}$$

where $r$ is the radius of the hearth.

Taking static erosion into account, the erosion rate of the lining of an electric arc furnace can be expressed as:

$$v_{gw} = \frac{ds_{gw}}{dt} = \frac{K(1 - f_s)\,RE_w}{L} - \frac{\lambda\,(t_w - t_a)}{\delta L} + v_{jt} \tag{10.16}$$

where $K = e_w/RE_w$ is a ratio in which $e_w$ is the radiative heat flux through the hearth wall surface. The shielding coefficient $f_s$ measures how much the arc is shaded by the solid charge in the furnace ($0 < f_s < 1$). $L$ is the melting heat of the refractory material; $\lambda$ is the thermal conductivity of the refractory lining, W/(m·K); $t_w$ and $t_a$ are respectively the temperatures at the inner and outer surfaces of the refractory wall; and $\delta$ is the thickness of the lining.
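Eqs. 10.15 and 10.16 amount to a heat balance on the hot face of the lining: the radiative flux not shielded by the charge, minus the heat conducted through the wall, is spent melting refractory, and static erosion adds on top. The Python sketch below assumes SI units throughout, with $L$ taken as the melting heat per unit volume of refractory (J/m³) so that the quotient is a recession speed in m/s. The function names and all example numbers, including the value of $K$, are hypothetical.

```python
def wear_coefficient(u_arc, i_arc, r):
    """Eq. 10.15: RE_w = U_arc^2 * I_arc / r^2."""
    return u_arc ** 2 * i_arc / r ** 2


def eaf_lining_erosion_rate(k, f_s, re_w, latent_heat, lam, t_w, t_a, delta, v_jt):
    """Eq. 10.16: v_gw = K (1 - f_s) RE_w / L - lam (t_w - t_a) / (delta L) + v_jt.

    k:           ratio K = e_w / RE_w, so K * RE_w is the wall heat flux, W/m^2
    f_s:         shielding coefficient of the solid charge, 0 < f_s < 1
    re_w:        wear coefficient from Eq. 10.15
    latent_heat: melting heat of the refractory, assumed J/m^3
    lam:         thermal conductivity of the lining, W/(m K)
    t_w, t_a:    inner and outer wall temperatures (same scale)
    delta:       lining thickness, m
    v_jt:        static erosion rate from Eq. 10.14, m/s
    """
    absorbed = k * (1.0 - f_s) * re_w      # unshielded radiative flux on the wall
    conducted = lam * (t_w - t_a) / delta  # heat carried away through the lining
    return (absorbed - conducted) / latent_heat + v_jt


re_w = wear_coefficient(400.0, 50000.0, 2.0)
print(eaf_lining_erosion_rate(1e-5, 0.5, re_w, 1e9, 2.0, 1500.0, 300.0, 0.5, 0.0))
```

If the conducted term exceeds the absorbed flux, the first part becomes negative and the expression is outside its intended range; the sketch does not guard against that case.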

In flame furnaces, the radiative heat flux received by the wall lining facing the flame is given by (Mei, 1987):

[]

m

nb f nb f m f m nb nb m f nb

nb

fffmf

(1 )(1 ) / (1 ) /

(1 ) (1 )

EEFFEFF

q

εεεε ε ε

εϕ εεε ε

+−− + −

=

+− + −

••

(10.17)

where $\varepsilon_{nb}$, $\varepsilon_f$ and $\varepsilon_m$ are the emissivities of the inner walls, the flame and the material respectively; $F_m$ and $F_{nb}$ are the areas of the bath surface and of the inner walls in contact with the flame. The emission of the flame is $E_f = \varepsilon_f \sigma T_f^4$, and the emission of the melting-bath surface is $E_m = \varepsilon_m \sigma T_m^4$.

Similar to Eq. 10.16, the high-temperature erosion rate $v_{gw}$ of the lining in flame furnaces is given by:

$$v_{gw} = \frac{ds_{gw}}{dt} = \frac{q_{nb}}{L} - \frac{\lambda\,(t_w - t_a)}{\delta L} + v_{jt} \tag{10.18}$$

c) Chemical and mechanical erosion: the scouring and erosion of the refractory lining by flowing molten slag can be analyzed in the same way as convective mass transfer.

The rate at which the soluble components of the refractory dissolve into the molten slag is estimated by:

$$j = -\beta_{c-z}\,(C_i - C_\infty) \quad (\mathrm{mol\,cm^{-2}\,s^{-1}}) \tag{10.19}$$

where $\beta_{c-z}$ is the mass transfer coefficient between the refractory lining and the molten slag, cm/s; $C_i$ and $C_\infty$ are respectively the molar concentrations of the soluble components at the dissolving interface and in the bulk molten slag, mol/cm³.

The mass transfer coefficients have been correlated empirically from experiments:

For laminar flow:

315.0

064.0

zzzc

ScRe

l

D

=

−

β

(10.20)

For turbulent flow:

319.0

015.0

zzzc

ScRe

l

D

=

−

β

(10.21)

where $D$ is the diffusivity of the soluble components in the molten slag (for example, the diffusivity of MgO in the slag of electric steelmaking furnaces is around $1.2 \times 10^{-8}$ m²/s); $l$ is the distance over which the molten slag flows around the lining; $Re_z$ is the Reynolds number of the molten slag flow close to the wall; and $Sc_z$ is the Schmidt number of the molten slag.

The chemical and mechanical erosion rate caused by the molten slag can be expressed as:

$$v_{hj} = \frac{ds_{hj}}{dt} = \frac{\beta_{c-z}\,(C_i - C_\infty)\,M_{rj}}{\rho_c} \times 10^{-6} \quad (\mathrm{cm/s}) \tag{10.22}$$

where $M_{rj}$ is the molecular weight of the soluble components of the lining, and $\rho_c$ is the density of the lining, kg/m³.

The overall erosion rate of a furnace can be estimated by adding the two individual erosion rates:

$$v_\Sigma = v_{gw} + v_{hj} \tag{10.23}$$

To maximize the service lifetime of a furnace, $v_\Sigma$ should be kept as low as possible. Depending on the conditions in each specific case, Eqs. 10.14 to 10.20 may serve as a guide for developing a strategy to minimize $v_\Sigma$ by optimizing the operation and/or the structure.

10.2.4

Functions of energy consumption

Every type of furnace or production process has its own fuel (or electricity) consumption function.

For combustion furnaces, the fuel consumption is defined as:

$$B = \frac{G q_{yx} + Q_{yq} + Q_{hs} + Q_{js} + Q_{sr} + Q_{sl} - (Q_{hr} + Q_{kq} + Q_{mq} + Q_{wl})}{Q_{DW}} \quad (\mathrm{kg/h\ or\ m^3/h}) \tag{10.24}$$

where $q_{yx}$ is the heat required to produce unit product; $G$ is the output rate or processing capability of the furnace; $Q_{yq}$, $Q_{hs}$, $Q_{js}$, $Q_{sr}$ and $Q_{sl}$ are respectively the heat carried away by the flue gas, the heat lost through chemically incomplete combustion, the heat lost through mechanically incomplete combustion, the heat lost through dissipation, and the heat carried away by coolant; $Q_{hr}$, $Q_{kq}$, $Q_{mq}$ and $Q_{wl}$ are respectively the chemical reaction heat (positive for exothermic and negative for endothermic reactions) and the physical heat brought in by air, coal gas and materials; $Q_{DW}$ is the average lower heating value of the fuel, kJ/kg or kJ/m³.

For electrically heated furnaces, the electricity consumption function, DRF (dissipation rate function), is defined by:

$$DRF = \frac{G q_{yx} + Q_{yq} + Q_{xs} + Q_{sr} + Q_{sl} - (Q_{hr} + Q_{wl})}{3600} \quad (\mathrm{kW}) \tag{10.25}$$

where $Q_{yq}$ and $Q_{xs}$ are the heat losses in the exhaust and in the electric circuits, kJ/h; the other symbols are as defined for Eq. 10.24. Minimizing $B$ and DRF is often achieved by optimizing the individual terms of Eqs. 10.24 and 10.25.
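Eqs. 10.24 and 10.25 are heat balances, so a small helper that keeps the loss terms and the credit terms separate makes the sign convention explicit. The Python sketch below assumes $G$ in t/h, $q_{yx}$ in kJ/t and all $Q$ terms in kJ/h. The dictionary keys mirror the subscripts in the text; the function names and every number in the example are hypothetical.

```python
# Heat losses for combustion furnaces: flue gas, chemically and mechanically
# incomplete combustion, dissipation, coolant.
FUEL_LOSSES = ("Q_yq", "Q_hs", "Q_js", "Q_sr", "Q_sl")
# Heat credits: reaction heat, physical heat of air, coal gas and materials.
FUEL_CREDITS = ("Q_hr", "Q_kq", "Q_mq", "Q_wl")
# Electric furnaces: exhaust, electric circuit, dissipation, coolant.
ELEC_LOSSES = ("Q_yq", "Q_xs", "Q_sr", "Q_sl")
ELEC_CREDITS = ("Q_hr", "Q_wl")


def fuel_consumption(g, q_yx, heat, q_dw):
    """Eq. 10.24: B = [G q_yx + sum(losses) - sum(credits)] / Q_DW (kg/h or m^3/h)."""
    demand = g * q_yx + sum(heat[k] for k in FUEL_LOSSES) - sum(heat[k] for k in FUEL_CREDITS)
    return demand / q_dw


def electric_power(g, q_yx, heat):
    """Eq. 10.25: DRF = [G q_yx + sum(losses) - sum(credits)] / 3600, kW."""
    demand = g * q_yx + sum(heat[k] for k in ELEC_LOSSES) - sum(heat[k] for k in ELEC_CREDITS)
    return demand / 3600.0


heat = {"Q_yq": 4.0e6, "Q_hs": 0.5e6, "Q_js": 0.5e6, "Q_sr": 2.0e6, "Q_sl": 1.0e6,
        "Q_hr": 1.0e6, "Q_kq": 1.5e6, "Q_mq": 0.2e6, "Q_wl": 0.3e6}
print(fuel_consumption(10.0, 1.2e6, heat, 35000.0))  # hypothetical gas-fired case, m^3/h
```

Each term can then be varied one at a time (for example, lowering $Q_{yq}$ through air preheating, which also raises $Q_{kq}$) to see its effect on $B$.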

10.2.5

Control functions of air pollution emissions

Pollutants from FKNME usually include SO$_x$, NO$_x$, smoke, dust, chlorine, hydrogen chloride, fluorine, fluorides, silicon dioxide, asbestos dust and the compounds or vapors of metals such as Pb, Be, Ni, Sn and Hg. These emissions are strictly regulated by national standards. In China in particular, the following criterion must be met in the development and operation of FKNME:

$$WRF = \frac{[WRW_i]}{[WRW_i]_{GB}} \le 1 \tag{10.26}$$

where $[WRW_i]$ is the emission rate (kg/h) or concentration (mg/m³) of the $i$th pollutant discharged into the air. For particulates in smoke, $[WRW_i]$ can also be the smoke blackness measured with a Ringelmann chart. For every pollutant the furnace may produce, its specific $[WRW_i]$ must be measured. $[WRW_i]_{GB}$ denotes the upper emission limit for pollutant $i$ set by the Chinese National Standard (GB). Interested readers can refer to "The Comprehensive Emission Standard of Air Pollutants (GB 16297-1996)", "The Emission Standard of Air Pollutants for Industrial Furnaces and Kilns (GB 9078-1996)" and "The Emission Standard of Air Pollutants for Boilers (GB 13271-2001)" for more information.
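Eq. 10.26 is checked pollutant by pollutant. A minimal Python sketch follows. The function names are illustrative, and the pollutant names, measured values and limits are hypothetical placeholders, not figures from GB 16297, GB 9078 or GB 13271; the real limit for each pollutant must be looked up in the applicable standard.

```python
def emission_ratios(measured, limits):
    """WRF_i = [WRW_i] / [WRW_i]_GB for every measured pollutant (Eq. 10.26)."""
    missing = set(measured) - set(limits)
    if missing:
        raise KeyError(f"no limit supplied for: {sorted(missing)}")
    return {name: measured[name] / limits[name] for name in measured}


def compliant(measured, limits):
    """True only if every pollutant satisfies WRF_i <= 1."""
    return all(r <= 1.0 for r in emission_ratios(measured, limits).values())


# Placeholder values in mg/m^3, for illustration only.
limits = {"SO2": 100.0, "dust": 50.0}
measured = {"SO2": 80.0, "dust": 55.0}
print(emission_ratios(measured, limits), compliant(measured, limits))
```

Requiring a limit for every measured pollutant reflects the text's point that each pollutant the furnace may produce must be measured and compared individually.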

10.3

The General Methods of the Multi-purpose Synthetic Optimization

In multi-purpose synthetic optimization, the individual subobjectives $f_1(x), f_2(x), \ldots, f_q(x)$ (or $\phi_1(x), \phi_2(x), \ldots, \phi_q(x)$) may conflict with one another. In general their minimum points do not coincide, which means that the optimum of every control function cannot be reached simultaneously. A decision favorable to the overall optimization must then be made by harmonizing the optimum solutions of the individual objectives.

Various optimization methods are introduced as follows.

10.3.1

Optimization methods of artificial intelligence

Artificial intelligence optimization methods include the following (Liu and Bao, 1999):

a) Converting the multi-objective optimization into a single-objective optimization. A comprehensive score is given to each sample according to certain criteria. The samples are then classified by a score limit, or by considering the multiple objectives jointly, and optimized class by class.

b) Finding the common optimization zone. For each single objective, the optimization zone in which the optimum samples are located is found by a spatial transformation. The overlap of the optimization zones of the individual objectives is then the common optimization zone of the multiple objectives, in which all targets are taken into account. The samples appearing in a common optimization zone share the characteristics of the optimum.

c) Combining a multi-output neural network with a genetic algorithm (GA). In this method, the multiple objectives are treated as output parameters and the original industrial parameters as input parameters, and the network is trained iteratively to construct the input-output function. The network function, composed of the values of the multiple optimization objectives, serves as the fitness function of the GA, and the optimum values are found by iteration.
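Methods a) and b) can be illustrated on a handful of process samples. The Python sketch below is a minimal version under stated assumptions: for method a), each objective is min-max normalized across the samples and combined with user-chosen weights into a comprehensive score, and samples reaching a score limit form the first class; for method b), each objective's optimization zone is approximated as a box of admissible parameter ranges, and the common zone is their intersection. The function names, weights, score limit, variable names and ranges are all hypothetical.

```python
def comprehensive_scores(samples, weights, maximize):
    """Method a): weighted sum of min-max normalized objectives (1 = best)."""
    lo = {k: min(s[k] for s in samples) for k in weights}
    hi = {k: max(s[k] for s in samples) for k in weights}
    scores = []
    for s in samples:
        total = 0.0
        for k, w in weights.items():
            span = hi[k] - lo[k]
            x = 0.5 if span == 0 else (s[k] - lo[k]) / span
            total += w * (x if maximize[k] else 1.0 - x)
        scores.append(total)
    return scores


def classify(scores, limit):
    """Class 1 if the score reaches the limit, otherwise class 2."""
    return [1 if sc >= limit else 2 for sc in scores]


def common_zone(zones):
    """Method b): intersect per-objective boxes {variable: (low, high)}; None if empty."""
    common = {}
    for zone in zones:
        for var, (low, high) in zone.items():
            c_low, c_high = common.get(var, (float("-inf"), float("inf")))
            common[var] = (max(c_low, low), min(c_high, high))
    if any(low > high for low, high in common.values()):
        return None
    return common


samples = [{"output": 10.0, "fuel": 500.0},
           {"output": 12.0, "fuel": 600.0},
           {"output": 8.0, "fuel": 450.0}]
scores = comprehensive_scores(samples, {"output": 0.5, "fuel": 0.5},
                              {"output": True, "fuel": False})
print(scores, classify(scores, 0.55))

zones = [{"temp_c": (1150.0, 1250.0), "o2_pct": (25.0, 40.0)},  # zone favoring output
         {"temp_c": (1100.0, 1200.0)}]                           # zone favoring low fuel use
print(common_zone(zones))
```

The box representation is the simplest stand-in for the optimization zones read from mapping graphs; an empty intersection (`None`) signals that the objectives cannot all be met and that the weighting of method a) is needed instead.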

The optimization process can be divided into three steps (Nanjing University, 1978):

a) Optimization of a single target variable:

• Sample classification: the training samples from industrial production are classified according to a single target variable, i.e., each variable corresponds to a certain standard.

• Mapping information: first, noise samples are filtered out using the membership degree. Then, the best two-dimensional mapping graph of the good samples is obtained by applying principal component analysis (PCA), the optimal discriminant plane (ODP) and partial least squares (PLS) separately. Finally, the best mapping graph is selected. The optimum industrial parameters for any single target variable can then be read in detail from the best mapping graph of that target.

b) Comprehensive target optimization (Qin et al., 1980; Gong, 1979):

• Comprehensive classification of samples: samples that meet the indices of all targets simultaneously are regarded as the first class; the others belong to the second class. Classification pattern recognition is then carried out using the membership degree of the samples and a back-propagation neural network (BPN).

• Optimization direction: the target values of the sample patterns at the two class centers are predicted with the BPN. If the two predicted target values differ greatly, the two class centers represent the features of their respective sample classes. Thus, the first class center corresponds to the stable, optimized sample pattern, which is the center of a high-dimensional optimization space. The typical variable parameters