Bayro-Corrochano E., Scheuermann G. Geometric Algebra Computing: in Engineering and Computer Science

Подождите немного. Документ загружается.

468 D. Fontijne and L. Dorst

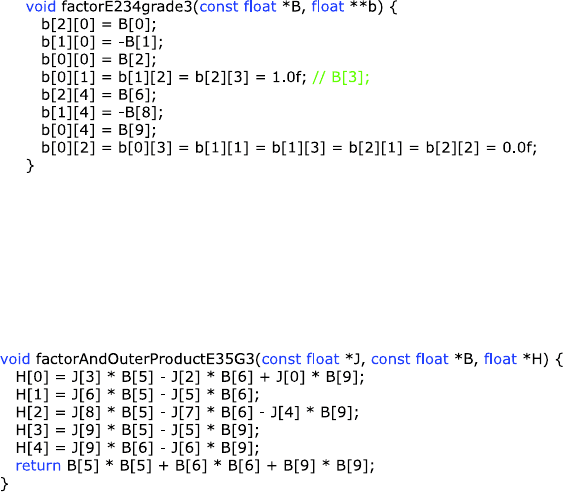

Fig. 2 Example of a generated factorization function which computes the factors b

i

of a nor-

malized blade B. This function implements the core of the FastFactorization algorithm for

n = 5, k = 3, and F = e

2

∧ e

3

∧ e

4

. The indices refer to a particular ordering of the basis

blades in this implementation. For instance, the first line b[2][0] = B[0] corresponds to

(e

4

(e

2

∧e

3

∧e

4

)

−1

)(β

123

e

1

∧e

2

∧e

3

) =β

123

e

1

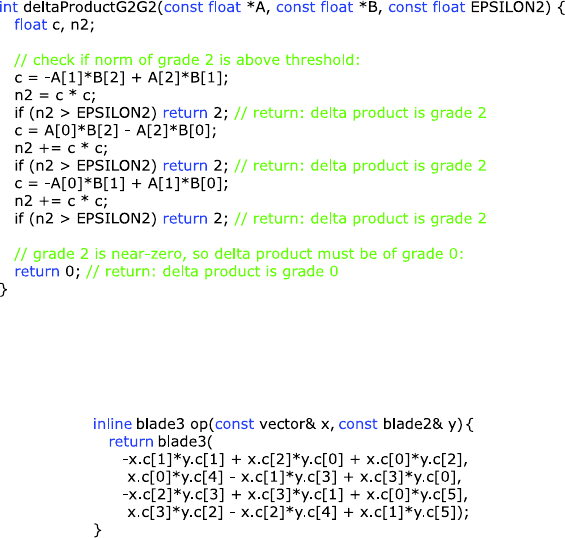

Fig. 3 Example of a generated function which combines the extraction of a single factor of B

with computing the outer product of J with that factor. The function stores the outcome of the

outer product in H and returns the squared norm of the factor. This particular function computes

H =J ∧((e

i

F)B) for (e

i

F =e

3

∧e

5

),grade(J) =3, and n =5

The most significant optimization is the main loop of the algorithm: the imple-

mentation combines the factorization of Step 5b with the outer product of Step 5c.

This allows it to take advantage of the zero coordinates which are in the factors due

to the FastFactorization algorithm. One factor-and-outer-product function is gener-

ated for each valid combination of e

i

F and grade(J) in Steps 5b, c of the algorithm.

These functions are called via a lookup table. Figure 3 shows an example of such a

function.

There are in the order of O(

j

k

n

k

) = O(n2

n

) of these functions, each

with code size proportional to n

n

j

, for a total code size of O(

j

k

n

k

n

n

j

) =

O(n2

2n

).

The functions do not normalize the factors; instead, they return the squared norm

of the factors. The main loop uses this norm to correct the threshold check (Step 5d).

This optimization was described in Sect. 3.1.

After the main loop of the algorithm has been completed, the function may com-

pute the grade of the delta product. This is only done if its outcome is required to

verify that J is of the correct grade, as was described in Sect. 3.4. The delta product

is implemented by an optimized function described next.

Efficient Algorithms for Factorization and Join of Blades 469

4.4 Implementation of the Delta Product

The delta product is the highest-nonzero-grade part of the geometric product. In the

context of the StableFastJoin algorithm, we are only interested in its grade, but we

need to compute (part of) the actual delta product to establish what this grade is.

Fortunately, it is possible to limit the candidate grades, so that we can avoid com-

puting the full geometric product. Our FastDeltaGrade(A, B,δ) algorithm evaluates

the grade of the delta product using the following optimizations:

• First of all, we compute the grade parts from high-to-low and abort early when we

find that the norm of the grade part is above the threshold δ. We implement this

early-abort strategy per coordinate. So only as many coordinates of the geometric

product are computed as are required to determine which grade part has a norm

exceeding δ.

• Secondly, no grade part of the geometric product AB above n needs to be com-

puted, as such grade parts do not exist in the geometric algebra of V

n

. Also, no

grade part above 2n −(grade(A) +grade(B)) needs to be computed; these grade

parts cannot be nonzero for then (4) would have grade(

join(A, B)) exceeding n,

which is obviously impossible.

• The lowest possible grade part that may be nonzero is (grade(A) − grade(B))

(recall that grade(A) ≥ grade(B) after Step 2 of the FastJoin algorithm). But

this grade part actually never has to be computed explicitly: if all grade parts

above it are zero, then B must be fully contained in A, and then grade(AΔB) =

grade(A) −grade(B).

To summarize, the grade parts k of the geometric product which must actually be

evaluated to implement the delta product for use with the FastJoin algorithm are

grade(A) −grade(B)<k≤min

n, 2 n −grade(A) −grade(B)

.

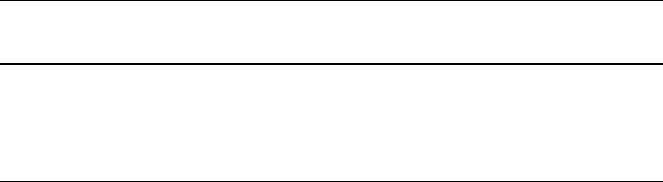

The code for the implementation of the FastDeltaGrade(A, B,δ) algorithm is gen-

erated automatically. One function is generated for each valid combination of

grade(A) and grade(B). Figure 4 sho

ws an example of such a function.

If a stands for the grade of A and b stands for the grade of B, then in the order

of O(

b

b

2

) = O(n

3

) such functions are generated, each with a code size in the

order of O(

b

a≤b

n

b

n

a

)<O(

b

n

b

2

n−1

) =O(2

2n−1

). The total code size is

O(

b

a≤b

ba

n

b

n

a

)<O((

b

b

n

b

)(

a

a

n

a

)) =O(n

2

2

2n−2

).

4.5 Benchmarks

We performed our benchmarks on a 1.83 GHz Core2 Duo notebook, using a single

thread (i.e., one CPU). The programs were compiled with Visual C++ 2005, using

standard optimization settings. 32 bit floating point arithmetic was used.

We ran benchmarks for 3 ≤ n ≤ 6. Above 6-D, our particular implementation

starts to make less sense because the amount of generated code becomes too large

470 D. Fontijne and L. Dorst

Fig. 4 Example of a function that computes AΔB for grade(A) = 2, grade(B) = 2, and n = 3.

Note how the squared norm of the grade 2 part is built up, allowing for early-abort after the evalu-

ation of each coordinate

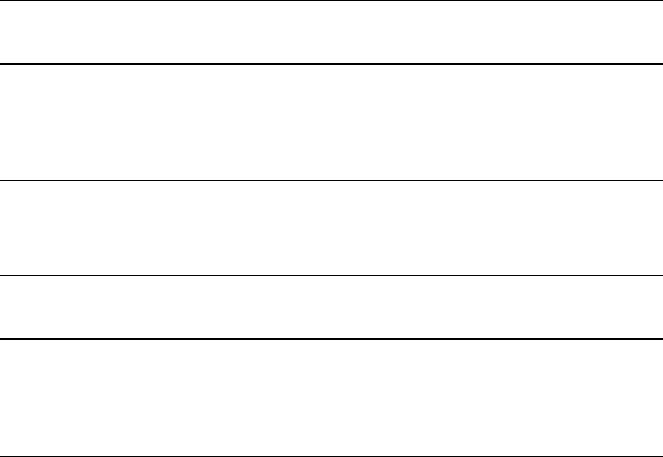

Fig. 5 Example of a generated outer product function from Gaigen 2. Our benchmarks are

relative to this type of functions. This particular example computes the outer product of a vector

and a 2-blade for n = 4

(one may then switch to a conventional hand-written implementation which will be

somewhat less efficient, see the discussion in Sect. 5). Besides giving absolute val-

ues, such as the number of factorizations that can be performed per second, we also

list benchmarks relative to the outer product of a vector and a 2-blade in the same

algebra. This gives a fair impression of how expensive a factorization or

join is rel-

ative to a straightforward bilinear product. The efficiency of such bilinear products

as generated by Gaigen 2 is comparable to optimized hand-coded linear alge-

bra [10]. Figure 5 shows an example of an outer product.

4.5.1 Factorization Benchmarks

To benchmark the FastFactorization(A, B) algorithm, we generated a number of ran-

dom blades of random grades. The grades of the blades were uniformly distributed

over the range [0,n]. A random k-blade was generated by computing the outer prod-

uct of k random vectors. A random vector was generated by setting the n coordinates

of the vector to random values, uniformly distributed in the range [−1, 1]. Table 1

shows the results for our implementation.

Efficient Algorithms for Factorization and Join of Blades 471

Table 1 Benchmarks of our implementation of the FastFactorization algorithm. The first column

(“without orthogonalization”) lists the cost of a factorization relative to the outer product of a

vector and a 2-blade, without using Gram–Schmidt to orthogonalize resulting the factors. The

third column “with orthogonalization” lists those values for the case where the Gram–Schmidt

algorithm is applied after factorization. The columns “factorizations per second” lists absolute

values (in millions), with and without using orthogonalization

n Without Factorizations With Factorizations

orthogonalization per second (w/o) orthogonalization per second (with)

35.1× 15 M 8.5× 9.1 M

45.2× 9.2 M 9.9× 4.8 M

53.4× 5.2 M 6.5× 2.8 M

63.8× 2.8 M 7.1× 1.5 M

For reference, we also benchmarked the implementation of the factorization al-

gorithm as described in [6], which is the direct predecessor the FastFactorization al-

gorithm. This algorithm uses the regular projection operator to find factors, and the

implementation does not use code generation as extensively as the implementation

of our new algorithm. We measured 1.5 M factorizations per second in 3-D, 0.47 M

factorizations per second in 4-D, and 0.25 M factorizations per second in 5-D (a 6-D

implementation is not provided with the software of [6]). Thus we achieved a more

than tenfold improvement in performance with our new algorithm.

4.5.2 Join Benchmarks

To benchmark the FastJoin and StableFastJoin algorithms, we generated pairs of

random blades A and B using the same method as described for the factorization

benchmark. The grades of the blades A and B were uniformly distributed over the

range [0,n]. However, the pairs of random blades were generated such that they

shared a common factor. The grade of the common factor was uniformly distributed

over the range [0, min{grade(A), grade(B)}].

We measured the time it took to compute the

join and the time it took to compute

the

meet as well (i.e., the meet is computed from the join using (1)). We bench-

marked both the FastJoin algorithm (ε = 10

−6

) and the StableFastJoin algorithm

(ε =10

−2

, δ =10

−6

).

Table 2 shows the relative results, while Table 3 shows the absolute results.

In Table 2 also shows figures for computing the Gram–Schmidt orthogonalization

of the factors of each pair of random blades. For this, we retained the factors that

generated the blades, performed a standard Gram–Schmidt orthogonalization, and

discarded the dependent factors (using the same ε threshold as for the

join). This

algorithm was implemented using the same principles (i.e., optimizing and unrolling

the inner loop of the algorithm, but without using code generation) as the FastJoin

algorithm. Hence, the last column allows us to compare the FastJoin algorithms to

472 D. Fontijne and L. Dorst

Table 2 Relative benchmarks of our implementation of the FastJoin algorithm. The values are

relative to the outer product of a vector and a grade 2 blade in the same algebra (i.e., lower values

are better)

n FastJoin FastJoin StableFastJoin StableFastJoin Gram–

+

meet + meet Schmidt

39.8× 12× 9.8× 12× 12×

48.7× 11× 9.1× 11× 12×

55.8× 7.7× 7.0× 8.8× 7.9×

66.4× 9.5× 6.8× 10× 8.0×

Table 3 Absolute benchmarks of our implementation of the FastJoin algorithm (millions of prod-

ucts per second). See Table 2 for relative benchmarks

n FastJoin FastJoin StableFastJoin StableFastJoin

+

meet + meet

3 7.4 M 6.0 M 7.4 M 6.0 M

4 5.4 M 4.1 M 5.2 M 4.0 M

5 3.1 M 2.4 M 2.6 M 2.1 M

6 1.8 M 1.2 M 1.6 M 1.1 M

a classical linear algebra approach for computing a minimal basis set which spans a

subspace union.

As with the factorization algorithm, we also performed a benchmark on the

join

algorithm described in [6], which was the predecessor to our new algorithm. This

algorithm does not use fast factorization and uses less extensive code generation. It

also computes the

join and meet simultaneously, a strategy which actually paid off

for that algorithm (as opposed to our new algorithm, see Sect. 5.3). We measured

0.58 M joins per second in 3-D, 0.46 M joins per second in 4-D, and 0.29 M joins

per second in 5-D. Thus we have achieved approximately a 10-times performance

improvement.

4.5.3 Code Size

Table 4 lists the size of the generated code for our factorization and

join implemen-

tation. The code size grows in approximate agreement with the computed complex-

ities of O(n

2

2

n−1

) and O(n2

2n

), respectively. We state “approximate” because for

low-dimensional spaces, the constant code size of the algorithm (which is included

in the table) can be relatively large compared to the amount of generated code, es-

pecially for the factorization algorithm.

Efficient Algorithms for Factorization and Join of Blades 473

Table 4 Source code size of

our FastFactorization and

StableFastJoin

implementations

n FastFactorization FastJoin

3 3.74kB 14.7kB

4 5.85kB 26.4kB

5 11.4 kB 75.9 kB

6 25.8 kB 321 kB

7 62.1kB 1.47MB

5 Discussion

5.1 Fast Factorization Algorithm

Our benchmarks show that the FastFactorization algorithm is in the order of 5 times

slower than a regular outer product in the same space. Adding a Gram–Schmidt

orthogonalization to orthogonalize the factors approximately doubles the cost of the

function.

The time complexity of the FastFactorization algorithm is O

n

n/2

=O(n

−1/2

2

n

)

(using the Stirling approximation of factorials) due to the step which finds the largest

coordinate of the input blade. The fact that this step uses conditional statements

makes it extra expensive on modern processors. The outer product of a vector and

a 2-blade relative to which we presented the benchmarks in Table 1 has a time

complexity of O(n

3

) and uses no conditional statements (an outer product of ar-

bitrary blades has a time complexity around O(2

n

)). As a result, FastFactorization

is about five times more expensive than such an outer product. The benchmarks

in Fig. 1 suggest that the FastFactorization algorithm becomes less expensive

compared to the outer product as n becomes larger, but if one plots

n

n/2

/n

3

for

1 ≤n ≤20, it becomes clear that n =6 is in fact the turning point beyond which the

FastFactorization should become exceedingly expensive relative to the outer prod-

uct. So our figure of five times slower is only valid for the limited range of n for

which we benchmarked.

Note that it is rather remarkable (but logical) that in general the FastFactorization

algorithm does not use all coordinates of the input blade once it has found which

coordinate is the largest one: the k factors of a k-blade have kncoordinates, which

in many cases is less than the

n

k

coordinates of the blade. For example, in Fig. 2,

the coordinates B[4], B[5], and B[7] are not used (B[3] is known to be 1, and

so it is not used either). This demonstrates the redundant encoding of blades in the

additive representation, and the implications of the Plücker relations [7].

The code size (Table 4) of the generated implementation is acceptable (less than

100 kB) up to 7-D, but extrapolation of the figures suggests that a 10-D implementa-

tion would about 1 MB in size. This is confirmed by the theoretical figure that code

size should be in the order of O(n

2

2

n−1

). Thus, in high-dimensional spaces, we

recommend using a more conventional implementation approach. Our initial imple-

mentation of the FastFactorization algorithm was implemented without using code

generation and was about two times slower than the generated implementation.

474 D. Fontijne and L. Dorst

The FastFactorization algorithm is a useful building block for other algorithms.

In this paper we used it for computing the

join. Another useful application may be a

fast “blade manifold projection” function which projects a nonblade onto the blade

manifold in Grassmann space (the elements satisfying the Plücker relations). This

may be implemented by naively “factoring” the nonblade and using the factors thus

obtained to compute a valid blade as their outer product. We have also used the

FastFactorization algorithm to factor conformal point pairs into individual points.

5.2 FastJoin Algorithms

The benchmarks show that our implementation of the FastJoin algorithms is slightly

faster than an implementation of Gram–Schmidt orthogonalization applied to the

factors of the input blades. This is quite remarkable, as it means that even if the only

geometry you need is computing the

join, you may be better off using the basis-of-

blades representation rather than a factorized representation in terms of basis sets

(at least for such low-dimensional spaces).

To make sure the grade of the

join which is computed by our FastJoin algorithm

is independent of the (arbitrary) basis, use of the delta product is required, invoking

additional computational cost. However, the delta product needs to be invoked only

when the algorithm cannot determine that it has computed a

join of the right grade.

As a result, the cost of the StableFastJoin (which uses the delta product) is only

about 10% higher than that of the straightforward FastJoin algorithm.

The time complexity of the FastJoin algorithms is O(n

2

n

n/2

) = O(n

3/2

2

n

),as

we need to compute in the order of n the outer product of vectors with blades (in

Step 5c), and each of these outer products has a time complexity of order n

n

n/2

.

This means that the cost FastJoin algorithm relative to a vector-2-blade outer product

should increase right from n =3. The fact that the benchmarks in Fig. 2 do not agree

with this is likely due to the decreasing relative cost of the overhead (filtering out

special cases, and such) of the algorithm.

We implemented our

join algorithms using code generation. Starting around 7-D,

this is no longer tractable. The generated code for 6-D is 0.32 MB, while the code for

7-D is 1.47 MB in size; generating and compiling the 7-D code took several minutes.

The size of the code is in the order of n2

2n

. Hence, for n ≥7, we recommend using a

more conventional implementation which does not explicitly spell out the functions

used in the inner loop for all possible arguments.

5.3 Simultaneous Computation of Meet and Join Costs More

It is possible to compute the meet directly, instead of computing it from the join

using (1). In [6, 10], we factorize the dual of the delta product

(AΔB)

∗

=s

1

∧s

2

∧···∧s

k

Efficient Algorithms for Factorization and Join of Blades 475

and project the factors s

i

onto either A or B. If those projections are not zero, they

are factors of

meet(A, B). This method is due to [2].

Using this approach, one may also compute both the

join and the meet simulta-

neously. Since factors of the dual of the

join may be obtained as the rejection of the

s

i

from A or B (i.e., the operation (s

i

∧A)A

−1

,see[6]), such an algorithm is able

to compute the

join and the meet simultaneously and terminate as soon as either is

fully known.

We implemented this idea using the same code generation techniques as used for

the FastJoin algorithms, expecting it to be somewhat faster than the StableFastJoin

algorithm. However, it turned out to be slightly slower (5% to 15%) and required

about 1.5 times as much code to be generated.

One of the reasons for it being slower is that a full evaluation of the (dual of the)

delta product is always required. By contrast, the StableFastJoin algorithm merely

computes the grade of the delta product (not its numerical value), and only when

“in doubt.” One of the reasons for the larger code size is that one needs both a

meet-from-join and a join-from-meet function.

Besides being slower, the implementation of the simultaneous

meet and join al-

gorithm also takes more effort because the algorithm is more complex. For all these

reasons, we did not include a detailed description of it in this paper.

6Conclusion

Using our FastFactorization algorithm, the outer factorization of a k-blade in V

n

is a computationally trivial operation. It amounts to copying and possibly negating

selected coordinates of the input blade into the appropriate elements of the factors.

Implemented as such, factorization is only about five times slower than an outer

product in the same algebra, at least in the low-dimensional spaces (less than 7-D)

for which we benchmarked. The O(n

−1/2

2

n

) time complexity of the algorithms

is determined by the number of coordinates of the input k-blade, which becomes

exceedingly large in high-dimensional spaces.

The

join and meet of blades are relatively expensive products, due to their nonlin-

earity. However, when efficiently implemented through our FastJoin algorithm, their

cost is only in the order of 10 times that of an outer product in the same algebra,

compared to 100 times in previous research. Again, these figures are valid only for

low-dimensional spaces. The O(n

3/2

2

n

) time complexity makes clear that in high-

dimensional spaces, one should use a multiplicative presentation of blades and use

classical linear algebra algorithms like QR (which has O(n

3

) time complexity) to

implement the

join,asin[10]. Our StableFastJoin algorithm, which takes both grade

and numerical stability into account, is just 10% slower than the FastJoin algorithm.

These speeds are obtained at the expense of generating efficient code that spells

out the operations for certain combinations of the basis blades and grades in the

arguments. While efficient, this is only truly possible for rather low-dimensional

spaces, since the amount of code scales as O(n

2

2

n−1

) for FastFactorization and

O(n2

2n

) for the FastJoin algorithm.

476 D. Fontijne and L. Dorst

References

1. Ablamowicz, R., Fauser, B.: CLIFFORD—a Maple package for Clifford algebra computations

with bigebra, http://math.tntech.edu/rafal/cliff12/, March 2009

2. Bell, I.: Personal communication (2004–2005)

3. Bouma, T.: Projection and factorization in geometric algebra. Unpublished paper (2001)

4. Bouma, T., Dorst, L., Pijls, H.: Geometric algebra for subspace operations. Acta Math. Appl.

73, 285–300 (2002)

5. Dorst, L.: The inner products of geometric algebra. In: Dorst, L., Doran, C., Lasenby, J.

(eds.) Applications of Geometric Algebra in Computer Science and Engineering, pp. 35–46.

Birkhäuser, Basel (2002)

6. Dorst, L., Fontijne, D., Mann, S.: Geometric Algebra for Computer Science: An Object Ori-

ented Approach to Geometry. Morgan Kaufmann, San Mateo (2007)

7. Eastwood, M., Michor, P.: Some remarks on the Plücker relations. Rendiconti del Circolo

Matematico di Palermo, pp. 85–88 (2000)

8. Fauser, B.: A treatise on quantum Clifford algebras. Habilitation, Uni. Konstanz, Jan. 2003

9. Fontijne, D.: Gaigen 2: a geometric algebra implementation generator. GPCE 2006 Proceed-

ings

10. Fontijne, D.: Efficient Implementation of geometric algebra. Ph.D. thesis, University of Ams-

terdam (2007). http://www.science.uva.nl/~fontijne/phd.html

11. Hildenbrand, D., Koch, A.: Gaalop—high performance computing based on conformal geo-

metric algebra. In: AGACSE 2008 Proceedings. Springer, Berlin (2008)

12. Perwass, C.: The CLU project, Clifford algebra library and utilities. http://www.perwass.de/

cbup/programs.html

Gaalop—High Performance Parallel Computing

Based on Conformal Geometric Algebra

Dietmar Hildenbrand, Joachim Pitt,

and Andreas Koch

Abstract We present Gaalop (Geometric algebra algorithms optimizer), our tool for

high-performance computing based on conformal geometric algebra. The main goal

of Gaalop is to realize implementations that are most likely faster than conventional

solutions. In order to achieve this goal, our focus is on parallel target platforms like

FPGA (field-programmable gate arrays) or the CUDA technology from NVIDIA.

We describe the concepts, current status, and future perspectives of Gaalop dealing

with optimized software implementations, hardware implementations, and mixed

solutions. An inverse kinematics algorithm of a humanoid robot is described as an

example.

1 Introduction

In recent years, geometric algebra, and especially the 5D Conformal geometric al-

gebra, has proved to be a powerful tool for the development of geometrically in-

tuitive algorithms in a lot of engineering areas like robotics, computer vision, and

computer graphics. However, runtime performance of these algorithms was often

a problem. In this chapter, we present our approach for the automatic generation

of high-performance implementations with a focus on parallel target platforms like

FPGA or CUDA. In Sects. 2 and 3, we present some related work and the basics of

conformal geometric algebra. Our main goal with Gaalop is to realize implemen-

tations that are most likely faster than conventional solutions. The main concepts

combining both approaches for the optimization of software and of hardware im-

plementations are presented in Sect. 4. The corresponding architecture of Gaalop

is described in Sect. 5. An inverse kinematics algorithm for the leg of a humanoid

robot is presented in Sect. 6 as a complex example for the use of Gaalop. The current

status of Gaalop and its future perspectives can be found in Sect. 7.

D. Hildenbrand (

)

Interactive Graphics Systems Group, University of Technology Darmstadt, Darmstadt, Germany

e-mail: dhilden@gris.informatik.tu-darmstadt.de

E. Bayro-Corrochano, G. Scheuermann (eds.), Geometric Algebra Computing,

DOI 10.1007/978-1-84996-108-0_22, © Springer-Verlag London Limited 2010

477