Zhu J., Cook W.D. (Eds.) Modeling Data Irregularities and Structural Complexities in Data Envelopment Analysis



DMU 3, is an inefficient one. Because the inaccurately estimated DMU 3 of the

1% case is excluded, the total difference in this case is smaller than that

of the 1% case. The total and average differences are 0.1723 and 0.0115,

respectively, where the average is twenty times larger than that of the fuzzy

set approach. Regarding the average error in estimating the true efficiency, it

is 1.4048%, a value which is also twenty times larger than that of the fuzzy

set approach.

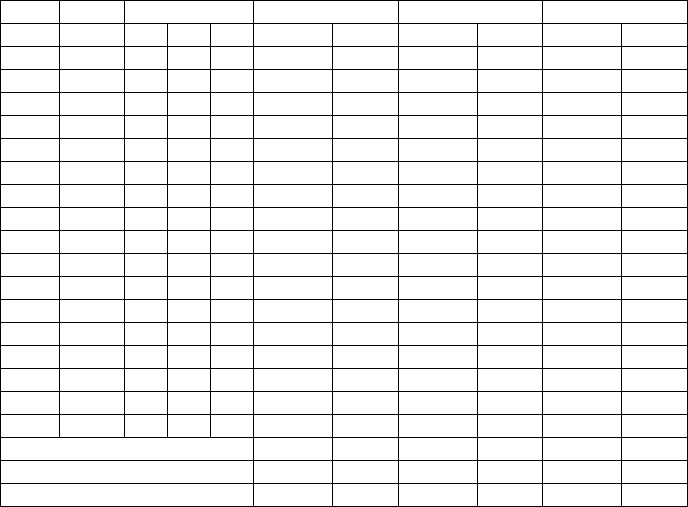

Table 16-3. Results of the DMU-deletion approach

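| DMU | Eff. (0% missing) | Relative rank, 1% | Relative rank, 2% | Relative rank, 5% | Eff. (1% missing) | Rank (1% missing) | Eff. (2% missing) | Rank (2% missing) | Eff. (5% missing) | Rank (5% missing) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.8283 | 13 | 12 | 10 | 0.8442 | 14 | 0.8442 | 13 | 0.8442 | 11 |
| 2 | 1.0000 | - | - | - | - | - | - | - | - | - |
| 3 | 0.9581 | 11 | - | - | 0.9921 | 10 | - | - | - | - |
| 4 | 1.0000 | | | | 1.0000 | | 1.0000 | | 1.0000 | |
| 5 | 1.0000 | | | | 1.0000 | | 1.0000 | | 1.0000 | |
| 6 | 0.9572 | 12 | 11 | 9 | 0.9856 | 11 | 0.9856 | 10 | 1.0000 | 8 |
| 7 | 1.0000 | | | | 1.0000 | | 1.0000 | | 1.0000 | |
| 8 | 0.7941 | 15 | 14 | - | 0.8073 | 15 | 0.8073 | 14 | - | - |
| 9 | 1.0000 | | | - | 1.0000 | | 1.0000 | | - | - |
| 10 | 1.0000 | | | | 1.0000 | | 1.0000 | | 1.0000 | |
| 11 | 1.0000 | | | | 1.0000 | | 1.0000 | | 1.0000 | |
| 12 | 0.8172 | 14 | 13 | 11 | 0.8728 | 13 | 0.8728 | 12 | 0.8728 | 10 |
| 13 | 0.7441 | 16 | 15 | - | 0.7956 | 16 | 0.7956 | 15 | - | - |
| 14 | 0.9644 | 10 | 10 | 8 | 0.9721 | 12 | 0.9721 | 11 | 0.9728 | 9 |
| 15 | 1.0000 | | | - | 1.0000 | | 1.0000 | | - | - |
| 16 | 1.0000 | | | | 1.0000 | | 1.0000 | | 1.0000 | |
| 17 | 1.0000 | | | | 1.0000 | | 1.0000 | | 1.0000 | |
| Total absolute difference | | | | | 0.2063 | 6 | 0.1723 | 4 | 0.1227 | 4 |
| Average difference | | | | | 0.0129 | 0.3750 | 0.0115 | 0.2667 | 0.0112 | 0.3636 |
| Average error | | | | | 1.5388% | | 1.4048% | | 1.2787% | |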

For the last case of the 5% missing rate, there are 11 DMUs left after

deleting 6 of them. As expected, the efficiency of every DMU is greater than

or equal to that in the original case. The total difference from the 11 DMUs

is 0.1227, with an average of 0.0112. This value is approximately four times

larger than the value of 0.0026, calculated from the fuzzy set approach. The

average error in estimating the true efficiency is 1.2787%, which is

approximately 4.6 times larger than that of the fuzzy set approach. The total

and average differences in ranks are 4 and 0.3636, respectively. The average

difference in ranks is also greater than that of the fuzzy set approach: 0.3636

versus 0.1176, a ratio of 3.09.
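The summary figures for the 5% case can be recomputed from the efficiency scores and ranks reported in Table 16-3. The Python sketch below is illustrative only. The variable names are assumptions, and the average error is taken to be the mean relative deviation from the true efficiency, which is the reading that matches the reported 1.2787%.

```python
# Efficiency scores of the 11 DMUs that remain in the 5% case (Table 16-3).
# true_eff: complete data; est_eff: DMU-deletion approach with 5% missing.
true_eff = {1: 0.8283, 4: 1.0, 5: 1.0, 6: 0.9572, 7: 1.0, 10: 1.0,
            11: 1.0, 12: 0.8172, 14: 0.9644, 16: 1.0, 17: 1.0}
est_eff = {1: 0.8442, 4: 1.0, 5: 1.0, 6: 1.0, 7: 1.0, 10: 1.0,
           11: 1.0, 12: 0.8728, 14: 0.9728, 16: 1.0, 17: 1.0}

# Ranks are listed in the table only for DMUs 1, 6, 12 and 14; the other
# DMUs are assumed here to contribute no rank difference.
true_rank = {1: 10, 6: 9, 12: 11, 14: 8}
est_rank = {1: 11, 6: 8, 12: 10, 14: 9}

n = len(true_eff)
abs_diff = {k: abs(est_eff[k] - true_eff[k]) for k in true_eff}

total_diff = sum(abs_diff.values())
avg_diff = total_diff / n
# Mean relative deviation from the true efficiency, in percent.
avg_error_pct = 100 * sum(abs_diff[k] / true_eff[k] for k in true_eff) / n
total_rank_diff = sum(abs(est_rank[k] - true_rank[k]) for k in true_rank)
avg_rank_diff = total_rank_diff / n

print(f"total difference      {total_diff:.4f}")
print(f"average difference    {avg_diff:.4f}")
print(f"average error (%)     {avg_error_pct:.4f}")
print(f"total rank difference {total_rank_diff}")
print(f"average rank diff.    {avg_rank_diff:.4f}")
```

Under these assumptions the script gives the same values as the 5% columns of the table: 0.1227, 0.0112, 1.2787%, 4, and 0.3636.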

The results from three missing rates show that the fuzzy set approach is

superior to the conventional DMU-deletion approach, because it not only

produces better estimates of the efficiencies but also preserves the available

Kao & Liu, Data Envelopment Analysis with Missing Data


information of all DMUs. It is also worthwhile to note that as the missing

rate increases the error in estimating the true efficiency for the DMU-

deletion approach shows a decreasing trend, indicating that this approach

may perform better for higher missing rates.

5. CONCLUSION

DEA is built on data, so missing data hinder the calculation of

efficiency. In practice, the DMUs with values missing are usually deleted to

make the DEA approach applicable. However, this has two consequences:

one is the loss of information contained in those deleted DMUs and the other

is an overestimation of the efficiencies of the remaining DMUs. In this study

we represent the missing values by fuzzy numbers and apply a fuzzy DEA

approach to calculate the efficiencies of all DMUs. Since each fuzzy number

is constructed from the available input/output information of the DMU

concerned, it appropriately represents the value which is missing.

To investigate whether the proposed approach can produce satisfactory

estimates for true efficiencies, we select a typical problem with complete

data and delete different numbers of input/output values to create cases of

incomplete data. The errors in estimating true efficiencies for three missing

rates are less than 0.3% for the fuzzy set approach and approximately 1.5%

for the DMU-deletion approach, and thus the former obviously outperforms

the latter. Most importantly, the efficiencies of those DMUs with some

values missing can also be calculated.

The conclusions of this study are based on one problem with three

missing rates. While the proposed approach is intuitively attractive and the

results are promising, a comprehensive comparison which takes into account

different problem sizes, missing rates, and other problem characteristics is

necessary in order to draw conclusions applicable to all situations.


Chapter 17

PREPARING YOUR DATA FOR DEA

Joe Sarkis

Graduate School of Management, Clark University, 950 Main Street, Worcester, MA, 01610-1477, jsarkis@clarku.edu

Abstract: DEA and its appropriate applications are heavily dependent on the data set that

is used as an input to the productivity model. As we now know there are

numerous models based on DEA. However, there are certain characteristics of

data that may not be acceptable for the execution of DEA models. In this

chapter we shall look at some data requirements and characteristics that may

ease the execution of the models and the interpretation of results. The lessons

and ideas presented here are based on a number of experiences and

considerations for DEA. We shall not get into the appropriate selection and

development of models, such as what is used for input or output data, but

focus more on the type of data and the numerical characteristics of this data.

Key words: Data Envelopment Analysis (DEA), Homogeneity, Negative, Discretionary

1. SELECTION OF INPUTS AND OUTPUTS AND NUMBER OF DMUS

Selection of inputs and outputs and number of DMUs is one of the core

difficulties in developing a productivity model and in preparation of the data.

In this brief review, we will not focus on the managerial reasoning for

selection of input and output factors, but more on the computational and data

aspects of this selection process.

Typically, the choice and number of inputs and outputs, and of the

DMUs, determine how well the model discriminates between efficient and

inefficient units. There are two conflicting considerations when evaluating

the size of the data set. One consideration is to include as many DMUs as


possible because with a larger population there is a greater probability of

capturing high performance units that would determine the efficient frontier

and improve discriminatory power. The other conflicting consideration with

a large data set is that the homogeneity of the data set may decrease,

meaning that some exogenous impacts of no interest to the analyst or beyond

control of the manager may affect the results (Golany and Roll, 1989; Haas

and Murphy, 2003). Also, the computational requirements would tend to

increase with larger data sets. Yet, there are some rules of thumb on the

number of inputs and outputs to select and their relation to the number of

DMUs.

Homogeneity

There are methods to look into homogeneity based on pre-processing

analysis of the statistical distribution of data sets and removing “outliers” or

clustering analysis, and post-processing analysis such as multi-tiered DEA

(Barr, et al., 1994) and returns-to-scale analysis to determine if homogeneity

of data sets is lacking. However, multi-tiered approaches require large

numbers of DMUs.

Another set of three strategies to adjust for non-homogeneity was proposed by Haas and Murphy (2003). The first is the multi-stage approach of Sexton et al. (1994). In the first stage, DEA is performed on the raw data to produce a set of efficiency scores for all DMUs. In the second stage, a stepwise multiple regression is run on that set of efficiency scores using a set of site (exogenous) characteristics that are expected to account for differences in efficiency. In the third stage, DMU outputs are adjusted to account for the differences in site characteristics, and a second DEA is performed to produce a new set of efficiency scores based on the adjusted data. The adjusted output levels used in the second DEA are derived by multiplying the level of each output by the ratio of the DMU’s unadjusted efficiency score to its expected efficiency score. The second technique suggested by Haas and Murphy (2003) is the magnitude of error method (actual minus forecast). In this approach, inputs and outputs are estimated using regression analysis, with the factors causing non-homogeneity treated as the independent variable(s). DEA is then applied to the differences between actual and forecast inputs and outputs, rather than to the original inputs and outputs. The third technique suggested by Haas and Murphy (2003) is the ratio of actual to forecast method (actual/forecast). Instead of differences, it uses the ratio of actual to forecast inputs and the ratio of actual to forecast outputs when executing DEA. However, simulation experiments showed that none of these three strategies performed well. At this time, alternative approaches for addressing non-homogeneity are limited.
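The second and third techniques, together with the output adjustment of the multi-stage approach, reduce to simple arithmetic once a forecast is available. The Python sketch below is a minimal illustration under stated assumptions: a single non-homogeneity factor, an ordinary least-squares line as the forecast, and made-up data. The names `fit_line`, `forecast`, and `sexton_adjust` are hypothetical and do not come from Haas and Murphy (2003) or Sexton et al. (1994).

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def forecast(x, y):
    """Fitted values of y from a simple regression on x."""
    a, b = fit_line(x, y)
    return [a + b * xi for xi in x]


def sexton_adjust(output_level, unadjusted_eff, expected_eff):
    """Third-stage adjustment: output times (unadjusted / expected efficiency)."""
    return output_level * unadjusted_eff / expected_eff


# Hypothetical data: one site characteristic and one output for five DMUs.
site_factor = [1.0, 2.0, 3.0, 4.0, 5.0]
output = [12.0, 15.0, 21.0, 22.0, 30.0]

predicted = forecast(site_factor, output)

# Magnitude of error method: actual minus forecast.
error = [a - f for a, f in zip(output, predicted)]

# Ratio of actual to forecast method: actual / forecast.
ratio = [a / f for a, f in zip(output, predicted)]

for dmu, (e, r) in enumerate(zip(error, ratio), start=1):
    print(f"DMU {dmu}: actual - forecast = {e:+.2f}, actual / forecast = {r:.3f}")
```

In both methods the transformed values, not the raw ones, would then be passed to the DEA model. The same transformation would be repeated for every input and output.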


To further assess the homogeneity or heterogeneity of data sets, clustering analysis may be appropriate. Clustering techniques such as Ward’s method maximize within-group homogeneity and between-group heterogeneity, and form clusters and dendrograms that help identify homogeneous groups. A good example of this preliminary data analysis approach is found in Cinca and Molinero (2004).

Size of Data Set

Clearly, there are advantages to having larger data sets to complete a DEA analysis, but there are minimal requirements as well. Boussofiane et al. (1991) stipulate that to get good discriminatory power out of the CCR and BCC models, the lower bound on the number of DMUs should be the product of the number of inputs and the number of outputs. This reasoning derives from the flexibility in the selection of weights assigned to input and output values in determining the efficiency of each DMU. That is, in attempting to be efficient a DMU can assign all of its weight to a single input or output. The DMU that has the highest value for one particular ratio of an output to an input will assign all its weight to that specific input and output to appear efficient. The number of such possible ratios is the product of the number of inputs and the number of outputs. For example, if there are 3 inputs and 4 outputs, the minimum total number of DMUs should be 12 for some discriminatory power to exist in the model.

Golany and Roll (1989) establish a rule of thumb that the number of units should be at least twice the number of inputs and outputs considered. Bowlin (1998) and Friedman and Sinuany-Stern (1998) mention the need to have three times as many DMUs as there are input and output variables, or that the total number of input and output variables should be less than one third of the number of DMUs in the analysis: (m + s) < n/3, where m is the number of inputs, s the number of outputs, and n the number of DMUs. Dyson et al. (2001) recommend a total of two times the product of the number of input and output variables. For example, with a 3-input, 4-output model, Golany and Roll recommend using 14 DMUs, Bowlin and Friedman and Sinuany-Stern recommend at least 21 DMUs, and Dyson et al. recommend 24. In any circumstance, these numbers should probably be used as minimums for the basic productivity models.
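The four rules of thumb discussed above are easy to compare side by side. The following Python sketch is only a convenience; the function name `min_dmus` is hypothetical, and the Bowlin/Friedman and Sinuany-Stern rule is coded as 3(m + s) to match the "at least 21" figure of the worked example rather than the strict inequality.

```python
def min_dmus(m, s):
    """Minimum number of DMUs suggested by each rule for m inputs, s outputs."""
    return {
        "Boussofiane et al. (1991)": m * s,
        "Golany and Roll (1989)": 2 * (m + s),
        "Bowlin (1998); Friedman and Sinuany-Stern (1998)": 3 * (m + s),
        "Dyson et al. (2001)": 2 * m * s,
    }


# Worked example from the text: 3 inputs and 4 outputs.
rules = min_dmus(3, 4)
for source, n in rules.items():
    print(f"{source}: at least {n} DMUs")
```

For 3 inputs and 4 outputs this returns 12, 14, 21, and 24, the figures quoted in the text.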

These rules of thumb attempt to make sure that the basic productivity models are more discriminatory. If the analyst still finds that discriminatory power is lost because there are too few DMUs, the analyst can either reduce the number of input and output factors or turn to a different productivity model that has more discriminatory power. DEA-based productivity models that can help discriminate among DMUs more effectively regardless of the size of the data set include models introduced or developed by Andersen and Petersen (1993), Rousseau and Semple (1995), and Doyle and Green (1994).

2. REDUCING DATA SETS FOR INPUT/OUTPUT FACTORS THAT ARE CORRELATED

With extra large data sets, some analysts may wish to reduce the size by

eliminating the correlated input or output factors. To show what will happen

in this situation, a simple example of illustrative data is presented in Table

17-1. In this example, we have 20 DMUs, 3 inputs and 2 outputs. The first

input is perfectly correlated with the second input. The second input is

calculated by adding 2 to the first input for each DMU. The outputs are

randomly generated numbers.

Table 17-1. Efficiency Scores from Models with Correlated Inputs

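| DMU | Input 1 | Input 2 | Input 3 | Output 1 | Output 2 | Efficiency Score (3 Inputs, 2 Outputs) | Efficiency Score (2 Inputs, 2 Outputs) |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 12 | 7 | 34 | 7 | 1.000 | 1.000 |
| 2 | 24 | 26 | 5 | 6 | 1 | 0.169 | 0.169 |
| 3 | 23 | 25 | 3 | 24 | 10 | 1.000 | 1.000 |
| 4 | 12 | 14 | 4 | 2 | 5 | 0.581 | 0.576 |
| 5 | 11 | 13 | 5 | 29 | 8 | 0.954 | 0.948 |
| 6 | 12 | 14 | 2 | 7 | 2 | 0.467 | 0.460 |
| 7 | 44 | 46 | 5 | 39 | 4 | 0.975 | 0.975 |
| 8 | 12 | 14 | 7 | 6 | 4 | 0.400 | 0.400 |
| 9 | 33 | 35 | 5 | 2 | 10 | 0.664 | 0.664 |
| 10 | 22 | 24 | 4 | 7 | 6 | 0.541 | 0.541 |
| 11 | 35 | 37 | 7 | 1 | 4 | 0.220 | 0.220 |
| 12 | 21 | 23 | 5 | 0 | 6 | 0.493 | 0.493 |
| 13 | 22 | 24 | 6 | 10 | 6 | 0.437 | 0.437 |
| 14 | 24 | 26 | 8 | 20 | 9 | 0.534 | 0.534 |
| 15 | 12 | 14 | 9 | 5 | 4 | 0.400 | 0.400 |
| 16 | 33 | 35 | 2 | 7 | 6 | 0.900 | 0.900 |
| 17 | 22 | 24 | 9 | 2 | 3 | 0.175 | 0.175 |
| 18 | 12 | 14 | 5 | 32 | 10 | 1.000 | 1.000 |
| 19 | 42 | 44 | 7 | 9 | 7 | 0.352 | 0.352 |
| 20 | 12 | 14 | 4 | 5 | 3 | 0.349 | 0.346 |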

The basic CCR model is executed for the 3-input case (where the first two inputs are perfectly correlated) and for a 2-input case where Input 2 is removed from the analysis. The efficiency scores are in the last two columns of Table 17-1. Notice that in this case the efficiency scores are also almost perfectly correlated.¹ The only differences that do occur are in DMUs 4, 5, 6, and 20 (to three decimal places). Removing a correlated factor can save some time in data acquisition, storage, and calculation, but the big caveat is that including even a perfectly correlated factor may give a slightly different answer. Whether the reduction is worthwhile may depend on the level of correlation that is acceptable and on whether the exact efficiency scores are important.
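The correlations mentioned here can be checked directly from the columns of Table 17-1. The sketch below uses a hand-written Pearson correlation so that it needs only the Python standard library. The helper name `pearson` is an assumption, and a spreadsheet or statistical package would serve equally well.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Columns of Table 17-1, DMUs 1 to 20.
input_1 = [10, 24, 23, 12, 11, 12, 44, 12, 33, 22,
           35, 21, 22, 24, 12, 33, 22, 12, 42, 12]
input_2 = [12, 26, 25, 14, 13, 14, 46, 14, 35, 24,
           37, 23, 24, 26, 14, 35, 24, 14, 44, 14]
eff_3_inputs = [1.000, 0.169, 1.000, 0.581, 0.954, 0.467, 0.975, 0.400,
                0.664, 0.541, 0.220, 0.493, 0.437, 0.534, 0.400, 0.900,
                0.175, 1.000, 0.352, 0.349]
eff_2_inputs = [1.000, 0.169, 1.000, 0.576, 0.948, 0.460, 0.975, 0.400,
                0.664, 0.541, 0.220, 0.493, 0.437, 0.534, 0.400, 0.900,
                0.175, 1.000, 0.352, 0.346]

r_inputs = pearson(input_1, input_2)
r_scores = pearson(eff_3_inputs, eff_2_inputs)

# DMUs whose reported scores differ between the two models.
differing = [dmu for dmu, (a, b) in
             enumerate(zip(eff_3_inputs, eff_2_inputs), start=1) if a != b]

print(f"correlation of Input 1 and Input 2: {r_inputs:.6f}")
print(f"correlation of the two score columns: {r_scores:.6f}")
print("DMUs with different scores:", differing)
```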

Jenkins and Anderson (2003) describe a systematic statistical method,

using variances and covariances, for deciding which of the original

correlated variables can be omitted with least loss of information, and which

should be retained. The method uses conditional variance as a measure of

information contained in each variable and deletes the variable that loses the

least information. They also found that even omitting variables that are

highly correlated and which contain little additional information can have a

major influence on the computed efficiency measures.

Reducing Number of Input and Output Factors – Principal Component Analysis

Given this initial caveat that correlated data may still provide some information and that removing data can cause information loss, researchers have investigated how to complete this data reduction with minimal information loss. Principal component analysis (PCA), a multivariate statistical tool for data reduction, has been applied to empirical survey-type data. It is designed to reduce a large set of variables to a few factors that may represent a smaller number of underlying factors for which the observed data are partial surrogates. The aim is to form new factors that are linear combinations of the original factors (items) and that best explain the deviation of each observed datum from that variable’s mean value.
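As a rough illustration of the idea, the sketch below extracts the first principal component of the three inputs in Table 17-1 from their correlation matrix. It is a toy under stated assumptions: power iteration is swapped in for a full eigendecomposition so that only the Python standard library is needed, and the function names are hypothetical. A statistical package would normally be used instead.

```python
from math import sqrt


def correlation_matrix(columns):
    """Pearson correlation matrix of a list of equally long columns."""
    n = len(columns[0])
    z = []
    for col in columns:
        m = sum(col) / n
        sd = sqrt(sum((v - m) ** 2 for v in col) / n)
        z.append([(v - m) / sd for v in col])  # standardized column
    return [[sum(a * b for a, b in zip(zi, zj)) / n for zj in z] for zi in z]


def first_component(matrix, iterations=500):
    """Power iteration: leading eigenvalue and eigenvector of a symmetric matrix."""
    k = len(matrix)
    v = [1.0 / sqrt(k)] * k
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient of the unit vector v gives the eigenvalue.
    eigenvalue = sum(v[i] * sum(matrix[i][j] * v[j] for j in range(k))
                     for i in range(k))
    return eigenvalue, v


# Inputs of Table 17-1, DMUs 1 to 20.
input_1 = [10, 24, 23, 12, 11, 12, 44, 12, 33, 22,
           35, 21, 22, 24, 12, 33, 22, 12, 42, 12]
input_2 = [12, 26, 25, 14, 13, 14, 46, 14, 35, 24,
           37, 23, 24, 26, 14, 35, 24, 14, 44, 14]
input_3 = [7, 5, 3, 4, 5, 2, 5, 7, 5, 4,
           7, 5, 6, 8, 9, 2, 9, 5, 7, 4]

inputs = [input_1, input_2, input_3]
eigenvalue, loadings = first_component(correlation_matrix(inputs))

# The trace of a correlation matrix equals the number of variables, so this
# ratio is the share of total variance explained by the first factor.
share = eigenvalue / len(inputs)
print(f"first factor explains {share:.1%} of the variance")
print("loadings:", [round(x, 3) for x in loadings])
```

Because Input 1 and Input 2 are perfectly correlated, a single factor carries all of their joint variation, so the first factor accounts for at least two thirds of the total variance of the three inputs.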

A number of researchers have considered using PCA with DEA. Ueda and Hoshiai (1997) and Adler and Golany (2001) considered using a few PCA factors of the original data to obtain a more discerning ranking of DMUs. Zhu (1998) compared DEA efficiency measures with an efficiency measure formed as the ratio of a reduced set of PCA factors of the output variables to a reduced set of PCA factors of the input variables. Adler and Golany (2002) introduce three separate PCA–DEA formulations which utilize the results of PCA to develop objective, assurance region type constraints on the DEA weights. PCA can thus be used at various junctures of a DEA analysis to improve the discriminatory power of DEA without losing additional information.

¹ A simple method to determine correlation is to evaluate the correlation of the data in a statistical package, or even in a spreadsheet with correlation functions.

Yet, a shortcoming of PCA is that it depends on the actual values of the data: any change in the data values will result in different factors being computed. For a specific DEA run this may not matter much, because PCA is robust in the sense that small perturbations in the data cause only small perturbations in the calculated values of all the factors. On the other hand, if the study is replicated later with the same DMUs, or repeated with another set of comparable DMUs, the factors and the ensuing DEA may not be strictly comparable, because the composition of the factors may have changed.

3. IMBALANCE IN DATA MAGNITUDES

One of the best ways of making sure there is not much imbalance in the data sets is to have them at the same or similar magnitude. A way of making sure the data are of the same or similar magnitude across and within data sets is to mean normalize the data. Mean normalization takes two simple steps. The first step is to find the mean of the data set for each input and output. The second step is to divide each input or output value by the mean for that specific factor.

Table 17-2. Raw Data Set for the Mean Normalization Example

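| DMU | Input 1 | Input 2 | Output 1 | Output 2 |
|---|---|---|---|---|
| 1 | 1733896 | 97 | 1147 | 0.82 |
| 2 | 2433965 | 68 | 2325 | 0.45 |
| 3 | 30546 | 50 | 1998 | 0.23 |
| 4 | 1052151 | 42 | 542 | 0.34 |
| 5 | 4233031 | 15 | 1590 | 0.67 |
| 6 | 3652401 | 50 | 1203 | 0.39 |
| 7 | 1288406 | 65 | 1786 | 1.18 |
| 8 | 4489741 | 43 | 1639 | 1.28 |
| 9 | 4800884 | 90 | 2487 | 0.77 |
| 10 | 536165 | 19 | 340 | 0.57 |
| Column Mean | 2425119 | 53.9 | 1505.7 | 0.67 |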

For example, let us look at a small set of 10 random data points (10 DMUs) with 2 inputs and 2 outputs as shown in Table 17-2. The magnitudes range from 10^0 to 10^6; in many cases this situation may be more extreme.²

² For example, if total sales of a major company (usually in billions of dollars) were to be compared to the risk associated with that company (usually a “Beta” score of approximately 1).
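The two steps of mean normalization can be sketched in Python using the raw data of Table 17-2. The variable names are illustrative assumptions.

```python
# Raw data from Table 17-2: one row per DMU,
# columns = Input 1, Input 2, Output 1, Output 2.
raw = [
    [1733896, 97, 1147, 0.82],
    [2433965, 68, 2325, 0.45],
    [30546, 50, 1998, 0.23],
    [1052151, 42, 542, 0.34],
    [4233031, 15, 1590, 0.67],
    [3652401, 50, 1203, 0.39],
    [1288406, 65, 1786, 1.18],
    [4489741, 43, 1639, 1.28],
    [4800884, 90, 2487, 0.77],
    [536165, 19, 340, 0.57],
]

# Step 1: mean of each input and output column.
columns = list(zip(*raw))
col_means = [sum(col) / len(col) for col in columns]

# Step 2: divide every value by the mean of its column.
normalized = [[value / m for value, m in zip(row, col_means)] for row in raw]

print("column means:", [round(m, 2) for m in col_means])
for dmu, row in enumerate(normalized, start=1):
    print(dmu, [round(v, 4) for v in row])
```

After this transformation every column has a mean of 1, so all inputs and outputs are of a similar magnitude regardless of their original units.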
