Mitchell T. Machine learning


4.8.2 Alternative Error Minimization Procedures

While gradient descent is one of the most general search methods for finding a hypothesis to minimize the error function, it is not always the most efficient. It is not uncommon for BACKPROPAGATION to require tens of thousands of iterations through the weight update loop when training complex networks. For this reason, a number of alternative weight optimization algorithms have been proposed and explored. To see some of the other possibilities, it is helpful to think of a weight-update method as involving two decisions: choosing a direction in which to alter the current weight vector and choosing a distance to move. In BACKPROPAGATION, the direction is chosen by taking the negative of the gradient, and the distance is determined by the learning rate constant $\eta$.
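To make the two decisions concrete, here is a minimal sketch of fixed-step gradient descent on a small, hypothetical quadratic error surface. The function names (`error`, `gradient`, `gradient_descent`) and the curvature values are illustrative assumptions, not part of the text. The direction is the negative gradient, and the distance is governed by the constant $\eta$ (`eta` in the code); when one direction is much more steeply curved than another, a stable $\eta$ must be small and many iterations are needed.

```python
def error(w, curv):
    """Hypothetical quadratic error surface E(w) = 0.5 * sum_i curv[i] * w[i]**2."""
    return 0.5 * sum(c * wi * wi for c, wi in zip(curv, w))

def gradient(w, curv):
    """Gradient of the error surface above."""
    return [c * wi for c, wi in zip(curv, w)]

def gradient_descent(w, curv, eta, tol=1e-8, max_iters=100_000):
    """Fixed-step updates: direction = negative gradient, step scaled by constant eta.

    Returns the final weights and the number of updates performed.
    """
    w = list(w)
    for it in range(max_iters):
        if error(w, curv) < tol:
            return w, it
        direction = [-g for g in gradient(w, curv)]            # decision 1: direction
        w = [wi + eta * di for wi, di in zip(w, direction)]    # decision 2: distance
    return w, max_iters

w, iters = gradient_descent([1.0, 1.0], curv=[1.0, 100.0], eta=0.01)
```

With a curvature of 100 along the second axis, this update is only stable for `eta` below 2/100 = 0.02, so the shallow first axis shrinks by just 1% per step and the loop needs several hundred iterations to reach the tolerance.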

One optimization method, known as line search, involves a different approach to choosing the distance for the weight update. In particular, once a line is chosen that specifies the direction of the update, the update distance is chosen by finding the minimum of the error function along this line. Notice this can result in a very large or very small weight update, depending on the position of the point along the line that minimizes error.
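A minimal sketch of a single line-search update, assuming a hypothetical, ill-conditioned quadratic error surface. The text does not say how the one-dimensional minimum is found; this sketch uses golden-section search, one standard choice, which assumes the error is unimodal along the line within the bracket [0, `max_step`] (`max_step` and all function names are illustrative).

```python
import math

def error(w):
    """Hypothetical ill-conditioned error surface E(w) = 0.5 * (w1**2 + 100 * w2**2)."""
    return 0.5 * (w[0] ** 2 + 100.0 * w[1] ** 2)

def gradient(w):
    return [w[0], 100.0 * w[1]]

def golden_section(phi, lo, hi, tol=1e-10):
    """Minimize a unimodal one-dimensional function phi on [lo, hi]."""
    r = (math.sqrt(5.0) - 1.0) / 2.0        # inverse golden ratio, about 0.618
    a, b = lo, hi
    c, d = b - r * (b - a), a + r * (b - a)
    fc, fd = phi(c), phi(d)
    while b - a > tol:
        if fc < fd:                          # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - r * (b - a)
            fc = phi(c)
        else:                                # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + r * (b - a)
            fd = phi(d)
    return (a + b) / 2.0

def line_search_step(w, max_step=1.0):
    """Direction = negative gradient; distance = minimizer of E along that line."""
    d = [-g for g in gradient(w)]
    def phi(alpha):
        return error([wi + alpha * di for wi, di in zip(w, d)])
    alpha = golden_section(phi, 0.0, max_step)
    return [wi + alpha * di for wi, di in zip(w, d)], alpha

w_new, alpha = line_search_step([1.0, 1.0])
```

For this surface and starting point, the exact minimizer along the negative gradient is $\alpha = 10001/1000001 \approx 0.0100$, a step length set by the error surface itself rather than by a fixed $\eta$.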

A second method, which builds on the idea of line search, is called the conjugate gradient method. Here, a sequence of line searches is performed to search for a minimum in the error surface. On the first step in this sequence, the direction chosen is the negative of the gradient. On each subsequent step, a new direction is chosen so that the component of the error gradient that has just been made zero remains zero.
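A minimal sketch of the conjugate gradient method, under the simplifying assumption that the error is an exact quadratic $E(\mathbf{w}) = \tfrac{1}{2}\mathbf{w}^\top A \mathbf{w} - \mathbf{b}^\top \mathbf{w}$ with $A$ symmetric positive definite. That assumption lets each line search be done in closed form; for a network's non-quadratic error surface a numerical line search would take its place. The direction update uses the Fletcher-Reeves coefficient, one of several standard choices; the matrix and vector values are illustrative.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def conjugate_gradient(A, b, w, steps):
    """Minimize E(w) = 0.5 * w.A.w - b.w for symmetric positive definite A."""
    w = list(w)
    g = [gi - bi for gi, bi in zip(matvec(A, w), b)]   # gradient of E: A w - b
    d = [-gi for gi in g]                              # first direction: -gradient
    for _ in range(steps):
        if dot(g, g) < 1e-30:                          # already at the minimum
            break
        Ad = matvec(A, d)
        alpha = -dot(g, d) / dot(d, Ad)                # exact line search along d
        w = [wi + alpha * di for wi, di in zip(w, d)]
        g_new = [gi + alpha * adi for gi, adi in zip(g, Ad)]
        beta = dot(g_new, g_new) / dot(g, g)           # Fletcher-Reeves coefficient
        d = [-gn + beta * di for gn, di in zip(g_new, d)]  # A-conjugate to previous d
        g = g_new
    return w, g

w, g = conjugate_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0], [2.0, 1.0], steps=2)
```

On an $n$-dimensional quadratic, conjugate gradient with exact line searches reaches the minimum in at most $n$ steps (up to rounding), so two steps suffice here.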

While alternative error-minimization methods sometimes lead to improved efficiency in training the network, methods such as conjugate gradient tend to have no significant impact on the generalization error of the final network. The only likely impact on the final error is that different error-minimization procedures may fall into different local minima. Bishop (1996) contains a general discussion of several parameter optimization methods for training networks.

4.8.3 Recurrent Networks

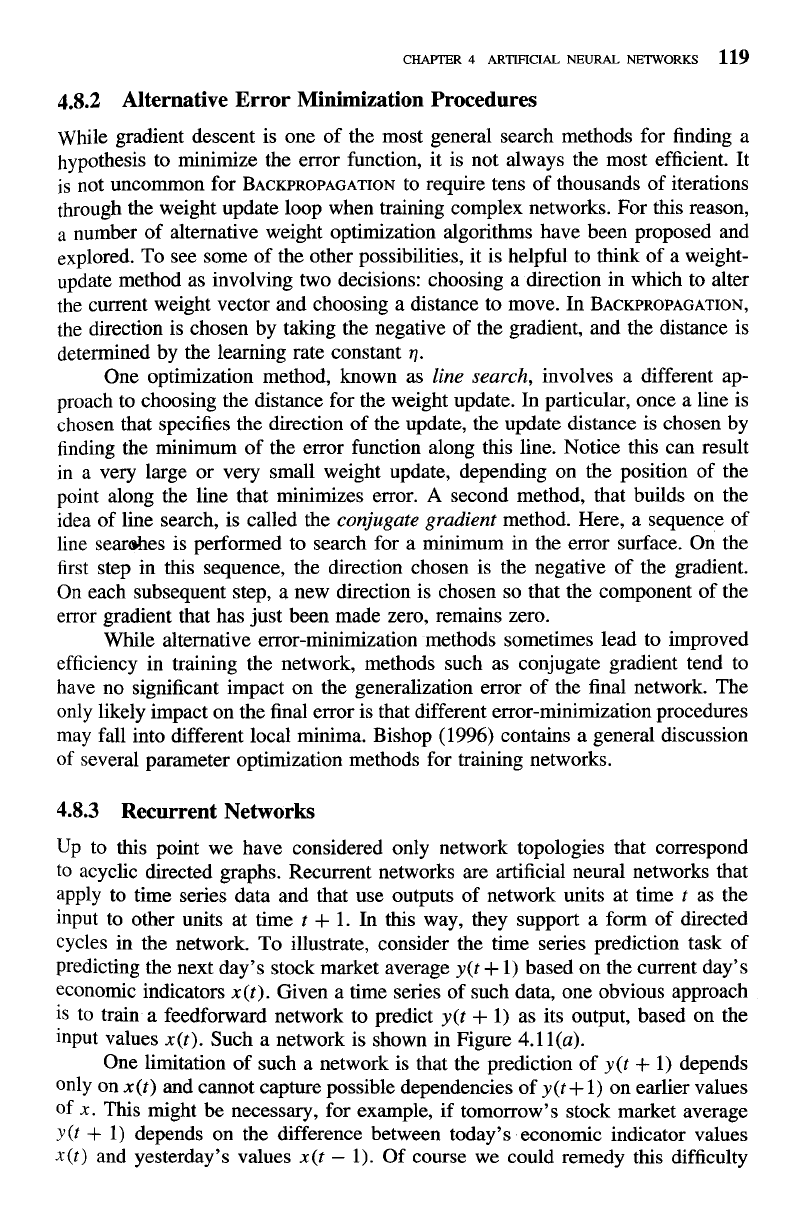

Up to this point we have considered only network topologies that correspond

to acyclic directed graphs. Recurrent networks are artificial neural networks that

apply to time series data and that use outputs of network units at time

t

as the

input to other units at time

t

+

1.

In this way, they support a form of directed

cycles in the network. To illustrate, consider the time series prediction task of

predicting the next day's stock market average

y(t

+

1)

based on the current day's

economic indicators

x(t).

Given a time series of such data, one obvious approach

is to train a feedforward network to predict

y(t

+

1)

as its output, based on the

input values

x(t).

Such a network is shown in Figure

4.11(a).

One limitation of such a network is that the prediction of

y(t

+

1)

depends

only on

x(t)

and cannot capture possible dependencies of

y

(t

+

1)

on earlier values

of

x.

This might be necessary, for example, if tomorrow's stock market average

~(t

+

1)

depends on the difference between today's economic indicator values

x(t)

and yesterday's values

x(t

-

1).

Of course we could remedy this difficulty

I

120

MACHINE

LEARNING

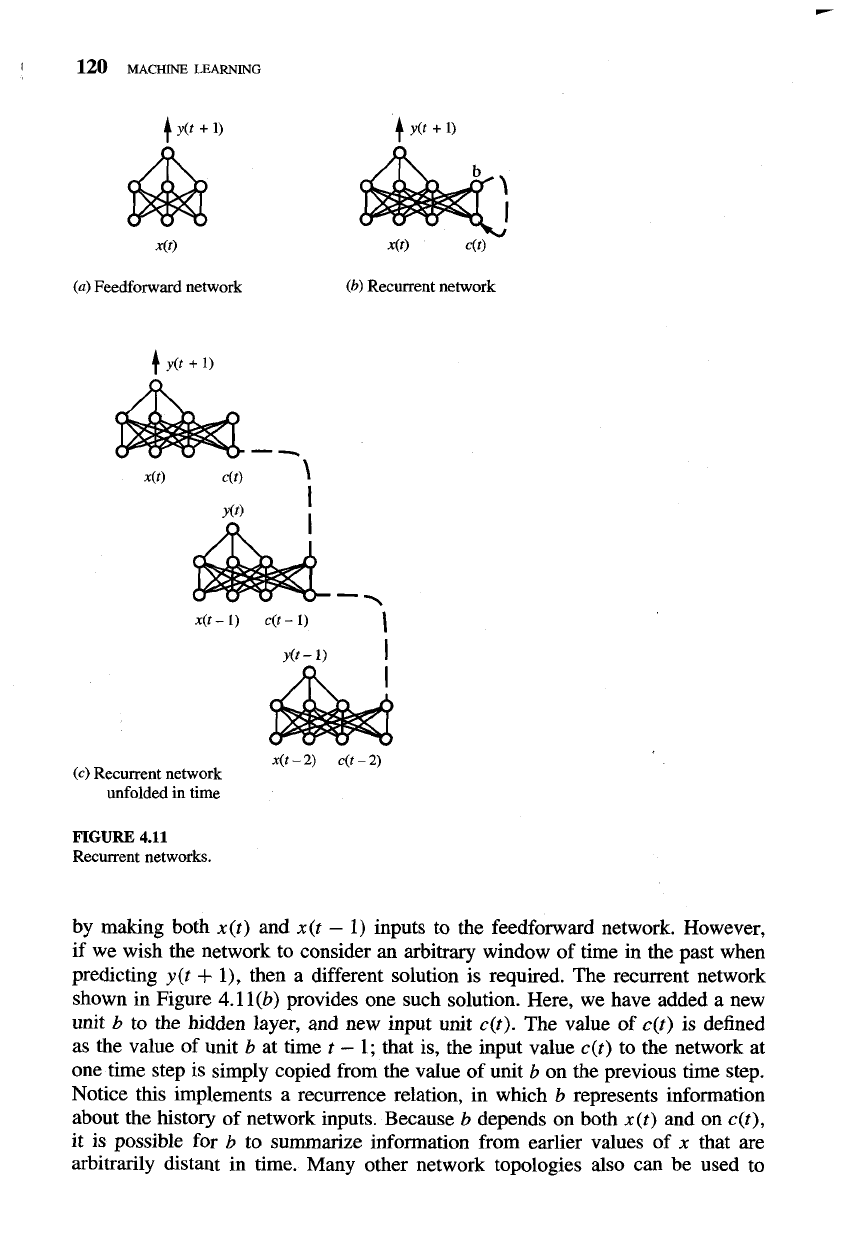

(4

Feedforward network

(b)

Recurrent network

x(t

-

2)

c(t

-

2)

(d

Recurrent network

unfolded in time

FIGURE

4.11

Recurrent networks.

by

making both x(t) and x(t

-

1) inputs to the feedforward network. However,

if

we wish the network to consider

an

arbitrary window of time in the past when

predicting y(t

+

l), then a different solution is required. The recurrent network

shown in Figure 4.1 1(b) provides one such solution. Here, we have added a new

unit

b

to the hidden layer, and new input unit c(t). The value of c(t) is defined

as the value of unit

b

at time t

-

1; that is, the input value c(t) to the network at

one time step is simply copied from the value of unit

b

on the previous time step.

Notice this implements a recurrence relation, in which b represents information about the history of network inputs. Because b depends on both x(t) and on c(t), it is possible for b to summarize information from earlier values of x that are arbitrarily distant in time. Many other network topologies also can be used to represent recurrence relations. For example, we could have inserted several layers of units between the input and unit b, and we could have added several context units in parallel where we added the single units b and c.
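A minimal forward-pass sketch of the network in Figure 4.11(b), assuming a single input x(t), a sigmoid hidden unit b, and a linear output unit. The weight names (`w_x`, `w_c`, `w_b0`, `v`, `v0`) and the choice to start the context input c at 0 are illustrative assumptions. Because c(t) is just a copy of b from the previous step, the prediction can depend on inputs several steps back.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def run_recurrent(xs, w_x, w_c, w_b0, v, v0):
    """Forward pass of a one-input network with hidden unit b and context input c.

    c(t) is a copy of b from the previous time step (0.0 before the first step).
    Returns one predicted output per time step.
    """
    c = 0.0
    outputs = []
    for x in xs:
        b = sigmoid(w_x * x + w_c * c + w_b0)   # b depends on both x(t) and c(t)
        outputs.append(v * b + v0)              # linear output unit predicting y(t + 1)
        c = b                                   # copy b into c for the next step
    return outputs
```

Two input sequences that end with the same x(t) = 1.0 but differ earlier, such as `[0.0, 1.0]` and `[1.0, 1.0]`, produce different final outputs when `w_c` is nonzero, because b carries information about the earlier input; with `w_c = 0` that memory disappears and the final outputs coincide.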

How can such recurrent networks be trained? There are several variants of recurrent networks, and several training methods have been proposed (see, for example, Jordan 1986; Elman 1990; Mozer 1995; Williams and Zipser 1995). Interestingly, recurrent networks such as the one shown in Figure 4.11(b) can be trained using a simple variant of BACKPROPAGATION. To understand how, consider Figure 4.11(c), which shows the data flow of the recurrent network "unfolded in time." Here we have made several copies of the recurrent network, replacing the feedback loop by connections between the various copies. Notice that this large unfolded network contains no cycles. Therefore, the weights in the unfolded network can be trained directly using BACKPROPAGATION. Of course in practice we wish to keep only one copy of the recurrent network and one set of weights. Therefore, after training the unfolded network, the final weight $w_{ji}$ in the recurrent network can be taken to be the mean value of the corresponding $w_{ji}$ weights in the various copies. Mozer (1995) describes this training process in greater detail. In practice, recurrent networks are more difficult to train than networks with no feedback loops and do not generalize as reliably. However, they remain important due to their increased representational power.
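A minimal sketch of training by unfolding in time, assuming a single recurrent unit h(t) = tanh(w·h(t - 1) + u·x(t)) and an error measured only at the last step; these modeling choices and all function names are illustrative. Each time step is treated as its own copy of the weights, backpropagation through the acyclic unfolded network yields one gradient per copy, each copy takes its own step from the shared starting weights, and the mean of the copies becomes the single recurrent weight, as the text describes.

```python
import math

def unfold_forward(xs, w, u, h0=0.0):
    """Run h(t) = tanh(w * h(t-1) + u * x(t)) and keep the state of every copy."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs

def per_copy_gradients(xs, w, u, target, h0=0.0):
    """Backpropagate E = 0.5 * (h(T) - target)**2 through the unfolded network.

    Each time step is a separate copy with its own w and u, so this returns
    one gradient per copy for each weight.
    """
    hs = unfold_forward(xs, w, u, h0)
    delta = hs[-1] - target               # dE/dh(T)
    grads_w, grads_u = [], []
    for t in range(len(xs), 0, -1):       # walk the copies from last to first
        dz = delta * (1.0 - hs[t] ** 2)   # back through tanh: dE/dz(t)
        grads_w.append(dz * hs[t - 1])    # this copy's dE/dw
        grads_u.append(dz * xs[t - 1])    # this copy's dE/du
        delta = dz * w                    # pass the error back to h(t-1)
    return grads_w[::-1], grads_u[::-1]

def train_step(xs, w, u, target, eta=0.1):
    """Step every copy separately from the shared weights, then keep the mean."""
    gw, gu = per_copy_gradients(xs, w, u, target)
    copies_w = [w - eta * g for g in gw]
    copies_u = [u - eta * g for g in gu]
    return sum(copies_w) / len(copies_w), sum(copies_u) / len(copies_u)
```

Because all copies start from the same value, taking their mean is equivalent to one step along the sum of the per-copy gradients scaled by 1/T (T being the number of copies), and that sum is the gradient of the tied recurrent network; a finite-difference check on the tied network is an easy way to confirm this.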

4.8.4 Dynamically Modifying Network Structure

Up to this point we have considered neural network learning as a problem of adjusting weights within a fixed graph structure. A variety of methods have been proposed to dynamically grow or shrink the number of network units and interconnections in an attempt to improve generalization accuracy and training efficiency.

One idea is to begin with a network containing no hidden units, then grow the network as needed by adding hidden units until the training error is reduced to some acceptable level. The CASCADE-CORRELATION algorithm (Fahlman and Lebiere 1990) is one such algorithm. CASCADE-CORRELATION begins by constructing a network with no hidden units. In the case of our face-direction learning task, for example, it would construct a network containing only the four output units completely connected to the 30 × 32 input nodes. After this network is trained for some time, we may well find that there remains a significant residual error due to the fact that the target function cannot be perfectly represented by a network with this single-layer structure. In this case, the algorithm adds a hidden unit, choosing its weight values to maximize the correlation between the hidden unit value and the residual error of the overall network. The new unit is now installed into the network, with its weight values held fixed, and a new connection from this new unit is added to each output unit. The process is now repeated. The original weights are retrained (holding the hidden unit weights fixed), the residual error is checked, and a second hidden unit is added if the residual error is still above threshold. Whenever a new hidden unit is added, its inputs include all of the original network inputs plus the outputs of any existing hidden units. The network is grown in this fashion, accumulating hidden units until the network residual error is reduced to some acceptable level. Fahlman and Lebiere (1990) report cases in which CASCADE-CORRELATION significantly reduces training times, due to the fact that only a single layer of units is trained at each step. One practical difficulty is that because the algorithm can add units indefinitely, it is quite easy for it to overfit the training data, and precautions to avoid overfitting must be taken.
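A minimal sketch of how a candidate hidden unit might be scored and chosen. The score follows the covariance-style measure $S = \sum_o \left| \sum_p (V_p - \bar{V})(E_{p,o} - \bar{E}_o) \right|$ that, to our understanding, Fahlman and Lebiere (1990) maximize, where $V_p$ is the candidate's output on training pattern $p$ and $E_{p,o}$ is the residual error at output $o$. For brevity, this sketch only picks the best unit from a small, hypothetical pool of fixed candidate weight vectors, rather than adjusting a candidate's input weights to maximize $S$.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def candidate_score(values, residuals):
    """S = sum over outputs o of |sum over patterns p of (V_p - mean V)(E_po - mean E_o)|."""
    n = len(values)
    v_mean = sum(values) / n
    score = 0.0
    for o in range(len(residuals[0])):
        e_col = [residuals[p][o] for p in range(n)]
        e_mean = sum(e_col) / n
        score += abs(sum((values[p] - v_mean) * (e_col[p] - e_mean) for p in range(n)))
    return score

def pick_candidate(inputs, residuals, candidates):
    """Return (index, score) of the candidate unit that best tracks the residual error.

    Each candidate is (bias, weights). Its inputs would be the original network
    inputs plus the outputs of any existing hidden units.
    """
    best, best_score = None, -1.0
    for i, (bias, weights) in enumerate(candidates):
        values = [sigmoid(bias + sum(w * x for w, x in zip(weights, xs))) for xs in inputs]
        s = candidate_score(values, residuals)
        if s > best_score:
            best, best_score = i, s
    return best, best_score
```

If the residual error is concentrated on the input pattern (1, 1), an AND-like candidate such as `(-1.5, [1.0, 1.0])`, whose output rises on exactly that pattern, outscores a constant unit, which always scores zero.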

A second idea for dynamically altering network structure is to take the opposite approach. Instead of beginning with the simplest possible network and adding complexity, we begin with a complex network and prune it as we find that certain connections are inessential. One way to decide whether a particular weight is inessential is to see whether its value is close to zero. A second way, which appears to be more successful in practice, is to consider the effect that a small variation in the weight has on the error $E$. The effect on $E$ of varying $w$ (i.e., $\partial E/\partial w$) can be taken as a measure of the salience of the connection. LeCun et al. (1990) describe a process in which a network is trained, the least salient connections removed, and this process iterated until some termination condition is met. They refer to this as the "optimal brain damage" approach, because at each step the algorithm attempts to remove the least useful connections. They report that in a character recognition application this approach reduced the number of weights in a large network by a factor of 4, with a slight improvement in generalization accuracy and a significant improvement in subsequent training efficiency.
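A minimal sketch contrasting the two pruning criteria on a single linear unit that fits its (hypothetical) data exactly. Salience is measured here directly, as the increase in $E$ when a weight is set to zero with the others held fixed. The sketch also computes $\tfrac{1}{2} h_{kk} w_k^2$, the second-derivative estimate of that increase used, to our understanding, by LeCun et al. (1990); for a linear unit whose error is at a minimum the two agree exactly. The data, weights, and function names are illustrative.

```python
def error(weights, data):
    """Sum-of-squared-errors E for a linear unit o = w . x."""
    return 0.5 * sum((t - sum(w * x for w, x in zip(weights, xs))) ** 2 for xs, t in data)

def saliences(weights, data):
    """Increase in E when each weight is removed (set to zero), others held fixed."""
    base = error(weights, data)
    out = []
    for k in range(len(weights)):
        pruned = list(weights)
        pruned[k] = 0.0
        out.append(error(pruned, data) - base)
    return out

def second_order_saliences(weights, data):
    """0.5 * h_kk * w_k**2, where h_kk = d2E/dw_k2 = sum_p x_pk**2 for a linear unit."""
    return [0.5 * sum(xs[k] ** 2 for xs, _ in data) * weights[k] ** 2
            for k in range(len(weights))]

weights = [0.05, 2.0, 0.5]
inputs = [[100.0, 1.0, 0.0], [200.0, 0.0, 1.0], [50.0, 1.0, 1.0], [0.0, 1.0, 0.0]]
data = [(xs, sum(w * x for w, x in zip(weights, xs))) for xs in inputs]  # exact fit
```

Here the weight with the smallest magnitude (0.05) multiplies inputs in the hundreds, so removing it would raise $E$ the most; a magnitude criterion would prune it first, while the salience criterion marks the third weight as least useful.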

In general, techniques for dynamically modifying network structure have met with mixed success. It remains to be seen whether they can reliably improve on the generalization accuracy of BACKPROPAGATION. However, they have been shown in some cases to provide significant improvements in training times.

4.9 SUMMARY AND FURTHER READING

Main points of this chapter include:

• Artificial neural network learning provides a practical method for learning real-valued and vector-valued functions over continuous and discrete-valued attributes, in a way that is robust to noise in the training data. The BACKPROPAGATION algorithm is the most common network learning method and has been successfully applied to a variety of learning tasks, such as handwriting recognition and robot control.

• The hypothesis space considered by the BACKPROPAGATION algorithm is the space of all functions that can be represented by assigning weights to the given, fixed network of interconnected units. Feedforward networks containing three layers of units are able to approximate any function to arbitrary accuracy, given a sufficient (potentially very large) number of units in each layer. Even networks of practical size are capable of representing a rich space of highly nonlinear functions, making feedforward networks a good choice for learning discrete and continuous functions whose general form is unknown in advance.

• BACKPROPAGATION searches the space of possible hypotheses using gradient descent to iteratively reduce the error in the network fit to the training examples. Gradient descent converges to a local minimum in the training error with respect to the network weights. More generally, gradient descent is a potentially useful method for searching many continuously parameterized hypothesis spaces where the training error is a differentiable function of hypothesis parameters.

• One of the most intriguing properties of BACKPROPAGATION is its ability to invent new features that are not explicit in the input to the network. In particular, the internal (hidden) layers of multilayer networks learn to represent intermediate features that are useful for learning the target function and that are only implicit in the network inputs. This capability is illustrated, for example, by the ability of the 8 × 3 × 8 network in Section 4.6.4 to invent the boolean encoding of digits from 1 to 8 and by the image features represented by the hidden layer in the face-recognition application of Section 4.7.

• Overfitting the training data is an important issue in ANN learning. Overfitting results in networks that generalize poorly to new data despite excellent performance over the training data. Cross-validation methods can be used to estimate an appropriate stopping point for gradient descent search and thus to minimize the risk of overfitting.

• Although BACKPROPAGATION is the most common ANN learning algorithm, many others have been proposed, including algorithms for more specialized tasks. For example, recurrent neural network methods train networks containing directed cycles, and algorithms such as CASCADE-CORRELATION alter the network structure as well as the network weights.

Additional information on ANN learning can be found in several other chapters in this book. A Bayesian justification for choosing to minimize the sum of squared errors is given in Chapter 6, along with a justification for minimizing the cross-entropy instead of the sum of squared errors in other cases. Theoretical results characterizing the number of training examples needed to reliably learn boolean functions and the Vapnik-Chervonenkis dimension of certain types of networks can be found in Chapter 7. A discussion of overfitting and how to avoid it can be found in Chapter 5. Methods for using prior knowledge to improve the generalization accuracy of ANN learning are discussed in Chapter 12.

Work on artificial neural networks dates back to the very early days of computer science. McCulloch and Pitts (1943) proposed a model of a neuron that corresponds to the perceptron, and a good deal of work through the 1960s explored variations of this model. During the early 1960s Widrow and Hoff (1960) explored perceptron networks (which they called "adalines") and the delta rule, and Rosenblatt (1962) proved the convergence of the perceptron training rule. However, by the late 1960s it became clear that single-layer perceptron networks had limited representational capabilities, and no effective algorithms were known for training multilayer networks. Minsky and Papert (1969) showed that even simple functions such as XOR could not be represented or learned with single-layer perceptron networks, and work on ANNs receded during the 1970s.

During the mid-1980s work on ANNs experienced a resurgence, caused in large part by the invention of BACKPROPAGATION and related algorithms for training multilayer networks (Rumelhart and McClelland 1986; Parker 1985). These ideas can be traced to related earlier work (e.g., Werbos 1975). Since the 1980s, BACKPROPAGATION has become a widely used learning method, and many other ANN approaches have been actively explored. The advent of inexpensive computers during this same period has allowed experimenting with computationally intensive algorithms that could not be thoroughly explored during the 1960s.

A number of textbooks are devoted to the topic of neural network learning. An early but still useful book on parameter learning methods for pattern recognition is Duda and Hart (1973). The text by Widrow and Stearns (1985) covers perceptrons and related single-layer networks and their applications. Rumelhart and McClelland (1986) produced an edited collection of papers that helped generate the increased interest in these methods beginning in the mid-1980s. Recent books on neural network learning include Bishop (1996); Chauvin and Rumelhart (1995); Freeman and Skapura (1991); Fu (1994); Hecht-Nielsen (1990); and Hertz et al. (1991).

EXERCISES

4.1. What are the values of weights $w_0$, $w_1$, and $w_2$ for the perceptron whose decision surface is illustrated in Figure 4.3? Assume the surface crosses the $x_1$ axis at $-1$, and the $x_2$ axis at 2.

4.2. Design a two-input perceptron that implements the boolean function $A \wedge \neg B$. Design a two-layer network of perceptrons that implements A XOR B.

4.3. Consider two perceptrons defined by the threshold expression $w_0 + w_1 x_1 + w_2 x_2 > 0$. Perceptron A has weight values

and perceptron B has the weight values

True or false? Perceptron A is more-general-than perceptron B. (more-general-than is defined in Chapter 2.)

4.4. Implement the delta training rule for a two-input linear unit. Train it to fit the target concept $-2 + x_1 + 2x_2 > 0$. Plot the error $E$ as a function of the number of training iterations. Plot the decision surface after 5, 10, 50, 100, ... iterations.

(a) Try this using various constant values for $\eta$ and using a decaying learning rate of $\eta_0 / i$ for the $i$th iteration. Which works better?

(b) Try incremental and batch learning. Which converges more quickly? Consider both number of weight updates and total execution time.

4.5. Derive a gradient descent training rule for a single unit with output $o$, where

4.6. Explain informally why the delta training rule in Equation (4.10) is only an approximation to the true gradient descent rule of Equation (4.7).

4.7. Consider a two-layer feedforward ANN with two inputs $a$ and $b$, one hidden unit $c$, and one output unit $d$. This network has five weights ($w_{ca}$, $w_{cb}$, $w_{c0}$, $w_{dc}$, $w_{d0}$), where $w_{x0}$ represents the threshold weight for unit $x$. Initialize these weights to the values (.1, .1, .1, .1, .1), then give their values after each of the first two training iterations of the BACKPROPAGATION algorithm. Assume learning rate $\eta = .3$, momentum $\alpha = 0.9$, incremental weight updates, and the following training examples:

| a | b | d |
|---|---|---|
| 1 | 0 | 1 |
| 0 | 1 | 0 |

4.8. Revise the BACKPROPAGATION algorithm in Table 4.2 so that it operates on units using the squashing function tanh in place of the sigmoid function. That is, assume the output of a single unit is $o = \tanh(\vec{w} \cdot \vec{x})$. Give the weight update rule for output layer weights and hidden layer weights. Hint: $\tanh'(x) = 1 - \tanh^2(x)$.

4.9. Recall the 8 × 3 × 8 network described in Figure 4.7. Consider trying to train an 8 × 1 × 8 network for the same task; that is, a network with just one hidden unit. Notice the eight training examples in Figure 4.7 could be represented by eight distinct values for the single hidden unit (e.g., 0.1, 0.2, ..., 0.8). Could a network with just one hidden unit therefore learn the identity function defined over these training examples? Hint: Consider questions such as "do there exist values for the hidden unit weights that can create the hidden unit encoding suggested above?", "do there exist values for the output unit weights that could correctly decode this encoding of the input?", and "is gradient descent likely to find such weights?"

4.10. Consider the alternative error function described in Section 4.8.1. Derive the gradient descent update rule for this definition of $E$. Show that it can be implemented by multiplying each weight by some constant before performing the standard gradient descent update given in Table 4.2.

4.11. Apply BACKPROPAGATION to the task of face recognition. See World Wide Web URL http://www.cs.cmu.edu/~tom/book.html for details, including face-image data, BACKPROPAGATION code, and specific tasks.

4.12. Consider deriving a gradient descent algorithm to learn target concepts corresponding to rectangles in the x, y plane. Describe each hypothesis by the x and y coordinates of the lower-left and upper-right corners of the rectangle: llx, lly, urx, and ury, respectively. An instance (x, y) is labeled positive by hypothesis (llx, lly, urx, ury) if and only if the point (x, y) lies inside the corresponding rectangle. Define error $E$ as in the chapter. Can you devise a gradient descent algorithm to learn such rectangle hypotheses? Notice that $E$ is not a continuous function of llx, lly, urx, and ury, just as in the case of perceptron learning. (Hint: Consider the two solutions used for perceptrons: (1) changing the classification rule to make output predictions continuous functions of the inputs, and (2) defining an alternative error, such as distance to the rectangle center, as in using the delta rule to train perceptrons.) Does your algorithm converge to the minimum error hypothesis when the positive and negative examples are separable by a rectangle? When they are not? Do you have problems with local minima? How does your algorithm compare to symbolic methods for learning conjunctions of feature constraints?

REFERENCES

Bishop, C. M. (1996). Neural networks for pattern recognition. Oxford, England: Oxford University Press.

Chauvin, Y., & Rumelhart, D. (1995). BACKPROPAGATION: Theory, architectures, and applications (edited collection). Hillsdale, NJ: Lawrence Erlbaum Assoc.

Churchland, P. S., & Sejnowski, T. J. (1992). The computational brain. Cambridge, MA: The MIT Press.

Cybenko, G. (1988). Continuous valued neural networks with two hidden layers are sufficient (Technical Report). Department of Computer Science, Tufts University, Medford, MA.

Cybenko, G. (1989). Approximation by superpositions of a sigmoidal function. Mathematics of Control, Signals, and Systems, 2, 303-314.

Cottrell, G. W. (1990). Extracting features from faces using compression networks: Face, identity, emotion and gender recognition using holons. In D. Touretzky (Ed.), Connection Models: Proceedings of the 1990 Summer School. San Mateo, CA: Morgan Kaufmann.

Dietterich, T. G., Hild, H., & Bakiri, G. (1995). A comparison of ID3 and BACKPROPAGATION for English text-to-speech mapping. Machine Learning, 18(1), 51-80.

Duda, R., & Hart, P. (1973). Pattern classification and scene analysis. New York: John Wiley & Sons.

Elman, J. L. (1990). Finding structure in time. Cognitive Science, 14, 179-211.

Fahlman, S., & Lebiere, C. (1990). The CASCADE-CORRELATION learning architecture (Technical Report CMU-CS-90-100). Computer Science Department, Carnegie Mellon University, Pittsburgh, PA.

Freeman, J. A., & Skapura, D. M. (1991). Neural networks. Reading, MA: Addison Wesley.

Fu, L. (1994). Neural networks in computer intelligence. New York: McGraw Hill.

Gabriel, M., & Moore, J. (1990). Learning and computational neuroscience: Foundations of adaptive networks (edited collection). Cambridge, MA: The MIT Press.

Hecht-Nielsen, R. (1990). Neurocomputing. Reading, MA: Addison Wesley.

Hertz, J., Krogh, A., & Palmer, R. G. (1991). Introduction to the theory of neural computation. Reading, MA: Addison Wesley.

Hornik, K., Stinchcombe, M., & White, H. (1989). Multilayer feedforward networks are universal approximators. Neural Networks, 2, 359-366.

Huang, W. Y., & Lippmann, R. P. (1988). Neural net and traditional classifiers. In Anderson (Ed.), Neural Information Processing Systems (pp. 387-396).

Jordan, M. (1986). Attractor dynamics and parallelism in a connectionist sequential machine. Proceedings of the Eighth Annual Conference of the Cognitive Science Society (pp. 531-546).

Kohonen, T. (1984). Self-organization and associative memory. Berlin: Springer-Verlag.

Lang, K. J., Waibel, A. H., & Hinton, G. E. (1990). A time-delay neural network architecture for isolated word recognition. Neural Networks, 3, 33-43.

LeCun, Y., Boser, B., Denker, J. S., Henderson, D., Howard, R. E., Hubbard, W., & Jackel, L. D. (1989). BACKPROPAGATION applied to handwritten zip code recognition. Neural Computation, 1(4).

LeCun, Y., Denker, J. S., & Solla, S. A. (1990). Optimal brain damage. In D. Touretzky (Ed.), Advances in Neural Information Processing Systems (Vol. 2, pp. 598-605). San Mateo, CA: Morgan Kaufmann.

Manke, S., Finke, M., & Waibel, A. (1995). NPEN++: a writer independent, large vocabulary on-line cursive handwriting recognition system. Proceedings of the International Conference on Document Analysis and Recognition. Montreal, Canada: IEEE Computer Society.

McCulloch, W. S., & Pitts, W. (1943). A logical calculus of the ideas immanent in nervous activity. Bulletin of Mathematical Biophysics, 5, 115-133.

Mitchell, T. M., & Thrun, S. B. (1993). Explanation-based neural network learning for robot control. In Hanson, Cowan, & Giles (Eds.), Advances in neural information processing systems 5 (pp. 287-294). San Francisco: Morgan Kaufmann.

Mozer, M. (1995). A focused BACKPROPAGATION algorithm for temporal pattern recognition. In Y. Chauvin & D. Rumelhart (Eds.), Backpropagation: Theory, architectures, and applications (pp. 137-169). Hillsdale, NJ: Lawrence Erlbaum Associates.

Minsky, M., & Papert, S. (1969). Perceptrons. Cambridge, MA: MIT Press.

Nilsson, N. J. (1965). Learning machines. New York: McGraw Hill.

Parker, D. (1985). Learning logic (MIT Technical Report TR-47). MIT Center for Research in Computational Economics and Management Science.

Pomerleau, D. A. (1993). Knowledge-based training of artificial neural networks for autonomous robot driving. In J. Connell & S. Mahadevan (Eds.), Robot Learning (pp. 19-43). Boston: Kluwer Academic Publishers.

Rosenblatt, F. (1959). The perceptron: a probabilistic model for information storage and organization in the brain. Psychological Review, 65, 386-408.

Rosenblatt, F. (1962). Principles of neurodynamics. New York: Spartan Books.

Rumelhart, D. E., & McClelland, J. L. (1986). Parallel distributed processing: exploration in the microstructure of cognition (Vols. 1 & 2). Cambridge, MA: MIT Press.

Rumelhart, D., Widrow, B., & Lehr, M. (1994). The basic ideas in neural networks. Communications of the ACM, 37(3), 87-92.

Shavlik, J. W., Mooney, R. J., & Towell, G. G. (1991). Symbolic and neural learning algorithms: An experimental comparison. Machine Learning, 6(2), 111-144.

Simard, P. S., Victorri, B., LeCun, Y., & Denker, J. (1992). Tangent prop: A formalism for specifying selected invariances in an adaptive network. In Moody, et al. (Eds.), Advances in Neural Information Processing Systems 4 (pp. 895-903). San Francisco: Morgan Kaufmann.

Waibel, A., Hanazawa, T., Hinton, G., Shikano, K., & Lang, K. (1989). Phoneme recognition using time-delay neural networks. IEEE Transactions on Acoustics, Speech and Signal Processing.

Weiss, S., & Kapouleas, I. (1989). An empirical comparison of pattern recognition, neural nets, and machine learning classification methods. Proceedings of the Eleventh IJCAI (pp. 781-787). San Francisco: Morgan Kaufmann.

Werbos, P. (1975). Beyond regression: New tools for prediction and analysis in the behavioral sciences (Ph.D. dissertation). Harvard University.

Widrow, B., & Hoff, M. E. (1960). Adaptive switching circuits. IRE WESCON Convention Record, 4, 96-104.

Widrow, B., & Stearns, S. D. (1985). Adaptive signal processing. Signal Processing Series. Englewood Cliffs, NJ: Prentice Hall.

Williams, R., & Zipser, D. (1995). Gradient-based learning algorithms for recurrent networks and their computational complexity. In Y. Chauvin & D. Rumelhart (Eds.), Backpropagation: Theory, architectures, and applications (pp. 433-486). Hillsdale, NJ: Lawrence Erlbaum Associates.

Zornetzer, S. F., Davis, J. L., & Lau, C. (1994). An introduction to neural and electronic networks (edited collection) (2nd ed.). New York: Academic Press.

CHAPTER 5 EVALUATING HYPOTHESES

Empirically evaluating the accuracy of hypotheses is fundamental to machine learning. This chapter presents an introduction to statistical methods for estimating hypothesis accuracy, focusing on three questions. First, given the observed accuracy of a hypothesis over a limited sample of data, how well does this estimate its accuracy over additional examples? Second, given that one hypothesis outperforms another over some sample of data, how probable is it that this hypothesis is more accurate in general? Third, when data is limited, what is the best way to use this data to both learn a hypothesis and estimate its accuracy? Because limited samples of data might misrepresent the general distribution of data, estimating true accuracy from such samples can be misleading. Statistical methods, together with assumptions about the underlying distributions of data, allow one to bound the difference between observed accuracy over the sample of available data and the true accuracy over the entire distribution of data.
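A small simulation of why sample-based estimates can mislead, assuming a hypothetical hypothesis whose true error is 0.3 and test samples of 40 examples drawn independently, with each example misclassified with probability equal to the true error. The function names and numbers are illustrative.

```python
import random

def sample_error(true_error, n, rng):
    """Fraction of n independently drawn examples that the hypothesis misclassifies."""
    mistakes = sum(1 for _ in range(n) if rng.random() < true_error)
    return mistakes / n

def simulate(true_error=0.3, n=40, trials=2000, seed=0):
    """Sample error measured on `trials` independent test sets of size n."""
    rng = random.Random(seed)   # fixed seed so the run is reproducible
    return [sample_error(true_error, n, rng) for _ in range(trials)]

errors = simulate()
```

Printing `min(errors)` and `max(errors)` shows how far individual 40-example estimates can stray from 0.3, even though their average stays close to it.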

5.1 MOTIVATION

In many cases it is important to evaluate the performance of learned hypotheses as precisely as possible. One reason is simply to understand whether to use the hypothesis. For instance, when learning from a limited-size database indicating the effectiveness of different medical treatments, it is important to understand as precisely as possible the accuracy of the learned hypotheses. A second reason is that evaluating hypotheses is an integral component of many learning methods. For example, in post-pruning decision trees to avoid overfitting, we must evaluate