Dubois E., Gray P., Nigay L. (Eds.) The Engineering of Mixed Reality Systems


276 J.M.V. Misker and J. van der Ster

and to incorporate participants’ feedback throughout the whole design process. An

extensive background on mixed reality in the arts is presented in Section 14.2.

In Section 14.4 the V2_Game Engine (VGE) is described, the platform we

developed to engineer and author mixed reality environments. Furthermore, we

summarise various techniques that, when implemented properly, can have a very

large impact on the user experience.

We conclude with recommendations that represent the outcome of several case

studies from our own practice. Our recommendations take the current technical state

of affairs, including its shortcomings, as a given; in Sections 14.4.2 and 14.4.3 we

suggest workarounds where needed in order to focus on the design issues and user

experiences.

14.1.1 Definitions and Assumptions

In this section we explain some of the terminology and assumptions that are used

throughout the chapter.

14.1.1.1 Mixed Reality

We define mixed reality as the mix of virtual content, visual or auditory, with the

real world. The virtual content is created in such a way that it could be perceived

as if it were real, in contrast to applications in which information is overlaid using

text or lines.

The visual virtual content can be projected over a video of the real world or on

transparent displays, but in either case the virtual coordinate system is fixed with

respect to the real-world coordinate system.

14.1.1.2 Immersion

In this context we define immersion as the (subjective) believability or engagement

of the mix between real and virtual, combined with (objective) precision of mea-

suring user state and (subjective + objective) visual similarity of real and virtual

objects.

14.1.1.3 Absolute vs. Relative Coordinate Systems

The virtual content in mixed reality environments can be positioned absolutely or

relatively with respect to the real world. Relative positioning means that virtual

objects are aligned to markers, often 2D barcodes, although some projects are using

computer vision to automatically recognise features in the real world to align and

position the virtual objects [8]. A downside of this approach is that the markers or

14 Authoring Immersive Mixed Reality Experiences 277

features have to be visible to a camera at all times and that the overlay of virtual con-

tent is done on the camera image. It is quite difficult to scale this approach to more

viewpoints, because the coordinate systems of the different viewpoints all have to

be aligned.

In an absolutely positioned system, sensors are used to fix the virtual coordinate

system to the real world. This requires a multitude of sensors, as the positioning sen-

sors often cannot be used for measuring orientation. The major benefit is that these

systems can be made very robust, especially when fusing different sensor streams. A

downside is that there needs to be an absolute reference coordinate system, usually

“the world”.

In our system and for our applications we chose to focus on creating an absolutely

aligned coordinate system because it allows for more versatile systems; different

types of mixed reality environments and perspectives can be explored for their artistic

merits.

14.2 Background: Mixed Reality Environments in the Arts

Artistic use of mixed reality environments is an interesting application domain

because the goal of an artwork is to change the perspective of the user/audience/

spectator, by giving them a new experience. From a user-centred perspective it

is obvious that the focus of the mixed reality environment is not on technol-

ogy but on immersing the user or spectator in a mix of real and virtual worlds.

We have created several mixed reality projects, which will be described in more

detail.

14.2.1 Motivation for Using Mixed Reality in the Arts

Many artistic concepts revolve around realistic alternative realities, ranging from

the creation of beautiful utopian worlds to making horrific confronting scenarios

(refs). Whatever the artistic concept, the experience envisioned by the

artist generally benefits from a high level of realism for the user (i.e. spectator). Such realism

can be achieved by allowing the same sensory–motor relations to exist between the

user and the alternative reality, as those which exist between a user and the real

world.

In this light, mixed reality technology, when implemented successfully, provides

the favoured solution, because the sensory–motor relations we use to perceive the

real world can also be used to perceive the alternative reality. First, the

alternative reality partly consists of real-world, real-time images. Second, the

sensory changes resulting from a user’s interaction with the virtual elements in the

alternative reality are carefully modelled on those that would occur in the real world

[18, 19].


14.2.2 Examples of Mixed Reality in the Arts

14.2.2.1 Example: Markerless Magic Books

The Haunted Book [15] is an artwork that relies on recent computer vision and

augmented reality techniques to animate the illustrations of poetry books. Because

there is no need for markers, the work achieves seamless integration of real and virtual

elements to create the desired atmosphere. The visualisation is done on a computer

screen to avoid head-mounted displays. The camera is hidden in a desk lamp to

further ease the spectator’s immersion. The work is the result of a collaboration

between an artist and computer vision researchers.

14.2.2.2 Mixed Reality as a Presentation Medium

A noteworthy development is the increasing use of mixed reality techniques in

museums. This makes it possible for the public to explore artworks in a new way,

for example, by showing information overlaid on the work or by recreating a mixed

reality copy that can be handled and touched in a very explorative manner. The

museum context has often been used in previous studies, but mainly as a test bed for

technological development [6]. Only recently has mixed reality started to be used

outside of the scientific context for the improvements it can make to the museum

visit, e.g. [12].

Another inspiring example of the use of mixed reality in a museum context is the

installation Sgraffito in 3D,¹ produced by artist Joachim Rotteveel in collaboration

with Wim van Eck and the AR Lab of the Royal Academy of Arts, The Hague.

Sgraffito is an ancient decorative technique in which patterns are scratched into the

wet clay. The artist has made this archaeological collection accessible in a new way

using 3D visualisation and reconstruction techniques from the worlds of medicine

and industry. Computed axial tomography (CAT) scans, as used in medicine, create

a highly detailed 3D representation that serves as a basis for the visualisation and

reconstruction using 3D printers. The collection is made accessible on the Internet

and will even be cloned. The exhibition Sgraffito in 3D allowed the public to explore

the process of recording, archiving and reconstruction for the first time, step by step.

Video projections show the actual CAT scans. The virtual renderings enable visitors

to view the objects from all angles. The workings of the 3D printer are demonstrated

and the printed clones will compete with the hundred original Sgraffito objects in

the exhibition.

14.2.2.3 Crossing Borders: Interactive Cinema

It should be noted that using and exploring a new medium is something that comes

very naturally to artists; one could argue that artists are at the forefront of finding

innovative uses for new technology.

¹ http://www.sgraffito-in-3d.com/en/


An interesting recent development is the increasing use of interactive techniques

to change the cinematic experience [2]. For example, in the BIBAP (Body in Bits

and Pieces)² project a dance movie changes with respect to the viewer’s position

in front of a webcam and the sound the viewer makes. We foresee that interactive

cinema and mixed reality will merge sooner rather than later, opening up an entirely

new usage domain.

14.3 Related Work: Authoring Tools

From the moment mixed reality evolved from a technology to a medium, researchers

have stressed the need for properly designed authoring tools [10]. These tools would

eliminate the technical difficulties and allow content designers to experiment with

this new medium.

AMIRE was one of the earliest projects in which authoring for mixed reality

environments was researched [1]. Within this project valuable design cycles and

patterns to design mixed reality environments were proposed. However, the devel-

oped toolbox is mainly focused on marker-based augmented reality and has not been

actively maintained.

DART is an elaborate mixed reality authoring tool that provides a single, unified

toolbox aimed primarily at designers [11]. DART is in essence an extension

of Macromedia Director, a media application that provides a graphical user interface

to stage and score interactive programs. Programs can be created using the scripting

language Lingo provided within Director. DART implements the ARToolKit³ and

a range of tracking sensors using VRPN⁴ (virtual reality peripheral network) and

interfaces them in Director. DART is closely interwoven with the design metaphors

of Director and is designed to allow the users to design and create different mixed

reality experiences. Images and content can be swapped, added and removed at run

time. DART also includes some interesting design features. For instance it allows the

designer to easily record and play back data, e.g. video footage and movement

of markers. The system has been constructed in such a way that the designer

can easily switch between recorded and live data. Designers can work with this

data to design mixed reality without mixed reality technology or having to move a

marker or set up a camera frequently. Although DART provides an elegant and intu-

itive suite for editing mixed reality, we decided not to use it primarily because of

the Shockwave 3D rendering engine. This engine does not provide the functionality

and performance we desire for our applications.

Finally, existing mixed reality tools are primarily focused on creating mixed real-

ity experiences that are based on relative alignment of a camera image with a virtual

² http://www.bibap.nl/

³ http://www.hitl.washington.edu/artoolkit/

⁴ http://www.cs.unc.edu/Research/vrpn/


image [5, 9]. Because we use an absolute coordinate system in our setup, different

design issues arise and need to be addressed.

14.4 Authoring Content for Mixed Reality Environments

We propose to distinguish three types of activities involved in authoring content for

mixed reality environments.

– Engineering the underlying technology, which is included in this list because it

provides crucial insight into the exact technical possibilities, e.g. the type and

magnitude of position measurement errors.

– Designing the appearance of the real environment, virtual elements and the way

they are mixed, as well as creating the sound.

– Directing the user experience, i.e. designing the game play elements and the activ-

ities a user/player can undertake when engaged in a mixed reality environment.

These activities can be compared to creating a movie or video game, for which

many tools are available. However, these tools are not readily applicable to author-

ing content for mixed reality environments. For example, tools exist that allow an

artist to mix video images with virtual images, e.g. Cycling ’74 Max/MSP/Jitter⁵

or Apple Final Cut Pro.⁶ However, these are not designed to create complex game

play scenarios or to place a real-time generated 3D virtual object somewhere in a 2D live

streaming video image. There are tools that allow artists to design video games and

create different game play scenarios, e.g. Blender⁷ or Unity,⁸ but these are focused

on creating real-time 3D images and designing game play; they rarely incorporate

streaming video images into this virtual world.

Even though mixed reality environments are becoming more accessible on a tech-

nological level, there are still many technical issues that have an immediate effect on

the user experience. Artists and content designers should have a working understanding

of the technical limitations, but they should not have to master the underlying technology.

The data recording and playback functionality of the DART system [11] is a good

example of a user-friendly way to solve this issue. No such functionality

is included yet in our tool VGE, described in the following section.

14.4.1 Engineering and Authoring Platform: VGE

This section details the platform developed at V2_Lab for engineering and authoring

of mixed reality environments.

⁵ http://www.cycling74.com

⁶ http://www.apple.com/nl/finalcutstudio/finalcutpro

⁷ http://www.blender.org

⁸ http://www.unity3d.com


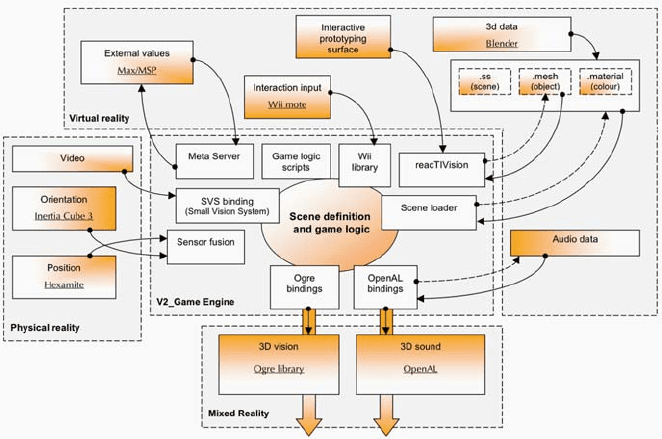

14.4.1.1 Overall Architecture

During the earlier stages of our mixed reality environments development, the focus

was on the technology needed for working prototypes, finding a balance between

custom and off-the-shelf hardware and software tools. Based on our experiences in

the case studies, we developed a more generic platform called V2_Game Engine

(VGE). We engineered the platform to enable artists and designers to create content

for mixed reality environments.

On a technical level the platform allows for flexible combinations of the

large number of components needed to realise mixed reality environments. We

chose to work with as many readily available software libraries and hardware com-

ponents as possible, enabling us to focus on the actual application within the scope

of mixed reality. Figure 14.1 provides an overview of all components that are part

of VGE.

The basis of VGE is the Ogre real-time 3D rendering engine.⁹ We use Ogre to

create the virtual 3D images that we want to place in our mixed reality environment.

Alongside Ogre we use OpenAL, the Bullet physics engine, SDL and several

smaller libraries needed to control our sensors and cameras to create the final mixed

reality result. Most of these available software libraries are written in C or C++,

but in order to rapidly design programs and experiment with different aspects of

these libraries we used Scheme as a scripting language to effectively glue these

components together. Scheme wraps the various libraries and connects them in a

very flexible manner, making it possible to change most aspects of the environment

at run time. Not only parameters but also the actual routines being executed can

be changed at run time. This allows engineers to rapidly test and prototype

all parts of the mixed reality system. Components that run on different computers

communicate using the Open Sound Control (OSC) protocol.

Artists and designers can use the Blender 3D authoring tool to model the geometry of

the real space and add virtual objects to it. The OSC-based metaserver middleware

makes it possible to feed these data into the VGE-rendering engine in real time,

making it directly visible and audible to a user wearing a mixed reality system.

Conversely, the measured position and orientation of the user can be shown in real

time in the modelling environment. Because VGE can be controlled via the OSC

protocol, it is relatively straightforward to control it from other applications, notably

from the Max/MSP visual programming environment that is used extensively by artists

and interaction designers.
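To make the role of OSC more concrete, the following is a minimal sketch of how an external application could build an OSC 1.0 message by hand. The address `/vge/object/position` and the three float arguments are purely illustrative assumptions; the chapter does not document the OSC namespace that VGE actually exposes.

```python
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, NUL-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    padding = (-len(raw)) % 4
    return raw + b"\x00" * padding


def encode_osc_message(address: str, *floats: float) -> bytes:
    """Build an OSC 1.0 message that carries only float32 arguments."""
    type_tags = "," + "f" * len(floats)
    # OSC numeric arguments are big-endian 32-bit values.
    payload = b"".join(struct.pack(">f", value) for value in floats)
    return _osc_string(address) + _osc_string(type_tags) + payload


# Hypothetical address: VGE's real OSC namespace is not given in the text.
packet = encode_osc_message("/vge/object/position", 1.0, 0.5, 2.0)
```

Such a packet would typically be sent as a single UDP datagram, for example with `socket.sendto(packet, (host, port))`, where host and port depend on the installation.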

14.4.1.2 Perspectives

As is clear from the case studies, our main research focus is on the first-person

perspective, i.e. the player. But because all virtual content is absolutely positioned

with respect to the real world, it is relatively easy to set up a third-person perspective.

⁹ http://www.ogre3d.org


Fig. 14.1 Technical architecture of VGE

We experimented with this in the context of the Game/Jan project, an ongoing

collaboration of theatre maker Carla Mulder with V2_Lab. The goal of this project

is to create a theatre performance in which the audience, wearing polarised glasses,

sees a mixed reality on the stage, in which a real actor is interacting with a virtual

scene.

14.4.1.3 Sensors and Algorithms

As discussed earlier we want to create a virtual coordinate system that is fixed

with respect to the real world. For positioning we employ off-the-shelf ultrasound

hardware (Hexamite), for which we implemented time difference of arrival (TDoA)

positioning algorithms [13]. Using TDoA eliminates the need to communicate the

time stamp of transmission, which increases robustness and allows for a higher sampling

rate, up to an average of 6 Hz. The calculated absolute positions are transmitted via

WLAN to the player-worn computer. The position measurements are fused with

accelerometer sensor data using Kalman filters to produce intermediate estimates.
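The reason TDoA does not need the transmission time stamp can be illustrated with a small sketch of the measurement model. It assumes a player-worn ultrasound emitter and fixed receivers at known positions; the receiver layout, the speed of sound and all function names are illustrative assumptions and not taken from the Hexamite hardware or from our implementation.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed value)


def arrival_times(source, receivers, emit_time):
    """Absolute arrival time of one ultrasound pulse at each receiver."""
    return [emit_time + math.dist(source, r) / SPEED_OF_SOUND for r in receivers]


def tdoa(times):
    """Arrival-time differences relative to the first receiver.

    The unknown emission time is common to all receivers and cancels out.
    """
    return [t - times[0] for t in times[1:]]


def tdoa_residuals(candidate, receivers, measured_tdoa):
    """Mismatch between the TDoA predicted for a candidate position and the measurement.

    A position solver would search for the candidate that drives these to zero.
    """
    distances = [math.dist(candidate, r) for r in receivers]
    predicted = [(d - distances[0]) / SPEED_OF_SOUND for d in distances[1:]]
    return [p - m for p, m in zip(predicted, measured_tdoa)]
```

The sketch only covers the measurement model. Solving for the position, for instance with a least-squares method over these residuals, is left out.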

Orientation is measured using an off-the-shelf Intersense InertiaCube3, which

combines magnetometers, gyroscopes and accelerometers, all in three dimensions.

Together, the position and orientation measurements provide six degrees of freedom.
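The fusion of low-rate position fixes with accelerometer data mentioned above can be sketched with a one-dimensional Kalman filter. This is a generic textbook formulation under assumed noise values, not the filter design used in VGE: the accelerometer drives the prediction step at a high rate, and each ultrasound fix corrects the estimate when it arrives.

```python
class PositionFilter1D:
    """Kalman filter with state (position, velocity) along one axis."""

    def __init__(self, accel_noise=0.5, position_noise=0.05):
        self.p = 0.0                      # position estimate (m)
        self.v = 0.0                      # velocity estimate (m/s)
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # estimate covariance
        self.q = accel_noise ** 2         # accelerometer variance (assumed)
        self.r = position_noise ** 2      # position-fix variance (assumed)

    def predict(self, accel, dt):
        """Advance the state using one accelerometer sample as control input."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        P = self.P
        # P = F P F^T with F = [[1, dt], [0, 1]]
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        # Process noise Q = q * G G^T with G = [dt^2 / 2, dt]
        g0, g1 = 0.5 * dt * dt, dt
        self.P = [[p00 + self.q * g0 * g0, p01 + self.q * g0 * g1],
                  [p10 + self.q * g1 * g0, p11 + self.q * g1 * g1]]

    def update(self, measured_position):
        """Correct the state with one absolute position fix (H = [1, 0])."""
        P = self.P
        s = P[0][0] + self.r              # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s  # Kalman gain
        innovation = measured_position - self.p
        self.p += k0 * innovation
        self.v += k1 * innovation
        # P = (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
```

Between two position fixes, calling `predict` for every accelerometer sample yields the intermediate estimates that the renderer can use.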

14.4.2 Designing the Real World

Designing the appearance of the real world can be divided into two subtasks. First,

environmental models are needed, both geometrical and visual. Second, the


actual appearance of the real world should be altered; in our case, we used lighting

to achieve this.

14.4.2.1 Geometry and Visual Appearance

In mixed reality applications, knowledge of the geometry and visual appearance

of the real world is of vital importance for the ability to blend in virtual content.

A lot of research is targeted at building up an accurate model of the environment

in real time, e.g. [8]. This research is progressing rapidly but the results are not

yet readily available. For our type of applications we require high-quality models

of the environment, so we chose to work in controlled environments that we can

model in advance. We use two techniques for this: laser scanning and controlled

lighting.

Laser scanning allows us to get a highly detailed model of the environment, in the

form of a point cloud. For budgetary reasons, we developed our own laser scanner

by mounting a SICK LMS200 2D laser scanner on a rotating platform. In one run

this setup can generate about 400,000 points in a 360° × 100° scan, at 0.36° × 0.25°

resolution, or 360,000 points in a 360° × 180° scan, at 0.36° × 0.5° resolution.

By making multiple scans of a space from different locations and aligning them

to each other we can get a complete model of the environment. Based on this point

cloud we can model the geometry of the environment, which we still do manually.

The point cloud can be rendered in the mixed reality, as a way to trick the perception

of the user into believing the virtual content is more stable than it really is.
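The point counts quoted above follow directly from the angular spans and step sizes, and each range reading becomes a point in the cloud through a spherical-to-Cartesian conversion. The sketch below illustrates both. The axis convention (z up, pan about the vertical axis) is an assumption made for the example, and the function names are hypothetical.

```python
import math


def scan_point_count(h_span_deg, v_span_deg, h_step_deg, v_step_deg):
    """Number of samples in a scan covering the given spans at the given steps."""
    return round(h_span_deg / h_step_deg) * round(v_span_deg / v_step_deg)


def to_cartesian(range_m, pan_deg, tilt_deg):
    """Convert one range reading to (x, y, z), with z up (assumed convention)."""
    pan = math.radians(pan_deg)
    tilt = math.radians(tilt_deg)
    x = range_m * math.cos(tilt) * math.cos(pan)
    y = range_m * math.cos(tilt) * math.sin(pan)
    z = range_m * math.sin(tilt)
    return (x, y, z)


points_narrow = scan_point_count(360, 100, 0.36, 0.25)  # 1000 * 400
points_wide = scan_point_count(360, 180, 0.36, 0.5)     # 1000 * 360
```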

14.4.2.2 Lighting

Taking control over the lighting of an environment provides two important features.

First of all it is known in advance where the lights will be, making it possible to

align the shadows of real and virtual objects. Second, changing the lighting at run

time, synchronously in the real and virtual world, makes it still more difficult for

users to discern real from virtual, enhancing the immersive qualities of the system.

To incorporate this into our platform, we developed a software module that exposes

a DMX controller as an OSC service.
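As an illustration of what such a module has to do, the sketch below maps a normalised light level, as it might arrive in an OSC message, to a DMX512 channel value while recording the same level for the virtual light. The class and its method names are hypothetical; the OSC transport and the actual DMX output are omitted.

```python
class LightingBridge:
    """Keeps a DMX512 universe and matching virtual light levels in step."""

    DMX_CHANNELS = 512  # one DMX512 universe has 512 channels of 8 bits each

    def __init__(self):
        self.universe = bytearray(self.DMX_CHANNELS)
        self.virtual_intensity = {}

    def set_light(self, channel, level):
        """Set a channel (1-based, as on DMX fixtures) to a level in [0.0, 1.0]."""
        if not 1 <= channel <= self.DMX_CHANNELS:
            raise ValueError("DMX channel must be between 1 and 512")
        level = min(1.0, max(0.0, level))           # clamp out-of-range input
        self.universe[channel - 1] = round(level * 255)
        self.virtual_intensity[channel] = level     # same level for the virtual light
        return self.universe[channel - 1]
```

Driving both the real fixture and its virtual counterpart from one call is what keeps the lighting change synchronous in the two worlds.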

14.4.3 Mixing Virtual Images

We want to blend a 2D camera image with real-time 3D computer-generated images.

We are using these real-time images because this will allow us to eventually create

an interactive game-like mixed reality experience. In our setup we created a video

backdrop and placed the virtual objects in front of this camera image. In order to

create a convincing mixed result, we need to create the illusion of depth in the

2D camera image. The virtual images need to look like they are somewhere in the

camera image. We explored various known techniques [4] that create this illusion;

they are discussed in detail below:


– Stereo-pairs

– Occlusion

– Motion

– Shadows

– Lighting

These techniques can be divided into binocular depth cues and monocular or empirical

depth cues. Binocular depth cues are hard-wired in our visual system and

only work because humans have two eyes and can view the world stereoscopically.

Monocular depth cues are learned over time. We explored the effect these depth cues

have on the feeling of immersion in our mixed reality environments, both when implemented

flawlessly and when implemented with flaws. We anticipated that we would not be using a flawless

tracking system and we wanted to know how precisely these techniques need to be

implemented in order to create an immersive experience. The tests described below

will explore these different aspects of mixing live camera images with 3D generated

virtual images.

14.4.3.1 Test Setup

In order to experiment with various techniques, we created a test environment. In

this environment we placed virtual objects, models of a well-known glue stick. We

chose this object because everybody knows its appearance and dimensions.

In the test environment, we implemented scenarios in which virtual objects were

floating or standing at fixed positions, orbiting in fixed trajectories (Fig. 14.2) and

falling from the sky, bouncing from the real floor (Fig. 14.3). Note that each step

involves more freedom of movement. Within these scenarios we experimented with

the various techniques listed above.

Fig. 14.2 Virtual glue stick in orbit

Stereo-Pairs

Stereo-pairs is a technique to present the same or two slightly different images to

the left and the right eye [16]. The difference between these two images corresponds

to the actual difference in viewpoint between the left and right eye, thus creating a binocular

rendering of a mixed reality scene. These binocular depth cues are preattentive;


Fig. 14.3 Two frames showing virtual glue sticks in motion

this means viewers immediately see the distance between themselves and a virtual

glue stick. When the same scene is rendered in monovision, there are no preattentive

(binocular) depth cues in the virtual and real images, so one needs to judge

the exact position of the virtual objects in the real scene. The preattentive depth

cues in stereo-pairs greatly enhance the mix between both realities. One immediately

believes the presented mixed reality to be one reality, e.g. the glue stick to

be in the camera image. However, the error margin when rendering a mixed reality

scene using stereo-pairs is much smaller than when rendering the same scene

in mono-pairs. When the alignment of both worlds fails, it becomes impossible to

view the stereo-paired scene as a whole. This is most likely because these depth

cues are preattentive. Humans can accommodate their eyes to focus either on the

virtual objects or on the real camera image but not on both. Mono-pair scenes can

always be viewed as a whole.
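The small error margin of stereo-pairs can be made tangible with a simple pinhole-camera sketch. It assumes two parallel virtual cameras separated by an interpupillary distance of 64 mm and a focal length expressed in pixels; these values and the function names are illustrative assumptions, not parameters of our setup.

```python
def eye_positions(head, right_axis, ipd_m=0.064):
    """Left and right camera positions for a head position and a unit 'right' vector."""
    half = ipd_m / 2.0
    left = tuple(h - half * r for h, r in zip(head, right_axis))
    right = tuple(h + half * r for h, r in zip(head, right_axis))
    return left, right


def disparity_px(depth_m, focal_px, ipd_m=0.064):
    """Horizontal disparity between the two images for a point at the given depth."""
    return focal_px * ipd_m / depth_m


def disparity_error_px(true_depth_m, rendered_depth_m, focal_px, ipd_m=0.064):
    """Disparity mismatch when a virtual object is rendered at the wrong depth."""
    return (disparity_px(rendered_depth_m, focal_px, ipd_m)
            - disparity_px(true_depth_m, focal_px, ipd_m))
```

Because disparity is inversely proportional to depth, a given tracking error produces a larger mismatch for nearby objects than for distant ones, which is consistent with the observation that misalignment is more disturbing in stereo than in mono.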

Occlusion

Occlusion occurs when some parts of the virtual images appear to be behind some

parts of the camera images. This monocular depth cue is very effective at fusing virtual and

real images. You immediately believe a virtual object is part of the camera image

if it appears from behind a part of the video backdrop. It does not matter if a glue

stick is placed correctly behind an object or flies through a wall. You will

simply think that this just happened. The image will look unnatural not because the

image seems to be incorrect but because you know it is unnatural for a glue stick

to fly through a wall. This is true for a monovision setup. In a binocular setup this

needs to be done correctly. You will perceive the depth of an object according to the

binocular depth cues and not the way a scene is layered. If this is done incorrectly it

will be impossible to focus on the whole image. You will be able to see a sharp virtual

object or the camera image but not both.
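One common way to obtain occlusion against a video backdrop is a per-pixel depth comparison between the virtual object and a model of the real geometry. The sketch below shows this for a single row of pixels. It is a generic illustration under the assumption that a depth value for the real scene is available per pixel (for example from a pre-built model of the environment), not a description of how VGE renders occlusion.

```python
def composite_row(camera_row, real_depth_row, virtual_row, virtual_depth_row):
    """Mix one row of camera pixels with virtual pixels using a depth test.

    A virtual pixel is shown only where it exists (not None) and lies closer
    to the viewer than the modelled real surface; elsewhere the camera pixel
    is kept, so real objects appear to occlude the virtual one.
    """
    mixed = []
    for cam, real_d, virt, virt_d in zip(
            camera_row, real_depth_row, virtual_row, virtual_depth_row):
        if virt is not None and virt_d < real_d:
            mixed.append(virt)
        else:
            mixed.append(cam)
    return mixed
```

In a real-time renderer the same comparison is usually delegated to the depth buffer, by drawing the real-world geometry into the depth buffer only and then drawing the virtual objects on top of the video image.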

Camera Movement

Movement of the virtual and real camera will enhance the feeling of depth in your

scene in mono- or stereo-pairs. The different speed of movement between objects