Atkinson K. An Introduction to Numerical Analysis


198 APPROXIMATION OF FUNCTIONS

Theorem 4.1 (Weierstrass) Let $f(x)$ be continuous for $a \le x \le b$ and let $\epsilon > 0$. Then there is a polynomial $p(x)$ for which

$$|f(x) - p(x)| \le \epsilon, \qquad a \le x \le b$$

Proof There are many proofs of this result and of generalizations of it. Since this is not central to our numerical analysis development, we just indicate a constructive proof. For other proofs, see Davis (1963, chap. 6).

Assume $[a, b] = [0, 1]$ for simplicity: by an appropriate change of variables, we can always reduce to this case if necessary. Define

$$p_n(x) = \sum_{k=0}^{n} \binom{n}{k} f\!\left(\frac{k}{n}\right) x^k (1 - x)^{n-k}, \qquad 0 \le x \le 1$$

Let $f(x)$ be bounded on $[0, 1]$. Then

$$\lim_{n \to \infty} p_n(x) = f(x)$$

at any point $x$ at which $f$ is continuous. If $f(x)$ is continuous at every $x$ in $[0, 1]$, then the convergence of $p_n$ to $f$ is uniform on $[0, 1]$, that is,

$$\max_{0 \le x \le 1} |f(x) - p_n(x)| \to 0 \quad \text{as } n \to \infty \tag{4.1.1}$$

This gives an explicit way of finding a polynomial that satisfies the conclusion of the theorem. The proof of these results can be found in Davis (1963, pp. 108-118), along with additional properties of the approximating polynomials $p_n(x)$, which are called the Bernstein polynomials. They mimic extremely well the qualitative behavior of the function $f(x)$. For example, if $f(x)$ is $r$ times continuously differentiable on $[0, 1]$, then

$$\max_{0 \le x \le 1} |f^{(r)}(x) - p_n^{(r)}(x)| \to 0 \quad \text{as } n \to \infty$$

But such an overall approximating property has its price, and in this case the convergence in (4.1.1) is generally very slow. For example, if $f(x) = x^2$, then

$$\lim_{n \to \infty} n\,[p_n(x) - f(x)] = x(1 - x)$$

and thus

$$p_n(x) - x^2 \approx \frac{1}{n}\, x(1 - x)$$

for large values of $n$. The error does not decrease rapidly, even for approximating such a trivial case as $f(x) = x^2$. ∎
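The slow convergence can be observed numerically. The following is a minimal sketch, not part of the original text, that evaluates the Bernstein polynomial directly from its definition for $f(x) = x^2$; the names `bernstein` and `square` are illustrative choices.

```python
from math import comb


def bernstein(f, n, x):
    """Evaluate the degree-n Bernstein polynomial of f at a point x in [0, 1]."""
    return sum(
        comb(n, k) * f(k / n) * x**k * (1 - x) ** (n - k)
        for k in range(n + 1)
    )


def square(x):
    return x * x


if __name__ == "__main__":
    x = 0.5
    for n in (10, 100, 500):
        error = bernstein(square, n, x) - square(x)
        # n * error should stay close to x * (1 - x) = 0.25,
        # so the error itself shrinks only like 1/n.
        print(n, error, n * error)
```

Multiplying $n$ by ten gains only about one decimal digit of accuracy, which is why the Bernstein polynomials are of theoretical rather than practical interest here.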

THE WEIERSTRASS THEOREM AND TAYLOR'S THEOREM 199

Taylor's theorem Taylor's theorem was presented earlier, in Theorem 1.4 of Section 1.1, Chapter 1. It is the first important means for the approximation of a function, and it is often used as a preliminary approximation for computing some more efficient approximation. To aid in understanding why the Taylor approximation is not particularly efficient, consider the following example.

Example Find the error of approximating $e^x$ using the third-degree Taylor polynomial $p_3(x)$ on the interval $[-1, 1]$, expanding about $x = 0$.

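Then

$$p_3(x) = 1 + x + \frac{x^2}{2} + \frac{x^3}{6}, \qquad e^x - p_3(x) = \frac{1}{24}\, x^4 e^{\xi} \tag{4.1.2}$$

with $\xi$ between $x$ and 0. To examine the error carefully, we bound it from above and below:

$$\frac{1}{24}\, x^4 \le e^x - p_3(x) \le \frac{e}{24}\, x^4, \qquad 0 \le x \le 1$$

$$\frac{e^{-1}}{24}\, x^4 \le e^x - p_3(x) \le \frac{1}{24}\, x^4, \qquad -1 \le x \le 0$$

The error increases for increasing $|x|$, and by direct calculation,

$$\max_{-1 \le x \le 1} |e^x - p_3(x)| \approx .0516 \tag{4.1.3}$$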

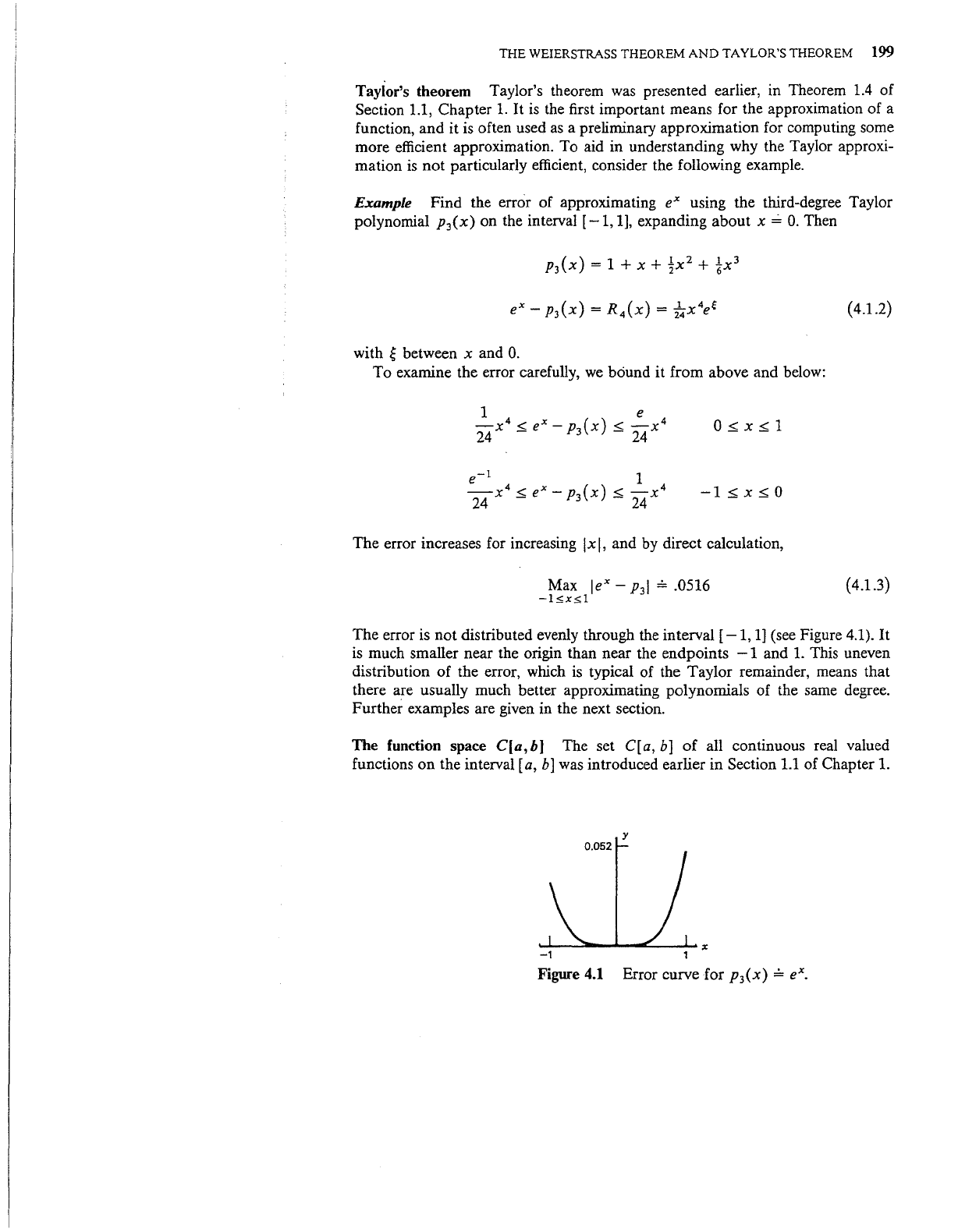

The error is not distributed evenly through the interval $[-1, 1]$ (see Figure 4.1). It is much smaller near the origin than near the endpoints $-1$ and $1$. This uneven distribution of the error, which is typical of the Taylor remainder, means that there are usually much better approximating polynomials of the same degree. Further examples are given in the next section.
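The uneven error distribution can be seen by tabulating the error at a few points. This is a small sketch under the assumption that double precision arithmetic is adequate; the helper names `taylor_exp` and `taylor_error` are illustrative and do not come from the text.

```python
import math


def taylor_exp(x, degree=3):
    """Taylor polynomial of e^x about 0, of the given degree."""
    return sum(x**k / math.factorial(k) for k in range(degree + 1))


def taylor_error(x, degree=3):
    """Signed error e^x - p_degree(x)."""
    return math.exp(x) - taylor_exp(x, degree)


if __name__ == "__main__":
    for x in (-1.0, -0.5, -0.1, 0.0, 0.1, 0.5, 1.0):
        # The error grows like x^4, so it is tiny near 0 and largest at x = 1.
        print(f"x = {x:5.2f}   error = {taylor_error(x):.3e}")
```

The error at $x = 0.1$ is several thousand times smaller than at $x = 1$, so most of the interval is approximated far more accurately than the worst-case bound suggests.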

The function space $C[a, b]$ The set $C[a, b]$ of all continuous real valued functions on the interval $[a, b]$ was introduced earlier in Section 1.1 of Chapter 1.

Figure 4.1 Error curve for $p_3(x) \approx e^x$.

With it we will generally use the norm

$$\|f\|_\infty = \max_{a \le x \le b} |f(x)|, \qquad f \in C[a, b] \tag{4.1.4}$$

It is called variously the maximum norm, Chebyshev norm, infinity norm, and uniform norm. It is the natural measure to use in approximation theory, since we will want to determine and compare

$$\|f - p\|_\infty = \max_{a \le x \le b} |f(x) - p(x)| \tag{4.1.5}$$

for various polynomials $p(x)$. Another norm for $C[a, b]$ is introduced in Section 4.3, one that is also useful in measuring the size of $f(x) - p(x)$.

As noted earlier in Section 1.1, the maximum norm satisfies the following characteristic properties of a norm:

$$\|f\| = 0 \quad \text{if and only if } f = 0 \tag{4.1.6}$$

$$\|\alpha f\| = |\alpha|\, \|f\| \quad \text{for all } f \in C[a, b] \text{ and all scalars } \alpha \tag{4.1.7}$$

$$\|f + g\| \le \|f\| + \|g\| \quad \text{all } f, g \in C[a, b] \tag{4.1.8}$$

The proof of these properties for (4.1.4) is quite straightforward. And the properties show that the norm should be thought of as a generalization of the absolute value of a number.

Although we will not make any significant use of the idea, it is often useful to regard $C[a, b]$ as a vector space. The vectors are the functions $f(x)$, $a \le x \le b$. We define the distance from a vector $f$ to a vector $g$ by

$$D(f, g) = \|f - g\| \tag{4.1.9}$$

which is in keeping with our intuition about the concept of distance in simpler vector spaces. This is illustrated in Figure 4.2.

Using the inequality (4.1.8),

$$\|f - g\| = \|(f - h) + (h - g)\| \le \|f - h\| + \|h - g\|$$

$$D(f, g) \le D(f, h) + D(h, g) \tag{4.1.10}$$

This is called the triangle inequality, because of its obvious interpretation in measuring the lengths of sides of a triangle whose vertices are $f$, $g$, and $h$. The name "triangle inequality" is also applied to the equivalent formulation (4.1.8).

Figure 4.2 Illustration for defining distance $D(f, g)$.

Another useful result is the reverse triangle inequality

$$\bigl|\, \|f\| - \|g\| \,\bigr| \le \|f - g\| \tag{4.1.11}$$

To prove it, use (4.1.8) to give

$$\|f\| \le \|f - g\| + \|g\|$$

$$\|f\| - \|g\| \le \|f - g\|$$

By the symmetric argument,

$$\|g\| - \|f\| \le \|g - f\| = \|f - g\|$$

with the last equality following from (4.1.7) with $\alpha = -1$. Combining these two inequalities proves (4.1.11).

A more complete introduction to vector spaces (although only finite dimensional) and to vector norms is given in Chapter 7. And some additional geometry for $C[a, b]$ is given in Sections 4.3 and 4.4 with the introduction of another norm, different from (4.1.4). For cases where we want to talk about functions that have several continuous derivatives, we introduce the function space $C^r[a, b]$, consisting of functions $f(x)$ that have $r$ continuous derivatives on $[a, b]$. This function space is of independent interest, but we regard it as just a simplifying notational device.

4.2 The Minimax Approximation Problem

Let $f(x)$ be continuous on $[a, b]$. To compare polynomial approximations $p(x)$ to $f(x)$, obtained by various methods, it is natural to ask what is the best possible accuracy that can be attained by using polynomials of each degree $n \ge 0$. Thus we are led to introduce the minimax error

$$\rho_n(f) = \inf_{\deg(q) \le n} \|f - q\|_\infty \tag{4.2.1}$$

There does not exist a polynomial $q(x)$ of degree $\le n$ that can approximate $f(x)$ with a smaller maximum error than $\rho_n(f)$.

Having introduced $\rho_n(f)$, we seek whether there is a polynomial $q_n^*(x)$ for which

$$\rho_n(f) = \|f - q_n^*\|_\infty \tag{4.2.2}$$

And if so, is it unique, what are its characteristics, and how can it be constructed? The approximation $q_n^*(x)$ is called the minimax approximation to $f(x)$ on $[a, b]$, and its theory is developed in Section 4.6.

Example

Compute the minimax polynomial approximation

q;'(x)

to ex on

-1

.:;;;

x.:;;;

1.

Let

qf(x)

= a

0

+ a

1

x. To find a

0

and a

1

,

we

have to use some

geometric insight. Consider the graph of

y =

ex

with that of a possible ap-

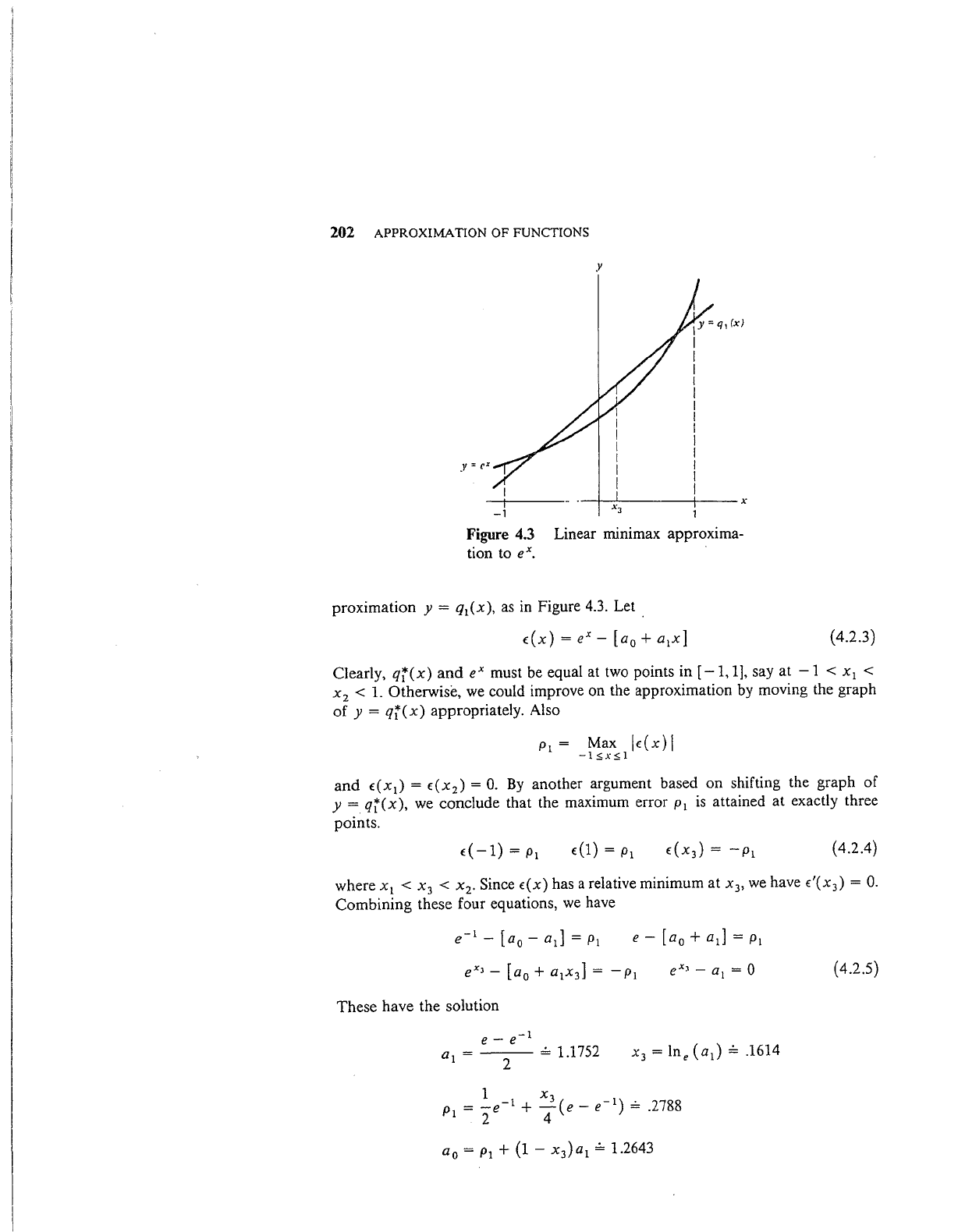

202 APPROXIMATION OF FUNCTIONS

.V

=

cr

-1

Figure

4.3

tion to

ex.

y

--~XLJ-------41-----X

Linear minimax approxima-

proximation

y = q

1

(x),

as in Figure 4.3. Let

t:(x)

=ex-

[a

0

+ a

1

x]

(4.2.3)

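Clearly, $q_1^*(x)$ and $e^x$ must be equal at two points in $[-1, 1]$, say at $-1 < x_1 < x_2 < 1$. Otherwise, we could improve on the approximation by moving the graph of $y = q_1^*(x)$ appropriately. Also

$$\rho_1 = \max_{-1 \le x \le 1} |\epsilon(x)|$$

and $\epsilon(x_1) = \epsilon(x_2) = 0$. By another argument based on shifting the graph of $y = q_1^*(x)$, we conclude that the maximum error $\rho_1$ is attained at exactly three points.

$$\epsilon(-1) = \rho_1, \qquad \epsilon(x_3) = -\rho_1, \qquad \epsilon(1) = \rho_1 \tag{4.2.4}$$

where $x_1 < x_3 < x_2$. Since $\epsilon(x)$ has a relative minimum at $x_3$, we have $\epsilon'(x_3) = 0$. Combining these four equations, we have

$$\begin{aligned}
e^{-1} - [a_0 - a_1] &= \rho_1 \\
e - [a_0 + a_1] &= \rho_1 \\
e^{x_3} - [a_0 + a_1 x_3] &= -\rho_1 \\
e^{x_3} - a_1 &= 0
\end{aligned} \tag{4.2.5}$$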

These

have the solution

a

1

=

--

2

---

= 1.1752

1

X

p

1

=

-e-

1

+

_2(e-

e-

1

)

= .2788

2 4

a

0

= P

1

+

(1-

x

3

)a

1

= 1.2643

Thus

and

p

1

,;,

.2788.

THE MINIMAX APPROXIMATION PROBLEM

203

y

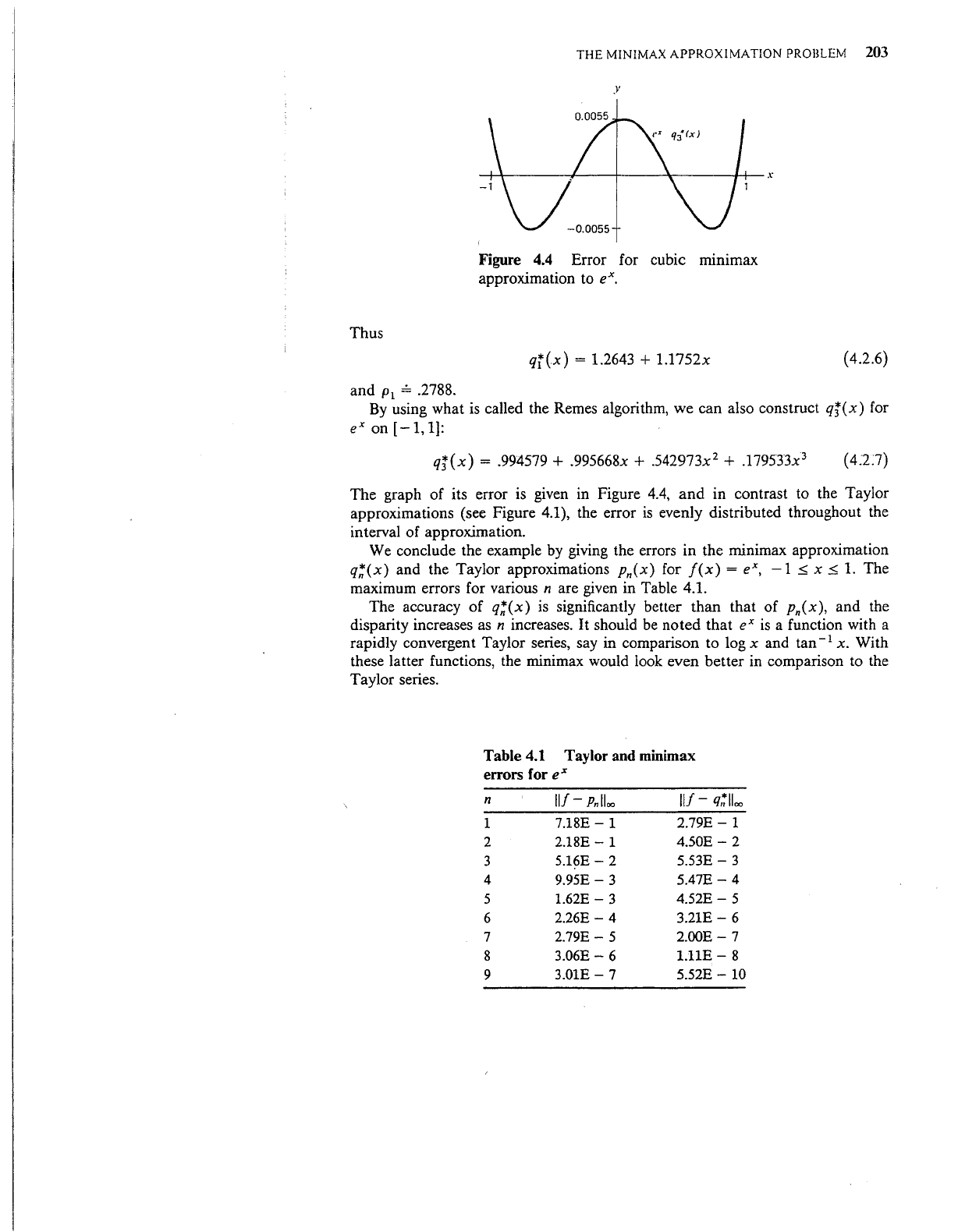

Figure 4.4 Error for cubic minimax

approximation to

ex.

qf(x)

= 1.2643 + 1.1752x

(4.2.6)
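The closed-form solution of the linear minimax example can be checked numerically. The sketch below is illustrative only: the variable names are chosen here, and it simply evaluates the formulas for $a_1$, $x_3$, $\rho_1$, $a_0$ and confirms that the error $e^x - (a_0 + a_1 x)$ takes the values $+\rho_1$, $-\rho_1$, $+\rho_1$ at $-1$, $x_3$, $1$.

```python
import math

# Coefficients of the linear minimax approximation to e^x on [-1, 1]
a1 = (math.e - 1.0 / math.e) / 2.0          # slope, equal to sinh(1)
x3 = math.log(a1)                           # interior point where the error is most negative
rho1 = 0.5 / math.e + (x3 / 4.0) * (math.e - 1.0 / math.e)  # minimax error
a0 = rho1 + (1.0 - x3) * a1                 # intercept


def eps(x):
    """Error e^x - (a0 + a1 x) of the linear approximation."""
    return math.exp(x) - (a0 + a1 * x)


if __name__ == "__main__":
    print(f"a0 = {a0:.4f}, a1 = {a1:.4f}, rho1 = {rho1:.4f}, x3 = {x3:.4f}")
    # The error should alternate in sign with equal magnitude at -1, x3, 1.
    print(eps(-1.0), eps(x3), eps(1.0))
```

Seeing the same magnitude with alternating sign at three points is the equal-ripple behavior that the geometric argument predicts.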

By using what is called the Remes algorithm, we can also construct $q_3^*(x)$ for $e^x$ on $[-1, 1]$:

$$q_3^*(x) = .994579 + .995668x + .542973x^2 + .179533x^3 \tag{4.2.7}$$

The graph of its error is given in Figure 4.4, and in contrast to the Taylor approximations (see Figure 4.1), the error is evenly distributed throughout the interval of approximation.
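As a rough check, the maximum error of the cubic in (4.2.7) can be estimated on a fine grid and compared with the cubic Taylor polynomial. This sketch does not implement the Remes algorithm; it only evaluates the coefficients printed above, and the helper names and grid size are assumptions made for illustration.

```python
import math

# Coefficients of q_3^*(x) from (4.2.7), lowest degree first
Q3 = (0.994579, 0.995668, 0.542973, 0.179533)


def q3(x):
    """Evaluate the cubic minimax polynomial by Horner's rule."""
    result = 0.0
    for c in reversed(Q3):
        result = result * x + c
    return result


def taylor3(x):
    """Cubic Taylor polynomial of e^x about 0."""
    return 1.0 + x + x * x / 2.0 + x**3 / 6.0


def max_abs_error(approx, samples=4001):
    """Grid estimate of max |e^x - approx(x)| on [-1, 1]."""
    xs = (-1.0 + 2.0 * i / (samples - 1) for i in range(samples))
    return max(abs(math.exp(x) - approx(x)) for x in xs)


if __name__ == "__main__":
    print("minimax cubic:", max_abs_error(q3))
    print("Taylor cubic: ", max_abs_error(taylor3))
```

The grid estimate is only a lower bound on the true maximum, but with a few thousand points it is accurate enough to show roughly a ninefold gap between the two cubics.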

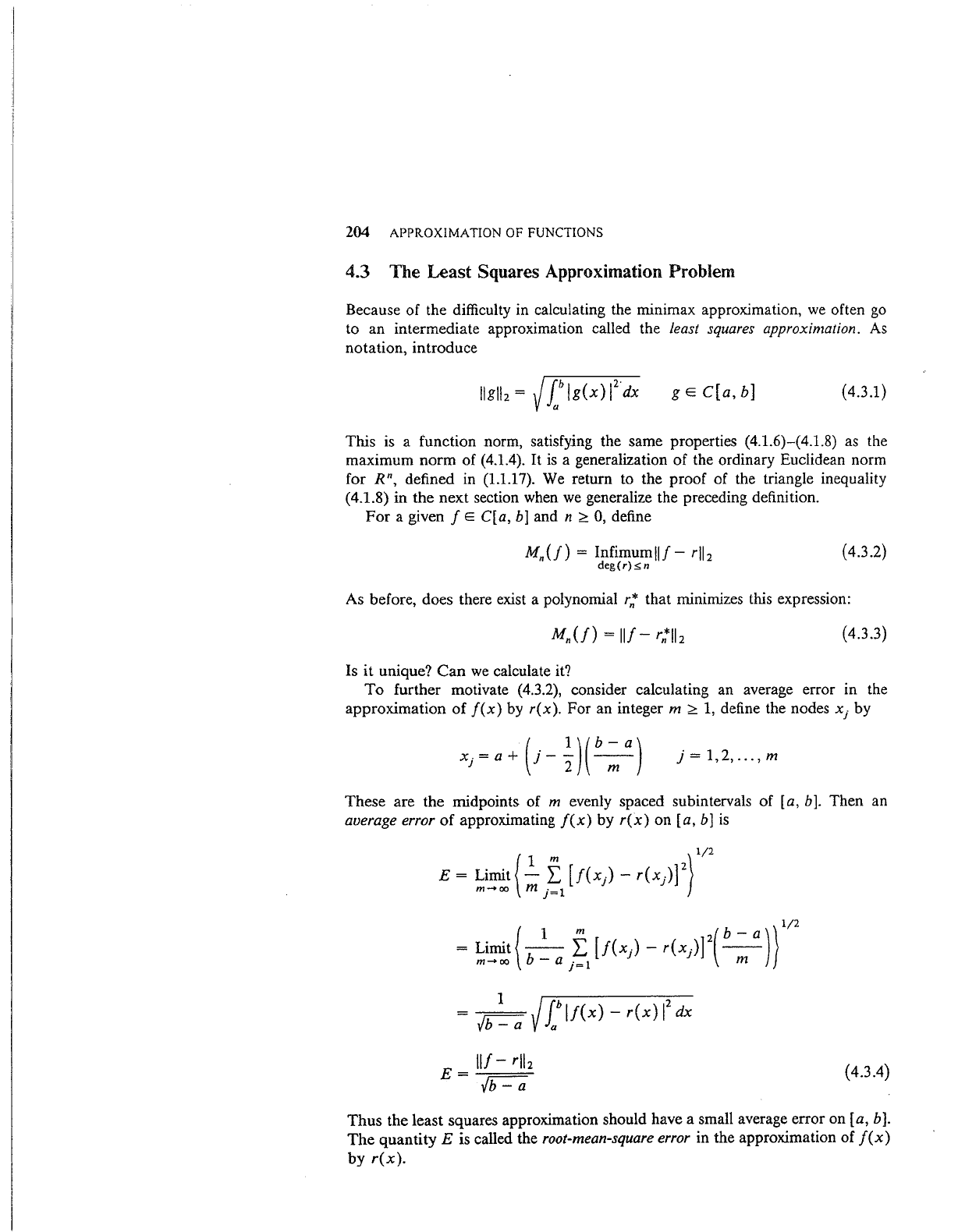

We conclude the example by giving the errors in the minimax approximation $q_n^*(x)$ and the Taylor approximations $p_n(x)$ for $f(x) = e^x$, $-1 \le x \le 1$. The maximum errors for various $n$ are given in Table 4.1. The accuracy of $q_n^*(x)$ is significantly better than that of $p_n(x)$, and the disparity increases as $n$ increases. It should be noted that $e^x$ is a function with a rapidly convergent Taylor series, say in comparison to $\log x$ and $\tan^{-1} x$. With these latter functions, the minimax would look even better in comparison to the Taylor series.

Table 4.1 Taylor and minimax errors for $e^x$

| $n$ | $\lVert f - p_n \rVert_\infty$ | $\lVert f - q_n^* \rVert_\infty$ |
|---|---|---|
| 1 | 7.18E-1 | 2.79E-1 |
| 2 | 2.18E-1 | 4.50E-2 |
| 3 | 5.16E-2 | 5.53E-3 |
| 4 | 9.95E-3 | 5.47E-4 |
| 5 | 1.62E-3 | 4.52E-5 |
| 6 | 2.26E-4 | 3.21E-6 |
| 7 | 2.79E-5 | 2.00E-7 |
| 8 | 3.06E-6 | 1.11E-8 |
| 9 | 3.01E-7 | 5.52E-10 |
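The Taylor column of Table 4.1 is easy to recompute. The sketch below estimates $\|e^x - p_n\|_\infty$ on a grid for the first six degrees; the function names and the grid size are illustrative assumptions, and the minimax column is not recomputed because that would require the Remes algorithm.

```python
import math


def taylor_exp(x, degree):
    """Taylor polynomial of e^x about 0, of the given degree."""
    return sum(x**k / math.factorial(k) for k in range(degree + 1))


def max_taylor_error(degree, samples=2001):
    """Grid estimate of max |e^x - p_degree(x)| on [-1, 1]."""
    xs = (-1.0 + 2.0 * i / (samples - 1) for i in range(samples))
    return max(abs(math.exp(x) - taylor_exp(x, degree)) for x in xs)


if __name__ == "__main__":
    for n in range(1, 7):
        print(f"n = {n}   max error = {max_taylor_error(n):.2E}")
```

For $e^x$ every term of the remainder series is largest in magnitude at $x = 1$, so the maximum is attained at the right endpoint, which the grid includes exactly.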


4.3 The Least Squares Approximation Problem

Because of the difficulty in calculating the minimax approximation, we often go to an intermediate approximation called the least squares approximation. As notation, introduce

$$\|g\|_2 = \sqrt{\int_a^b |g(x)|^2 \, dx}, \qquad g \in C[a, b] \tag{4.3.1}$$

This is a function norm, satisfying the same properties (4.1.6)-(4.1.8) as the maximum norm of (4.1.4). It is a generalization of the ordinary Euclidean norm for $\mathbb{R}^n$, defined in (1.1.17). We return to the proof of the triangle inequality (4.1.8) in the next section when we generalize the preceding definition.

For a given $f \in C[a, b]$ and $n \ge 0$, define

$$M_n(f) = \inf_{\deg(r) \le n} \|f - r\|_2 \tag{4.3.2}$$

As before, does there exist a polynomial $r_n^*$ that minimizes this expression:

$$M_n(f) = \|f - r_n^*\|_2 \tag{4.3.3}$$

Is it unique? Can we calculate it?

To further motivate (4.3.2), consider calculating an average error in the approximation of $f(x)$ by $r(x)$. For an integer $m \ge 1$, define the nodes $x_j$ by

$$x_j = a + \left(j - \tfrac{1}{2}\right) \frac{b - a}{m}, \qquad j = 1, 2, \ldots, m$$

These are the midpoints of $m$ evenly spaced subintervals of $[a, b]$. Then an average error of approximating $f(x)$ by $r(x)$ on $[a, b]$ is

$$\begin{aligned}
E &= \lim_{m \to \infty} \left\{ \frac{1}{m} \sum_{j=1}^{m} [f(x_j) - r(x_j)]^2 \right\}^{1/2} \\
&= \lim_{m \to \infty} \left\{ \frac{1}{b - a} \sum_{j=1}^{m} [f(x_j) - r(x_j)]^2 \left( \frac{b - a}{m} \right) \right\}^{1/2} \\
&= \sqrt{ \frac{1}{b - a} \int_a^b |f(x) - r(x)|^2 \, dx }
\end{aligned}$$

$$E = \frac{\|f - r\|_2}{\sqrt{b - a}} \tag{4.3.4}$$

Thus the least squares approximation should have a small average error on $[a, b]$. The quantity $E$ is called the root-mean-square error in the approximation of $f(x)$ by $r(x)$.
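The limit defining $E$ can be watched converging. In the sketch below, `rms_error` is a hypothetical helper that averages the squared error over the $m$ midpoints; as an example it is applied to $e^x$ and the linear minimax polynomial from (4.2.6) on $[-1, 1]$.

```python
import math


def rms_error(f, r, a, b, m):
    """Root-mean-square of f - r over the m subinterval midpoints of [a, b]."""
    h = (b - a) / m
    nodes = (a + (j - 0.5) * h for j in range(1, m + 1))
    return math.sqrt(sum((f(x) - r(x)) ** 2 for x in nodes) / m)


def minimax_line(x):
    """Linear minimax approximation to e^x from (4.2.6)."""
    return 1.2643 + 1.1752 * x


if __name__ == "__main__":
    for m in (10, 100, 1000, 10000):
        # The values settle down as m grows, approaching ||f - r||_2 / sqrt(b - a).
        print(m, rms_error(math.exp, minimax_line, -1.0, 1.0, m))
```

The sum inside is a midpoint-rule approximation to the integral, which is why the discrete average tends to the $\|\cdot\|_2$ expression in (4.3.4).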


Example Let $f(x) = e^x$, $-1 \le x \le 1$, and let $r_1(x) = b_0 + b_1 x$. Minimize

$$\|f - r_1\|_2^2 = \int_{-1}^{1} [e^x - b_0 - b_1 x]^2 \, dx \equiv F(b_0, b_1) \tag{4.3.5}$$

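If we expand the integrand and break the integral apart, then $F(b_0, b_1)$ is a quadratic polynomial in the two variables $b_0$, $b_1$,

$$F = \int_{-1}^{1} \left\{ e^{2x} + b_0^2 + b_1^2 x^2 - 2 b_0 e^x - 2 b_1 x e^x + 2 b_0 b_1 x \right\} dx$$

To find a minimum, we set

$$\frac{\partial F}{\partial b_0} = 0, \qquad \frac{\partial F}{\partial b_1} = 0$$

which is a necessary condition at a minimal point. Rather than differentiating in the previously given integral, we merely differentiate through the integral in (4.3.5),

$$\frac{\partial F}{\partial b_0} = \int_{-1}^{1} 2\,[e^x - b_0 - b_1 x](-1)\, dx = 0, \qquad \frac{\partial F}{\partial b_1} = \int_{-1}^{1} 2\,[e^x - b_0 - b_1 x](-x)\, dx = 0$$

Then

$$b_0 = \frac{1}{2} \int_{-1}^{1} e^x \, dx = \sinh(1) \approx 1.1752$$

$$b_1 = \frac{3}{2} \int_{-1}^{1} x e^x \, dx = 3e^{-1} \approx 1.1036$$

$$r_1^*(x) = 1.1752 + 1.1036x \tag{4.3.6}$$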

By direct examination,

$$\|e^x - r_1^*\|_\infty \approx .439$$

This is intermediate to the approximations $q_1^*$ and $p_1(x)$ derived earlier. Usually the least squares approximation is a fairly good uniform approximation, superior to the Taylor series approximations.
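The linear least squares coefficients can be verified numerically. The following sketch assumes a simple midpoint rule (`midpoint`, an illustrative helper) is accurate enough; it evaluates the closed forms $b_0 = \sinh(1)$ and $b_1 = 3e^{-1}$, checks that nearby coefficients give a larger value of $F$, and estimates the maximum error on a grid.

```python
import math

b0 = math.sinh(1.0)   # (1/2) * integral of e^x over [-1, 1]
b1 = 3.0 / math.e     # (3/2) * integral of x e^x over [-1, 1]


def midpoint(g, a, b, m=20000):
    """Composite midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / m
    return h * sum(g(a + (j + 0.5) * h) for j in range(m))


def F(c0, c1):
    """Squared 2-norm error of c0 + c1 x as an approximation to e^x on [-1, 1]."""
    return midpoint(lambda x: (math.exp(x) - c0 - c1 * x) ** 2, -1.0, 1.0)


def max_error(c0, c1, samples=4001):
    """Grid estimate of max |e^x - (c0 + c1 x)| on [-1, 1]."""
    xs = (-1.0 + 2.0 * i / (samples - 1) for i in range(samples))
    return max(abs(math.exp(x) - c0 - c1 * x) for x in xs)


if __name__ == "__main__":
    print(f"b0 = {b0:.4f}, b1 = {b1:.4f}")
    print("F at minimum:  ", F(b0, b1))
    print("F after nudge: ", F(b0 + 0.01, b1 - 0.01))
    print("max error:     ", max_error(b0, b1))
```

Because $F$ is a quadratic with positive definite second-order terms, any perturbation of $(b_0, b_1)$ increases it, which is what the nudged evaluation illustrates.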

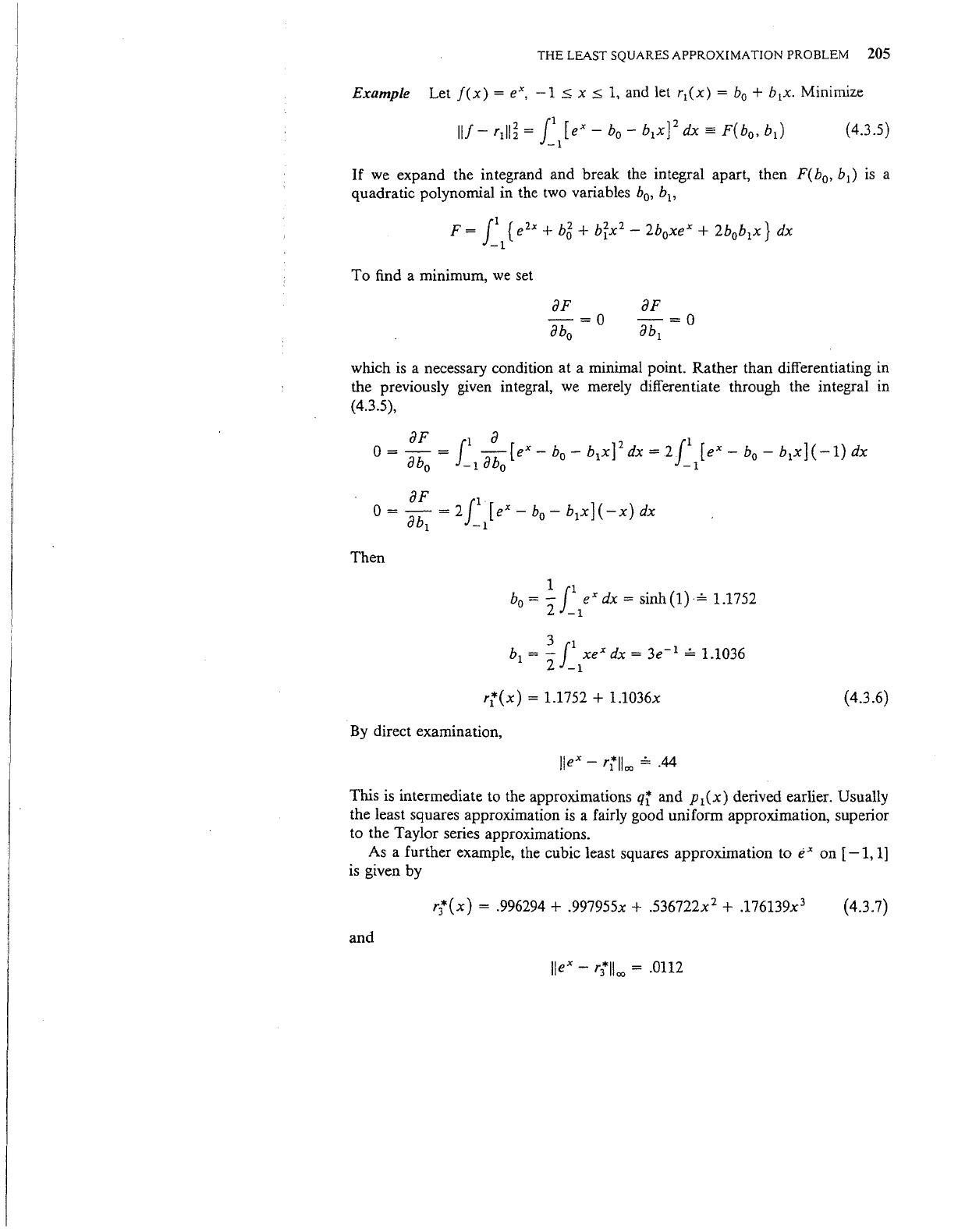

As a further example, the cubic least squares approximation to

ex

on [

-1,

1]

is given by

rt(x)

= .996294 + .997955x + .536722x

1

+ .176139x

3

(4.3.7)

and

206

APPROXIMATION

OF

FUNCTIONS

y

0.011

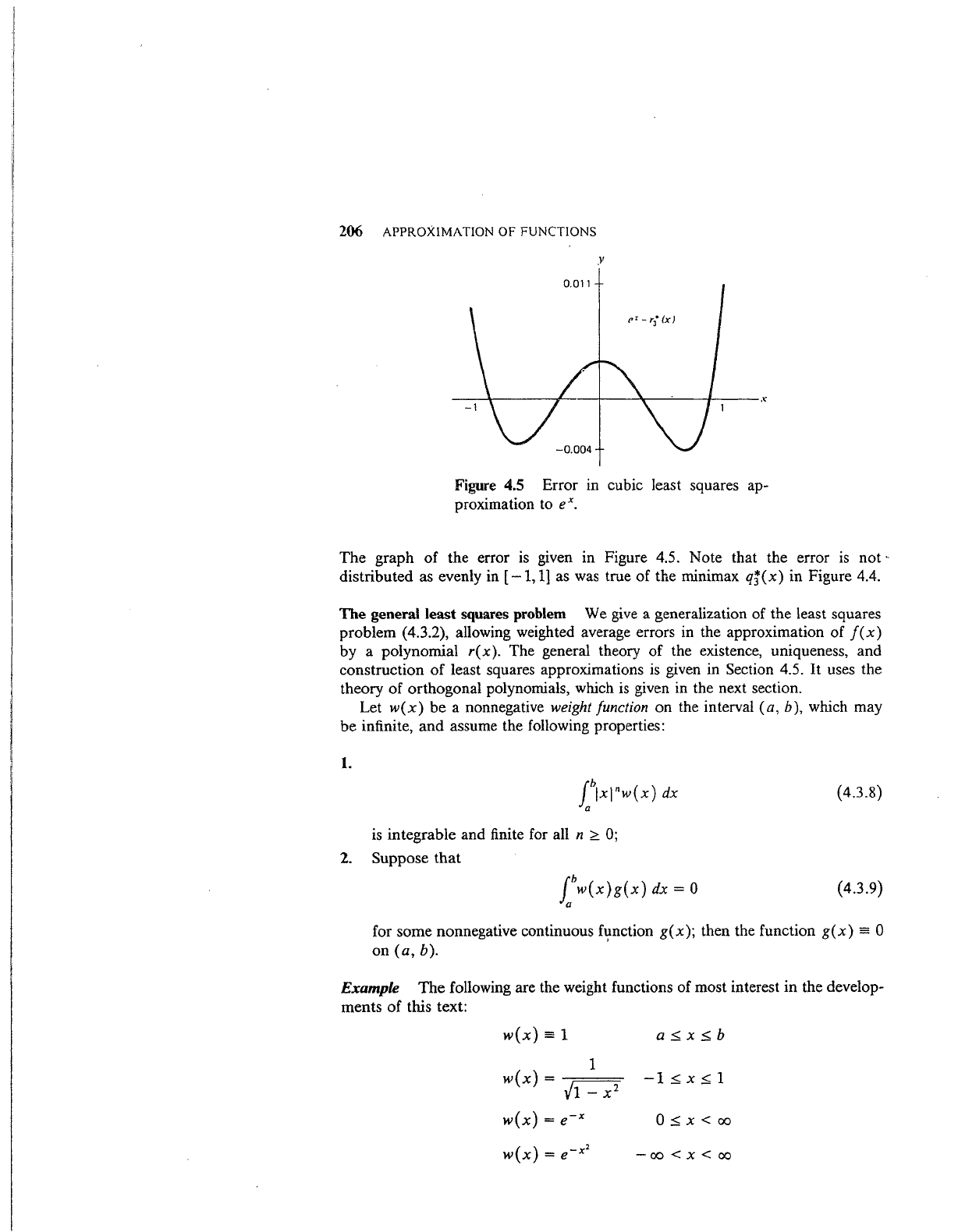

Figure 4.5 Error m cubic least squares ap-

proximation to ex.

The graph of the error is given in Figure 4.5. Note that the error is not distributed as evenly in $[-1, 1]$ as was true of the minimax $q_3^*(x)$ in Figure 4.4.

The general least squares problem We give a generalization of the least squares problem (4.3.2), allowing weighted average errors in the approximation of $f(x)$ by a polynomial $r(x)$. The general theory of the existence, uniqueness, and construction of least squares approximations is given in Section 4.5. It uses the theory of orthogonal polynomials, which is given in the next section.

Let $w(x)$ be a nonnegative weight function on the interval $(a, b)$, which may be infinite, and assume the following properties:

1. The integral

$$\int_a^b |x|^n w(x) \, dx \tag{4.3.8}$$

is integrable and finite for all $n \ge 0$;

2. Suppose that

$$\int_a^b w(x) g(x) \, dx = 0 \tag{4.3.9}$$

for some nonnegative continuous function $g(x)$; then the function $g(x) \equiv 0$ on $(a, b)$.

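Example The following are the weight functions of most interest in the developments of this text:

$$w(x) = 1, \qquad a \le x \le b$$

$$w(x) = \frac{1}{\sqrt{1 - x^2}}, \qquad -1 \le x \le 1$$

$$w(x) = e^{-x}, \qquad 0 \le x < \infty$$

$$w(x) = e^{-x^2}, \qquad -\infty < x < \infty$$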


For a finite interval $[a, b]$, the general least squares problem can now be stated.

Given $f \in C[a, b]$, does there exist a polynomial $r_n^*(x)$ of degree $\le n$ that minimizes

$$\int_a^b w(x)\,[f(x) - r(x)]^2 \, dx \tag{4.3.10}$$

among all polynomials $r(x)$ of degree $\le n$?

The function $w(x)$ allows different degrees of importance to be given to the error at different points in the interval $[a, b]$. This will prove useful in developing near minimax approximations.

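Define

$$F(a_0, a_1, \ldots, a_n) = \int_a^b w(x) \left[ f(x) - \sum_{j=0}^{n} a_j x^j \right]^2 dx \tag{4.3.11}$$

in order to compute (4.3.10) for an arbitrary polynomial $r(x)$ of degree $\le n$. We want to minimize $F$ as the coefficients $\{a_i\}$ range over all real numbers. A necessary condition for a point $(a_0, \ldots, a_n)$ to be a minimizing point is

$$\frac{\partial F}{\partial a_i} = 0, \qquad i = 0, 1, \ldots, n \tag{4.3.12}$$

By differentiating through the integral in (4.3.11) and using (4.3.12), we obtain the linear system

$$\sum_{j=0}^{n} a_j \int_a^b w(x)\, x^{i+j} \, dx = \int_a^b w(x) f(x)\, x^i \, dx, \qquad i = 0, 1, \ldots, n \tag{4.3.13}$$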

To see why this solution of the least squares problem is unsatisfactory, consider the special case $w(x) = 1$, $[a, b] = [0, 1]$. Then the linear system becomes

$$\sum_{j=0}^{n} \frac{a_j}{i + j + 1} = \int_0^1 f(x)\, x^i \, dx, \qquad i = 0, 1, \ldots, n \tag{4.3.14}$$

The matrix of coefficients is the Hilbert matrix of order $n + 1$, which was introduced in (1.6.9). The solution of linear system (4.3.14) is extremely sensitive to small changes in the coefficients or right-hand constants. Thus this is not a good way to approach the least squares problem. In single precision arithmetic on an IBM 3033 computer, the cases $n \ge 4$ will be completely unsatisfactory.
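One way to quantify this sensitivity is the condition number of the Hilbert matrix. The sketch below is an illustration, not the text's method: it uses exact rational arithmetic from the standard library to invert the matrix and form $\|H\|_\infty \|H^{-1}\|_\infty$, and the function names are chosen here.

```python
from fractions import Fraction


def hilbert(order):
    """Hilbert matrix with entries 1 / (i + j + 1), as exact fractions."""
    return [[Fraction(1, i + j + 1) for j in range(order)] for i in range(order)]


def inverse(matrix):
    """Exact inverse by Gauss-Jordan elimination on an augmented matrix."""
    n = len(matrix)
    aug = [
        row[:] + [Fraction(int(i == j)) for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    for col in range(n):
        # Find a row with a nonzero pivot and move it into place.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def inf_norm(matrix):
    """Maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in matrix)


def hilbert_condition(order):
    """Infinity-norm condition number of the Hilbert matrix of the given order."""
    h = hilbert(order)
    return inf_norm(h) * inf_norm(inverse(h))


if __name__ == "__main__":
    for order in range(2, 9):
        print(order, float(hilbert_condition(order)))
```

The condition number grows by a factor of roughly 30 with each increase in order, so for $n = 4$ (order 5) it is already near $10^6$, comparable to the reciprocal of single precision unit roundoff.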

4.4 Orthogonal Polynomials

As is evident from graphs of $x^n$ on $[0, 1]$, $n \ge 0$, these monomials are very nearly linearly dependent, looking much alike, and this results in the instability of the linear system (4.3.14). To avoid this problem, we consider an alternative basis for