Alfred DeMaris - Regression with Social Data, Modeling Continuous and Limited Response Variables


or, in more compact notation,

$$E(Y_i) = \sum_{k} \beta_k X_{ik}, \qquad (3.2)$$

where the index $k$ ranges from 0 to $K$, and $X_{i0}$ equals 1 for all cases. [From here on, for economy of presentation, I denote the conditional mean in the regression model as $E(Y_i)$ instead of $E(Y_i \mid X_{i1}, X_{i2}, \ldots, X_{iK})$.] The right-hand side of equation (3.2), called the linear predictor, represents the structural part of the model. As in SLR, the conditional mean is assumed to be a linear function of the equation parameters, the $\beta$'s. Now, however, the points $(x_1, x_2, \ldots, x_K, y)$ no longer lie on a straight line. Rather, they lie on a hyperplane in $(K + 1)$-dimensional space. A particular combination of values, $x_1, x_2, \ldots, x_K$, for the $X$'s is called a covariate pattern (Hosmer and Lemeshow, 2000). $E(Y_i)$ is therefore the mean of the $Y_i$'s for all cases with the same covariate pattern. As before, the equation disturbances, the $\varepsilon_i$'s, represent the departure of the individual $Y_i$'s at any given covariate pattern from their mean, $E(Y_i)$.

The other two quantities that are of importance in MULR are $\sigma^2$ and $P^2$ (rho-squared). As before, $\sigma^2$ is $V(\varepsilon)$, the variance of the equation errors, which is assumed to be constant at each covariate pattern. Because the variance in $Y$ at each covariate pattern is assumed to be due to random error alone, $\sigma^2$ is also the conditional variance of $Y$. $P^2$ is the coefficient of determination for the MULR model and, as in SLR, is the primary index of the model's discriminatory power. $P^2$ can be understood, once again, by expressing the variance of $Y$ in terms of the model:

$$V(Y_i) = V\Big(\sum_k \beta_k X_{ik} + \varepsilon_i\Big) = V\Big(\sum_k \beta_k X_{ik}\Big) + V(\varepsilon_i).$$

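(No covariance term appears in the last step because the errors are assumed to be uncorrelated with the $X$'s, and hence with the linear predictor.) Dividing through by $V(Y_i)$, we have

$$1 = \frac{V\big(\sum_k \beta_k X_{ik}\big)}{V(Y_i)} + \frac{V(\varepsilon_i)}{V(Y_i)},$$

or

$$1 = P^2 + \frac{\sigma^2}{V(Y_i)}.$$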

$P^2$ is therefore the proportion of the variance in $Y$ that is due to variation in the linear predictor, that is, to the structural part of the model.
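A small simulation can make this variance decomposition concrete. The sketch below assumes a hypothetical population with two regressors, arbitrary $\beta$'s, and normal errors drawn independently of the $X$'s. It checks that the linear predictor's share of $V(Y)$ is close to the theoretical $P^2$ and that this share plus $\sigma^2/V(Y)$ is close to 1 (sample covariances keep the match from being exact).

```python
import numpy as np

# Hypothetical population values (assumptions for illustration only).
rng = np.random.default_rng(0)
n = 200_000
beta = np.array([2.0, 1.5, -0.8])        # beta_0, beta_1, beta_2
sigma2 = 4.0                             # V(epsilon)

# X_0 = 1 for every case; X_1 ~ N(0, sd 2), X_2 ~ N(5, sd 1), independent.
X = np.column_stack([np.ones(n), rng.normal(0, 2, n), rng.normal(5, 1, n)])
linear_predictor = X @ beta              # structural part of the model
eps = rng.normal(0, np.sqrt(sigma2), n)  # errors drawn independently of the X's
y = linear_predictor + eps

var_y = y.var()
P2 = linear_predictor.var() / var_y      # share of V(Y) due to the linear predictor
print(round(P2, 3))                       # near 9.64 / 13.64, i.e., about 0.707
print(round(P2 + eps.var() / var_y, 3))   # near 1.0: the two shares add up
```

Here the theoretical value is $P^2 = (1.5^2 \cdot 4 + 0.8^2 \cdot 1)/(9.64 + 4) \approx .707$.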

Interpretation of the Betas. The interpretation of the betas is, as in SLR, facilitated by manipulating equation (3.2) so as to isolate $\beta_0$ and then each $\beta_k$. By setting all of the $X$'s to zero, we see that $\beta_0$ is the expected value of $Y$ when all of the $X$'s equal zero. The interpretation of any $\beta_k$, say $\beta_1$, can be seen by considering an increase of 1 unit in $X_1$ while holding all other $X$'s constant at specific values. If we let $x_{-1}$ represent all of the $X$'s other than $X_1$, the change in the mean of $Y$ is

$$\begin{aligned}
E(Y \mid x_1 + 1, x_{-1}) - E(Y \mid x_1, x_{-1})
&= \beta_0 + \beta_1 (x_1 + 1) + \beta_2 x_2 + \cdots + \beta_K x_K \\
&\quad - (\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_K x_K) \\
&= \beta_1 (x_1 + 1 - x_1) = \beta_1.
\end{aligned}$$

Here it is clear that $\beta_1$ represents the change in the mean of $Y$ for a unit increase in $X_1$, controlling for the other regressors in the model. Or, in language with fewer causal connotations, $\beta_1$ is the expected difference in $Y$ for those who are 1 unit apart on $X_1$, controlling for the other regressors in the model. As in SLR, $\beta_1$ (and $\beta_k$ generally) is both the unit impact of $X_1$ (or $X_k$) on $E(Y)$ and the first partial derivative of $E(Y)$ with respect to $X_1$ (or $X_k$).
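As a numerical companion to the derivation above, the following sketch fits OLS to simulated data (the coefficients and the two covariate patterns are arbitrary assumptions) and confirms that raising $X_1$ by one unit, with the other $X$'s held fixed, changes the fitted mean by exactly $b_1$, whatever the starting covariate pattern.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 500
# Simulated data (assumed betas) with an intercept column X_0 = 1.
X = np.column_stack([np.ones(n), rng.normal(size=(n, 3))])
y = X @ np.array([1.0, 0.5, -2.0, 3.0]) + rng.normal(size=n)

b, *_ = np.linalg.lstsq(X, y, rcond=None)   # OLS estimates b_0, ..., b_3

# Two arbitrary covariate patterns; for each, raise X_1 by one unit
# while holding X_2 and X_3 fixed, and compare the fitted means.
diffs = []
for x in (np.array([1.0, 0.3, -1.2, 2.0]), np.array([1.0, -4.0, 5.0, 0.0])):
    x_plus = x.copy()
    x_plus[1] += 1.0
    diffs.append(x_plus @ b - x @ b)

print(np.allclose(diffs, b[1]))   # True: the change is b_1 at every pattern
```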

Assumptions of the Model. The assumptions of MULR relevant to estimation via OLS mirror those for SLR, with perhaps a couple of exceptions:

1. The relationship between $Y$ and the $X$'s is linear in the parameters; that is,

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \cdots + \beta_K X_{iK} + \varepsilon_i \quad \text{for } i = 1, 2, \ldots, n.$$

What does this mean? It means that the parameters enter the equation in a linear fashion, or that the equation is a weighted sum of the parameters, where the weights are now the $X$'s. An example of an equation that is nonlinear in the parameters is $Y_i = \beta_0 X_{i1}^{\beta_1} X_{i2}^{\beta_2} \varepsilon_i$. Here, since $\beta_1$ and $\beta_2$ enter as exponents of the $X$'s, the right-hand side of this equation clearly cannot be described as a weighted sum of the $\beta$'s. On the other hand, this equation is easily made into one that is linear in the parameters by taking the log of both sides: $\ln Y_i = \ln \beta_0 + \beta_1 \ln X_{i1} + \beta_2 \ln X_{i2} + \ln \varepsilon_i$. In contrast, there is no simple way to linearize the equation

$$Y_i = \beta_0 + X_{i1}^{\beta_1} + X_{i2}^{\beta_2} + \varepsilon_i.$$

2. The observations are sampled independently.

3. $Y$ is approximately interval-level, or binary (although the ideal procedures when $Y$ is binary are probit or logistic regression, described in Chapter 7). The $X$'s are approximately interval-level, or dummy variables. Dummy variables are binary-coded $X$'s that are used to represent qualitative predictors or predictors that are to be treated as qualitative (more about this in Chapter 4).

4. The $X$'s are fixed over repeated sampling. As in the case of SLR, this requirement can be waived if we are willing to make our results conditional on the observed sample values of the $X$'s.

5. $E(\varepsilon_i) = 0$ at each covariate pattern. This is the orthogonality condition.

6. $V(\varepsilon_i) = \sigma^2$ at each covariate pattern.

7. $\mathrm{Cov}(\varepsilon_i, \varepsilon_j) = 0$ for $i \neq j$; that is, the errors are uncorrelated with each other. Again, this is equivalent to assumption 2 if the data are cross-sectional.


8. The errors are normally distributed.

9. None of the $X_k$ is a perfect linear combination, or weighted sum, of the other $X$'s in the model. That is, if we regress each $X_k$ on all of the other $X$'s in the model, no such MULR would produce an $R^2$ of 1.0. Should we find an $R^2$ of 1.0 for the regression of one or more $X$'s on the remaining $X$'s, we say that there is an exact collinearity among the $X$'s; that is, at least one of the $X$'s is completely determined by the others. Under this condition, the equation parameters are no longer identified, and there is no unique OLS solution to the normal equations. This is almost never a problem, although once in a while the unsuspecting analyst will try to use $X$'s that are exactly collinear in a regression. One situation that produces this condition is when one tries to model an $M$-category qualitative variable using all $M$ dummy variables that can be formed from the categories. I postpone discussion of dummies until Chapter 4. Another scenario resulting in perfect collinearity occurs when someone tries to enter, say, age at marriage, marital duration, and current age, all measured in years, into a model. Because current age = age at marriage + marital duration, these variables are exactly collinear. If this happens, it is immediately obvious from software output: there are no regression results, and an error message appears warning the analyst that the “$X^{T}X$ matrix” is “singular” or “has no inverse.” (In Chapter 6, where I discuss the matrix representation of the regression model, these concepts will be clearer.) Although perfect collinearity is rare, a somewhat more common problem occurs under conditions of near-collinearity among the $X$'s. This occurs when the regression of a given $X_k$ on the other $X$'s produces an $R^2$ close to 1.0, say .98. This situation is termed multicollinearity. Unlike the case of exact collinearity, it does not violate an assumption of regression. The normal equations can still be solved and parameter estimates produced. However, the estimates and their standard errors may be quite “flawed.” The symptoms, consequences, diagnosis, and remedies for multicollinearity are taken up briefly below, and with substantially greater rigor in Chapter 6.
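Two items in the list above lend themselves to a quick numerical check. The sketch below uses simulated data with arbitrary, assumed parameter values. Part (a) takes logs of the multiplicative model from assumption 1 and recovers $\beta_1$ and $\beta_2$ as ordinary OLS slopes (the intercept estimates $\ln \beta_0$). Part (b) builds the age-at-marriage, marital-duration, and current-age example from assumption 9: the design matrix has rank 3 rather than 4, so $X^{T}X$ has no inverse, and the auxiliary regression of current age on the other $X$'s yields an $R^2$ of 1.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 1000

# (a) Assumption 1: Y = b0 * X1**b1 * X2**b2 * eps is nonlinear in the parameters,
#     but ln Y = ln b0 + b1 ln X1 + b2 ln X2 + ln eps is linear in them.
b0, b1, b2 = 3.0, 0.7, -0.4                 # assumed population values
x1 = rng.uniform(1, 10, n)
x2 = rng.uniform(1, 10, n)
eps = np.exp(rng.normal(0, 0.1, n))         # positive, multiplicative error
y = b0 * x1**b1 * x2**b2 * eps

L = np.column_stack([np.ones(n), np.log(x1), np.log(x2)])
coef, *_ = np.linalg.lstsq(L, np.log(y), rcond=None)
print("b1, b2 estimates:", coef[1:].round(2), " b0 estimate:", np.exp(coef[0]).round(2))

# (b) Assumption 9: current age = age at marriage + marital duration.
age_at_marriage = rng.uniform(18, 40, n)
duration = rng.uniform(0, 30, n)
current_age = age_at_marriage + duration
X = np.column_stack([np.ones(n), age_at_marriage, duration, current_age])
print("columns:", X.shape[1], " rank:", np.linalg.matrix_rank(X))  # rank 3 < 4 columns

# Auxiliary regression of current age on the other X's gives R^2 = 1.
A = X[:, :3]
g, *_ = np.linalg.lstsq(A, current_age, rcond=None)
resid = current_age - A @ g
aux_r2 = 1 - resid @ resid / np.sum((current_age - current_age.mean()) ** 2)
print("auxiliary R^2:", round(aux_r2, 6))
```

Because $X$ has rank 3, $X^{T}X$ (a 4 by 4 matrix) also has rank 3 and is singular, which is what the software error message reports.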

Estimation via OLS. Estimation of the MULR model proceeds in a fashion similar to estimation of the SLR model. The idea, once again, is to find the $b_0, b_1, b_2, \ldots, b_K$ that minimize SSE, where

$$\mathrm{SSE} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} \Big[ y_i - \Big( \sum_k b_k x_{ik} \Big) \Big]^2.$$

For any given sample of data values, this expression is clearly only a function of the $b_k$'s. To find the $b_k$'s that minimize it, we take the first partial derivative of SSE with respect to each of the $b_k$'s and set each resulting expression to zero. This produces a set of simultaneous equations representing the multivariate version of the normal equations. These are then solved to find the $b_k$'s, the OLS estimates of the $\beta_k$'s (the solution, in matrix form, is shown in Chapter 6).
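The text defers the matrix solution to Chapter 6; as a preview, the sketch below assumes the standard matrix form of the normal equations, $(X^{T}X)b = X^{T}y$, where $X$ holds a column of ones followed by the regressors. The data are simulated with assumed parameter values. The sketch solves the normal equations directly, compares the result with NumPy's least-squares routine, and checks that every partial derivative of SSE is (numerically) zero at the solution.

```python
import numpy as np

def ols_normal_equations(X, y):
    """Solve the normal equations (X'X) b = X'y.

    X is an n x (K + 1) array whose first column is all ones (X_0 = 1).
    """
    return np.linalg.solve(X.T @ X, X.T @ y)

# Simulated data with assumed parameter values.
rng = np.random.default_rng(3)
n, K = 300, 3
X = np.column_stack([np.ones(n), rng.normal(size=(n, K))])
y = X @ np.array([10.0, 2.0, -1.0, 0.5]) + rng.normal(scale=2.0, size=n)

b = ols_normal_equations(X, y)
b_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)   # SVD-based least squares

# At the minimum, each partial derivative of SSE, -2 * sum_i x_ik (y_i - yhat_i), is zero.
gradient = -2 * X.T @ (y - X @ b)
print(b.round(3))
print(np.allclose(b, b_lstsq), np.allclose(gradient, 0, atol=1e-8))
```

In practice, `np.linalg.lstsq` (or a QR decomposition) is usually preferred to forming $X^{T}X$ explicitly, since it is less sensitive to near-collinearity.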

As in SLR, we are also interested in estimating $\sigma^2$ and $P^2$. In MULR, as in SLR, the estimate of $\sigma^2$ is SSE divided by its degrees of freedom, which is $n - K - 1$.


Thus,

$$\hat{\sigma}^2 = \mathrm{MSE} = \frac{\mathrm{SSE}}{n - K - 1}.$$

$R^2$, the estimate of $P^2$, has the same basic formula in MULR as in SLR:

$$R^2 = 1 - \frac{\mathrm{SSE}}{\mathrm{TSS}}.$$

In MULR, $R^2$ is the proportion of variation in $Y$ that is accounted for by the collection of $X$'s in the model, as represented by the linear predictor. $R^2$ is also the squared correlation of $Y$ with its model-fitted value. That is,

$$R^2 = [\mathrm{corr}(y, \hat{y})]^2.$$
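The following sketch (simulated data; $n = 214$ and the $\beta$'s are assumptions chosen only for illustration) computes MSE, $R^2$, and the squared correlation between $y$ and $\hat{y}$, confirming that the two versions of $R^2$ agree. It also computes the regression sum of squares, the quantity labeled RSS in Table 3.1, and checks that RSS and SSE add up to TSS.

```python
import numpy as np

rng = np.random.default_rng(4)
n, K = 214, 2
X = np.column_stack([np.ones(n), rng.normal(size=(n, K))])
y = X @ np.array([50.0, 4.0, 6.0]) + rng.normal(scale=12.0, size=n)  # sigma^2 = 144 (assumed)

b, *_ = np.linalg.lstsq(X, y, rcond=None)
y_hat = X @ b
sse = np.sum((y - y_hat) ** 2)          # error sum of squares
rss = np.sum((y_hat - y.mean()) ** 2)   # regression sum of squares ("RSS" in Table 3.1)
tss = np.sum((y - y.mean()) ** 2)       # total sum of squares
mse = sse / (n - K - 1)                 # sigma-hat^2
r2 = 1 - sse / tss
r2_corr = np.corrcoef(y, y_hat)[0, 1] ** 2

print(round(mse, 2), round(r2, 4), round(r2_corr, 4))
print(np.isclose(rss + sse, tss), np.isclose(r2, r2_corr))   # True True
```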

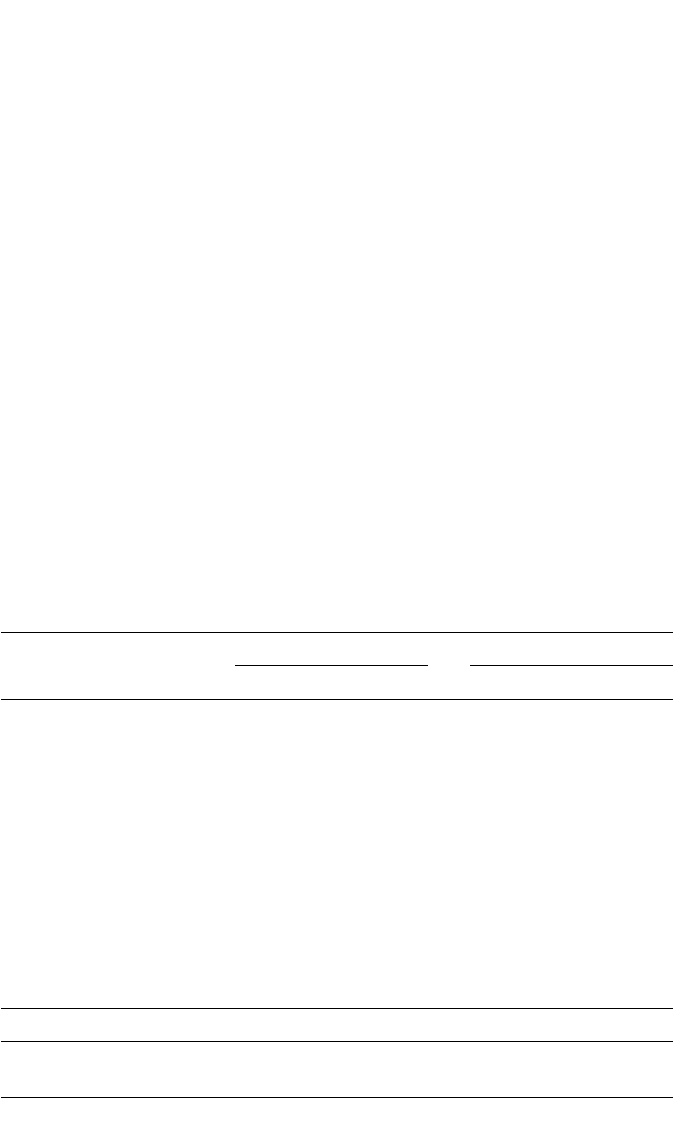

Example. Table 3.1 shows the results of three different regression analyses of students' scores on the first exam in introductory statistics, for 214 students. The first model is just an SLR of exam1 score on the math diagnostic score and is essentially a replication of the analysis in Table 2.2. The second model adds college GPA as a predictor of exam scores, whereas the third model adds attitude toward statistics (a continuous variable ranging from −10 to 20, with higher values indicating a more positive attitude), class hours in the current semester (number of class hours the student is currently taking), and number of previous math courses (the number of previous college-level math courses taken by the student).

Table 3.1 Regression Models for Score on the First Exam for 214 Students in Introductory Statistics

| Regressor | Model 1 $b$ | Model 1 $b^s$ | Model 2 $b$ | Model 2 $b^s$ | Model 3 $b$ | Model 3 $b^s$ |
|---|---|---|---|---|---|---|
| Intercept | −35.521** | .000 | −57.923*** | .000 | −65.199*** | .000 |
| Math diagnostic score | 2.749*** | .517 | 2.275*** | .428 | 2.052*** | .386 |
| College GPA | | | 13.526*** | .398 | 13.659*** | .402 |
| Attitude toward statistics | | | | | .376* | .122 |
| Class hours in the current semester | | | | | .819* | .115 |
| Number of previous math courses | | | | | 1.580 | .094 |
| RSS | 16509.326 | | 25803.823 | | 27762.947 | |
| MSE | 212.919 | | 169.878 | | 162.909 | |
| F | 77.538*** | | 75.948*** | | 34.084*** | |
| $R^2$ | .268 | | .419 | | .450 | |
| $R^2_{adj}$ | .264 | | .413 | | .437 | |

Note: $b$ = unstandardized coefficient; $b^s$ = standardized coefficient. * p < .05. ** p < .01. *** p < .001.

Recall that when discussing the authenticity of the first model in Chapter 2, I suggested academic ability as the real reason for the association of math diagnostic performance with exam scores. Should this be the case, we would expect that with academic ability held constant, diagnostic scores would no longer have any impact on exam performance. That is, if academic ability is $Z$ in Figure 3.2 and diagnostic score is $X$, this hypothesis suggests that there is no connection from $X$ to $Y$, but rather, it is the connection from $X$ to $Z$ and from $Z$ to $Y$ that causes $Y$ to vary when $X$ varies. (Instead of a curved line connecting $X$ with $Z$, we now imagine a directed arrow from $Z$ to $X$, since $Z$ is considered to cause $X$ as well as $Y$. The mathematics will be the same, as shown below in the section on omitted-variable bias.) The measure of academic ability I choose in this case is college GPA, since it reflects the student's performance across all classes taken prior to the current semester and is therefore a proxy for academic ability.

The first model shows that exam performance is, on average, 2.749 points higher for each point higher that a student scores on the diagnostic. Model 2, with college GPA added, shows that this effect is reduced somewhat but is still significant. (Whether this reduction itself is significant is assessed below.) Net of academic ability, exam performance is still, on average, 2.275 points higher for each point higher a student scores on the diagnostic. It appears that academic ability does not explain all of the association of diagnostic scores with exam scores, contrary to the hypothesis. College GPA also has a substantial effect on exam performance. Holding the diagnostic score constant, students who are a unit higher in GPA are estimated to be, on average, about 13.5 points higher on the exam. The model with two predictors explains nearly 42% of the variance in exam scores ($R^2 = .419$). Adding college GPA apparently enhances the proportion of explained variation by .419 − .268 = .151. This increment to $R^2$ resulting from the addition of college GPA is referred to as the squared semipartial correlation coefficient between exam performance and college GPA, controlling for diagnostic score. The semipartial correlation coefficient between college GPA and exam performance, controlling for diagnostic score, is the square root of this quantity, or .389. Although the increment to $R^2$ is a meaningful quantity, the semipartial correlation coefficient is not particularly useful. A more useful correlation coefficient that takes into account other model predictors is the partial correlation coefficient, explained below. Our estimate of $\sigma^2$ for model 2 is MSE, which is 169.878.
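Part (a) of the sketch below reproduces the arithmetic above from the rounded $R^2$ values in Table 3.1. Part (b) uses simulated data (an assumption, since the exam data are not reproduced here) to illustrate why the increment to $R^2$ is a squared semipartial correlation: it equals the squared correlation between $Y$ and the part of the added predictor that is not linearly explained by the predictor already in the model.

```python
import math
import numpy as np

# (a) Arithmetic from Table 3.1.
r2_model1, r2_model2 = 0.268, 0.419
sq_semipartial = r2_model2 - r2_model1      # increment to R^2 from adding GPA
print(round(sq_semipartial, 3), round(math.sqrt(sq_semipartial), 3))   # 0.151 0.389

# (b) Simulated check with assumed data.
def r_squared(X, y):
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ b
    return 1 - resid @ resid / np.sum((y - y.mean()) ** 2)

rng = np.random.default_rng(5)
n = 500
x1 = rng.normal(size=n)
x2 = 0.6 * x1 + rng.normal(size=n)          # x2 correlated with x1
y = 1 + 2 * x1 + 1.5 * x2 + rng.normal(scale=2, size=n)
A = np.column_stack([np.ones(n), x1])

increment = r_squared(np.column_stack([A, x2]), y) - r_squared(A, y)
g, *_ = np.linalg.lstsq(A, x2, rcond=None)
x2_resid = x2 - A @ g                       # x2 with x1 partialled out
semipartial = np.corrcoef(y, x2_resid)[0, 1]
print(np.isclose(semipartial ** 2, increment))   # True
```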

The third model adds the last three predictors. This model explains 45% of the variance in exam scores. Of the three added predictors, two are significant: attitude toward statistics and class hours in the current semester. Each unit increase in attitude is worth a little over a third of a point (.376) increase in exam performance, on average. Each additional hour of classes taken during the semester is worth about eight-tenths of an additional point on the exam, on average. This last finding is somewhat counterintuitive, in that those with a greater class burden have less time to devote to any one class. Perhaps these students are especially motivated to succeed, or perhaps these students have few other obligations, such as jobs or families, which allows them to devote more time to studies. The intercept in all three models is clearly uninterpretable, since the only predictor that can take on a value of zero is number of previous math courses. The model might be useful for forecasting exam scores for prospective students, since its discriminatory power is moderately strong. Suppose that a prospective student scores 43 on the diagnostic, has a 3.2 GPA, has a 12 on attitude toward statistics, is taking 15 hours in the current semester, and has had one previous math course. Then his or her predicted score on the first exam would be

$$\hat{y} = -65.199 + 2.052(43) + 13.659(3.2) + .376(12) + .819(15) + 1.58(1) = 85.1228.$$
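The forecast above is just the linear predictor evaluated at one covariate pattern. The sketch below reproduces it from the model 3 coefficients in Table 3.1; the dictionary keys are hypothetical labels, not variable names from the author's dataset.

```python
# Model 3 coefficients from Table 3.1 (intercept first); keys are illustrative labels.
coefficients = {
    "intercept": -65.199,
    "math_diagnostic": 2.052,
    "college_gpa": 13.659,
    "attitude_toward_stats": 0.376,
    "class_hours": 0.819,
    "prev_math_courses": 1.58,
}
# Covariate pattern of the prospective student described in the text (X_0 = 1).
student = {
    "intercept": 1,
    "math_diagnostic": 43,
    "college_gpa": 3.2,
    "attitude_toward_stats": 12,
    "class_hours": 15,
    "prev_math_courses": 1,
}

y_hat = sum(coefficients[name] * student[name] for name in coefficients)
print(round(y_hat, 4))   # 85.1228
```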

Discriminatory Power, Revisited. Although $R^2$ is used to tap discriminatory power, it is an upwardly biased estimator of $P^2$. This is evident in the fact that (Stevens, 1986)

$$E(R^2 \mid P^2 = 0) = \frac{K}{n - 1}.$$

That is, even if $P^2$ equals zero in the population, a regression model with, say, 49 predictors and 50 cases will show an $R^2$ of 1.0, regardless of the real utility of the explanatory variables in explaining $Y$. A better estimator of $P^2$ is the adjusted $R^2$, whose formula, as given in Chapter 2, is

$$R^2_{adj} = 1 - \frac{\hat{\sigma}^2}{s_y^2}.$$

$R^2_{adj}$ is typically smaller in magnitude than $R^2$, adjusting for the latter's tendency to “overshoot” $P^2$. For model 3 in Table 3.1, for example, $R^2$ is .45 whereas $R^2_{adj}$ is .437.
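The sketch below, which uses simulated data, illustrates both claims. With $P^2 = 0$ by construction, 10 noise regressors, and 50 cases (assumed settings), the average $R^2$ over many replications should land near $K/(n - 1) = 10/49 \approx .204$, while the average adjusted $R^2$ should be near zero. The last lines recompute $R^2_{adj}$ for model 3 of Table 3.1 from its rounded $R^2$, using the algebraically equivalent form $1 - (1 - R^2)(n - 1)/(n - K - 1)$.

```python
import numpy as np

def r2_and_adjusted(X, y):
    """R^2 = 1 - SSE/TSS and adjusted R^2 = 1 - sigma-hat^2 / s_y^2 (X includes X_0 = 1)."""
    n, p = X.shape                                   # p = K + 1
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    sse = np.sum((y - X @ b) ** 2)
    tss = np.sum((y - y.mean()) ** 2)
    return 1 - sse / tss, 1 - (sse / (n - p)) / (tss / (n - 1))

# Simulation under P^2 = 0: y is pure noise, unrelated to all K regressors.
rng = np.random.default_rng(6)
n, K, reps = 50, 10, 4000
results = np.array([
    r2_and_adjusted(np.column_stack([np.ones(n), rng.normal(size=(n, K))]),
                    rng.normal(size=n))
    for _ in range(reps)
])
mean_r2, mean_adj = results.mean(axis=0)
print(round(mean_r2, 3), round(K / (n - 1), 3))   # simulated mean R^2 vs K/(n - 1)
print(round(mean_adj, 3))                          # close to zero

# Table 3.1, model 3: n = 214, K = 5, R^2 = .450.
r2_m3, n_m3, k_m3 = 0.450, 214, 5
adj_m3 = 1 - (1 - r2_m3) * (n_m3 - 1) / (n_m3 - k_m3 - 1)
print(round(adj_m3, 3))                            # 0.437
```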

Additionally, $R^2$ has the rather unpleasant property that it can never decrease when variables are added to a model, no matter how useless they are in explaining $Y$. This could entice one to add ever more variables to a model in order to increase its discriminatory power. The drawback to this strategy is that we end up with a model that maximizes $R^2$ in a given sample but has little replicability across samples.

Another advantage of $R^2_{adj}$ is that it reflects whether or not “junk” is being added to a regression model. Rewriting $R^2_{adj}$ as

$$R^2_{adj} = 1 - \frac{\mathrm{SSE}/(n - K - 1)}{\mathrm{TSS}/(n - 1)} = 1 - \frac{n - 1}{n - K - 1} \cdot \frac{\mathrm{SSE}}{\mathrm{TSS}},$$

we see that as $K$ increases (that is, as we add more and more predictors to the model), the factor $(n - 1)/(n - K - 1)$ also increases. Now TSS is constant for any given set of $Y$ values. So for $R^2_{adj}$ to increase as we add predictors, SSE must be reduced correspondingly; equivalently, $R^2_{adj}$ rises only if $\mathrm{MSE} = \mathrm{SSE}/(n - K - 1)$ falls. That is, each predictor must be removing systematic variation from the error term in order for us to experience an increase in $R^2_{adj}$. Hence, $R^2_{adj}$ is a more effective barometer for gauging whether an additional variable should be entered into a model than is $R^2$.
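To see the contrast between $R^2$ and $R^2_{adj}$, the sketch below (simulated data in which only one regressor matters; all settings are assumptions) adds pure-noise predictors one at a time. $R^2$ never falls, whereas $R^2_{adj}$ rises only when MSE falls, as the algebra above implies.

```python
import numpy as np

def fit_stats(X, y):
    """Return (R^2, adjusted R^2, MSE) for an OLS fit; X includes X_0 = 1."""
    n, p = X.shape
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    sse = np.sum((y - X @ b) ** 2)
    tss = np.sum((y - y.mean()) ** 2)
    mse = sse / (n - p)
    return 1 - sse / tss, 1 - mse / (tss / (n - 1)), mse

rng = np.random.default_rng(7)
n = 60
x = rng.normal(size=n)
y = 3 + 2 * x + rng.normal(size=n)          # only x matters (assumed model)
X = np.column_stack([np.ones(n), x])

history = [fit_stats(X, y)]
for _ in range(5):
    X = np.column_stack([X, rng.normal(size=n)])   # add one pure-noise predictor
    history.append(fit_stats(X, y))

for K, (r2, adj, mse) in enumerate(history, start=1):
    print(f"K={K}: R2={r2:.4f}  adjR2={adj:.4f}  MSE={mse:.4f}")
```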

Standardized Coefficients and Elasticities. Frequently, we wish to gauge the relative importance of explanatory variables in a given model. The unstandardized coefficients, that is, the $b_k$'s, are not suited to this purpose, since their magnitude depends on the metrics of both $X_k$ and $Y$. A better choice is the standardized partial regression coefficient, denoted $b^s_k$ in this volume. The formula for $b^s_k$ is

$$b^s_k = b_k \frac{s_{x_k}}{s_y}.$$

That is, the standardized coefficient is equal to the unstandardized coefficient times the ratio of the standard deviation of $X_k$ to the standard deviation of $Y$. This standardization removes the dependence of $b_k$ on the units of measurement of $X_k$ and $Y$. Its interpretation, however, is cumbersome: It represents the estimated standard-deviation difference in $Y$ expected for a standard-deviation increase in $X_k$, net of other model predictors. Why? Well, if $b_k$ is the change in the mean of $Y$ for a unit increase in $X_k$, then $b_k s_{x_k}$ is the change for a 1-standard-deviation increase in $X_k$. Dividing this by $s_y$ gives us the change in the mean of $Y$ expressed in standard deviations of $Y$, which is, of course, $b^s_k$. Typically, we are not interested in interpreting $b^s_k$ but rather in comparing the magnitudes of the $b^s_k$'s. For example, in model 2 the $b_k$'s suggest that college GPA has a substantially stronger effect on exam performance than that of math diagnostic score. However, the $b^s_k$'s paint a different picture, suggesting that math diagnostic score has a stronger impact than college GPA. In the last model, on the other hand, the picture changes again. In the presence of the other model predictors, college GPA has the strongest effect on exam performance, followed by math diagnostic score, attitude toward statistics, class hours in the current semester, and number of previous math courses.
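The following sketch checks the formula for $b^s_k$ on simulated data with two predictors measured in very different units (assumed values). Computing $b_k s_{x_k}/s_y$ gives the same numbers as regressing the z-scored $Y$ on the z-scored $X$'s. In this setup the raw slope is larger for $x_2$ while the standardized coefficient is larger for $x_1$, a reversal of the kind seen in model 2 of Table 3.1.

```python
import numpy as np

def zscore(v):
    """Center and scale by the sample standard deviation (ddof = 1)."""
    return (v - v.mean()) / v.std(ddof=1)

rng = np.random.default_rng(8)
n = 400
x1 = rng.normal(50, 12, n)     # predictor in "large" units (assumed)
x2 = rng.normal(3, 0.5, n)     # predictor in "small" units (assumed)
y = 5 + 0.4 * x1 + 6.0 * x2 + rng.normal(0, 5, n)

X = np.column_stack([np.ones(n), x1, x2])
b, *_ = np.linalg.lstsq(X, y, rcond=None)

# b^s_k = b_k * s_xk / s_y
sd_x = np.array([x1.std(ddof=1), x2.std(ddof=1)])
b_std = b[1:] * sd_x / y.std(ddof=1)

# Same values from regressing z-scored y on z-scored X's (all centered, so no intercept).
Z = np.column_stack([zscore(x1), zscore(x2)])
b_z, *_ = np.linalg.lstsq(Z, zscore(y), rcond=None)
print(b[1:].round(3), b_std.round(3), b_z.round(3))
```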

Although the $b^s_k$'s are useful for gauging the relative importance of predictors in a given model, they have drawbacks with respect to other model comparisons. Because they are a function of the sample standard deviations of the predictors and of the response, they should not be used to compare coefficients across samples. For example, they should not be used to compare the effects of predictors in, say, two different populations, based on samples from each population. The reason for this is that the effect of a given predictor, as assessed by the unstandardized coefficient, might be the same in each population. But the standard deviations of the predictor and/or the response might be different in each population, resulting in different standardized coefficients. The same reasoning suggests that we should not use the $b^s_k$'s to compare coefficients in different samples from the same population, say, samples taken in different time periods. In these cases, the $b_k$'s are preferable for making comparisons.

A unitless measure that can be used for comparing the relative importance of regressor effects both within and across samples is the variable's elasticity, denoted $E_k$ for the $k$th variable (Pindyck and Rubinfeld, 1981). This statistic is less susceptible to sampling variability than is the standardized coefficient. For the $k$th predictor, the population elasticity is calculated as

$$E_k = \beta_k \frac{\bar{x}_k}{\bar{y}}.$$


The elasticity is the percentage change in $Y$ that could be expected from a 1% increase in $X_k$ (Hanushek and Jackson, 1977). To see how this interpretation arises, consider the change in the mean of $Y$ for a 1% increase in $X_k$, holding the other $X$'s constant:

$$\begin{aligned}
E(Y \mid x_k + .01x_k, x_{-k}) - E(Y \mid x_k, x_{-k})
&= \beta_0 + \beta_1 x_1 + \cdots + \beta_k (x_k + .01x_k) + \cdots + \beta_K x_K \\
&\quad - (\beta_0 + \beta_1 x_1 + \cdots + \beta_k x_k + \cdots + \beta_K x_K) \\
&= .01\beta_k x_k.
\end{aligned}$$

Dividing by $Y$ gives us $.01\beta_k x_k / Y$, which is the proportionate change in $E(Y)$, expressed as a proportion of $Y$, for a 1% increase in $X_k$. Finally, multiplying by 100 results in $\beta_k (x_k / Y)$, which is then the percent change in $E(Y)$ resulting from a 1% increase in $X_k$. This change depends on the levels of both $X_k$ and $Y$, but it is customary to evaluate it at the means of both variables. For model 3 in Table 3.1, the elasticities are 1.091 for the diagnostic score, .549 for college GPA, .024 for attitude toward statistics, .158 for class hours, and .026 for the number of previous math courses. According to the elasticities, math diagnostic score clearly has the strongest impact: each 1% increase in the diagnostic score is expected to increase exam performance by 1.09%.
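The sketch below computes sample elasticities, $b_k \bar{x}_k / \bar{y}$, on simulated data (the exam data are not reproduced here, so the numbers will not match the ones quoted above; all parameter values are assumptions). Because an OLS fit with an intercept passes through the point of means, the fitted value at the means equals $\bar{y}$, and a 1% increase in $x_k$ there changes the fitted mean by exactly $E_k$ percent.

```python
import numpy as np

rng = np.random.default_rng(9)
n = 300
x1 = rng.normal(40, 6, n)       # hypothetical score-like predictor
x2 = rng.normal(3.0, 0.4, n)    # hypothetical GPA-like predictor
y = -60 + 2.0 * x1 + 14.0 * x2 + rng.normal(0, 12, n)

X = np.column_stack([np.ones(n), x1, x2])
b, *_ = np.linalg.lstsq(X, y, rcond=None)
means = X.mean(axis=0)                    # [1, mean of x1, mean of x2]
y_bar = y.mean()

elasticity = b[1:] * means[1:] / y_bar    # E_k = b_k * xbar_k / ybar
print(elasticity.round(3))

# At the means the fitted value equals ybar; raise each x_k by 1% there.
base = means @ b
pct_changes = []
for k in (1, 2):
    bumped = means.copy()
    bumped[k] *= 1.01
    pct_changes.append(100 * (bumped @ b - base) / base)
print(np.round(pct_changes, 3))           # matches the elasticities
```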

Inferences in MULR

Several inferential tests are available to test different types of hypotheses about variable effects in MULR. The first test we might want to consider is a test for the model as a whole. Here we ask the question: Is the model of any utility in accounting for variation in $Y$? We can think of the null hypothesis as $H_0: P^2 = 0$, and the research hypothesis as $H_1: P^2 > 0$. That is, if the model is of any utility in explaining $Y$, there is some nonzero proportion of variance in $Y$ in the population that is explained by the regression. Another way of stating these hypotheses is as follows: $H_0: \beta_1 = \beta_2 = \cdots = \beta_K = 0$ versus $H_1$: at least one $\beta_k \neq 0$. Actually, this is a narrow representation of the hypotheses. A more global expression of the hypotheses is $H_0$: all possible linear combinations of the $\beta_k$'s $= 0$ versus $H_1$: at least one linear combination of the $\beta_k$'s $\neq 0$ (Graybill, 1976). The connection between these two (latter) statements of $H_0$ and $H_1$ is that $\beta_1, \beta_2, \ldots, \beta_K$ each represents a linear combination, or weighted sum, of the $\beta_k$'s. To see that, say, $\beta_1$ is a weighted sum of the $\beta_k$'s, we simply write $\beta_1$ as $1(\beta_1) + 0(\beta_2) + \cdots + 0(\beta_K)$. Here it is evident that the first weight is 1 and all the rest of the weights equal zero. The F statistic, which is used to test the null hypothesis in all three cases here, is actually a test of the third null hypothesis. The reason for highlighting this is that occasionally, the null hypothesis will be rejected and none of the individual coefficients turns out to be significant. One reason for this is that it is some other linear combination of the coefficients that is nonzero, but perhaps not a combination that makes any intuitive sense. (Another reason for this phenomenon is that multicollinearity is present among the $X$'s; multicollinearity is considered below.)


At any rate, the test statistic for all of these incarnations of $H_0$ is the F statistic, where

$$F = \frac{\mathrm{RSS}/K}{\mathrm{SSE}/(n - K - 1)} = \frac{\mathrm{MSR}}{\mathrm{MSE}}$$

and MSR denotes mean-squared regression, or the regression sum of squares divided by its degrees of freedom, $K$. Under the null hypothesis (that is, if the null hypothesis is true), this statistic has the F distribution with $K$ and $n - K - 1$ degrees of freedom.

Table 3.1 displays RSS, MSE, and F for all three models. All F tests are highly

significant, suggesting that each model is of some utility in predicting exam scores.
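The F statistics in Table 3.1 can be reproduced from the reported RSS and MSE values alone, as the sketch below shows; it also recovers each $R^2$ by rebuilding TSS as RSS + SSE. P-values are not computed here; if SciPy is available, `scipy.stats.f.sf(F, K, n - K - 1)` would supply them.

```python
n = 214
# (K, RSS = regression sum of squares, MSE) for each model in Table 3.1.
models = {
    "model 1": (1, 16509.326, 212.919),
    "model 2": (2, 25803.823, 169.878),
    "model 3": (5, 27762.947, 162.909),
}
results = {}
for name, (K, rss, mse) in models.items():
    df_error = n - K - 1
    F = (rss / K) / mse                 # MSR / MSE
    sse = mse * df_error
    r2 = rss / (rss + sse)              # TSS = RSS + SSE
    results[name] = (F, r2)
    print(f"{name}: F = {F:.3f} on ({K}, {df_error}) df, R^2 = {r2:.3f}")
```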

If the F test is significant, it is then important to determine which $\beta_k$'s are not equal to zero. The individual test for any given coefficient is a t test, where

$$t = \frac{b_k}{\hat{\sigma}_{b_k}}$$

has the t distribution with $n - K - 1$ degrees of freedom under the null hypothesis that $\beta_k = 0$. All coefficients in all models, with the exception of the effect of number of previous math courses in model 3, are significant. As in simple linear regression, we may prefer to form confidence intervals for the regression coefficients. A 95% confidence interval for $\beta_k$ is $b_k \pm t_{(.025,\, n-K-1)}\, \hat{\sigma}_{b_k}$.
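A sketch of these t tests and intervals follows. It assumes the standard matrix result (developed in Chapter 6) that the estimated variances of the $b_k$'s are the diagonal elements of $\hat{\sigma}^2 (X^{T}X)^{-1}$. The data are simulated with assumed parameter values, and the critical value 1.971 is an approximation to $t_{(.025, 211)}$ passed in by hand; `scipy.stats.t.ppf(0.975, df)` could supply it instead if SciPy is installed.

```python
import numpy as np

def coef_table(X, y, t_crit):
    """OLS estimates, standard errors, t ratios, and b_k +/- t_crit * se intervals.

    X includes the column X_0 = 1; t_crit is the upper .025 point of t with
    n - K - 1 degrees of freedom, supplied by the caller.
    """
    n, p = X.shape                              # p = K + 1
    XtX_inv = np.linalg.inv(X.T @ X)
    b = XtX_inv @ X.T @ y
    resid = y - X @ b
    mse = resid @ resid / (n - p)               # sigma-hat^2
    se = np.sqrt(mse * np.diag(XtX_inv))        # sigma-hat_{b_k}
    t = b / se
    ci = np.column_stack([b - t_crit * se, b + t_crit * se])
    return b, se, t, ci

rng = np.random.default_rng(10)
n = 214
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
y = X @ np.array([5.0, 1.0, 0.0]) + rng.normal(size=n)   # true beta_2 = 0 (assumed)

b, se, t, ci = coef_table(X, y, t_crit=1.971)   # approx. t(.025, 211)
for k in range(3):
    print(k, round(b[k], 3), round(se[k], 3), round(t[k], 2), ci[k].round(3))
```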

Nested F. Often, it is of interest to test whether a nested model is significantly different from its parent model. A model, B, is nested inside a parent model, A, if the parameters of B can be generated by placing constraints on the parameters in A. The most common constraint is to set one or more parameters in A to zero. For example, model 2 is nested inside model 3 because the parameters in model 2 can be generated from those in model 3 by setting the $\beta$'s for attitude toward statistics, class hours in the current semester, and number of previous math courses, in model 3, all to zero. We can therefore test whether a significant loss in fit is experienced when setting these parameters to zero, or, alternatively, whether a significant improvement in fit is experienced when adding these three parameters. In reality, we are testing $H_0: \beta_3 = \beta_4 = \beta_5 = 0$ versus $H_1$: at least one of $\beta_3, \beta_4, \beta_5 \neq 0$. That is, the test is a test of the validity of the constraints in $H_0$. In general form, the nested F-test statistic is

$$F = \frac{(\mathrm{RSS}_A - \mathrm{RSS}_B)/\Delta df}{\mathrm{MSE}_A},$$

where $\Delta df$ is the number of constraints imposed on model A to derive model B, or the difference in the number of parameters estimated in each model. An alternative but equivalent form of the test statistic is

$$F = \frac{(R^2_A - R^2_B)/\Delta df}{(1 - R^2_A)/(n - K - 1)},$$


where $K$ is the total number of regressors in the parent model. In the current example, $K = 5$ and $\Delta df = 3$, since we have constrained three parameters in model 3 to zero to arrive at model 2. If $H_0$ is true (that is, the constraints are valid), this statistic has the F distribution with $\Delta df$ and $n - K - 1$ degrees of freedom. In the current example, the test statistic is

$$F = \frac{(27762.947 - 25803.823)/3}{162.909} = 4.009.$$

With 3 and 208 degrees of freedom, the attained significance level is .0083. At conventional significance levels (e.g., .05 or .01), we would reject $H_0$ and conclude that at least one of the constrained parameters is nonzero. Individual t tests suggest that two of the parameters are nonzero: the effect of attitude toward statistics and the effect of class hours in the current semester. If only one parameter is hypothesized to be zero, say $\beta_k$, the nested F is just the square of the t test for the significance of $b_k$ in the model containing that parameter estimate.
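The sketch below reproduces the nested F for model 3 versus model 2 from the Table 3.1 values and confirms that the RSS and $R^2$ forms agree (TSS is rebuilt from model 3 so that unrounded $R^2$'s can be used). Its second half uses simulated data (an assumption) to check the closing remark: with a single constraint, the nested F equals the square of the coefficient's t statistic.

```python
import numpy as np

def nested_f_rss(rss_a, rss_b, mse_a, delta_df):
    """Nested F from regression sums of squares; A is the parent model."""
    return ((rss_a - rss_b) / delta_df) / mse_a

def nested_f_r2(r2_a, r2_b, delta_df, n, K):
    """Equivalent R^2 form; K is the number of regressors in the parent model."""
    return ((r2_a - r2_b) / delta_df) / ((1 - r2_a) / (n - K - 1))

# Table 3.1: model 3 (parent A, K = 5) versus model 2 (B); n = 214.
n, K = 214, 5
rss_a, rss_b, mse_a = 27762.947, 25803.823, 162.909
F_rss = nested_f_rss(rss_a, rss_b, mse_a, delta_df=3)
tss = rss_a + mse_a * (n - K - 1)             # TSS = RSS + SSE for model 3
F_r2 = nested_f_r2(rss_a / tss, rss_b / tss, delta_df=3, n=n, K=K)
print(round(F_rss, 3), round(F_r2, 3))        # 4.009 4.009

# Simulated check: one constraint (beta_2 = 0), so the nested F should equal t^2.
def sse_and_coef(X, y):
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    r = y - X @ b
    return r @ r, b

rng = np.random.default_rng(11)
m = 150
x1, x2 = rng.normal(size=m), rng.normal(size=m)
y = 1 + x1 + 0.3 * x2 + rng.normal(size=m)
XA = np.column_stack([np.ones(m), x1, x2])     # parent model
XB = XA[:, :2]                                 # nested model without x2

sse_a, b_a = sse_and_coef(XA, y)
sse_b, _ = sse_and_coef(XB, y)
mse_a_sim = sse_a / (m - 3)
F_one = (sse_b - sse_a) / mse_a_sim            # RSS_A - RSS_B equals SSE_B - SSE_A
t_b2 = b_a[2] / np.sqrt(mse_a_sim * np.linalg.inv(XA.T @ XA)[2, 2])
print(np.isclose(F_one, t_b2 ** 2))            # True
```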

Nesting: Another Example. There are other means of constraining parameters that do not involve setting them to zero. As an example, Table 3.2 presents two different regression models for couple modernism, based on the 416 intimate couples in the couples dataset. Model 1 shows the results of regressing couple modernism on male


Table 3.2 Regression Models for Couple Modernism for 416 Intimate Couples, Showing Nesting to Test Equality of Coefficients

| Regressor | Model 1 $b$ | Model 1 $b^s$ | Model 2 $b$ | Model 2 $b^s$ |
|---|---|---|---|---|
| Intercept | 23.368*** | .000 | 23.481*** | .000 |
| Male's schooling | .110* | .139 | .160*** | .335 |
| Female's schooling | .211*** | .237 | .160*** | .335 |
| Male's church attendance | −.136* | −.147 | −.067** | −.131 |
| Female's church attendance | .020 | .021 | −.067** | −.131 |
| Male's income | −.015 | −.097 | .004 | .030 |
| Female's income | .048*** | .189 | .004 | .030 |
| RSS | 473.121 | | 348.285 | |
| MSE | 5.104 | | 5.370 | |
| F | 15.450*** | | 21.621*** | |
| $R^2$ | .185 | | .136 | |
| $R^2_{adj}$ | .173 | | .130 | |

Partial variance–covariance matrix for model 1

| | Male's schooling | Female's schooling |
|---|---|---|
| Male's schooling | .001990 | −.001004 |
| Female's schooling | −.001004 | .002340 |

Note: $b$ = unstandardized coefficient; $b^s$ = standardized coefficient. * p < .05. ** p < .01. *** p < .001.
