Yang J., Nanni L. (eds.) State of the Art in Biometrics


Fingerprint Matching using A Hybrid Shape and Orientation Descriptor

3.1.2 Similarity score

Once the $n$ iterations have been performed, the final pairs are established. From these, the shape similarity distance measure can be calculated as

$$D_{sc}(P, Q) = \frac{1}{n} \sum_{p \in P} \arg\min_{q \in Q} C(p, T(q)) + \frac{1}{m} \sum_{q \in Q} \arg\min_{p \in P} C(p, T(q)) \tag{47}$$

where $T(\cdot)$ denotes the T.P.S transformed representative of the contour point $q$. In addition, an appearance term, $D_{ac}(P, Q)$, measuring pixel intensity similarity and a bending energy term, $D_{be}(P, Q) = I_f$, can be added to the similarity score.

Afterward, the similarity measure was modified as

$$D^{*}_{sc} = D_{sc} + \beta D_{be}. \tag{48}$$
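As a minimal sketch of equations 47 and 48, the snippet below assumes the pairwise costs $C(p, T(q))$ are already available as a matrix, and reads each arg min term as the smallest cost in the corresponding row or column. The function names and example values are illustrative assumptions, not part of the original method.

```python
def shape_context_distance(cost):
    """Symmetric shape distance in the form of equation 47.

    cost[i][j] is assumed to hold C(p_i, T(q_j)) for p_i in P and q_j in Q.
    """
    n = len(cost)       # number of points in P
    m = len(cost[0])    # number of points in Q
    # Smallest cost over Q for every p, averaged over P.
    row_term = sum(min(row) for row in cost) / n
    # Smallest cost over P for every q, averaged over Q.
    col_term = sum(min(cost[i][j] for i in range(n)) for j in range(m)) / m
    return row_term + col_term


def modified_distance(cost, bending_energy, beta):
    """Equation 48: shape distance plus the weighted bending energy."""
    return shape_context_distance(cost) + beta * bending_energy


# Hypothetical 2 x 3 cost matrix, for illustration only.
cost = [[0.1, 0.8, 0.5],
        [0.7, 0.2, 0.9]]
d_sc = shape_context_distance(cost)
d_star = modified_distance(cost, bending_energy=0.4, beta=0.5)
```

Because each point simply takes its cheapest partner, several points of one set may select the same point of the other, which is the lack of a strict one-to-one mapping discussed next.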

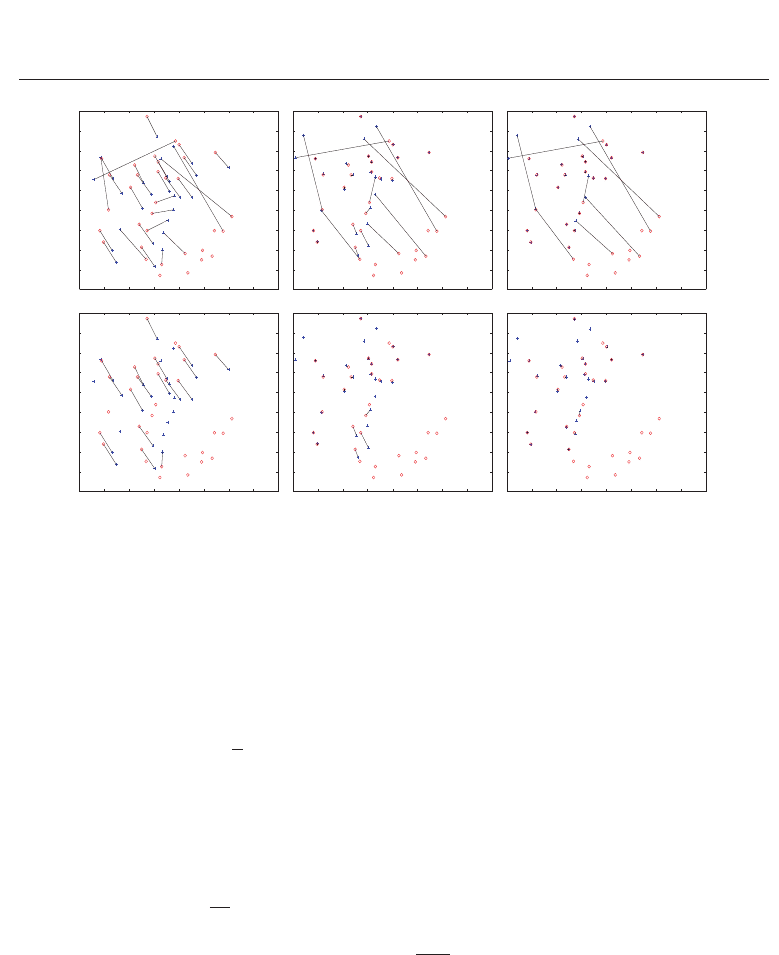

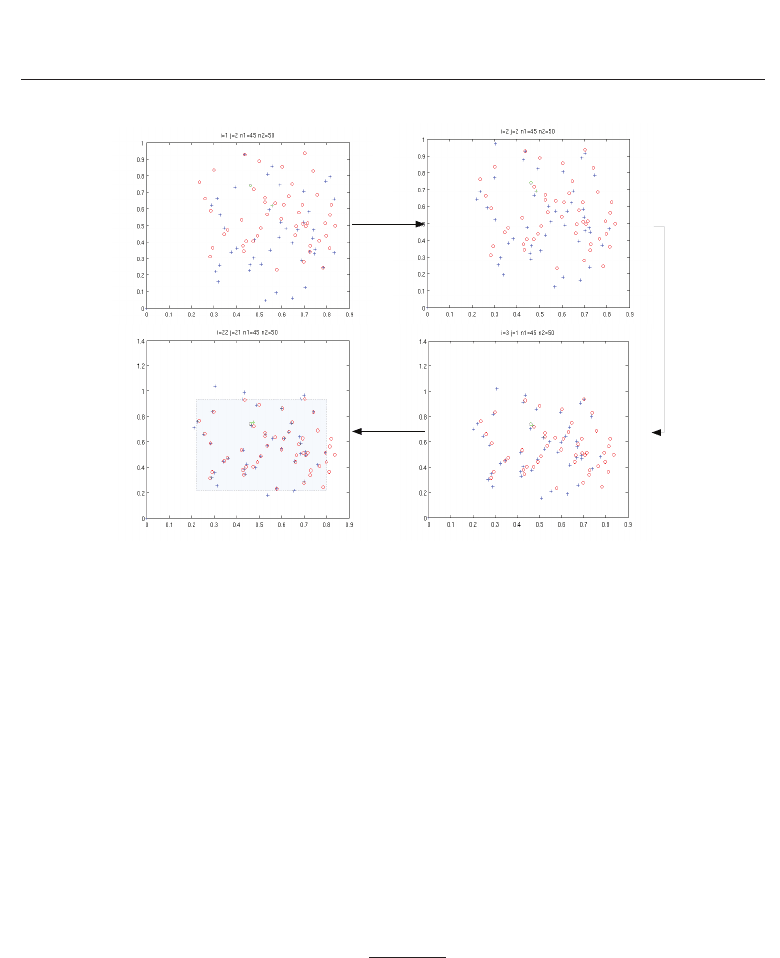

Although this measure does not enforce a strict one-to-one mapping of minutiae, experimentation showed the method to be sound, providing acceptable performance in fingerprint similarity assessment. However, the minutiae mapping produced by applying the Hungarian algorithm to the contextually based cost histograms contained some unnatural pairs, as illustrated in Figure 8 (top). This is due to the lack of a minutiae pair pruning procedure. Unnatural pairs could potentially skew the Thin Plate Spline (T.P.S) linear transform performed. In addition, such pairs generally increase the bending energy substantially, leading to invalid matching results, particularly for genuine matches.

3.2 Proposed matching method

Recently, hybrid matching algorithms have been used for fingerprint matching. Although minutiae detail alone can produce a highly discriminant set of information, combining it with level 1 features, such as orientation and frequency, and with features from other levels can increase the discriminant information, and hence the matching accuracy, as illustrated in Benhammadi et al. (2007), Youssif et al. (2007), Reisman et al. (2002), and Qi et al. (2004). This section details the proposed hybrid matching algorithm, which is based on a modified version of the enhanced shape context method in Kwan et al. (2006) and integrates the orientation-based descriptor of Tico & Kuosmanen (2003), illustrating a significant performance improvement over the enhanced shape context method of Kwan et al. (2006). The objective of the integration is two-fold: firstly, to prune outlier minutiae pairs, and secondly, to provide more information to use in similarity assessment.

As briefly described earlier in section 2.3, the orientation-based descriptor in Tico & Kuosmanen (2003) utilises the orientation image to provide local samples of orientation around minutiae in a concentric layout. Each orientation sample point is calculated as

$$\theta^{A_i}_{c,d} = \min\left(|\theta^{s_A}_{c,d} - \theta^{A}_{i}|,\ \pi - |\theta^{s_A}_{c,d} - \theta^{A}_{i}|\right) \tag{49}$$

being the $d$-th sample on the $c$-th concentric circle with distance $r_c$ away from the minutiae point $m^{A}_{i}$, where $\theta^{A}_{i}$ and $\theta^{s_A}_{c,d}$ are the minutia and sample point orientation estimations, respectively. The orientation distance of equation 22 is used to prune outlier pairs resulting from the Hungarian algorithm which produced the mapping permutation of equation 30.
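The relative orientation of equation 49 can be sketched as follows. This is a minimal illustration that assumes orientations are given in radians in the range $[0, \pi)$; the function names and sample values are hypothetical.

```python
import math


def orientation_difference(theta_a, theta_b):
    """min(|a - b|, pi - |a - b|) for two orientations in [0, pi)."""
    diff = abs(theta_a - theta_b)
    return min(diff, math.pi - diff)


def relative_samples(minutia_theta, sample_thetas):
    """Equation 49 applied to every sampled orientation around one minutia."""
    return [orientation_difference(s, minutia_theta) for s in sample_thetas]


# A minutia at 10 degrees with two sampled orientations (illustrative values).
samples = relative_samples(math.radians(10),
                           [math.radians(170), math.radians(40)])
```

The second argument of the `min` handles the wrap-around of orientations: 170 degrees and 10 degrees differ by 20 degrees rather than 160.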

[Figure 8 panel titles, in order: 28 correspondences (unwarped X); 28 correspondences (warped X); 28 correspondences (warped X); 18 correspondences (warped X); 19 correspondences (warped X); 19 correspondences (warped X).]

Fig. 8. top: The Enhanced Shape Context method producing initial minutiae pair correspondences. The next two images are for the following iterations. One should note that there are some clear unnatural pairs produced, where plate foldings are evident (i.e. pair correspondences that cross other pair correspondences). bottom: The proposed hybrid method initial minutiae pair correspondences along with following iterations of produced correspondences.

Although the shape context defines the similarity measure in equations 47-48 with no strict one-to-one correspondence, minutiae should have a stricter assessment based on the optimal mapping, since minutiae are key landmarks as opposed to randomly sampled contour points. Thus, equation 48 can be modified to only score the pairs with the optimal one-to-one mapping as

$$D^{**}_{sc}(P, Q) = \frac{1}{n} \sum_{p_i \in P \,\mid\, D_o(p_i, q_{\pi(i)}) < \delta} \left[ C(p_i, q_{\pi(i)}) + \Lambda D_o(p_i, q_{\pi(i)}) \right] + \beta D_{be} \tag{50}$$

with an additional term in the summation to account for the orientation distance, scaled by the tunable parameter $\Lambda$ with range $[0, 1]$.
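A minimal sketch of equation 50 is given below. It assumes the shape cost $C$ and orientation distance $D_o$ of every mapped pair $(p_i, q_{\pi(i)})$ have already been computed, and that the normalising count $n$ is supplied by the caller; the names and numbers are illustrative only.

```python
def pruned_similarity(pairs, n, delta, lam, beta, bending_energy):
    """Score in the form of equation 50 for a one-to-one mapping.

    pairs: (shape_cost, orientation_distance) for each mapped pair.
    n: normalising count used in equation 50 (assumed given).
    """
    total = 0.0
    for shape_cost, orient_dist in pairs:
        if orient_dist < delta:      # only pairs that survive pruning are scored
            total += shape_cost + lam * orient_dist
    return total / n + beta * bending_energy


# Three hypothetical mapped pairs; the second fails the pruning test.
pairs = [(0.2, 0.1), (0.5, 0.9), (0.3, 0.2)]
score = pruned_similarity(pairs, n=3, delta=0.5, lam=0.5,
                          beta=0.1, bending_energy=1.0)
```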

In terms of what concentric circle radii and sample configuration should be used, the method explained in Tico & Kuosmanen (2003) prescribes that the radius for circle $K_l$ be $r_l = 2l \times \tau$, where $\tau$ denotes the average ridge period, and that the circle $K_l$ should have roughly $\frac{\pi r_l}{\tau}$ sample points. As the average ridge period was recorded to be 0.463 mm in Stoney (1988), for a fingerprint image with dots per inch (dpi) equal to $R$, the previous formula for the configuration can be expressed as $K_l = \frac{172 \cdot r_l}{R}$. However, this was only used as a rough guide for the configuration in the experimentation of Tico & Kuosmanen (2003), and it likewise served as a rough guide for our implementation of the orientation-based descriptor.
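The arithmetic behind the two forms of the sample count can be checked with a short sketch. It assumes the radius $r_l$ is measured in pixels and converts the 0.463 mm ridge period to pixels at the given resolution; the function names and the 500 dpi example are assumptions made for illustration.

```python
import math

RIDGE_PERIOD_MM = 0.463   # average ridge period quoted from Stoney (1988)
MM_PER_INCH = 25.4


def ridge_period_pixels(dpi):
    """Average ridge period tau expressed in pixels."""
    return RIDGE_PERIOD_MM * dpi / MM_PER_INCH


def circle_radius(l, dpi):
    """r_l = 2 * l * tau, in pixels (assumed unit)."""
    return 2 * l * ridge_period_pixels(dpi)


def samples_from_period(l, dpi):
    """pi * r_l / tau."""
    return math.pi * circle_radius(l, dpi) / ridge_period_pixels(dpi)


def samples_from_dpi(l, dpi):
    """172 * r_l / R, the resolution-based form of the same rule."""
    return 172 * circle_radius(l, dpi) / dpi


for l in (1, 2, 3):
    print(l, round(circle_radius(l, 500), 2),
          round(samples_from_period(l, 500), 2),
          round(samples_from_dpi(l, 500), 2))
```

Since $\pi \times 25.4 / 0.463 \approx 172$, the two expressions agree closely, and both reduce to about $2\pi l$ samples on the $l$-th circle.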

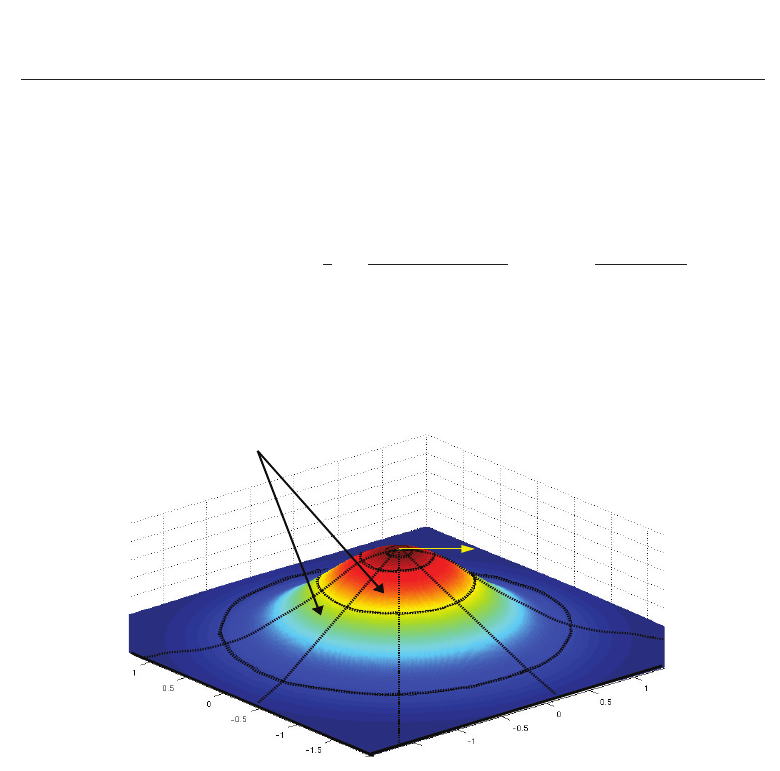

Although the log-polar space adequately provides extra importance toward neighbouring

sample points, additional emphasis on local points may be desirable, since minutiae sets are

largely incomplete and do not entirely overlap. For a given minutia, non-local bin regions may

be partially or largely outside the segmented region of interest for one fingerprint and not the

other. In addition, the non-local bins are spatially larger, and hence, have a higher probability


of containing false minutiae caused by noise. Such issues can potentially result in incorrect minutiae pairs. Thus, the enhanced shape context's log-polar histogram cost in equation 27 is adjusted to contain a tunable Gaussian weighting of histogram bin totals, depending on their distances away from the centre (reference minutia), with

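$$C^{**}(p_i, q_j) = \left(1 - \gamma C^{type}_{ij} C^{angle}_{ij}\right) \cdot \left( \frac{1}{2} \sum_{k=1}^{K} \frac{\left[h_{p_i}(k) - h_{q_j}(k)\right]^2}{h_{p_i}(k) + h_{q_j}(k)} \times \exp\left(-\frac{(r_k - r_{min})^2}{2\sigma^2}\right) \right) \tag{51}$$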

where $r_{min}$ is the outer boundary of the closest bin, $r_k$ is the current bin outer boundary distance, and $\sigma^2$ is a tunable parameter (see Figure 9).
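The weighted cost of equation 51 can be sketched for a single pair as below. The sketch assumes the two log-polar histograms and the outer radius of each bin are supplied as flat lists, and it skips bins that are empty in both histograms to avoid a zero denominator (a handling choice that equation 51 does not spell out). All names and values are illustrative.

```python
import math


def weighted_histogram_cost(h_p, h_q, bin_radius, r_min, sigma,
                            gamma, c_type, c_angle):
    """Cost in the form of equation 51 for one pair (p_i, q_j).

    h_p, h_q: histogram counts, one entry per bin k.
    bin_radius[k]: outer boundary r_k of the ring containing bin k.
    """
    chi2 = 0.0
    for hp, hq, r_k in zip(h_p, h_q, bin_radius):
        if hp + hq == 0:
            continue  # bin empty in both histograms: no contribution (assumption)
        # Gaussian weight: bins further from the reference minutia count less.
        weight = math.exp(-((r_k - r_min) ** 2) / (2 * sigma ** 2))
        chi2 += weight * (hp - hq) ** 2 / (hp + hq)
    return (1 - gamma * c_type * c_angle) * 0.5 * chi2


# Four bins on two rings (hypothetical counts and radii).
c = weighted_histogram_cost([2, 0, 1, 3], [1, 0, 1, 0],
                            [1.0, 1.0, 2.0, 2.0], r_min=1.0, sigma=1.0,
                            gamma=0.0, c_type=-1, c_angle=-1.0)
```

A smaller `sigma` concentrates the cost on the innermost ring, which is the additional emphasis on local points motivated above.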

Fig. 9. The log-polar sample space convolved with a two dimensional Gaussian kernel, so that each bin is weighted according to its distance from the origin (minutia). The histogram cost calculation uses the direction of the reference minutia as the directional offset for the bin order, making the descriptor invariant to rotation.

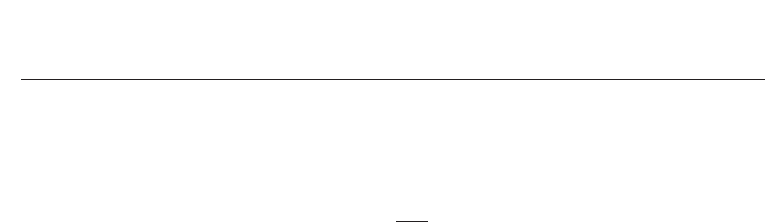

3.2.1 Adaptive greedy registration

If we re-examine the registration of the enhanced shape context method of Kwan et al. (2006) discussed in section 3.1, we can summarise the iterative process as updating minutiae pairs with the enhanced shape context descriptor, then using T.P.S to perform a global alignment with the linear transform component, and finally performing a non-linear transform to model warping caused by skin elasticity. This method does not utilise any singularities for the registration process, relying solely on the T.P.S framework. Hence, registration is largely reliant on the accuracy of the spatial distribution of minutiae relative to a given reference minutia, which is often inadequate.

The proposed method does not use the T.P.S transform to perform the initial affine transform.

Instead, a greedy method similar to Tico & Kuosmanen (2003) (of equations 20-23) is used.


Using the minutiae set representation of equations 3 and 4, the affine transform, $T$, is calculated for each possible pair $(m^{A}_{i}, m^{B}_{j})$ by using the difference between the minutiae directions as the rotation component, and the difference in x-y coordinates as the offset component (as illustrated in equations 7-9). The heuristic that requires maximising is

$$H(A, B) = \arg\max_{\psi} \frac{\sum_{i} S(m^{A}_{i}, m^{B}_{T(i)})}{n(\Phi_{\psi})} \tag{52}$$

where $S(\cdot)$ is previously defined in equation 22 as the similarity function based on the orientation-based descriptor, $\psi$ is the index pair reference for the transform $T$ (i.e. $(i, j)$ with $m^{A}_{i} = m^{B}_{T(j)}$), $\Phi_{\psi}$ is the anchor point set (with size $n(\Phi_{\psi})$) of minutiae pairs $(m^{A}_{p}, m^{B}_{q}) \in \Phi_{\psi}$ which have each other as closest points from the opposite minutiae sets with distance less than an empirically set limit, $\delta_M$, after applying the given transform. In addition, all anchor point minutiae pairs must have similar orientation, hence meeting the constraint

$$\min\left(|\theta^{A}_{i} - \theta^{B}_{j}|,\ \pi - |\theta^{A}_{i} - \theta^{B}_{j}|\right) < \delta_{\theta} \tag{53}$$

(see Figure 5 left). From the given definition, we can easily verify that $(i, j) \in \Phi_{\psi}$.
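The candidate transform and the anchor point set described above can be sketched as follows. This is an illustration under stated assumptions rather than the chapter's implementation: minutiae are `(x, y, theta)` tuples with orientations taken modulo $\pi$, the transform is a pure rotation plus translation derived from one reference pair, and closeness is measured with the Euclidean distance. The names `align` and `anchor_set` are hypothetical.

```python
import math


def align(minutiae_b, ref_a, ref_b):
    """Move set B rigidly so that ref_b lands on ref_a (position and direction)."""
    dtheta = ref_a[2] - ref_b[2]
    cos_t, sin_t = math.cos(dtheta), math.sin(dtheta)
    moved = []
    for x, y, theta in minutiae_b:
        dx, dy = x - ref_b[0], y - ref_b[1]
        moved.append((ref_a[0] + cos_t * dx - sin_t * dy,
                      ref_a[1] + sin_t * dx + cos_t * dy,
                      (theta + dtheta) % math.pi))
    return moved


def orientation_difference(a, b):
    diff = abs(a - b)
    return min(diff, math.pi - diff)


def anchor_set(set_a, moved_b, delta_m, delta_theta):
    """Mutual nearest neighbours closer than delta_m with similar orientation."""
    def dist(p, q):
        return math.hypot(p[0] - q[0], p[1] - q[1])

    nearest_in_b = [min(range(len(moved_b)), key=lambda j: dist(p, moved_b[j]))
                    for p in set_a]
    nearest_in_a = [min(range(len(set_a)), key=lambda i: dist(set_a[i], q))
                    for q in moved_b]
    anchors = []
    for i, j in enumerate(nearest_in_b):
        if nearest_in_a[j] != i:
            continue  # not each other's closest point
        if dist(set_a[i], moved_b[j]) >= delta_m:
            continue  # too far apart after the transform
        if orientation_difference(set_a[i][2], moved_b[j][2]) >= delta_theta:
            continue  # orientation constraint of equation 53 violated
        anchors.append((i, j))
    return anchors


set_a = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.5), (0.0, 10.0, 1.0)]
# Synthetic second impression: A rotated by 0.3 rad and shifted to (5, 5).
set_b = align(set_a, (5.0, 5.0, 0.3), set_a[0])
moved_b = align(set_b, set_a[0], set_b[0])
anchors = anchor_set(set_a, moved_b, delta_m=1.0, delta_theta=0.2)
```

In this synthetic case the reference pair itself is part of the anchor set, matching the observation that $(i, j) \in \Phi_{\psi}$.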

If the primary core point exists in both the test and template feature sets, and core point detection is highly accurate for the given test dataset, then we have good reason to ignore affine transforms that place the core points more than a fixed distance, $\delta_D$, away from each other. Additional pruning can be achieved by only allowing transforms where the reference minutiae pair has an orientation-based descriptor similarity score that meets

$$S(p_i, q_{\pi(i)}) < \delta_S \tag{54}$$

where $\delta_S$ is empirically set. Such restrictions will not only improve performance, but also make the registration process much more accurate, since we are not just blindly maximising the heuristic of equation 52.

Many registration algorithms use singularities, texture, and minutiae pair information as tools for finding the most likely alignment. However, the overlapped region shape similarity retrieved from minutiae spatial distribution information provides an additional important criterion. After finding the bounding box (overlapping region) of a possible affine transform meeting all prior restrictions, we can then measure shape dissimilarity via the application of the shape context to all interior points $P \subseteq A$ and $Q \subseteq B$ with equation 50, giving the additional criterion that affine transforms must meet $D_{sc}(P, Q) < \delta_{sc}$ for an empirically set threshold, $\delta_{sc}$.

For a candidate transform, we have the corresponding anchor point set $\Phi_{\psi}$. We will now define two additional nearest neighbour sets of the interior points in the overlap region (as depicted in Figure 10) as

$$\Phi_{\alpha} = \left\{ i \,\middle|\, (x^{A}_{i} - x^{B}_{j})^2 + (y^{A}_{i} - y^{B}_{j})^2 < \delta_N \right\} \tag{55}$$

where $\Phi_{\alpha}$ contains all interior nearest neighbour indices from fingerprint $A$, and likewise, $\Phi_{\beta}$, containing interior nearest neighbour indices for fingerprint $B$. Thus, we have

$$\Phi_{\psi} \subseteq \left\{ (i, j) \mid i \in \Phi_{\alpha} \text{ and } j \in \Phi_{\beta} \right\}. \tag{56}$$

with $1 \leq i \leq p$ and $1 \leq j \leq q$. The affine transform method is detailed in algorithm 1.

Algorithm 1 Proposed registration algorithm (Affine component)

Require: minutiae sets $P$ and $Q$ (representing fingerprints $A$ and $B$, respectively).
$H \leftarrow 0$
$\psi \leftarrow$ NIL
$\Phi_{\psi} \leftarrow$ NIL
$Q_T \leftarrow$ NIL
for all possible minutiae pairs $(p_i, q_j)$ do
    $S(p_i, q_j) \leftarrow (1/K) \sum_{c}^{L} \sum_{d}^{K_c} \exp\left(-\frac{2 \min\left(|\theta^{p_i}_{c,d} - \theta^{q_j}_{c,d}|,\ \pi - |\theta^{p_i}_{c,d} - \theta^{q_j}_{c,d}|\right)}{\pi\mu}\right)$
    if $S(p_i, q_j) < \delta_S$ then
        continue
    end if
    {Perform transform from minutiae pair with offset and orientation parameters from minutiae x-y and θ differences}
    $Q' \leftarrow T(Q)$
    {Make sure core points are roughly around the same region, provided both cores exist}
    if BothCoresExist$(P, Q')$ and CoreDist$(P, Q') > \delta_D$ then
        continue
    end if
    {Get T.P.S cost matrix (see algorithm 2)}
    $C^{**} \leftarrow TPS_{cost}(P, Q')$
    $D_{sc} \leftarrow \frac{1}{n} \sum_{p \in P} \arg\min_{q \in Q'} C^{**}(p, q) + \frac{1}{m} \sum_{q \in Q'} \arg\min_{p \in P} C^{**}(p, q)$
    if $D_{sc} > \delta_{sc}$ then
        continue
    end if
    $\Phi^{P}_{\psi} \leftarrow \left\{(a, b) \mid \arg\min_{a \in P} (x^{A}_{a} - x^{B}_{b})^2 + (y^{A}_{a} - y^{B}_{b})^2\right\}$
    $\Phi^{Q}_{\psi} \leftarrow \left\{(a, b) \mid \arg\min_{b \in Q} (x^{A}_{a} - x^{B}_{b})^2 + (y^{A}_{a} - y^{B}_{b})^2\right\}$
    $\Phi'_{\psi} \leftarrow \left\{(a, b) \mid (a, b) \in \Phi^{P}_{\psi} \cap \Phi^{Q}_{\psi} \text{ and } (x^{A}_{a} - x^{B}_{b})^2 + (y^{A}_{a} - y^{B}_{b})^2 < \delta_M\right\}$
    $H_{test} \leftarrow \frac{\sum_{(a,b) \in \Phi'_{\psi}} S(p_a, T(q_b))}{n(\Phi'_{\psi})}$
    if $H_{test} > H$ then
        $H \leftarrow H_{test}$
        $\psi \leftarrow (i, j)$
        $\Phi_{\psi} \leftarrow \Phi'_{\psi}$
        $Q_T \leftarrow Q'$
    end if
end for
$\Phi_{\alpha} \leftarrow \left\{ i \mid (x^{A}_{i} - x^{B}_{j})^2 + (y^{A}_{i} - y^{B}_{j})^2 < \delta_N \right\}$
$\Phi_{\beta} \leftarrow \left\{ j \mid (x^{A}_{i} - x^{B}_{j})^2 + (y^{A}_{i} - y^{B}_{j})^2 < \delta_N \right\}$
return $\Phi_{\psi}, \Phi_{\alpha}, \Phi_{\beta}, Q_T$

Algorithm 2 $TPS_{cost}$: Calculate TPS cost matrix

Require: minutiae sets $P$ and $Q$ (representing fingerprints $A$ and $B$, respectively).
for all minutiae $q_j \in Q$ do
    for $k = 1$ to $K$ do
        $h_{q_j}(k) \leftarrow \#\left\{q_j \neq q_i : (q_j - q_i) \in bin(k)\right\}$
    end for
end for
for all minutiae $p_i \in P$ do
    for $k = 1$ to $K$ do
        $h_{p_i}(k) \leftarrow \#\left\{p_j \neq p_i : (p_j - p_i) \in bin(k)\right\}$
    end for
end for
for all minutiae $p_i \in P$ and $q_j \in Q$ do
    $C^{type}_{ij} \leftarrow -1$ if $type(p_i) = type(q_j)$, $0$ if $type(p_i) \neq type(q_j)$
    $C^{angle}_{ij} \leftarrow -\frac{1}{2}\left(1 + \cos(\angle_{initial-warped})\right)$
    $C^{**}(p_i, q_j) \leftarrow \left(1 - \gamma C^{type}_{ij} C^{angle}_{ij}\right) \cdot \left(\frac{1}{2} \sum_{k=1}^{K} \frac{\left[h_{p_i}(k) - h_{q_j}(k)\right]^2}{h_{p_i}(k) + h_{q_j}(k)} \times \exp\left(-\frac{(r_k - r_{min})^2}{2\sigma^2}\right)\right)$
end for
return $C^{**}$

If a candidate affine transform meets the heuristic of equation 52 with the given constraints, we can then focus on the non-affine aspect of registration. The T.P.S non-affine component adequately modeled the warping caused by skin elasticity in the previously proposed method. With this in mind, it is desirable to utilise the non-linear component.
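Before moving to the non-affine step, the nearest neighbour sets $\Phi_{\alpha}$ and $\Phi_{\beta}$ of equation 55 can be sketched as below. The sketch reads the definition as "an index belongs to the set if some point of the other fingerprint lies within $\delta_N$ after the candidate transform", which is an interpretation, and it uses the Euclidean distance; the function name and coordinates are hypothetical.

```python
import math


def neighbour_sets(points_a, points_b_moved, delta_n):
    """Indices of A and of B that have a point of the other set within delta_n."""
    phi_alpha, phi_beta = set(), set()
    for i, (xa, ya) in enumerate(points_a):
        for j, (xb, yb) in enumerate(points_b_moved):
            if math.hypot(xa - xb, ya - yb) < delta_n:
                phi_alpha.add(i)
                phi_beta.add(j)
    return sorted(phi_alpha), sorted(phi_beta)


# Interior points of A and of the transformed B (illustrative coordinates).
points_a = [(0, 0), (5, 0), (50, 50)]
points_b = [(1, 0), (100, 100), (5, 1)]
phi_alpha, phi_beta = neighbour_sets(points_a, points_b, delta_n=2)
```

Anchor pairs found with a limit $\delta_M$ no larger than $\delta_N$ necessarily draw their indices from these two sets, which is the containment stated in equation 56.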

The T.P.S has its point correspondence method modified through having anchor point correspondences remain static throughout the iterative process, thus attempting to restrict the affine transform component of T.P.S while finding new correspondences from $\Phi_{\alpha}$ on to $\Phi_{\beta}$ that do not exist in $\Phi_{\psi}$. The shape context's log-polar histogram cost is modified from equation 51 as

$$C_{\gamma}(p_i, q_j) = \gamma_d \gamma_{\theta} C^{**}(p_i, q_j) \tag{57}$$

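where $\gamma_d$ is defined as

$$\gamma_d = \begin{cases} 1 & \text{if } dist(p_i, q_j) < \delta_{max}, \\ \infty & \text{otherwise} \end{cases} \tag{58}$$

with $\delta_{max}$ set as the maximum feasible distance caused by warping after applying the candidate affine transform, and similarly, $\gamma_{\theta}$ is defined as

$$\gamma_{\theta} = \begin{cases} 1 & \text{if } \min\left(|\theta^{A}_{i} - \theta^{B}_{j}|,\ \pi - |\theta^{A}_{i} - \theta^{B}_{j}|\right) < \theta_{max}, \\ \infty & \text{otherwise} \end{cases} \tag{59}$$

with $\theta_{max}$ set as the maximum feasible orientation difference caused by orientation estimation error in the extraction process.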
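The gating of equations 57-59 amounts to making infeasible pairs infinitely expensive. A minimal sketch, with hypothetical names and values, is:

```python
import math


def gated_cost(base_cost, distance, orient_diff, delta_max, theta_max):
    """C_gamma of equation 57 for one pair.

    base_cost: C**(p_i, q_j) from equation 51.
    distance: dist(p_i, q_j) after the candidate affine transform.
    orient_diff: min(|a - b|, pi - |a - b|) of the two minutia orientations.
    """
    if distance < delta_max and orient_diff < theta_max:
        return base_cost   # gamma_d = gamma_theta = 1
    # One of the gates is infinite. Returning inf directly avoids the
    # undefined product inf * 0 when the base cost happens to be zero.
    return math.inf


feasible = gated_cost(0.3, distance=5.0, orient_diff=0.1,
                      delta_max=10.0, theta_max=0.5)
too_far = gated_cost(0.3, distance=15.0, orient_diff=0.1,
                     delta_max=10.0, theta_max=0.5)
```

With such a cost matrix, an assignment step can only pair minutiae that are plausibly the same point after warping.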


Fig. 10. An example affine transform candidate search sequence with final state and corresponding bounding box for the overlapped region.

From the new cost function, the Hungarian algorithm is then used to find one-to-one correspondences additional to those already in the anchor point set. The candidate affine transform is compared with the affine component of T.P.S,

$$A_T = \begin{bmatrix} a_{x,x} & a_{x,y} & a_{1,x} \\ a_{y,x} & a_{y,y} & a_{1,y} \\ 0 & 0 & 1 \end{bmatrix}, \tag{60}$$

to assess the accuracy of the additional correspondences found. The T.P.S affine component should not have prominent translation, rotation, and shear parameters, since the candidate transform should have already adequately dealt with the affine registration task, as the Euclidean constraints on the anchor points worked to keep the global transform rigid. In addition, the inclusion of additional natural minutiae correspondences should not significantly alter the affine registration required. Thus, the lack of prominent translation, rotation, and shear parameters for the T.P.S affine transform indicates an existing agreement between both affine transforms. Evaluation of the translation distance is given by

$$r_{affine} = a^{2}_{1,x} + a^{2}_{1,y} < r_{max}. \tag{61}$$

Using the Singular Value Decomposition (SVD) of the non-translation components of $A_T$:

$$SVD\left(\begin{bmatrix} a_{x,x} & a_{x,y} \\ a_{y,x} & a_{y,y} \end{bmatrix}\right) = U D V^{T} \tag{62}$$

where $U, V^{T} \in SO(2, \mathbb{R})$ (i.e. 2x2 dimension rotation matrices with angles $\theta_{\alpha}$ and $\theta_{\beta}$, respectively) and $D$ is a 2x2 diagonal matrix representing scaling along the rotated coordinate axes of $V^{T}$ with nonnegative diagonal elements in decreasing order, the evaluation of rotation

$$\omega_{affine} = |\theta_{\alpha} + \theta_{\beta}| < \omega_{max}, \tag{63}$$

and shear

$$\tau_{affine} = \log\left(\frac{D_{1,1}}{D_{2,2}}\right) < \tau_{max}, \tag{64}$$

is performed for empirically set values $\omega_{max}$ and $\tau_{max}$. If the above affine transform criteria of equations 61, 63, and 64 are not met, no extra minutiae pairs are produced. Unlike the previous method, this helps uphold spatial consistency by not creating unnatural pairs (see Figure 8 (bottom)). Essentially, the candidate affine transform is used as the ground truth registration over the T.P.S affine component.

A final integrity check of the validity of the additional minutiae pairs produced from the non-affine transform is the measured bending energy, previously defined in equation 45. If the non-affine transform produces a bending energy distance $D_{be} > E_{max}$, then all additional minutiae pairs are also rejected, in order to avoid unnatural warping. The non-affine transform component is detailed in algorithm 3.

Algorithm 3 Proposed registration algorithm (Non-affine component)

Require: minutiae sets $P$ and $Q_T$ (for fingerprint $A$ and transformed fingerprint $B$), anchor set $\Phi_{\psi}$, nearest neighbourhood sets $\Phi_{\alpha}$ and $\Phi_{\beta}$.
$P \leftarrow \{p_i \mid i \in \Phi_{\alpha}\}$, $Q_T \leftarrow \{q_j \mid j \in \Phi_{\beta}\}$, $\omega_{affine} \leftarrow 0$, $D_{be} \leftarrow 0$
for all minutiae $q_j \in Q_T$ do
    for $k = 1$ to $K$ do
        $h_{q_j}(k) \leftarrow \#\left\{q_j \neq q_i : (q_j - q_i) \in bin(k)\right\}$
    end for
end for
for $iter = 1$ to $n$ do
    for all minutiae $p_i \in P$ do
        for $k = 1$ to $K$ do
            $h_{p_i}(k) \leftarrow \#\left\{p_j \neq p_i : (p_j - p_i) \in bin(k)\right\}$
        end for
    end for
    for all minutiae $p_i \in \Phi_{\alpha}$ and $q_j \in \Phi_{\beta}$ do
        $C^{type}_{ij} \leftarrow -1$ if $type(p_i) = type(q_j)$, $0$ if $type(p_i) \neq type(q_j)$
        $C^{angle}_{ij} \leftarrow -\frac{1}{2}\left(1 + \cos(\angle_{initial-warped})\right)$
        $C^{**}(p_i, q_j) \leftarrow \left(1 - \gamma C^{type}_{ij} C^{angle}_{ij}\right) \cdot \left(\frac{1}{2} \sum_{k=1}^{K} \frac{\left[h_{p_i}(k) - h_{q_j}(k)\right]^2}{h_{p_i}(k) + h_{q_j}(k)} \times \exp\left(-\frac{(r_k - r_{min})^2}{2\sigma^2}\right)\right)$
        $C_{\gamma}(p_i, q_j) \leftarrow \gamma_d \gamma_{\theta} C^{**}(p_i, q_j)$
    end for
    {calculate and add minutiae pairs additional to the anchor set pairs.}
    $\Phi_{\pi} \leftarrow$ Hungarian$(C_{\gamma}, \Phi_{\alpha}, \Phi_{\beta}, fixedMap = \Phi_{\psi})$
    $f(x, y) \leftarrow$ T.P.S$(P, Q_T, \Phi_{\pi}, Regularized = true)$
    $D_{be} \leftarrow D_{be} + WKW^{T}$
    $r_{affine} \leftarrow a^{2}_{1,x} + a^{2}_{1,y}$
    $[U, D, V^{T}, \theta_{\alpha}, \theta_{\beta}] \leftarrow SVD\left(\begin{bmatrix} a_{x,x} & a_{x,y} \\ a_{y,x} & a_{y,y} \end{bmatrix}\right)$
    $\omega_{affine} \leftarrow \omega_{affine} + |\theta_{\alpha} + \theta_{\beta}|$
    $\tau_{affine} \leftarrow \log\left(\frac{D_{1,1}}{D_{2,2}}\right)$
    if $D_{be} > E_{max}$ or $r_{affine} \geq \delta_{max}$ or $\omega_{affine} \geq \theta_{max}$ or $\tau_{affine} \geq \tau_{max}$ then
        return $\Phi_{\psi}$
    end if
    for all minutiae $p_i \in P$ do
        $[x, y] \leftarrow [p_i(x), p_i(y)]$
        $[p_i(x), p_i(y)] \leftarrow [f_x(x, y), f_y(x, y)]$
    end for
end for
return $\Phi_{\pi}$
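The three agreement measures of equations 61, 63 and 64 can be sketched without a linear algebra library by using the closed-form SVD of a 2x2 matrix. This substitutes a standard closed form for a general SVD routine and assumes a positive determinant, so that both rotation factors are proper rotations; the function names and thresholds are hypothetical.

```python
import math


def affine_measures(a_xx, a_xy, a_yx, a_yy, a_1x, a_1y):
    """Return (r_affine, omega_affine, tau_affine) for the matrix of equation 60."""
    # Translation measure, following the expression as it appears in equation 61.
    r_affine = a_1x ** 2 + a_1y ** 2
    # Closed-form 2x2 SVD: split the matrix into rotation-like and stretch-like parts.
    e, f = (a_xx + a_yy) / 2, (a_xx - a_yy) / 2
    g, h = (a_yx + a_xy) / 2, (a_yx - a_xy) / 2
    rot_part, stretch_part = math.hypot(e, h), math.hypot(f, g)
    d11, d22 = rot_part + stretch_part, rot_part - stretch_part  # singular values
    if d22 <= 0:
        raise ValueError("expected a matrix with positive determinant")
    omega_affine = abs(math.atan2(h, e))   # |theta_alpha + theta_beta|
    tau_affine = math.log(d11 / d22)       # log ratio of the scalings
    return r_affine, omega_affine, tau_affine


def agrees_with_candidate(measures, r_max, omega_max, tau_max):
    """True when all three criteria are met."""
    r_affine, omega_affine, tau_affine = measures
    return r_affine < r_max and omega_affine < omega_max and tau_affine < tau_max


# A T.P.S affine part that is a 0.1 rad rotation with translation (3, 4).
rot = affine_measures(math.cos(0.1), -math.sin(0.1),
                      math.sin(0.1), math.cos(0.1), 3.0, 4.0)
# An axis-aligned stretch by a factor of two, no rotation or translation.
stretch = affine_measures(2.0, 0.0, 0.0, 1.0, 0.0, 0.0)
```

For a pure rotation the two singular values coincide and the shear measure is zero, while the stretch example has zero rotation and a shear measure of $\log 2$.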

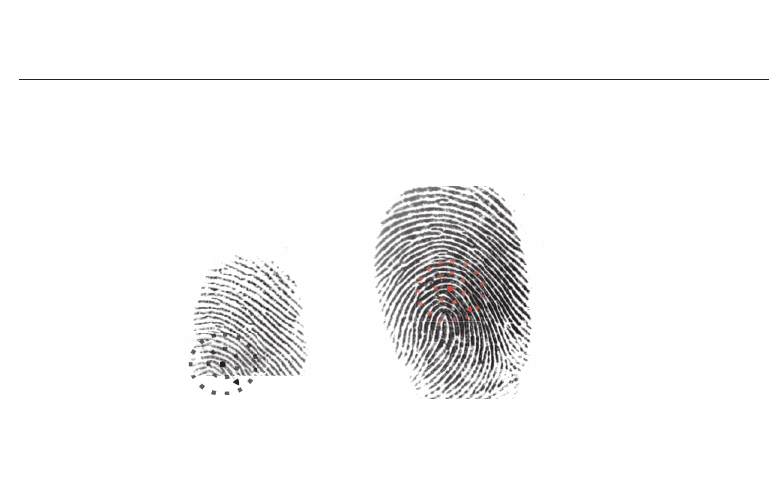

3.2.2 Matching algorithm

Once the minutiae pairs have been established, pruning is performed to remove unnatural pairings. However, if we closely analyse the orientation-based descriptor used for pruning, we can see that a fundamental flaw arises with partial fingerprint coverage, specifically for minutiae pairs near fingerprint image edges. In such a case, the typical formula for distance calculation cannot count orientation samples that lie outside the region of interest, and therefore unnecessarily reduces the orientation distance measure (see Figure 11). Moreover, regions with high noise cannot have their orientation reliably estimated because information is missing (if regions with high noise are masked), which likewise reduces the common region coverage of the orientation-based descriptor.

A proposed modification to the orientation-based descriptor is applied so that the amount of common region coverage that each descriptor has is reflected in the similarity score. This is achieved by a simple Gaussian weighting of equation 22 with

$$S^{*}(m^{A}_{i}, m^{B}_{j}) = S(m^{A}_{i}, m^{B}_{j}) \times \exp\left(-\max(0, \Delta_{cutoff} - \Delta_{g\_count}) \cdot \mu_s\right) \tag{65}$$

where $\Delta_{cutoff}$ is the cutoff point below which good sample totals are weighted, $\Delta_{g\_count}$ is the total number of good samples (i.e. where a good sample is defined to be in a coherent fingerprint region), and $\mu_s$ is a tunable parameter. However, for a more exhaustive approach, one could empirically review the estimated distribution of orientation-based similarity scores for true and false cases, with specific attention towards the effect of coverage completeness on the accuracy of the similarity measure.
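Equation 65 can be sketched in a few lines. The similarity value and parameter settings below are hypothetical and only illustrate the behaviour on either side of the cutoff.

```python
import math


def coverage_weighted_similarity(similarity, good_count, cutoff, mu_s):
    """Equation 65: damp the similarity when fewer than `cutoff` samples are good."""
    shortfall = max(0, cutoff - good_count)
    return similarity * math.exp(-shortfall * mu_s)


full = coverage_weighted_similarity(0.8, good_count=25, cutoff=20, mu_s=0.1)
partial = coverage_weighted_similarity(0.8, good_count=15, cutoff=20, mu_s=0.1)
```

A descriptor with at least `cutoff` good samples keeps its score unchanged, whereas one with five samples missing is scaled by $\exp(-0.5)$ in this example.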

Equation 65 relies on the intersection set of valid samples for each minutia, defined as

$$I(A_i, B_j) = \left\{ s \mid s \in \{L, K_c\} \text{ and } valid(A(s_x, s_y)) \right\} \cap \left\{ t \mid t \in \{L, K_c\} \text{ and } valid(B(t_x, t_y)) \right\} \tag{66}$$

where $L$ is the sample position set and $K_c$ is the concentric circle set. Thus, we can also define a variant of the function, $S^{*}(m^{A}_{i}, m^{B}_{j}, I)$, where a predefined sample index set, $I$, is given to indicate which samples are only to be used for the similarity calculation, ignoring $i \notin I$ even if corresponding orientation samples are legitimately defined for both fingerprints (note: this variant is used later for similarity scoring in the matching algorithm).
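The intersection set of equation 66 can be sketched with plain Python sets. The sketch assumes each descriptor records, for every (circle, sample) index, whether the sampled position is valid; taking the size of the intersection as the good sample count of equation 65 is one plausible reading rather than something stated explicitly. All names and data are hypothetical.

```python
def common_valid_samples(valid_a, valid_b):
    """Sample indices (c, d) that are valid in both descriptors.

    valid_a, valid_b: dicts mapping (circle, sample) -> bool.
    """
    good_a = {s for s, ok in valid_a.items() if ok}
    good_b = {t for t, ok in valid_b.items() if ok}
    return good_a & good_b


# Two descriptors with four sample positions each (illustrative masks).
valid_a = {(1, 1): True, (1, 2): True, (2, 1): False, (2, 2): True}
valid_b = {(1, 1): True, (1, 2): False, (2, 1): True, (2, 2): True}
index_set = common_valid_samples(valid_a, valid_b)
good_count = len(index_set)
```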


Fig. 11. An example where the orientation-based descriptors for corresponding minutiae in two different impressions of the same fingerprint have no coverage in a substantial portion of the orientation samples.

After the filtered minutiae pair set is produced, we can now assess the similarity of the pairs. Minutiae δ-neighbourhood structure families, where particular spatial and minutiae information of the δ closest minutiae to a reference minutia are extracted as features, have been used before in minutiae based matching algorithms (such as Chikkerur & Govindaraju (2006) and Kwon et al. (2006)) for both alignment and similarity measure (see Figure 12). The δ-neighbourhood structure proposed has the following fields:

• Distance $d_i$: the distance a neighbourhood minutia is away from the reference minutia.

• Angle $\angle_i$: the angle a neighbourhood minutia is from the reference minutia direction.

• Orientation $\theta_i$: the orientation difference between a neighbourhood minutia direction and the reference minutia direction.

• Texture $\Gamma_i$: the orientation-based descriptor sample set for a neighbourhood minutia used to measure the region orientation similarity with the reference minutia for a given sample index.

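giving us the sorted set structure

$$n_{\delta}(m^{A}_{i}) = \left\{ \{d^{A_i}_{(1)}, \angle^{A_i}_{(1)}, \theta^{A_i}_{(1)}, \{\Gamma^{A_i}_{(1)}\}\}, \ldots, \{d^{A_i}_{(\delta)}, \angle^{A_i}_{(\delta)}, \theta^{A_i}_{(\delta)}, \{\Gamma^{A_i}_{(\delta)}\}\} \right\} \tag{67}$$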

being sorted by the distance field in ascending order. The first two fields are considered as the polar co-ordinates of the neighbourhood minutiae with the reference minutia as the origin, and along with the third field, they are commonly used in local neighbourhood minutiae based structures (Kwon et al. (2006) and Jiang & Yau (2000)). Using the modified weighted orientation-based descriptor, the texture field provides additional information on how the local orientation information surrounding each minutia of the δ-neighbourhood set varies from that of the reference minutia, with the measure rated using equation 65. These structures can now be used to further assess and score the candidate minutiae pairs.
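The first three fields of the structure in equation 67 can be sketched as follows. The sketch assumes minutiae are `(x, y, direction)` tuples with directions in radians, and it omits the texture field, which would require the orientation-based descriptor samples; the function name and coordinates are hypothetical.

```python
import math


def delta_neighbourhood(reference, others, delta):
    """Distance, angle and orientation fields for the delta closest minutiae."""
    rx, ry, rtheta = reference
    entries = []
    for x, y, theta in others:
        distance = math.hypot(x - rx, y - ry)
        # Polar angle of the neighbour, measured from the reference direction.
        angle = (math.atan2(y - ry, x - rx) - rtheta) % (2 * math.pi)
        # Direction of the neighbour relative to the reference direction.
        orientation = (theta - rtheta) % (2 * math.pi)
        entries.append((distance, angle, orientation))
    entries.sort(key=lambda entry: entry[0])   # ascending distance
    return entries[:delta]


reference = (0.0, 0.0, 0.0)
others = [(3.0, 4.0, 1.0), (1.0, 0.0, 0.5), (0.0, 2.0, 2.0)]
neighbourhood = delta_neighbourhood(reference, others, delta=2)
```

Because the angle and orientation fields are taken relative to the reference direction, the structure does not change when the whole fingerprint is rotated.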

When comparing the δ-neighbourhood elements of a candidate minutiae pair, the differences for the first three fields are straightforward to compute with
